What is a Generative Adversarial Network (GAN)?

Generative Adversarial Networks (GANs) are a type of neural network architecture capable of generating new data that conforms to learned patterns. GANs can be used to generate images of human faces or other objects, to carry out text-to-image translation, to convert one type of image to another, and to enhance the resolution of images (super resolution), among other applications. Because GANs can generate entirely new data, they are at the forefront of many cutting-edge AI systems, applications, and research. Yet how exactly do GANs work? Let’s explore how GANs function and take a look at some of their primary uses.

Defining Generative Models and GANs

A GAN is an example of a generative model. Machine learning models are often divided into two broad categories: supervised and unsupervised models. Supervised learning models are typically used to discriminate between different categories of inputs, that is, to classify. In contrast, unsupervised models are typically used to summarize the distribution of the data, for example by fitting a Gaussian distribution to it. Because generative models learn the distribution of a dataset, they can draw samples from this learned distribution and generate new data.

Different generative models have different methods of generating data and calculating probability distributions. For example, the Naive Bayes model operates by estimating a probability distribution for each input feature within each class, along with the prior probability of each class. When the Naive Bayes model renders a prediction, it multiplies the class prior by the probabilities of the observed feature values under that class and picks the class with the highest result. Other non-deep learning generative models include Gaussian Mixture Models and Latent Dirichlet Allocation (LDA). Deep learning-based generative models include Restricted Boltzmann Machines (RBMs), Variational Autoencoders (VAEs), and of course, GANs.
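The Naive Bayes prediction step can be sketched in a few lines of Python. This is a minimal illustration, not a full implementation: the class names, feature names, and probability values are all hypothetical, and each feature is treated as a simple "present" event.

```python
import math

# Hypothetical toy model: class priors and per-class feature probabilities.
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"offer": 0.7, "meeting": 0.1},
    "ham": {"offer": 0.2, "meeting": 0.6},
}

def predict(features):
    """Return the most likely class and the normalized posterior per class."""
    scores = {}
    for label, prior in priors.items():
        # Naive assumption: features are independent given the class,
        # so their probabilities multiply (summed in log space for stability).
        log_score = math.log(prior)
        for feature in features:
            log_score += math.log(likelihoods[label][feature])
        scores[label] = math.exp(log_score)
    total = sum(scores.values())
    posteriors = {label: score / total for label, score in scores.items()}
    best = max(posteriors, key=posteriors.get)
    return best, posteriors

label, posteriors = predict(["offer"])
```

Because the model stores a distribution over features for each class, it could also be run in the other direction: pick a class, then sample features from that class's distribution. That is what makes it generative.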

Generative Adversarial Networks were first proposed by Ian Goodfellow and colleagues in 2014, and they were improved upon by Alec Radford and other researchers in 2015, leading to a standardized architecture for GANs. A GAN is actually two different networks joined together: a generation model and a discrimination model, also referred to as the generator and discriminator.

The GAN Architecture

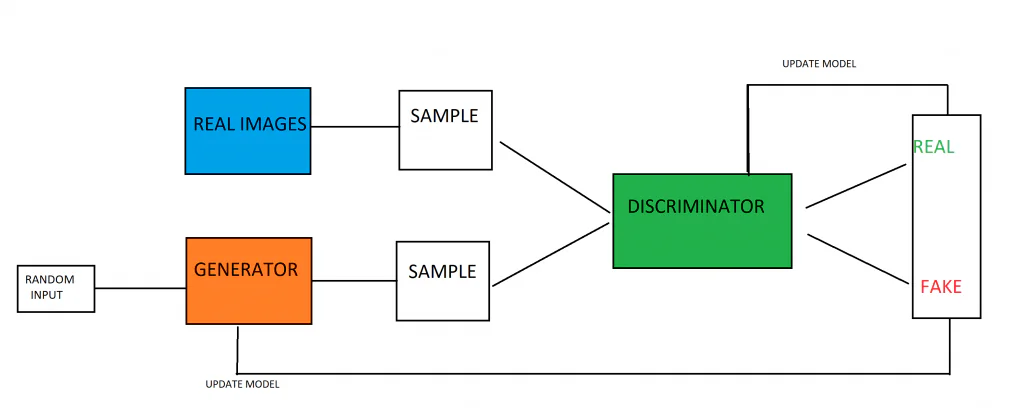

Generative Adversarial Networks are built out of a generator model and discriminator model put together. The job of the generator model is to create new examples of data, based on the patterns that the model has learned from the training data. The job of the discriminator model is to analyze images (assuming it is trained on images) and determine if the images are generated/fake or genuine.

The two models are pitted against one another, trained in a game-theoretic fashion. The generator model’s goal is to produce images that deceive its adversary, the discriminator model. Meanwhile, the discriminator model’s job is to overcome its adversary, the generator model, and catch the fake images that the generator produces. Pitting the models against each other results in an arms race where both models improve. The discriminator gets feedback about which images were genuine and which were produced by the generator, while the generator is fed information on which of its images were flagged as fake by the discriminator. Both models improve during training, with the goal of training a generation model that can produce fake data that is practically indistinguishable from genuine data.

The generator model takes a random vector as its input, typically drawn from a Gaussian distribution, and transforms it into a data sample. In other words, the vector seeds the generation. As training progresses, points in this vector space come to correspond to points in the data distribution, so the vector space becomes a compressed representation of that distribution. This compressed representation is referred to as the latent space, and its coordinates as latent variables. Once the model is trained, new points can be drawn from the latent space and given to the generation model, which uses them to generate new data that is highly similar to the training data.
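To make the idea of seeding the generator concrete, here is a minimal sketch in plain Python. It assumes a standard Gaussian latent distribution and uses a toy one-layer generator with random, untrained weights; the names `LATENT_DIM`, `OUTPUT_DIM`, `sample_latent`, and `make_generator` are hypothetical and chosen only for illustration. A real generator would be a trained deep network.

```python
import math
import random

LATENT_DIM = 4  # hypothetical size of the latent vector
OUTPUT_DIM = 6  # hypothetical size of one generated sample (e.g. 6 pixels)

def sample_latent(rng, dim=LATENT_DIM):
    """Draw a latent vector z from a standard Gaussian distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def make_generator(rng):
    """Build a toy one-layer generator with random, untrained weights."""
    weights = [[rng.gauss(0.0, 0.5) for _ in range(LATENT_DIM)]
               for _ in range(OUTPUT_DIM)]

    def generator(z):
        # Linear map followed by tanh, so every output lies in [-1, 1].
        return [math.tanh(sum(w * zi for w, zi in zip(row, z)))
                for row in weights]

    return generator

rng = random.Random(0)           # fixed seed so the sketch is repeatable
generator = make_generator(rng)
z = sample_latent(rng)           # the random vector that seeds the generation
sample = generator(z)            # one "generated" data point
```

The generator itself is deterministic: the same latent vector always maps to the same output, so all of the variety in the generated data comes from drawing different points in the latent space.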

The discriminator model is fed examples from the entire training domain, which is made up of both real and generated data examples. The real examples are contained within the training dataset, while the fake data is produced by the generative model. The process of training the discriminator model is exactly the same as basic, binary-classification model training.
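Since the discriminator is trained like a binary classifier, its loss can be sketched as ordinary binary cross-entropy over a mixed batch of real and generated examples. The scores below are hypothetical discriminator outputs, not results from a trained model, and the function name is chosen for illustration.

```python
import math

def binary_cross_entropy(predictions, labels):
    """Mean binary cross-entropy between predicted probabilities and 0/1 labels."""
    eps = 1e-12  # clamp to avoid log(0)
    total = 0.0
    for p, y in zip(predictions, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(predictions)

# Hypothetical discriminator outputs: probability that each input is real.
real_scores = [0.9, 0.8]  # scores for genuine training examples
fake_scores = [0.2, 0.1]  # scores for generator outputs

predictions = real_scores + fake_scores
labels = [1, 1, 0, 0]     # ground truth: 1 = real, 0 = generated

loss = binary_cross_entropy(predictions, labels)
uninformed_loss = binary_cross_entropy([0.5] * 4, labels)  # a coin-flip discriminator
```

A discriminator that separates the two groups well gets a lower loss than one that answers 0.5 for everything, whose loss is log 2 per example.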

GAN Training Process

Let’s look at the entire training process for a hypothetical image generation task.

To begin with, the GAN is trained using genuine, real images as part of the training dataset. These images show the discriminator model what real data looks like, so that it can learn to distinguish between generated images and real images. They also define the data distribution that the generator learns to imitate.

The generator takes in a vector of random numerical data and transforms it into an image. The generated images, alongside some genuine images from the training dataset, are fed into the discriminator model. The discriminator will render a probabilistic prediction about the nature of each image it receives, outputting a value between 0 and 1, where 1 typically indicates an authentic image and 0 a fake image.

There is a double feedback loop in play: the discriminator is fed the ground truth of the images, while the generator is given feedback on its performance by the discriminator.
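The two feedback loops can be sketched with a deliberately tiny one-dimensional GAN, written in plain Python with hand-derived gradients. Everything here is an assumption made for illustration: the generator is a linear map `a * z + b`, the discriminator is a logistic regression `sigmoid(w * x + c)`, and the generator is trained on the commonly used loss `-log D(G(z))`. Real GANs use deep networks and automatic differentiation.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def train_step(params, real_x, z, lr=0.05):
    """One simultaneous gradient step for a toy 1-D GAN.

    Generator:      G(z) = a * z + b
    Discriminator:  D(x) = sigmoid(w * x + c)
    """
    a, b, w, c = params["a"], params["b"], params["w"], params["c"]
    fake_x = a * z + b
    d_real = sigmoid(w * real_x + c)
    d_fake = sigmoid(w * fake_x + c)

    # Feedback loop 1: the discriminator sees the ground truth (real=1, fake=0).
    # Gradients of -[log D(real) + log(1 - D(fake))] with respect to w and c.
    grad_w = (d_real - 1.0) * real_x + d_fake * fake_x
    grad_c = (d_real - 1.0) + d_fake

    # Feedback loop 2: the generator only sees the discriminator's verdict.
    # Gradients of -log D(G(z)) with respect to a and b.
    grad_a = (d_fake - 1.0) * w * z
    grad_b = (d_fake - 1.0) * w

    updated = {
        "a": a - lr * grad_a,
        "b": b - lr * grad_b,
        "w": w - lr * grad_w,
        "c": c - lr * grad_c,
    }
    return updated, d_real, d_fake

start = {"a": 1.0, "b": 0.0, "w": 1.0, "c": 0.0}
updated, d_real, d_fake = train_step(start, real_x=3.0, z=0.5)
```

Note that the generator never touches the real sample directly. Its gradients depend only on the discriminator's score for the fake sample and on the discriminator's weight `w`, which is how information about the real data reaches it.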

The generative and discrimination models are playing a zero-sum game with each other. A zero-sum game is one where the gains of one side come at the cost of the other side (the payoffs of the two sides sum to zero). When the discriminator model successfully distinguishes between real and fake examples, little or no change is made to its parameters. However, large updates are made to the discriminator’s parameters when it fails to distinguish between real and fake images. The inverse is true for the generative model: it is penalized (and its parameters updated) when it fails to fool the discriminative model, but otherwise its parameters are left largely unchanged (or it is rewarded).
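The zero-sum structure can be illustrated with the minimax value function from the original GAN formulation, V = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The sketch below evaluates it on hypothetical discriminator outputs. In practice, generators are often trained on a modified loss instead, so treat this as the idealized game rather than a training recipe.

```python
import math

def value(d_real, d_fake):
    """Minimax value V = mean log D(x) + mean log(1 - D(G(z)))."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical discriminator outputs (probability that an input is real).
catches_fakes = value(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])  # D wins
gets_fooled = value(d_real=[0.9, 0.8], d_fake=[0.7, 0.8])    # G wins

# The discriminator's payoff is V and the generator's payoff is -V,
# so whatever one player gains, the other loses.
discriminator_payoff = catches_fakes
generator_payoff = -catches_fakes
```

The value is higher when the discriminator catches the fakes and lower when it is fooled, which is the sense in which one model's gain is the other's loss.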

Ideally, the generator is able to improve its performance to a point where the discriminator cannot discern between the fake and real images. This means that the discriminator will always be rendering probabilities of 50% for real and fake images alike, meaning the generated images should be indistinguishable from the genuine images. In practice, GANs typically won’t reach this point. However, the generative model doesn’t need to create perfectly similar images to still be useful for the many tasks GANs are used for.
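The 50% figure follows from a standard result in the original GAN analysis: for a fixed generator, the best possible discriminator outputs D*(x) = p_data(x) / (p_data(x) + p_g(x)), where p_data is the real data density and p_g is the generator's density. The sketch below checks this numerically, assuming for illustration that both densities are one-dimensional Gaussians with hypothetical means.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian at x."""
    coeff = 1.0 / (std * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-0.5 * ((x - mean) / std) ** 2)

def optimal_discriminator(x, real_mean, fake_mean, std=1.0):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)) for fixed densities."""
    p_real = gaussian_pdf(x, real_mean, std)
    p_fake = gaussian_pdf(x, fake_mean, std)
    return p_real / (p_real + p_fake)

# Generator distribution far from the data: the discriminator can tell.
mismatched = optimal_discriminator(x=2.0, real_mean=2.0, fake_mean=0.0)

# Generator distribution equal to the data: the best guess is a coin flip.
matched = optimal_discriminator(x=2.0, real_mean=2.0, fake_mean=2.0)
```

When the two distributions coincide, even the best possible discriminator returns one half for every input, which is the equilibrium described above.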

GAN Applications

GANs have a number of different applications, most of them revolving around the generation of images and components of images. GANs are commonly used in tasks where the required image data is missing or limited in some capacity, as a method of generating the required data. Let’s examine some of the common use cases for GANs.

Generating New Examples For Datasets

GANs can be used to generate new examples for simple image datasets. If you only have a handful of training examples and need more of them, GANs could be used to generate new training data for an image classifier, generating new training examples at different orientations and angles.

Generating Unique Human Faces

The woman in this photo doesn’t exist. The image was generated by StyleGAN. Photo: Owlsmcgee via Wikimedia Commons, Public Domain (https://commons.wikimedia.org/wiki/File:Woman_1.jpg)

When sufficiently trained, GANs can be used to generate extremely realistic images of human faces. These generated images can be used to help train face recognition systems.

Image-To-Image Translation

GANs excel at image translation. GANs can be used to colorize black and white images, translate sketches or drawings to photographic images, or convert images from day to night.

Text-To-Image Translation

Text-to-image translation is possible through the use of GANs. When trained on pairs of images and the text that describes them, a GAN can learn to create a new image from a description of the desired image.

Editing And Repairing Images

GANs can be used to edit existing photographs. They can remove elements like rain or snow from an image, and they can also be used to repair old, damaged, or corrupted images.

Super Resolution

Super resolution is the process of taking a low-resolution image and inserting more pixels into the image, improving the resolution of that image. GANs can be trained to take an image and generate a higher-resolution version of that image.