What is Computer Vision?

Computer vision algorithms are among the most transformative and powerful AI systems in use today. Computer vision systems are used in autonomous vehicles, robot navigation, facial recognition systems, and more. But what exactly are computer vision algorithms, and how do they work? To answer these questions, we’ll dive deep into the theory behind computer vision, computer vision algorithms, and applications for computer vision systems.

How Do Computer Vision Systems Work?

To fully appreciate how computer vision systems work, let’s first take a moment to discuss how humans recognize objects. The best explanation neuropsychology currently offers is a model in which the first phase of object recognition involves the brain interpreting the basic components of an object, such as its form, color, and depth. The signals that travel from the eye to the brain are analyzed to pull out the edges of an object first, and these edges are then joined into a more complex representation that completes the object’s form.

Computer vision systems work in a broadly similar way to the human visual system: they first discern the edges of an object and then join these edges together into the object’s form. The big difference is that computers interpret images as numbers, so a computer vision system needs some way to interpret the individual pixels that make up the image. Each pixel is stored as a numeric value, and by examining the difference in values between one region of pixels and another, the computer can discern edges. For instance, in an 8-bit greyscale image, the values range from black (represented by 0) to white (represented by 255). A sudden change in value between neighboring pixels indicates an edge.
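To make the idea concrete, here is a minimal sketch in Python that compares each pixel with its right-hand neighbor in a tiny 8-bit greyscale image, stored as a list of rows. The function name `detect_vertical_edges` and the threshold of 50 are illustrative assumptions; real systems use more robust operators, but the principle of flagging large jumps between neighboring values is the same.

```python
def detect_vertical_edges(image, threshold=50):
    """Flag places where a pixel differs sharply from the pixel to its right.

    image: list of rows, each a list of 8-bit greyscale values (0 = black, 255 = white).
    Returns a grid one column narrower than the input, with 1 where the absolute
    difference between horizontal neighbors exceeds `threshold` (an arbitrary choice).
    """
    edges = []
    for row in image:
        edge_row = []
        for x in range(len(row) - 1):
            diff = abs(row[x + 1] - row[x])
            edge_row.append(1 if diff > threshold else 0)
        edges.append(edge_row)
    return edges


# A 3x5 image: a dark region on the left, a bright region on the right.
image = [
    [10, 12, 11, 240, 250],
    [9, 11, 13, 245, 248],
    [12, 10, 12, 238, 252],
]

for row in detect_vertical_edges(image):
    print(row)  # [0, 0, 1, 0] for every row
```

Small fluctuations within each region stay below the threshold, so only the jump from the dark third column to the bright fourth column is marked.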

The same principle of comparing pixel values also applies to color images, where the computer compares pixel values within each of the red, green, and blue (RGB) color channels. Now that we know how a computer vision system examines pixel values to interpret an image, let’s take a look at the architecture of a computer vision system.
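The sketch below shows why comparing channels individually can matter. It assumes, for simplicity, that greyscale is the plain average of the three channels (real conversions usually weight the channels differently). Under that assumption, a pure red pixel and a pure green pixel have the same brightness, so a greyscale comparison sees no edge between them, while a per-channel comparison does. The helper names are hypothetical.

```python
def grey_average(pixel):
    """Simplified greyscale value: the unweighted mean of the (r, g, b) channels."""
    return sum(pixel) / 3


def channel_difference(p, q):
    """Largest absolute difference between two RGB pixels across the three channels."""
    return max(abs(a - b) for a, b in zip(p, q))


red = (200, 0, 0)
green = (0, 200, 0)

print(abs(grey_average(red) - grey_average(green)))  # 0.0: no edge in greyscale
print(channel_difference(red, green))                # 200: a strong edge per channel
```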

Convolutional Neural Networks (CNNs)

The primary type of AI used in computer vision tasks is the convolutional neural network (CNN). But what is a convolution, exactly?

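A convolution is a mathematical operation that combines neighboring pixel values using a small grid of weights, called a kernel or filter. If you envision a grid of pixel values, picture this smaller grid being moved over the main grid. At each position, the values underneath the kernel are multiplied by the kernel’s weights and summed, so the network only examines a handful of pixels at a time. This is often called the “sliding window” technique. Each window position is summarized as a single number, and the resulting grid of numbers, called a feature map, highlights patterns such as edges: with suitable weights, a kernel responds strongly to differences in value between neighboring pixels. In a CNN, these weights are learned during training rather than set by hand. Summarizing the image this way helps reduce its complexity and makes it easier for the network to extract patterns.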
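Here is a minimal pure-Python sketch of the sliding-window operation, assuming no padding and a stride of one. The kernel is hand-picked for illustration (it responds to dark-to-bright transitions from left to right); in a CNN, the kernel weights would be learned. Like most deep-learning libraries, the code does not flip the kernel, so strictly speaking it computes a cross-correlation, which is what CNNs conventionally call a convolution.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    The kernel is not flipped, so strictly this is cross-correlation, which is
    what CNN libraries conventionally call convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Weighted sum of the pixels currently under the window.
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[y + i][x + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hand-picked 3x3 kernel: negative weights on the left, positive on the right,
# so it responds to dark-to-bright changes from left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 4x6 greyscale image: black on the left half, white on the right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(4)]

for row in convolve2d(image, kernel):
    print(row)  # [0, 765, 765, 0] for both output rows
```

The large values in the middle columns mark where the window straddles the boundary; over flat regions the positive and negative weights cancel to zero.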

A typical convolutional neural network is divided into two sections: the convolutional section and the fully connected section. The convolutional layers are the feature extractors; their job is to analyze the pixels within the image and form representations of them that the fully connected (dense) layers can learn patterns from. The earliest convolutional layers examine the raw pixels and extract low-level features of the image, such as edges. Later convolutional layers combine these edges into more complex shapes. By the end, the network will ideally have a representation of the edges and details of the image that it can pass to the fully connected layers.
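The two-section structure can be sketched as a toy forward pass in plain Python. Everything here is an illustrative assumption: the two hand-picked 3x3 kernels stand in for learned filters, the ReLU and 2x2 max-pooling steps are common additions not discussed above, and the fully connected weights are chosen by hand so the output is easy to follow. A real network would learn all of these weights and stack many more layers.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 sliding-window weighted sum."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(len(image[0]) - kw + 1)
        ]
        for y in range(len(image) - kh + 1)
    ]


def relu(fmap):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap):
    """Downsample by keeping the largest value in each 2x2 block."""
    return [
        [
            max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
            for x in range(0, len(fmap[0]) - 1, 2)
        ]
        for y in range(0, len(fmap) - 1, 2)
    ]


def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output."""
    return [
        sum(w * v for w, v in zip(w_row, vector)) + b
        for w_row, b in zip(weights, biases)
    ]


def forward(image, kernels, weights, biases):
    # Convolutional section: each kernel produces a feature map,
    # which is rectified, pooled, and flattened into one feature vector.
    features = []
    for kernel in kernels:
        fmap = max_pool_2x2(relu(conv2d(image, kernel)))
        features.extend(v for row in fmap for v in row)
    # Fully connected section: map the features to one score per class.
    return dense(features, weights, biases)


# 6x6 image with a vertical boundary: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

kernels = [
    [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],  # responds to vertical edges
    [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],  # responds to horizontal edges
]

# Hand-picked weights: class 0 = "vertical edge", class 1 = "horizontal edge".
weights = [
    [1, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
biases = [0, 0]

print(forward(image, kernels, weights, biases))  # [12, 0]
```

Only the vertical-edge kernel produces non-zero features for this image, so the fully connected layer gives the "vertical edge" class the higher score.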

Image Annotation

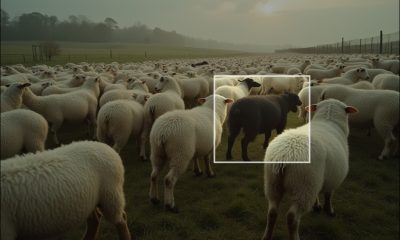

While a convolutional neural network can extract patterns from images by itself, the accuracy of a computer vision system can be greatly improved by annotating the images it learns from. Image annotation is the process of adding metadata to an image, such as labels marking which objects it contains and where they are, which helps the classifier learn to detect the important objects. Image annotation is especially important whenever computer vision systems need to be highly accurate, such as when controlling an autonomous vehicle or robot.
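In practice, annotations are usually stored as structured records kept alongside the image files. The sketch below shows one possible layout in Python, assuming pixel coordinates measured from the top-left corner and object locations given as rectangles in (x_min, y_min, x_max, y_max) form. The class names, field names, and file name are hypothetical; real annotation formats differ between tools and datasets.

```python
from dataclasses import dataclass, field


@dataclass
class BoundingBox:
    """A labeled rectangle around one object, in pixel coordinates."""
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def area(self):
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class ImageAnnotation:
    """Metadata attached to a single image (hypothetical layout)."""
    image_path: str
    width: int
    height: int
    boxes: list = field(default_factory=list)

    def validate(self):
        """Check that every box has a positive size and lies inside the image."""
        for b in self.boxes:
            inside_x = 0 <= b.x_min < b.x_max <= self.width
            inside_y = 0 <= b.y_min < b.y_max <= self.height
            if not (inside_x and inside_y):
                raise ValueError(f"box for {b.label!r} is empty or outside the image")


annotation = ImageAnnotation(
    image_path="street_001.jpg",  # hypothetical file name
    width=640,
    height=480,
    boxes=[
        BoundingBox("car", 100, 200, 300, 350),
        BoundingBox("pedestrian", 400, 150, 450, 320),
    ],
)
annotation.validate()
print([(b.label, b.area()) for b in annotation.boxes])
# [('car', 30000), ('pedestrian', 8500)]
```

Validating records like this before training is a cheap way to catch labeling mistakes that would otherwise silently degrade accuracy.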

There are various ways to annotate images to improve the performance of a computer vision classifier. Image annotation is often done with bounding boxes: rectangles drawn around the edges of a target object that tell the computer to focus its attention within the box. Semantic segmentation is another type of image annotation, which assigns a class label to every pixel in an image. In other words, every pixel that could be considered “grass” or “trees” is labeled as belonging to that class. This technique provides pixel-level precision, but creating semantic segmentation annotations is more complex and time-consuming than drawing simple bounding boxes. Other annotation methods, such as lines and points, also exist.
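The difference in precision between the two approaches can be shown with a tiny hypothetical segmentation mask, in which every pixel carries a class label (0 for background, 1 for “tree”). The sketch derives the tightest bounding box around the labeled pixels and counts how many pixels each annotation covers. The mask and the helper name `mask_to_box` are made up for illustration, and the box uses inclusive pixel indices.

```python
# Hypothetical 6x8 semantic segmentation mask: 0 = background, 1 = "tree".
# Every entry labels exactly one pixel.
mask = [
    [0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

TREE = 1


def mask_to_box(mask, cls):
    """Smallest box (x_min, y_min, x_max, y_max), inclusive, around all pixels of `cls`."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v == cls]
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return min(xs), min(ys), max(xs), max(ys)


object_pixels = sum(row.count(TREE) for row in mask)
x_min, y_min, x_max, y_max = mask_to_box(mask, TREE)
box_pixels = (x_max - x_min + 1) * (y_max - y_min + 1)

print("box:", (x_min, y_min, x_max, y_max))  # box: (1, 0, 5, 4)
print("pixels labeled tree:", object_pixels)  # 11
print("pixels inside box:", box_pixels)       # 25
```

Here the box covers 25 pixels to capture an 11-pixel object, so more than half of the box is background. That is the imprecision per-pixel labels avoid, at the cost of labeling every pixel by hand.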