AI 101

What is Ensemble Learning?

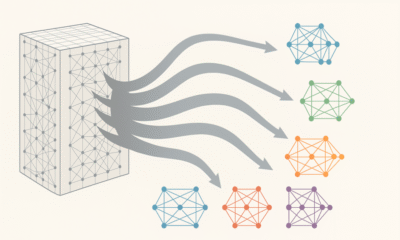

One of the most powerful machine learning techniques is ensemble learning. Ensemble learning is the use of multiple machine learning models to improve the reliability and accuracy of predictions. Yet how does the use of multiple machine learning models lead to more accurate predictions? What kind of techniques are used to create ensemble learning models? We’ll explore the answers to these questions, looking at the rationale behind ensemble models and the primary ways of building them.

What Is Ensemble Learning?

Put simply, ensemble learning is the process of training multiple machine learning models and combining their outputs. The individual models serve as building blocks for a single, better-performing predictive model. Combining a diverse set of models can make the overall model more stable, which often leads to more accurate predictions. Ensembles are frequently more reliable than any single model, and as a result they regularly appear among the top entries in machine learning competitions.

There are several techniques an engineer can use to create an ensemble learning model. Simple techniques include averaging the outputs of different models, while more complex methods and algorithms have been developed specifically to combine the predictions of many base learners/models.

Why Use Ensemble Training Methods?

Machine learning models can differ from each other for a variety of reasons: they may be trained on different samples of the population data, they may use different modeling techniques, or they may rest on different hypotheses.

Imagine that you are playing a trivia game with a large group of people. If you are on a team by yourself, there are bound to be some topics you know about and many topics you know nothing about. Now assume that you are playing on a team with other people. Just like you, they will know their own specialties and nothing about other topics. Yet when your knowledge is combined, you can make more accurate guesses across more fields, and the number of topics your team knows nothing about shrinks. This is the same principle that underlies ensemble learning: combining the predictions of different team members (individual models) improves accuracy and reduces errors.

Statisticians have observed that when a crowd of people is asked to pick the right answer to a question from a range of possible answers, their answers form a probability distribution. The people who truly know the correct answer will pick it with confidence, while the people who don’t will spread their guesses across the incorrect options. Going back to the trivia game, if you and your two friends know the right answer is A, all three of you will vote A, while the three other people on your team who don’t know the answer are likely to guess B, C, D, or E. The result is that A has three votes and each of the other answers is likely to get only one or two votes at most.

All models make some errors. Because the models differ for the reasons described above, the errors made by one model will tend to differ from the errors made by another. Ideally, then, the errors are not clustered around one wrong answer but scattered across many of them. Because the incorrect guesses are spread across all the possible wrong answers, they tend to cancel each other out, while the correct predictions from the different models cluster around the true answer. This only works when the models’ errors are not strongly correlated with one another. When that holds, ensemble methods can find the correct answer with greater reliability.
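
To make the “errors cancel out” argument concrete, the sketch below computes how often a simple majority vote is right when each of n models is independently correct with probability p on a two-class problem. This is the classic Condorcet jury calculation. Independence is an idealizing assumption, and the function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p, n):
    """Probability that a strict majority of n independent classifiers is correct.

    Assumes a binary task, an odd n, and that each classifier is correct with
    probability p independently of the others (an idealization).
    """
    needed = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


print(round(majority_vote_accuracy(0.7, 5), 3))  # 0.837
```

With p = 0.7, five independent voters are right about 83.7% of the time, compared with 70% for a single model. The same function also shows the flip side. If each model is worse than chance (for example p = 0.4), majority voting makes things worse, so the individual models must be better than random guessing.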

Simple Ensemble Training Methods

Simple ensemble training methods typically just apply statistical summary techniques, such as taking the mode, mean, or weighted average of a set of predictions.

The mode is the most frequently occurring value in a set. To use it, each individual model returns its prediction, and these predictions are treated as votes toward the final prediction. The class with the most votes wins. The mean is simply the arithmetic mean of the predictions, rounded to the nearest integer when the predictions are numeric class labels. Finally, a weighted average assigns each model a weight that represents its perceived importance. Each model’s numerical class prediction is multiplied by a weight between 0 and 1 (the weights are typically chosen to sum to 1). The weighted predictions are then summed, and the result is rounded to the nearest integer.
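
A minimal sketch of the three summary rules, assuming class labels are encoded as integers as the paragraph describes. The function names are illustrative, not from any library. Python’s built-in `round` sends halves to the nearest even number, so the sketch rounds halves up explicitly.

```python
import math
from collections import Counter


def mode_vote(predictions):
    """Majority vote: the most common label (ties go to the label seen first)."""
    return Counter(predictions).most_common(1)[0][0]


def mean_vote(predictions):
    """Arithmetic mean of numeric labels, rounded half up to the nearest integer."""
    return math.floor(sum(predictions) / len(predictions) + 0.5)


def weighted_vote(predictions, weights):
    """Weighted sum of numeric labels, rounded half up; weights are assumed to sum to 1."""
    total = sum(p * w for p, w in zip(predictions, weights))
    return math.floor(total + 0.5)


preds = [1, 1, 3]  # labels predicted by three hypothetical models
print(mode_vote(preds))                        # 1
print(mean_vote(preds))                        # 2
print(weighted_vote(preds, [0.1, 0.1, 0.8]))   # 3
```

The three rules disagree in this example. Two of the three models vote for class 1, so the mode is 1. The mean of 1, 1, and 3 rounds to 2. When the third model is trusted far more than the others, the weighted average rounds to 3. Averaging labels only makes sense when the class numbers have a meaningful order. For unordered classes, voting on the mode is the safer choice.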

Advanced Ensemble Training Methods

There are three primary advanced ensemble training techniques, each designed to deal with a specific type of machine learning problem. “Bagging” techniques are used to decrease the variance of a model’s predictions. Here, variance refers to how much the model’s prediction for the same observation changes when the model is trained on different samples of the data. “Boosting” techniques are used to reduce the bias of models. Finally, “stacking” improves predictions in general by training a model to learn how best to combine the outputs of several other models.

Ensemble learning methods themselves can generally be divided into two groups: sequential ensemble methods and parallel ensemble methods.

Sequential ensemble methods get their name because the base learners/models are generated one after another. The essential idea is to exploit the dependence between the base learners: each new learner is trained to correct the mistakes of the learners before it. In boosting-style methods, misclassified examples have their weights increased, while correctly classified examples keep the same weights. Every time a new learner is generated, the weights are updated and accuracy (hopefully) improves.
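
One common concrete form of this reweighting is the AdaBoost.M1 update, sketched below. The sketch assumes a binary task where the latest learner’s weighted error lies strictly between 0 and 0.5. Misclassified examples are scaled up by e^α while correctly classified ones keep their weight, and the weights are then renormalized to sum to 1. The function name `reweight` is hypothetical.

```python
import math


def reweight(weights, correct):
    """One AdaBoost.M1-style reweighting round.

    weights: current example weights (assumed to sum to 1)
    correct: booleans, True where the latest learner classified the example correctly
    Returns (alpha, new_weights), where alpha is the learner's say in the final vote.
    """
    error = sum(w for w, ok in zip(weights, correct) if not ok)
    alpha = math.log((1 - error) / error)  # assumes 0 < error < 0.5
    # Misclassified examples are scaled up; correct ones keep their weight.
    raw = [w if ok else w * math.exp(alpha) for w, ok in zip(weights, correct)]
    total = sum(raw)
    return alpha, [w / total for w in raw]  # renormalize so the weights sum to 1


alpha, new_weights = reweight([0.25, 0.25, 0.25, 0.25], [True, True, True, False])
print(alpha, new_weights)
```

With four equally weighted examples and one mistake, the error is 0.25, so α = ln 3 and the misclassified example’s weight triples before renormalization. It ends up carrying half of the total weight, while each correct example falls to 1/6. The next learner is therefore pushed to get that example right.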

In contrast to sequential ensemble models, parallel ensemble methods generate the base learners in parallel, independently of one another. Parallel ensemble learning exploits this independence: because the base learners’ errors are not tied to one another, averaging the learners’ predictions can reduce the overall error rate.
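
The benefit of averaging, and why independence matters, can be made precise. Assume B base learners whose predictions each have variance σ², with every pair of learners having correlation ρ (an idealization). The variance of their average is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat{f}_b(x)\right)
  = \frac{1}{B^2}\left(B\sigma^2 + B(B-1)\,\rho\,\sigma^2\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

With fully independent learners (ρ = 0), the variance falls to σ²/B. With correlated learners, it can never drop below ρσ², no matter how many learners are added. This is why bagging methods such as Random Forests work to decorrelate their individual trees.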

Ensemble training methods can be either homogeneous or heterogeneous. Most ensemble learning methods are homogeneous, meaning that they use a single type of base learning model/algorithm. In contrast, heterogeneous ensembles combine different learning algorithms, increasing the diversity of the learners so that the ensemble is as accurate as possible.

Examples Of Ensemble Learning Algorithms

Visualization of ensemble boosting. Photo: Sirakorn via Wikimedia Commons, CC BY-SA 4.0 (https://commons.wikimedia.org/wiki/File:Ensemble_Boosting.svg)

Examples of sequential ensemble methods include AdaBoost, XGBoost, and gradient tree boosting. These are all boosting models, and their goal is to turn weak, underperforming learners into a more powerful combined learner. Boosting algorithms build the ensemble one weak learner at a time, where each weak learner performs only slightly better than random guessing. In AdaBoost, weights are applied to the training data and adjusted after each round. Instances that earlier learners misclassified are given more weight, so the next learner concentrates on them. Gradient boosting methods such as XGBoost follow the same sequential idea but fit each new tree to the remaining errors of the ensemble instead of reweighting instances. After the desired number of training rounds, the learners’ predictions are combined through either a weighted sum (for regression tasks) or a weighted vote (for classification tasks).
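
To contrast with the AdaBoost-style reweighting, here is a minimal sketch of gradient boosting for regression with squared-error loss. The sketch assumes one input feature and uses depth-1 trees (stumps). Each new stump is fit to the residuals of the ensemble so far, which are the negative gradient of ½(y − F)². The final prediction is a weighted sum in which every stump is scaled by a learning rate. This is not XGBoost itself, which adds regularization and other refinements. The names `fit_stump`, `gradient_boost`, and `learning_rate` are illustrative.

```python
def fit_stump(xs, targets):
    """Fit a depth-1 regression tree (a stump) by trying every midpoint threshold."""
    mean = sum(targets) / len(targets)
    best = (sum((t - mean) ** 2 for t in targets), None, mean, mean)  # "no split" baseline
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2  # split halfway between neighbouring x values
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    if threshold is None:
        return lambda x: left_mean  # constant stump when no split helps
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Sequentially add stumps, each fit to the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []

    def predict(x):
        # Weighted sum: every stump contributes learning_rate times its output.
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]  # what the ensemble still gets wrong
        stumps.append(fit_stump(xs, residuals))
    return predict


model = gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
print(model(2), model(5))  # close to 1 and 5
```

The small learning rate means each stump only corrects a fraction of the remaining error. That is the usual trade-off in gradient boosting: more rounds are needed, but the ensemble fits the data more gradually.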

The bagging learning process. Photo: SeattleDataGuy via Wikimedia Commons, CC BY-SA 4.0 (https://commons.wikimedia.org/wiki/File:Bagging.png)

An example of a parallel ensemble model is the Random Forest classifier, which is also an example of a bagging technique. The term “bagging” comes from “bootstrap aggregation”. Samples are drawn from the full dataset using a technique known as “bootstrap sampling” (random sampling with replacement), and each base learner is trained on its own sample. For classification tasks, the outputs of the base models are aggregated by voting, while for regression tasks they are averaged. Random Forests use individual decision trees as their base learners, and each tree in the ensemble is built from a different bootstrap sample of the dataset. In addition, only a random subset of features is considered when choosing each split in a tree. This leads to highly randomized individual decision trees, which are combined to provide reliable predictions.
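
A compact sketch of the Random Forest recipe in plain Python follows. It is simplified so that each “tree” is a single-split decision stump. Every stump is trained on its own bootstrap sample and may only split on a randomly chosen subset of the features, and the forest predicts by majority vote. Real Random Forests grow much deeper trees and draw a fresh feature subset at every split. The function names and the toy dataset are illustrative.

```python
import random
from collections import Counter


def majority(labels):
    """Most common label in a non-empty list."""
    return Counter(labels).most_common(1)[0][0]


def fit_stump(rows, labels, features):
    """Pick the (feature, threshold) split with the fewest training errors."""
    fallback = majority(labels)
    best = (sum(y != fallback for y in labels), None, None, fallback, fallback)  # no split
    for f in features:
        values = sorted({r[f] for r in rows})
        for low, high in zip(values, values[1:]):
            t = (low + high) / 2  # split halfway between neighbouring values
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            left_label, right_label = majority(left), majority(right)
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if errors < best[0]:
                best = (errors, f, t, left_label, right_label)
    _, f, t, left_label, right_label = best
    if f is None:
        return lambda row: fallback  # e.g. a bootstrap sample containing a single class
    return lambda row: left_label if row[f] <= t else right_label


def random_forest_of_stumps(rows, labels, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    all_features = list(range(len(rows[0])))
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: n draws with replacement
        feats = rng.sample(all_features, n_features)  # random feature subset for this tree
        trees.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feats))
    return lambda row: majority([tree(row) for tree in trees])  # aggregate by voting


rows = [(0, 0), (1, 1), (0, 1), (1, 0), (5, 5), (6, 6), (5, 6), (6, 5)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = random_forest_of_stumps(rows, labels)
print(forest((0.5, 0.5)), forest((5.5, 5.5)))
```

Because each stump sees a different bootstrap sample and possibly a different feature, the individual stumps vary. A stump whose sample happens to contain only one class, for instance, simply predicts that class. The majority vote smooths these quirks out.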

Visualization of ensemble stacking. Photo: Supun Setunga via Wikimedia Commons, CC BY-SA 4.0 (https://commons.wikimedia.org/wiki/File:Stacking.png)

In stacking ensemble techniques, multiple regression or classification models are combined through a higher-level meta-model. The lower-level base models are trained on the entire dataset, and their outputs are then used as features to train the meta-model. In practice, the meta-model is usually trained on out-of-fold (cross-validated) predictions from the base models, so that it does not learn to over-trust base models that have simply memorized the training data. Stacking ensemble models are frequently heterogeneous in nature.
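
A minimal stacking sketch for a one-feature regression problem follows. It assumes two deliberately different (heterogeneous) base models, an ordinary least-squares line and a 1-nearest-neighbour regressor. The meta-model is a least-squares weighting of their two predictions with no intercept. Following common practice, the meta-model is fit on out-of-fold predictions from k-fold cross-validation, and the base models are then refit on the full dataset. All names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def fit_nearest_neighbour(xs, ys):
    """1-nearest-neighbour regression: copy the target of the closest training x."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


BASE_LEARNERS = [fit_line, fit_nearest_neighbour]  # exactly two, as fit_meta assumes


def out_of_fold_predictions(xs, ys, k=5):
    """For every example, predict with base models that never saw it during training."""
    preds = [None] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        models = [fit([xs[i] for i in train], [ys[i] for i in train]) for fit in BASE_LEARNERS]
        for i in range(len(xs)):
            if i % k == fold:
                preds[i] = [m(xs[i]) for m in models]
    return preds


def fit_meta(features, ys):
    """Least-squares weights (no intercept) for two base predictions, via Cramer's rule."""
    saa = sum(a * a for a, _ in features)
    sbb = sum(b * b for _, b in features)
    sab = sum(a * b for a, b in features)
    say = sum(a * y for (a, _), y in zip(features, ys))
    sby = sum(b * y for (_, b), y in zip(features, ys))
    det = saa * sbb - sab * sab
    return ((say * sbb - sab * sby) / det, (saa * sby - sab * say) / det)


def fit_stack(xs, ys):
    weights = fit_meta(out_of_fold_predictions(xs, ys), ys)
    base = [fit(xs, ys) for fit in BASE_LEARNERS]  # refit base models on all the data
    return weights, lambda x: weights[0] * base[0](x) + weights[1] * base[1](x)


xs = list(range(1, 11))
ys = [2 * x for x in xs]
weights, model = fit_stack(xs, ys)
print(weights, model(4.5))
```

The data here is exactly linear, so the meta-model should learn to put essentially all of its weight on the linear model, with weights close to 1 and 0. Stacking is meant to automate this kind of judgment about which base models to trust.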