Anderson's Angle

Total Recoil: The Groups Treating AI as an Intolerable Contaminant

‘Zero-tolerance’ towards AI-generated content is an increasingly appealing option in the face of growing legal, ethical, and user-base concerns around AI; but is this kind of ‘opting out’ a practical proposition?

Opinion

Anyone who uses social media and other types of online discussion forums may have noticed, lately, a growing number of communities, forums and professional organizations entirely banning the use of generative AI, as the economic impact of the technology spreads beyond the artists and writers whose protests ignited current concerns back in 2023.

With the legal and economic consequences of the AI revolution still uncertain, organizations facing pressure from both users and internal legal counsel are being forced to decide policy well before the full picture is clear – a situation complicated by the emerging belief that delayed adoption could leave them at a competitive disadvantage.

AI as Miasma

The decision to ban is a political one, with consequences; the ability to ban, however, entails both political and practical pitfalls, since the history of banning things that people really want is a checkered one at best; and even eradicating the things that nobody wants requires long-term, ongoing effort.

Thus, haunted by the much-misunderstood history of the Luddites, those communities and entities treating any trace of AI as utterly intolerable often adopt a defensive tone when announcing or discussing an AI ban.

As the Amish have found over the last few centuries, a reclusive stance against a truly impactive technology can be hard to maintain; strictly speaking, for instance, those adhering to the rules of veganism cannot watch movies filmed on traditional celluloid, or even have an x-ray. Likewise, opting out of fluoridation in countries or cities that adopt it can be difficult.

Effectively, a pervasive and popular technology is an ‘atmospheric’ phenomenon that doesn’t easily allow of the boundaries currently being set by a growing number of groups and organizations.

Pre-Legal Measures

As mentioned earlier, the first battles against AI’s encroachment were fought in the least deterministic and most interpretive sector of genAI – entertainment. When the American Screen Actors Guild–American Federation of Television and Radio Artists (SAG-AFTRA) fought for protection of its members’ rights in 2023, it was ultimately able to win some notable concessions.

There have been very few victories as decisive since then, in regard to AI – and the battle for legal clarity across a range of sectors is only just beginning. For instance, most of the new legislation around deepfakes, notably from the UK and the US, only includes AI in a broader category of crime (such as non-consensual imagery, real or fake).

Hot on the heels of SAG-AFTRA’s victory, a tranche of lawsuits around the use of the work of other kinds of artist is now in progress. In the meantime, many of the disaffected groups involved are anticipating legal ratification with local rules.

Before further considering the logic and constraints of a ‘totally intolerant’ attitude to AI, let’s look at some of the cases where this kind of policy is being enacted.

Zero-Tolerance AI Bans

A Note of Dissent

Only this month, Bandcamp became the first major online music platform to entirely ban AI content. The ban was announced in a statement which also declared ‘Music and audio that is generated wholly or in substantial part by AI is not permitted on Bandcamp’.

This restriction, as in nearly all cases, is forced to hedge its bets a little due to the aforementioned ‘miasma’ effect of widely-diffused AI systems. In another part of the announcement, it is stated that music that* ‘appears to be made entirely or with heavy reliance on generative AI’ is targeted in the ban – a pragmatic limitation, since, in most domains, at least some genAI content is capable of appearing entirely human-made, both to people, and to the ever-evolving AI-detection sector.

Effectively, this is the template for most of the new ‘zero-tolerance’ policies coming into force: that ignorance of AI is an excuse, in these challenging circumstances.

After an incident where an AI-created music track stormed the Swedish charts, IFPI Sweden, the Swedish national music industry organization, banned AI-generated music from inclusion in the charts – albeit with the customary caveat, stating* ‘Our rule is that if it is a song that is mainly AI-generated, it does not have the right to be on the top list’.

Again, the wish for a total ban on AI is here acknowledged to be impractical; the ban is tacit and total, but the implementation is effectively impossible.

Comic Effect

A wave of prohibition against genAI content has swept through the comics convention scene in the US and beyond, over the last year. The San Diego Comic-Con, which in 2024 had placed commercial restrictions on AI-produced art, has this month announced that it is banning AI content outright, reportedly in response to protest from artists.

Similarly, in October of last year, New York Comic-Con enforced a complete ban on selling AI-generated products or content, with the official site stating*:

‘Product, material, or content created, in whole or in substantial part, using generative artificial intelligence tools or algorithms (“AI-Generated”) or incorporating AI-Generated elements, including but not limited to visual artwork, images, or graphics, animations and/or materials, are prohibited from being made available for purchase at New York Comic Con.’

Once again, the phrase ‘in substantial part’ shows that the wording must acknowledge the difficulty of identifying the entire creative provenance of any work, these days. This reflects San Diego’s initial ban on commercializing AI output, rather than its later complete prohibition of AI content. That said, this approach is a rather effective ‘deplatforming’ of AI content, in most cases (though such work could still be used as a feeder to commercialized platforms).

Further, the FanX Salt Lake comic convention instituted a more decisive ban on AI content, in September of last year, with founder and producer Dan Farr commenting:

‘If customers come and buy a piece of art and they don’t realize generative AI was used to create that, they may pay a lot of money for it, not realizing it’s something not quite as valuable.’

Anti-Social

Many online communities have also adopted zero tolerance toward AI-generated content. Indeed, this is one of the most public-facing aspects of AI intolerance, and it was the increasing frequency of such bans that drew my attention to the topic:

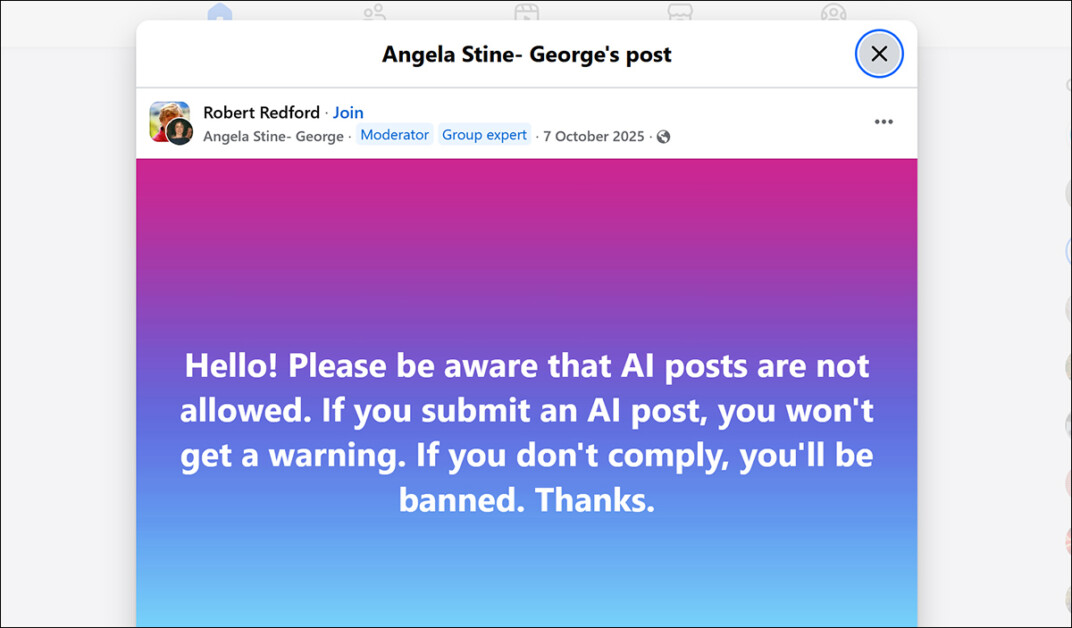

A typical anti-AI edict from Facebook.

Reddit has been especially affected, with moderators of various large subreddits often banning all AI-created images, art or text-based forms (such as fiction and programming), once such posts started flooding their forums and over-burdening limited human moderation resources.

A very early example, from 2022, was the r/Dune subreddit, where around 250,000 fans voted to forbid AI-generated art entirely, after being swamped with AI-made pictures. The sub’s mods noted at the time:

‘We acknowledge that many of these pieces are neat to look at, and the technology sure is fascinating, but it does technically qualify as low-effort content – especially when compared to original, ‘human-made’ art, which we would like to prioritize going forward.’

Since then, numerous subreddits (from r/lego to r/DataHoarder) have similarly declared that any content even partially made by AI will be removed on sight – at least, as ever, where recognizable.

Similar bans appear frequently across Facebook. However, since Meta, Facebook’s parent company, is one of the world’s vanguard developers of AI, whereas Reddit is instead a hotly-contended and sought-after source of ongoing human-based data, these bans tend to cause less controversy.

Surprisingly, it takes Meta a while to catch up with general public sentiment around AI – a year ago, for instance, the company made a bold retreat from an experiment with AI-based agents posting to diverse timelines, due to adverse user reactions.

ChatGPT Exfiltration Bans

One singular category of ‘absolute’ AI bans is the frequent prohibition against the use of ChatGPT in corporate or sensitive environments (and in this sense, much the same applies to any cloud-based, API-accessed language model, such as Claude or Gemini).

However, these bans are typically inspired not by liberal concerns around training data exploitation, but by fears that OpenAI will end up storing, processing, or even exploiting sensitive company IP disclosed during client chats.

While legal departments may support or even initiate corporate bans on ChatGPT-style platforms, they are also likely to consider whether the use of open-web data to train commercial systems could expose the company or its customers to future liabilities – particularly if ongoing litigation against OpenAI or similar entities should result in significant judgments.

Examples of bans on ChatGPT† include a wave of reactionary bans in 2023 from Apple, Amazon, Spotify, and Wells Fargo, among many others. That same year, a Cisco survey found that 27% of organizations polled had instituted similar bans – not on ChatGPT specifically, but on the use of GenAI overall.

In 2024, another survey found that 65% of the top 20 pharma companies had banned employees from using ChatGPT.

It is clear that many, if not all, of the companies noted here are likely to seek to benefit from AI in ways that suit their interests better; and that many are likely to be maintaining or developing other AI-based products for internal use – or even commercial API products with better IP protection than OpenAI can offer.

Many governments that are major proponents of AI also ban ChatGPT. Though some of these, such as Cuba and North Korea, are obvious candidates for this type of prohibition, China, currently considered the leading light of the AI revolution, bans ChatGPT at government level – presumably in favor of homespun alternatives.

Conclusion: About Zero Tolerance

One concern about any trend towards ‘absolute’ bans on AI (besides the challenge of identifying AI content) is whether or not uncompromising prohibition is actually effective. In fact, it’s easy to misunderstand what ‘zero tolerance’ actually is; what it can achieve; and the fairly limited circumstances in which it can attain its ends.

For example, New York City, perhaps most strongly associated with the term ‘zero tolerance’, was able to rise from its crime-ridden nadir in the 1970s and 1980s through the gradual and ongoing application of the ‘broken windows’ psychological theory, which posits that no matter how poor or affluent a neighborhood is, the presence of just one broken window will tend to lead to further broken windows – and an eventual escalation of other types of more serious crime, since the ongoing presence of the initial broken window suggests that the authorities are either not watching, or do not care.

Therefore, a ‘zero tolerance’ attitude to the ‘seed’ crime of breaking a window is believed to have downstream benefits, since this ‘criminal entropy’ can never get a foothold; in this way, by attending to little things (such as litter and broken windows, which are not expensive problems to resolve), the greater things (which would have cost more to resolve) take care of themselves.

Carried out, however, this theory (sometimes criticized as racist) can read like extreme authoritarianism, or even fascism:

From the only training film John Ford ever made, ‘OSS – Undercover Training’, an example of apparently obsessive zero tolerance in a faux WWII axis culture context.

Instead, ‘zero tolerance’ is often equated in the public mind to ‘stern-but-necessary’ action, such as the uncompromising laws which most nations have in regard to penalties for harming minors – i.e., clear rules for the public good, concretized into punitive laws which inspire little debate or compromise.

In truth, ZT was designed to achieve urban renewal on a budget, by suggesting greater resources in a public arena than actually existed behind the scenes; not a particularly applicable use case for putting the AI genie back in the bottle.

And in practice, generative AI is already so pervasive that your apparently AI-free downstream data may well already owe something to it – even if it is an AI-enhanced codec in a (non-fake) video that you made, submitted to YouTube, and posted to your favorite social media channel. If AI truly becomes like a cultural ‘gas’, it seems set to defy clear borders, or binary solutions such as an ‘AI ban’.

* My emphasis.

† Which we can consider representative of this class of LLM, rather than an exclusive product, in this sense.

First published Thursday, January 29, 2026