Photonic Chips Seem to Boost Speed of AI Processes Dramatically

A team of researchers recently applied photonic processors to artificial intelligence applications, demonstrating that such processors can greatly surpass the information-processing capability of conventional electronic chips.

The research team developed a new chip architecture and approach that combines data storage and data processing in a single device. The chips are built with phase-change materials, like those used in rewritable DVDs. Because the processor is photonic (light-based), the chip can carry out calculations at high speed. In a study recently published in Nature, the team showed that the new chips greatly outperform traditional electronic chips, thanks to their ability to process information rapidly and in parallel.

Matrix-Vector Multiplications

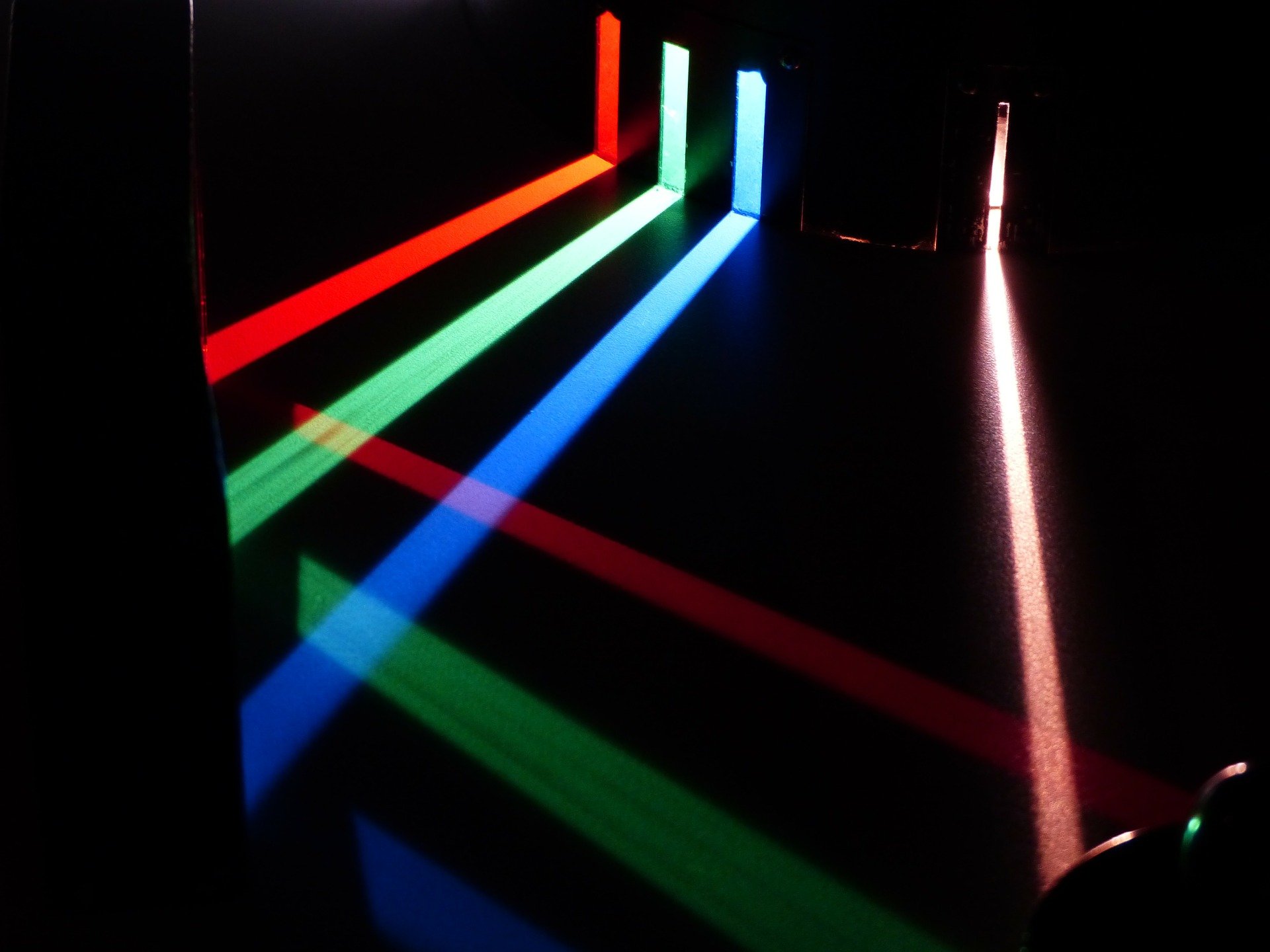

The neural networks that underlie many of the most cutting-edge AI applications rely heavily on matrix-vector multiplications. The research team created a hardware accelerator that carries out these multiplications in parallel. The optical chips exploit the fact that different wavelengths of light don’t interfere with one another, so separate wavelengths can carry separate calculations at the same time. To supply the photonic chip with these wavelengths, the team used a “frequency comb” developed at EPFL as the light source.
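To make the wavelength-parallelism idea concrete, here is a toy Python model, not the team's actual chip design. It assumes each matrix weight is stored as a transmission value between 0 and 1 (loosely mirroring how a phase-change cell can attenuate light), that each wavelength carries its own input vector as optical power, and that a photodetector sums the attenuated power on each output line. The function name `photonic_mvm` and this simplified signal model are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def photonic_mvm(transmissions: Matrix, inputs_per_wavelength: List[Vector]) -> List[Vector]:
    """Toy model of a wavelength-multiplexed matrix-vector multiply (hypothetical).

    transmissions[i][j]: assumed transmission (0..1) of the cell linking input j to output i.
    inputs_per_wavelength[k]: input vector encoded as optical power on wavelength k.
    Returns one output vector per wavelength.
    """
    for row in transmissions:
        for t in row:
            if not 0.0 <= t <= 1.0:
                raise ValueError("transmission must lie in [0, 1]")

    outputs = []
    # This loop is sequential in Python; the model assumes that on the chip
    # the wavelength channels propagate simultaneously without interfering.
    for x in inputs_per_wavelength:
        if len(x) != len(transmissions[0]):
            raise ValueError("input length must match matrix width")
        if any(p < 0 for p in x):
            raise ValueError("optical power cannot be negative")
        # Each output line sums the attenuated powers; a detector reads the total.
        y = [sum(t * p for t, p in zip(row, x)) for row in transmissions]
        outputs.append(y)
    return outputs


# Two input vectors on two wavelengths share the same stored matrix.
print(photonic_mvm([[0.5, 1.0], [0.25, 0.0]], [[1.0, 2.0], [4.0, 0.0]]))
```

The key design point is that the stored matrix is reused by every wavelength channel, so adding wavelengths adds matrix-vector products without adding matrix cells. Real hardware also has to deal with signed weights, detector noise, and conversion back to electronics, which this sketch ignores.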

Development of the frequency comb was led by Professor Tobias Kippenberg at EPFL. Kippenberg is one of the study’s lead authors, along with Wolfram Pernice of the University of Münster. According to Pernice, light-based processors can speed up the computationally intensive tasks involved in machine learning, performing computations even faster than specialized hardware such as Tensor Processing Units (TPUs) and the most advanced GPUs.

Neural Network Training

After designing and building the photonic chips, the researchers put them through their paces on a neural network designed to recognize handwritten digits. The neural network training process took advantage of the wavelength multiplexing the chips enable, achieving higher data rates and computing densities than had previously been attained.

Johannes Feldmann, the study’s lead author and a graduate student at the University of Münster, explained the approach in comments reported by TechXplore:

“The convolutional operation between input data and one or more filters—which can be a highlighting of edges in a photo, for example—can be transferred very well to our matrix architecture. Exploiting light for signal transference enables the processor to perform parallel data processing through wavelength multiplexing, which leads to a higher computing density and many matrix multiplications being carried out in just one timestep.”
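Feldmann's point that convolution "can be transferred very well" to a matrix architecture can be illustrated with the standard im2col technique: every image patch is unrolled into a row, so applying a filter becomes a matrix-vector product. The minimal Python sketch below assumes square filters, stride 1, and no padding, and follows the usual CNN convention of cross-correlation (the filter is not flipped). The helper names `im2col` and `conv_as_matmul` are illustrative and do not come from the study.

```python
from typing import List

Matrix = List[List[float]]


def im2col(image: Matrix, k: int) -> Matrix:
    """Unroll every k x k patch (stride 1, no padding) into one row of a patch matrix."""
    h, w = len(image), len(image[0])
    rows = []
    for r in range(h - k + 1):
        for c in range(w - k + 1):
            rows.append([image[r + i][c + j] for i in range(k) for j in range(k)])
    return rows


def conv_as_matmul(image: Matrix, kernels: List[Matrix]) -> List[Matrix]:
    """Apply each square kernel as a product of the patch matrix and the flattened kernel."""
    k = len(kernels[0])
    patches = im2col(image, k)
    out_h, out_w = len(image) - k + 1, len(image[0]) - k + 1
    results = []
    for kernel in kernels:
        flat = [v for row in kernel for v in row]
        # One dot product per patch: this is the matrix-vector multiplication step.
        values = [sum(p * f for p, f in zip(patch, flat)) for patch in patches]
        # Fold the flat result back into a 2D feature map.
        results.append([values[r * out_w:(r + 1) * out_w] for r in range(out_h)])
    return results


# A vertical edge in a 3x3 image, probed by a vertical and a horizontal edge filter.
image = [[0, 0, 1], [0, 0, 1], [0, 0, 1]]
vertical = [[-1, 1], [-1, 1]]
horizontal = [[-1, -1], [1, 1]]
print(conv_as_matmul(image, [vertical, horizontal]))
```

Here the vertical filter responds with 2 wherever the edge falls inside the patch, while the horizontal filter returns zeros. Because the patch matrix is shared by all filters, several filters can be evaluated against the same unrolled data, which is the kind of reuse a parallel matrix processor is suited to.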

The work is notable because it could allow neural networks to be trained on large datasets in a fraction of the usual time. As big data keeps growing, inflated by the internet of things and the proliferation of smart devices, data scientists will need new ways to keep training times on massive datasets as low as possible. Traditional electronics typically operate in the low-gigahertz range, while optical modulation speeds can reach 50 GHz to 100 GHz.
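A back-of-envelope calculation shows why modulation speed and wavelength count both matter. The sketch below assumes an idealized core in which every matrix cell performs one multiply-accumulate (MAC) each time a new input vector is modulated onto each wavelength. The 4x4 array size, the four wavelengths, and the 3 GHz electronic figure are hypothetical values chosen for illustration, not numbers from the study, and the model ignores detection, analog-to-digital conversion, and memory bandwidth.

```python
def peak_macs_per_second(rows: int, cols: int, wavelengths: int, modulation_hz: float) -> float:
    """Idealized upper bound on MACs per second for a matrix core (hypothetical model).

    Assumes each of the rows * cols cells does one MAC per input vector, and that
    each wavelength channel delivers a new input vector at the modulation rate.
    """
    return rows * cols * wavelengths * modulation_hz


# Same hypothetical 4x4 array: one channel at a 3 GHz electronic clock versus
# four wavelength channels each modulated at 50 GHz.
electronic = peak_macs_per_second(4, 4, 1, 3e9)
optical = peak_macs_per_second(4, 4, 4, 50e9)
print(f"electronic: {electronic:.2e} MAC/s, optical: {optical:.2e} MAC/s")
print(f"ratio: {optical / electronic:.1f}x")
```

Under these assumptions the optical configuration reaches 3.2e12 MAC/s against 4.8e10 MAC/s, roughly 67 times more, with the gain coming from both the faster modulation and the extra wavelength channels. Real-world throughput would be lower once the ignored overheads are included.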

The research could have important implications for applications like cloud computing, medical imaging, and autonomous vehicles, which all require the ability to process large volumes of data originating from multiple sources as quickly as possible.

The research project was the result of a collaboration among an international team of researchers from the University of Pittsburgh, the University of Münster in Germany, the Universities of Exeter and Oxford in England, IBM Zurich, and the École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland.