Google Envisages a GPT-3-like Query System, Without Search Results

A new paper from four Google researchers proposes an ‘expert’ system capable of authoritatively answering users’ questions without presenting a list of possible search results, similar to the Q&A paradigm that has come to public attention through the advent of GPT-3 over the past year.

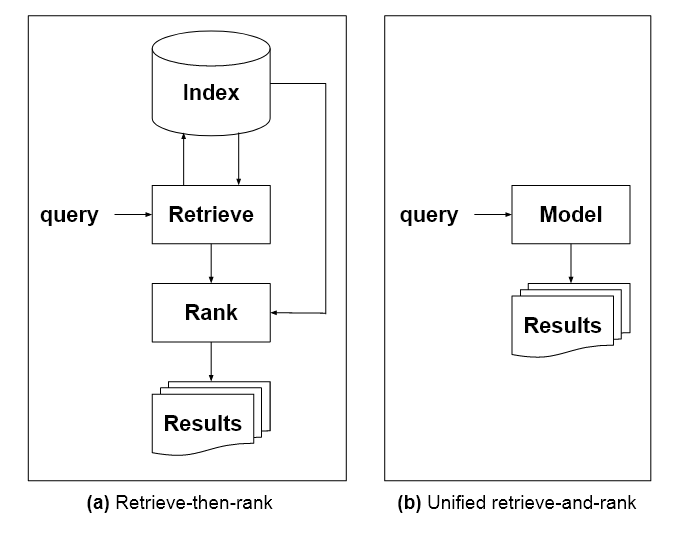

The paper, entitled Rethinking Search: Making Experts out of Dilettantes, argues that the current standard of presenting the user with a list of search results in response to an inquiry imposes a ‘cognitive burden’, and proposes improving the ability of a natural language processing (NLP) system to provide an authoritative and definitive response.

Under the proposed model of an ‘expert’, cross-domain oracle, the thousands of possible search result sources would be baked into a language model instead of being explicitly available as an exploratory resource for users to evaluate and navigate for themselves. Source: https://arxiv.org/pdf/2105.02274.pdf

The paper, whose lead author is Donald Metzler of Google Research, proposes improvements to the type of multi-domain oracle responses that can currently be obtained from deep learning autoregressive language models such as GPT-3. The main improvements envisaged are a) that the model would be capable of accurately citing the sources that informed the response, and b) that the model would be prevented from ‘hallucinating’ responses or inventing non-existent source material, which is currently an issue with such architectures.

Multi-Domain Training And Capabilities

Additionally, the proposed language model, characterized in the paper as ‘A Single Model for all Information Retrieval Tasks’, would be trained across a variety of domains and modalities, including text and images. It would also need an understanding of the provenance of knowledge, which GPT-3-style architectures lack.

‘To replace indexes with a single, unified model, it must be possible for the model itself to have knowledge about the universe of document identifiers, in the same way that traditional indexes do. One way to accomplish this is to move away from traditional LMs and towards corpus models that jointly model term-term, term-document, and document-document relationships.’
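To make the three relationship types in that passage concrete, here is a deliberately tiny, count-based sketch. It is not the paper's method (the researchers envisage a learned neural model); the class name `ToyCorpusModel`, the whitespace tokenization and the sample documents are all hypothetical, chosen only to show a single object that maps a query straight to document identifiers without consulting a separate index.

```python
from collections import Counter, defaultdict
from itertools import combinations


class ToyCorpusModel:
    """Hypothetical count-based stand-in for a 'corpus model'.

    Tracks three relationship types over a small corpus:
      term-term: number of documents in which two terms co-occur
      term-doc:  how often a term appears in a given document ID
      doc-doc:   how many distinct terms two documents share
    """

    def __init__(self, docs):
        # docs: mapping of document identifier -> raw text
        self.term_term = Counter()
        self.term_doc = defaultdict(Counter)
        self.doc_doc = Counter()
        doc_terms = {}
        for doc_id, text in docs.items():
            terms = text.lower().split()  # naive tokenization (assumption)
            for term in terms:
                self.term_doc[term][doc_id] += 1
            unique = sorted(set(terms))
            doc_terms[doc_id] = set(unique)
            for a, b in combinations(unique, 2):
                self.term_term[(a, b)] += 1
        for d1, d2 in combinations(sorted(doc_terms), 2):
            shared = len(doc_terms[d1] & doc_terms[d2])
            if shared:
                self.doc_doc[(d1, d2)] = shared

    def docs_for_query(self, query):
        """Rank document IDs directly from the model's term-doc knowledge."""
        scores = Counter()
        for term in query.lower().split():
            scores.update(self.term_doc.get(term, {}))
        return [doc_id for doc_id, _ in scores.most_common()]


docs = {
    "doc-a": "cats purr and cats sleep",
    "doc-b": "dogs bark and dogs sleep",
    "doc-c": "cats chase mice",
}
model = ToyCorpusModel(docs)
print(model.docs_for_query("cats sleep"))
```

A real corpus model would learn these associations as parameters rather than store explicit counts, which is precisely what makes later removal of a single source harder.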

The image above, from the paper, contrasts three approaches to answering a user inquiry. Left: the language models implicit in Google’s algorithmic search results have chosen and prioritized a ‘best answer’, but have left it as the top result among many. Center: a GPT-3-style conversational response, which speaks with authority but does not justify its claims or cite sources. Right: the proposed expert system incorporates the ‘best response’ from the ranked search results directly into a didactic answer, with academic-style footnote citations (not depicted in the original image) indicating the sources that inform the response.

Removing Poisonous And Inaccurate Results

The researchers note that the dynamic and constantly updated nature of search indexes is difficult to replicate fully in a machine learning model of this kind. For instance, where a once-trusted source has been trained directly into the model’s understanding of the world, removing its influence (for instance, after it is discredited) may be far harder than simply removing a URL from SERPs, since concepts from that source can become abstracted and widely distributed through the model during training.

Additionally, such a model would need to be trained continuously in order to match the responsiveness to new articles and publications that Google’s constant spidering of sources currently provides. Effectively, this means continuous, automated roll-out, in contrast to the current regime, where minor adjustments are made to the weights and settings of the search algorithm, but the algorithm itself is updated only infrequently.

Attack Surfaces For A Centralized Expert Oracle

A centralized model constantly assimilating and generalizing new data could transform the attack surface for search inquiries.

Currently, an attacker can profit by achieving a high ranking for domains or pages that contain misinformation or malicious code. Under a more opaque ‘expert’ oracle, the opportunity to redirect users to attack domains is greatly diminished, but the scope for data poisoning attacks is greatly increased.

This is because the proposed system does not eliminate the search ranking algorithm, but hides it from the user, effectively automating the prioritization of the top result(s) and baking them into a didactic statement. Malicious users have long been able to orchestrate attacks against the Google search algorithm to sell fake products, direct users to malware-spreading domains, or manipulate political opinion, among many other purposes.

Not AGI

The researchers emphasize that such a system would be unlikely to qualify as Artificial General Intelligence (AGI), and place the prospect of a universal expert responder in the context of natural language processing, subject to all the challenges that such models currently face.

The paper outlines five requirements for a ‘high quality’ response:

1: Authority

As with current ranking algorithms, ‘authority’ appears to be derived from citations by high-quality domains that are themselves considered authoritative. The researchers observe:

‘Responses should generate content by pulling from highly authoritative sources. This is another reason why establishing more explicit connections between sequences of terms and document metadata is so crucial. If all of the documents in a corpus are annotated with an authoritative-ness score, that score should be taken into account when training the model, generating responses, or both.’
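The quote leaves open how an authoritativeness score would be combined with relevance. One simple possibility, sketched below purely as an assumption, is to linearly interpolate the two scores when choosing which sources ground a generated response (i.e. the ‘generating responses’ half of the quote, not training). The `Candidate` fields, the `weight` parameter and all scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    doc_id: str       # hypothetical document identifier
    relevance: float  # assumed model-estimated relevance, in [0, 1]
    authority: float  # assumed per-document authoritativeness score, in [0, 1]


def rerank_by_authority(candidates, weight=0.5, top_k=2):
    """Pick grounding sources by blending relevance and authority.

    Linear interpolation is an illustrative choice, not the paper's scheme:
    weight=0 ranks purely by relevance, weight=1 purely by authority.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")

    def blended(c):
        return (1 - weight) * c.relevance + weight * c.authority

    ranked = sorted(candidates, key=blended, reverse=True)
    return [(c.doc_id, round(blended(c), 3)) for c in ranked[:top_k]]


candidates = [
    Candidate("blog-post", relevance=0.9, authority=0.2),
    Candidate("encyclopedia", relevance=0.7, authority=0.9),
    Candidate("forum-thread", relevance=0.8, authority=0.4),
]
print(rerank_by_authority(candidates))
```

With the default weight, the highly relevant but low-authority blog post drops out of the grounding set; with `weight=0.0` it would rank first again.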

Though the researchers do not suggest that traditional SERP results would become unavailable if an expert oracle of this type were found to be performant and popular, the paper consistently presents the traditional ranking system and search result lists as a ‘decades old’ and outmoded approach to information retrieval.

‘The very fact that ranking is a critical component of this paradigm is a symptom of the retrieval system providing users a selection of potential answers, which induces a rather significant cognitive burden on the user. The desire to return answers instead of ranked lists of results was one of the motivating factors for developing question answering systems.’

2: Transparency

The researchers comment:

‘Whenever possible, the provenance of the information being presented to the user should be made available to them. Is this the primary source of information? If not, what is the primary source?’
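The two questions in that quote amount to walking a provenance chain back to its origin. The sketch below assumes, hypothetically, that each document records at most one upstream `derived_from` link; the document names and the `cite` formatting are illustrative only, and real provenance graphs would usually be messier than a single chain.

```python
def primary_source(doc_id, derived_from):
    """Follow hypothetical 'derived_from' links to a document with no upstream source."""
    seen = {doc_id}
    current = doc_id
    while current in derived_from:
        current = derived_from[current]
        if current in seen:  # guard against circular attributions
            raise ValueError(f"provenance cycle detected at {current!r}")
        seen.add(current)
    return current


def cite(doc_id, derived_from):
    """Format a citation that also names the primary source when it differs."""
    root = primary_source(doc_id, derived_from)
    return doc_id if root == doc_id else f"{doc_id} (primary source: {root})"


derived_from = {
    "news-summary": "press-release",
    "press-release": "journal-article",
}
print(cite("news-summary", derived_from))
print(cite("journal-article", derived_from))
```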

3: Handling Bias

The paper notes that pre-trained language models are designed not to evaluate empirical truth, but to generalize from and prioritize the dominant trends in their data. It concedes that this tendency leaves the model open to attack (as occurred with Microsoft’s unintentionally racist chatbot in 2016), and that ancillary systems will be needed to safeguard against such biased responses.

4: Enabling Diverse Viewpoints

The paper also proposes mechanisms to ensure a plurality of points of view:

‘Generated responses should represent a range of diverse perspectives but should not be polarizing. For example, for queries about controversial topics, both sides of the topic should be covered in a fair and balanced way. This obviously has close tie-ins with model bias.’

5: Accessible Language

Besides providing accurate translations in cases where the response deemed authoritative is in a different language, the paper suggests that encapsulated responses should be ‘written in as-plain-as-possible terms’.