
StyleTTS 2: Human-Level Text-to-Speech with Large Speech Language Models

Thanks to a growing number of speech synthesis approaches, one of the major achievements of the AI industry in the past few years has been the development of effective text-to-speech (TTS) frameworks, with potential applications across industries including audiobooks, virtual assistants, and voice-over narration. Some state of the art models now deliver human-level performance and efficiency across a wide array of speech-related tasks. Despite this strong performance, there is still room for improvement: generating expressive and diverse speech remains difficult, zero-shot TTS frameworks require large amounts of training data, and robustness to OOD or Out of Distribution texts is limited. These gaps have led developers to work on more robust and accessible text to speech frameworks.

In this article, we will be talking about StyleTTS2, a robust and innovative text to speech framework that is built on the foundations of the StyleTTS framework and aims to take the next step towards state of the art text to speech systems. StyleTTS2 models speech styles as latent random variables and uses a probabilistic diffusion model to sample them, which allows it to synthesize realistic speech without reference audio inputs. Thanks to this approach, StyleTTS2 not only delivers better results and higher efficiency than current state of the art text to speech frameworks, but also takes advantage of the diverse speech synthesis offered by diffusion models. We will discuss the StyleTTS2 framework in greater detail, covering its architecture and methodology, and then look at the results it achieves. So let's get started.

StyleTTS2 for Text to Speech Synthesis: An Introduction

StyleTTS2 is an innovative Text to Speech synthesis model that takes the next step towards human-level TTS, and it is built upon StyleTTS, a style-based generative text to speech model. StyleTTS2 models speech styles as latent random variables and samples them with a probabilistic diffusion model, which lets it synthesize realistic speech without reference audio. Modeling styles as latent random variables is what separates StyleTTS2 from its predecessor: it lets the model generate the most suitable speech style for the input text without a reference audio input, and it achieves efficient latent diffusion while still benefiting from the diverse speech synthesis capabilities of diffusion models. Additionally, StyleTTS2 employs large pre-trained SLMs (Speech Language Models) such as WavLM as discriminators, and couples them with a novel differentiable duration modeling approach to train the framework end to end, ultimately generating speech with enhanced naturalness. Thanks to this approach, StyleTTS2 outperforms current state of the art frameworks on speech generation tasks, and it is a data-efficient alternative to large-scale pre-trained speech models for zero-shot speaker adaptation.

Moving along, to deliver human-level text to speech synthesis, StyleTTS2 builds on existing work on diffusion models for speech synthesis and on large speech language models. Diffusion models are widely used for speech synthesis thanks to their fine-grained speech control and diverse speech sampling capabilities. However, they are not as efficient as non-iterative GAN-based frameworks, mainly because they must iteratively sample latent representations, mel-spectrograms, or waveforms whose size is proportional to the target duration of the speech.

On the other hand, recent work on large Speech Language Models (SLMs) has shown that they can enhance the quality of text to speech generation and adapt well to the speaker. These approaches typically convert the text input into quantized or continuous representations derived from pre-trained SLMs, which are then used for speech reconstruction. However, SLM features are not optimized directly for speech synthesis. In contrast, StyleTTS2 leverages the knowledge of large SLMs through adversarial training, without mapping into the SLM latent space, and therefore learns a latent space optimized for speech synthesis directly.

StyleTTS2: Architecture and Methodology

At its core, StyleTTS2 is built on its predecessor, StyleTTS, a non-autoregressive text to speech framework that uses a style encoder to derive a style vector from reference audio, enabling expressive and natural speech generation. StyleTTS incorporates this style vector directly into the decoder, the duration predictor, and the prosody predictor through AdaIN or Adaptive Instance Normalization, which allows it to generate speech with varying prosody, duration, and even emotion. The StyleTTS framework consists of 8 modules in total, divided into three categories:

- An acoustic model, or speech generation system, with a style encoder, a text encoder, and a speech decoder.

- A text to speech prediction system with prosody and duration predictors.

- A utility system for training, including a text aligner, a pitch extractor, and a discriminator.
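To make the AdaIN step more concrete, here is a minimal NumPy sketch, assuming channel-first hidden features of shape (channels, time). Each channel is normalized to zero mean and unit variance over time, then rescaled and shifted by per-channel parameters derived from the style vector. The `style_to_affine` projection, the shapes, and all names are hypothetical illustrations, not the StyleTTS implementation.

```python
import numpy as np

def adain(content, gamma, beta, eps=1e-5):
    """Adaptive Instance Normalization.

    content: (channels, time) hidden features for one utterance.
    gamma, beta: (channels,) per-channel scale and shift derived from the style vector.
    """
    mean = content.mean(axis=1, keepdims=True)   # per-channel mean over time
    std = content.std(axis=1, keepdims=True)     # per-channel std over time
    normalized = (content - mean) / (std + eps)  # remove the input's own statistics
    return gamma[:, None] * normalized + beta[:, None]  # impose the style's statistics

def style_to_affine(style, weight, bias):
    """Hypothetical learned projection: style vector -> concatenated (gamma, beta)."""
    params = weight @ style + bias               # shape (2 * channels,)
    gamma, beta = np.split(params, 2)
    return gamma, beta

# Usage: a random 16-dim style vector modulating 4-channel features.
rng = np.random.default_rng(0)
features = rng.standard_normal((4, 50)) * 3.0 + 1.0
style = rng.standard_normal(16)
gamma, beta = style_to_affine(style, rng.standard_normal((8, 16)), np.zeros(8))
styled = adain(features, gamma, beta)
```

Because the same content features can be pushed through different (gamma, beta) pairs, swapping the style vector changes the output statistics without touching the content path, which is what lets one style vector steer prosody, duration, and emotion.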

Thanks to this approach, StyleTTS delivers state of the art performance in controllable and diverse speech synthesis. However, it has drawbacks: degraded sample quality, limited expressiveness, and a reliance on reference speech that hinders real-time applications.

Improving upon StyleTTS, the StyleTTS2 model delivers more expressive text to speech synthesis, better out of distribution performance, and human-level quality. StyleTTS2 uses an end to end training process that jointly optimizes its components with adversarial training and direct waveform synthesis. Unlike StyleTTS, StyleTTS2 models the speech style as a latent variable and samples it via a diffusion model, generating diverse speech samples without reference audio. Let's take a detailed look at these components.

End to End Training for Inference

In StyleTTS2, an end to end training approach is used to optimize the various text to speech components for inference without relying on fixed components. StyleTTS2 achieves this by modifying the decoder to generate the waveform directly from the style vector, the pitch and energy curves, and the aligned representations: it removes the decoder's last projection layer and replaces it with a waveform decoder. StyleTTS2 provides two waveform decoders: a HifiGAN-based decoder that generates the waveform directly, and an iSTFT-based decoder that produces magnitude and phase, which are converted into waveforms for faster inference and training.
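The final step of the iSTFT-based decoder, turning predicted magnitude and phase into a waveform, can be sketched in NumPy. In this sketch the magnitude and phase come from an analysis STFT of a random signal rather than from a network, and the frame size, hop length, and Hann window are assumptions; the point is only that, away from the signal edges, magnitude and phase determine the waveform through an inverse STFT with overlap-add.

```python
import numpy as np

N_FFT, HOP = 256, 64  # hypothetical frame size and hop length

def stft(x, n_fft=N_FFT, hop=HOP):
    """Analysis STFT with a Hann window; returns (frames, n_fft // 2 + 1) complex bins."""
    window = np.hanning(n_fft)
    starts = range(0, len(x) - n_fft + 1, hop)
    return np.array([np.fft.rfft(x[s:s + n_fft] * window) for s in starts])

def istft_from_mag_phase(magnitude, phase, n_fft=N_FFT, hop=HOP):
    """Rebuild a waveform from magnitude and phase via weighted overlap-add."""
    spec = magnitude * np.exp(1j * phase)        # complex spectrum from polar form
    window = np.hanning(n_fft)
    length = (spec.shape[0] - 1) * hop + n_fft
    out, norm = np.zeros(length), np.zeros(length)
    for i, frame in enumerate(spec):
        start = i * hop
        out[start:start + n_fft] += np.fft.irfft(frame, n=n_fft) * window
        norm[start:start + n_fft] += window ** 2
    nonzero = norm > 1e-8                        # skip edge samples no window covers
    out[nonzero] /= norm[nonzero]
    return out

# Usage: in StyleTTS2 a network would predict these; here we derive them from a signal.
rng = np.random.default_rng(0)
signal = rng.standard_normal(4096)
spec = stft(signal)
waveform = istft_from_mag_phase(np.abs(spec), np.angle(spec))
```

Predicting a compact magnitude and phase representation and applying a fixed inverse transform, rather than generating every sample with upsampling layers, is what makes this decoder variant faster.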

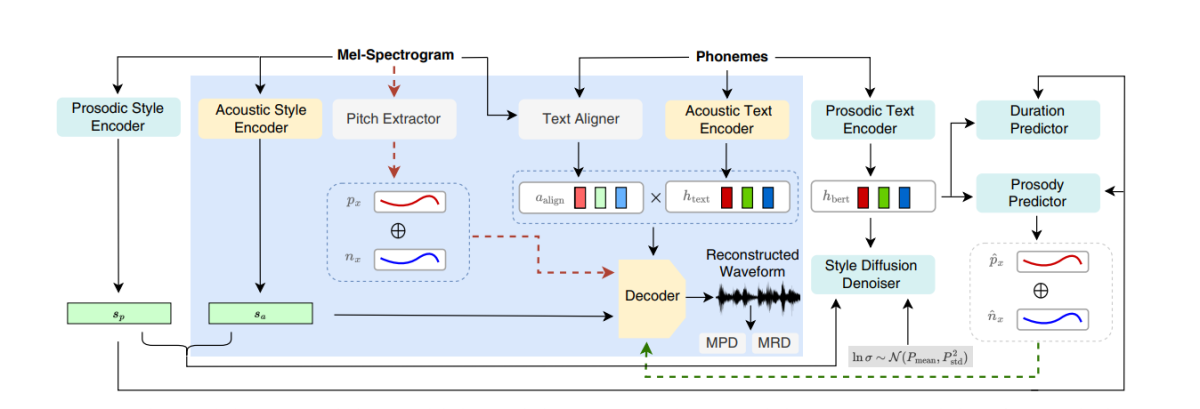

The above figure shows the acoustic modules used for pre-training and joint training. To reduce training time, the modules are first optimized in a pre-training phase, after which all components except the pitch extractor are optimized during joint training. Joint training does not optimize the pitch extractor because it provides the ground truth for the pitch curves.

The above figure also shows SLM adversarial training and inference, with a WavLM model that is pre-trained but not fine-tuned. This process differs from the one described above in that it can take varying input texts, and it accumulates gradients to update the parameters in each batch.

Style Diffusion

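The StyleTTS2 framework models speech $x$ as a conditional distribution given the input text $t$, through a latent variable $s$ that follows the conditional distribution $p(s \mid t)$:

$$p(x \mid t) = \int p(x \mid t, s)\, p(s \mid t)\, ds$$

This variable $s$ is called the generalized speech style, and it represents any characteristic of the speech sample beyond its phonetic content, including lexical stress, prosody, speaking rate, and even formant transitions. StyleTTS2 samples $s$ from $p(s \mid t)$ with a diffusion model instead of extracting it from reference audio.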
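The sampling step can be illustrated with a toy example, written under our own assumptions. It treats the style as a single scalar, replaces the text-conditioned denoising network with the closed-form optimal denoiser for a Gaussian prior N(mu, sigma_data^2) (with `mu` standing in for the text conditioning), and integrates the EDM-style probability-flow ODE (Karras et al., 2022) with a plain Euler sampler and EDM's default noise schedule. StyleTTS2's actual network, style dimensionality, schedule, and sampler may differ; `toy_denoiser`, `mu`, and `sigma_data` are hypothetical.

```python
import numpy as np

def karras_sigmas(n_steps=100, sigma_min=0.002, sigma_max=80.0, rho=7.0):
    """EDM noise levels, decreasing from sigma_max to sigma_min, followed by a final 0."""
    ramp = np.linspace(0.0, 1.0, n_steps)
    min_inv_rho = sigma_min ** (1.0 / rho)
    max_inv_rho = sigma_max ** (1.0 / rho)
    sigmas = (max_inv_rho + ramp * (min_inv_rho - max_inv_rho)) ** rho
    return np.append(sigmas, 0.0)

def toy_denoiser(x, sigma, mu, sigma_data):
    """Hypothetical stand-in for D(x; sigma, text): the exact E[s | s + sigma * noise = x]
    when the style prior is N(mu, sigma_data^2)."""
    return (sigma_data**2 * x + sigma**2 * mu) / (sigma_data**2 + sigma**2)

def sample_styles(mu, sigma_data, n_samples, rng, n_steps=100):
    """Draw styles by integrating dx/dsigma = (x - D(x; sigma)) / sigma with Euler steps."""
    sigmas = karras_sigmas(n_steps)
    x = rng.standard_normal(n_samples) * sigmas[0]          # start from pure noise
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        slope = (x - toy_denoiser(x, sigma, mu, sigma_data)) / sigma
        x = x + (sigma_next - sigma) * slope                 # step toward lower noise
    return x

# Usage: the "text" is summarized by mu; each sample is a different style for it.
styles = sample_styles(mu=2.0, sigma_data=0.5, n_samples=10_000, rng=np.random.default_rng(0))
```

Because every call starts from fresh noise, repeated calls yield different styles for the same conditioning, which is where the diversity comes from. Only a small style latent is sampled here, not a duration-length spectrogram, so the iterative loop stays cheap.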

Speech Language Model Discriminators

Speech Language Models are known for encoding valuable information on a wide range of semantic and acoustic aspects, and SLM representations have been shown to mimic human perception when evaluating the quality of synthesized speech. StyleTTS2 uses adversarial training to leverage SLM encoders for generative tasks, employing a 12-layer WavLM model as the discriminator. This approach also enables training on OOD or Out Of Distribution texts, which can help improve performance. To prevent overfitting, the framework samples OOD texts and in-distribution texts with equal probability.
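A minimal sketch of the equal-probability text sampling, assuming the choice is made independently for each adversarial training step (that granularity is our assumption). `sample_slm_text` and the text pools are hypothetical.

```python
import random

def sample_slm_text(in_dist_texts, ood_texts, rng, p_ood=0.5):
    """Choose the text for one SLM adversarial training step.

    With probability p_ood an out-of-distribution text is drawn, otherwise an
    in-distribution one; p_ood=0.5 mirrors the equal-probability sampling above.
    """
    if rng.random() < p_ood:
        return "ood", rng.choice(ood_texts)
    return "in_dist", rng.choice(in_dist_texts)

# Usage with hypothetical text pools.
in_dist = ["The birch canoe slid on the smooth planks.", "Glue the sheet to the dark blue background."]
ood = ["Please set a timer for ten minutes.", "The quarterly report is due on Friday."]
rng = random.Random(0)
draws = [sample_slm_text(in_dist, ood, rng) for _ in range(10_000)]
ood_fraction = sum(kind == "ood" for kind, _ in draws) / len(draws)
```

Since OOD texts have no paired ground-truth audio, only the adversarial signal from the SLM discriminator can supervise them; mixing them evenly with in-distribution texts keeps the generator from fitting either pool alone.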

Differentiable Duration Modeling

Traditionally, text to speech frameworks use a duration predictor that produces phoneme durations, but the upsampling methods that turn these durations into frame-level alignments often block the gradient flow during E2E (end to end) training. The NaturalSpeech framework addresses this with an attention-based upsampler for human-level text to speech. However, StyleTTS2 finds this approach unstable during adversarial training: StyleTTS2 trains its speech decoder through differentiable upsampling using only the adversarial objective, because deviations of the predicted durations from the ground truth cause a length mismatch that rules out extra loss terms. A soft dynamic time warping approach can mitigate this mismatch, but it is computationally expensive, and its stability is a concern with adversarial objectives or mel-reconstruction losses. Therefore, to achieve human-level performance with adversarial training while keeping training stable, StyleTTS2 uses a non-parametric upsampling approach. Gaussian upsampling is a popular non-parametric method for converting predicted durations into alignments, but its Gaussian kernels have a fixed, predetermined width, which limits its ability to accurately model alignments of different lengths.
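For comparison, here is a minimal NumPy sketch of the Gaussian upsampling baseline described above (not StyleTTS2's own upsampler). Each phoneme gets a Gaussian centered at the middle of its predicted span, every phoneme shares one fixed width `sigma`, and each frame's weights are normalized across phonemes, so the output is differentiable with respect to the durations. The frame-midpoint convention and the value of `sigma` are assumptions.

```python
import numpy as np

def gaussian_upsample(h, durations, sigma=1.0):
    """Upsample phoneme features h (n_phonemes, dim) to frames with fixed-width Gaussians."""
    durations = np.asarray(durations, dtype=float)
    ends = np.cumsum(durations)
    centers = ends - durations / 2.0                  # center of each phoneme's span
    n_frames = int(round(ends[-1]))
    frames = np.arange(n_frames) + 0.5                # frame midpoints
    logits = -((frames[:, None] - centers[None, :]) ** 2) / (2.0 * sigma ** 2)
    logits -= logits.max(axis=1, keepdims=True)       # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)     # normalize over phonemes per frame
    return weights @ h, weights

h = np.eye(3)  # one-hot phoneme features make the weights easy to read
_, w_equal = gaussian_upsample(h, [3, 3, 3], sigma=0.5)
print(w_equal.argmax(axis=1))  # [0 0 0 1 1 1 2 2 2]
_, w_unequal = gaussian_upsample(h, [2, 3, 1], sigma=0.5)
print(np.round(w_unequal[4], 3))
```

With equal durations the soft alignment recovers the hard one, but with durations `[2, 3, 1]` the last frame of the three-frame phoneme sits exactly halfway between two kernel centers and is split evenly between phonemes 1 and 2. That misplacement is the fixed-width limitation described above.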

To address this limitation, StyleTTS2 proposes a new non-parametric upsampling approach that requires no additional training and can account for alignments of varying lengths. For each phoneme, StyleTTS2 models the alignment as a random variable that indicates the index of the speech frame the phoneme is aligned with.

Model Training and Evaluation

StyleTTS2 is trained and evaluated on three datasets: VCTK, LibriTTS, and LJSpeech. The single-speaker model is trained on LJSpeech, which contains roughly 13,100 audio clips split into 12,500 training samples, 100 validation samples, and about 500 test samples, totaling nearly 24 hours of audio. The multi-speaker model is trained on VCTK, which consists of over 44,000 audio clips from over 100 native speakers with varying accents, split into 43,500 training samples, 100 validation samples, and about 500 test samples. Finally, to equip the framework with zero-shot adaptation capabilities, it is trained on the combined LibriTTS training subsets, which total about 245 hours of audio from over 1,150 speakers. Performance is evaluated with two metrics: MOS-N or Mean Opinion Score of Naturalness, and MOS-S or Mean Opinion Score of Similarity.
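MOS-N and MOS-S are both averages of listener ratings on a 1 to 5 scale. A common way to report them is the mean together with a 95% confidence interval; the sketch below uses a normal approximation, which is our assumption rather than a detail stated here, and the ratings are made up.

```python
import math

def mos_with_ci(ratings, z=1.96):
    """Return (mean opinion score, half-width of a normal-approximation 95% CI)."""
    n = len(ratings)
    mean = sum(ratings) / n
    variance = sum((r - mean) ** 2 for r in ratings) / (n - 1)  # unbiased sample variance
    return mean, z * math.sqrt(variance / n)

# Usage with hypothetical naturalness ratings for one system.
mos_n, half_width = mos_with_ci([4, 5, 3, 4, 4])
print(f"MOS-N = {mos_n:.2f} ± {half_width:.2f}")  # MOS-N = 4.00 ± 0.62
```

The same function applies to similarity ratings for MOS-S; the interval width shrinks with more listeners, which matters when comparing systems whose means are close.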

Results

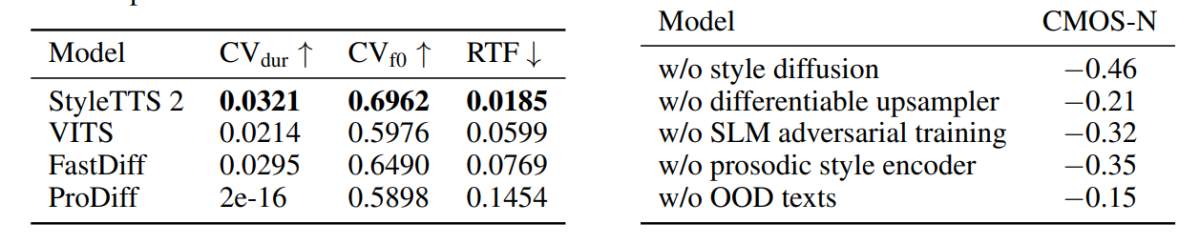

The approach and methodology of StyleTTS2 are reflected in its performance: the model outperforms several state of the art TTS frameworks, especially on the NaturalSpeech dataset, setting a new standard in the process. StyleTTS2 also outperforms the state of the art VITS framework on the VCTK dataset, as shown in the figure above.

StyleTTS2 also outperforms previous models on the LJSpeech dataset, and unlike prior frameworks, it shows no quality degradation on OOD or Out of Distribution texts on the same metrics. In the zero-shot setting, StyleTTS2 outperforms the existing Vall-E framework in naturalness, although it falls behind in similarity. Notably, StyleTTS2 achieves competitive performance despite training on only 245 hours of audio, compared to over 60k hours for Vall-E, which suggests that StyleTTS2 is a data-efficient alternative to large pre-training methods such as the one used in Vall-E.

Moving along, because emotion-labeled audio and text data is scarce, the authors use the GPT-4 model to generate over 500 text instances across different emotions, which are used to visualize the style vectors that StyleTTS2 creates through its diffusion process.

In the first figure, style vectors from the LJSpeech model illustrate emotional styles that respond to the sentiment of the input text, demonstrating the ability of StyleTTS2 to synthesize expressive speech with varied emotions. The second figure shows distinct clusters forming for each of five individual speakers, reflecting a wide range of diversity sampled from a single reference audio file. The final figure shows the looser clusters of emotions for speaker 1 and reveals that, despite some overlap, emotion-based clusters are prominent, indicating that the emotional tone of a speaker can be manipulated regardless of the reference audio sample and its tone. Despite using a diffusion-based approach, StyleTTS2 outperforms existing state of the art frameworks including VITS, ProDiff, and FastDiff in inference speed.

Final Thoughts

In this article, we have talked about StyleTTS2, a novel, robust, and innovative text to speech framework that builds on the foundations of StyleTTS and takes the next step towards state of the art text to speech systems. StyleTTS2 models speech styles as latent random variables and samples them with a probabilistic diffusion model, allowing it to synthesize realistic speech without reference audio inputs. By combining style diffusion with SLM discriminators, StyleTTS2 achieves human-level performance on text to speech tasks and outperforms existing state of the art frameworks on a wide array of speech tasks.