
Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets

Generative AI has been a driving force in the AI community for some time, and advances in generative image modeling, especially with diffusion models, have helped generative video models progress significantly, both in research and in real-world applications. Conventionally, generative video models are either trained from scratch or partially or fully fine-tuned from pretrained image models with added temporal layers, on a mixture of image and video datasets.

Building on these advances, in this article we will talk about the Stable Video Diffusion Model, a latent video diffusion model for high-resolution, state-of-the-art image-to-video and text-to-video generation. We will look at how latent diffusion models trained for 2D image synthesis have been turned into generative video models by adding temporal layers and fine-tuning them on small datasets of high-quality videos. We will then take a deeper dive into the architecture and working of the Stable Video Diffusion Model, evaluate its performance on various metrics, and compare it with current state-of-the-art frameworks for video generation. So let’s get started.

Stable Video Diffusion Model and Generative Video Models: An Introduction

Thanks to its almost unlimited potential, Generative AI has been a primary subject of research for AI and ML practitioners for a while now, and the past few years have seen rapid advances in both the efficiency and the performance of generative image models. The learnings from generative image models have allowed researchers and developers to make progress on generative video models, making them more practical for real-world applications. However, most research on improving generative video models focuses on the exact arrangement of temporal and spatial layers, and pays little attention to how the choice of training data influences the outcome of these models.

Work on generative image models has shown that the training data distribution has a significant impact on the performance of generative models. Researchers have also observed that pretraining a generative image model on a large and diverse dataset, followed by fine-tuning on a smaller, higher-quality dataset, often improves performance significantly. Generative video models have traditionally reused the learnings from successful generative image models, but the effect of data and training strategies on video models is yet to be studied in depth. The Stable Video Diffusion Model is an attempt to fill this gap, with a special focus on data selection.

Recent generative video models rely on diffusion models with text or image conditioning to synthesize multiple consistent video or image frames. Diffusion models learn to gradually denoise a sample drawn from a normal distribution through an iterative refinement process, and they have delivered strong results on high-resolution text-to-image and video synthesis. The Stable Video Diffusion Model uses the same principle at its core and trains a latent video diffusion model on its video dataset. Other approaches to video generation include Generative Adversarial Networks (GANs) and, to some extent, autoregressive models.
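To make the idea of iterative refinement concrete, here is a minimal toy sketch in Python. It is not the Stable Video Diffusion sampler: the `toy_denoiser` function is a hypothetical stand-in that simply returns a known target, whereas a real diffusion model would use a trained network to predict the clean sample. The sketch only illustrates the loop of starting from Gaussian noise and moving a little closer to the prediction at every step.

```python
import random


def toy_denoiser(x, target):
    """Hypothetical stand-in for a learned network.

    A real model predicts the clean sample from the noisy input x;
    here we cheat and return the known target.
    """
    return list(target)


def sample(target, steps=50, seed=0):
    rng = random.Random(seed)
    # Start from pure Gaussian noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for i in range(steps):
        # Close a growing fraction of the remaining gap; the last step closes it fully.
        alpha = 1.0 / (steps - i)
        x0_hat = toy_denoiser(x, target)
        x = [xi + alpha * (x0 - xi) for xi, x0 in zip(x, x0_hat)]
    return x


if __name__ == "__main__":
    print(sample([1.0, -2.0, 0.5]))
```

In a real sampler, the step size and the amount of noise at each step come from the model's noise schedule rather than from the simple `1 / (steps - i)` rule assumed here.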

The Stable Video Diffusion Model differs from earlier generative video models in its strategy: it relies on latent video diffusion baselines with a fixed architecture and a fixed training strategy, and then assesses the effect of curating the data. It aims to make the following contributions to the field of generative video modeling.

- To present a systematic and effective data curation workflow that turns a large collection of uncurated video samples into a high-quality dataset for training generative video models.

- To train state-of-the-art image-to-video and text-to-video models that outperform existing frameworks.

- To conduct domain-specific experiments that probe the model's 3D understanding and its strong motion prior.

At its core, the Stable Video Diffusion Model builds on two foundations: Latent Video Diffusion Models and data curation techniques.

Latent Video Diffusion Models

Latent Video Diffusion Models, or Video-LDMs, train the primary generative model in a latent space with reduced computational complexity, and most Video-LDMs start from a pretrained text-to-image model and add temporal mixing layers to the pretrained architecture. Most Video-LDMs either train only the temporal layers or skip training altogether, whereas the Stable Video Diffusion Model fine-tunes the entire framework. Furthermore, for text-to-video synthesis, the Stable Video Diffusion Model is conditioned directly on a text prompt, and the results indicate that the resulting framework can easily be fine-tuned into a multi-view synthesis or image-to-video model.
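The difference between a spatial layer and a temporal mixing layer can be sketched in a few lines of Python. This is an illustrative toy, not the actual Video-LDM architecture: each frame is assumed to be a flat list of latent values, `spatial_layer` is a hypothetical stand-in for a per-frame 2D block, and `temporal_layer` is a hypothetical stand-in for a learned layer that mixes information across neighbouring frames.

```python
def spatial_layer(frame):
    """Per-frame operation (stand-in for a 2D conv or attention block).

    It only sees values within one frame; here it removes the frame mean.
    """
    mean = sum(frame) / len(frame)
    return [v - mean for v in frame]


def temporal_layer(frames, mix=0.5):
    """Cross-frame operation (stand-in for a learned temporal mixing layer).

    Each latent position is blended with the same position in the
    previous and next frames; edge frames reuse themselves as neighbour.
    """
    num_frames = len(frames)
    out = []
    for t in range(num_frames):
        prev_f = frames[max(t - 1, 0)]
        next_f = frames[min(t + 1, num_frames - 1)]
        out.append([
            (1 - mix) * c + mix * 0.5 * (p + n)
            for c, p, n in zip(frames[t], prev_f, next_f)
        ])
    return out


def video_block(frames):
    # Spatial layers treat frames independently; the temporal layer ties them together.
    frames = [spatial_layer(f) for f in frames]
    return temporal_layer(frames)


if __name__ == "__main__":
    print(temporal_layer([[0.0], [4.0]]))
```

A model that trains only the temporal layers would keep `spatial_layer` frozen, while fine-tuning the entire framework updates both kinds of layers.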

Data Curation

Data curation is an essential component not only of the Stable Video Diffusion Model but of generative models as a whole, because pretraining large models on large-scale datasets boosts performance across different tasks, including language modeling and discriminative text-image modeling. Data curation has been applied successfully to generative image models by leveraging efficient language-image representations, although it has received little attention in the development of generative video models. Developers face several hurdles when curating data for generative video models, and to address them, the Stable Video Diffusion Model implements a three-stage training strategy that yields a significant boost in performance.

Data Curation for High Quality Video Synthesis

As discussed in the previous section, the Stable Video Diffusion Model implements a three-stage training strategy. Stage I is an image pretraining stage that makes use of a 2D text-to-image diffusion model. Stage II is video pretraining, in which the framework trains on a large amount of video data. Finally, Stage III is video fine-tuning, in which the model is refined on a small subset of high-quality, high-resolution videos.

However, before the Stable Video Diffusion Model runs these three stages, the data must be processed and annotated, since it forms the basis of Stage II, the video pretraining stage, and plays a critical role in the quality of the output. The framework first applies a cascaded cut detection pipeline at three different FPS (frames per second) levels, and the need for this pipeline is demonstrated in the following image.
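As a rough illustration of why cut detection is run at more than one frame rate, consider the Python sketch below. It is an assumption-laden toy, not the pipeline used by the framework: each frame is reduced to a single hypothetical feature value (for example, average brightness), and a cut is flagged when that value changes by more than a threshold between frames that are `stride` apart. A larger stride corresponds to sampling the video at a lower FPS.

```python
def detect_cuts(features, stride, threshold):
    """Flag frame indices where the feature jumps by more than `threshold`
    between frames that are `stride` apart (larger stride = lower FPS)."""
    cuts = []
    for i in range(0, len(features) - stride, stride):
        if abs(features[i + stride] - features[i]) > threshold:
            cuts.append(i + stride)
    return cuts


def cascaded_cuts(features, strides=(1, 2, 4), threshold=0.5):
    """Run detection at several strides and merge the detected cut positions."""
    found = set()
    for stride in strides:
        found.update(detect_cuts(features, stride, threshold))
    return sorted(found)


def split_clips(num_frames, cuts):
    """Turn cut positions into (start, end) frame ranges, end exclusive."""
    bounds = [0] + list(cuts) + [num_frames]
    return [(a, b) for a, b in zip(bounds, bounds[1:]) if b > a]


if __name__ == "__main__":
    hard_cut = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
    slow_fade = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 1.0, 1.0, 1.0]
    print(cascaded_cuts(hard_cut), cascaded_cuts(slow_fade))
```

In this toy, the slow fade never changes by more than the threshold between adjacent frames, so a single pass at full frame rate misses it, while the pass at the largest stride catches it. That is the intuition behind cascading several FPS levels.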

Next, the Stable Video Diffusion Model annotates each video clip using three different synthetic captioning methods. The following table compares the datasets used in the Stable Video Diffusion framework before and after the filtration process.
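The filtration step can be pictured as a simple threshold filter over per-clip annotations. The Python sketch below is purely illustrative: the `Clip` fields (`motion_score`, `quality_score`) and the threshold values are hypothetical placeholders chosen for the example, not the signals or cut-offs used by the framework.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    clip_id: str
    caption: str          # synthetic caption attached during annotation
    motion_score: float   # hypothetical: higher means more motion
    quality_score: float  # hypothetical: higher means better visual quality


def curate(clips, min_motion=0.2, min_quality=0.5):
    """Keep clips that have a caption and pass both (hypothetical) thresholds."""
    return [
        c for c in clips
        if c.caption.strip()
        and c.motion_score >= min_motion
        and c.quality_score >= min_quality
    ]


if __name__ == "__main__":
    clips = [
        Clip("a", "a dog running on a beach", 0.8, 0.9),
        Clip("b", "a static title card", 0.0, 0.7),
        Clip("c", "", 0.6, 0.8),
    ]
    print([c.clip_id for c in curate(clips)])
```

Raising the thresholds trades dataset size for quality, which is the kind of trade-off a before-and-after comparison of the dataset makes visible.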

Stage I: Image Pre-Training

The first stage of the three-stage pipeline is image pretraining. To achieve this, the initial Stable Video Diffusion framework is built on a pretrained image diffusion model, namely Stable Diffusion 2.1, which equips it with stronger visual representations.

Stage II: Video Pre-Training

The second stage is video pretraining, and it builds on the finding that data curation in multimodal image modeling often leads to better results and improved efficiency, in both discriminative and generative image models. However, because no similarly powerful off-the-shelf representations exist for filtering out unwanted samples for generative video models, the Stable Video Diffusion Model relies on human preferences as input signals to create an appropriate dataset for pretraining the framework. The following figure demonstrates the positive effect of pretraining the framework on a curated dataset, which helps boost the overall performance of video pretraining on smaller datasets.

To be more specific, the framework uses different methods to curate subsets of the Large Video Dataset (LVD), and considers the human-preference ranking of the latent video diffusion models trained on these subsets. The Stable Video Diffusion framework finds that training on curated datasets boosts the performance of the framework, and of diffusion models in general. Furthermore, the data curation strategy also works on larger, more relevant, and highly practical datasets. The following figure demonstrates the positive effect of pre-training the framework on a curated dataset that helps in boosting the overall performance for video pre-training on smaller datasets.
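One simple way to turn human preferences into a ranking of models is to count pairwise wins. The Python sketch below assumes that each vote is a `(winner, loser)` pair naming the model whose sample a rater preferred; the model names and the win-rate rule are illustrative assumptions, not the exact ranking procedure used by the framework.

```python
from collections import defaultdict


def rank_by_preference(votes):
    """Rank models by the fraction of pairwise comparisons they won.

    `votes` is an iterable of (winner, loser) pairs; ties in win rate
    are broken alphabetically so the result is deterministic.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for winner, loser in votes:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    rates = {model: wins[model] / games[model] for model in games}
    return sorted(rates, key=lambda model: (-rates[model], model))


if __name__ == "__main__":
    votes = [
        ("curated", "uncurated"),
        ("curated", "uncurated"),
        ("uncurated", "curated"),
    ]
    print(rank_by_preference(votes))
```

With these example votes, the model trained on the curated subset wins two of three comparisons and is ranked first.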

Stage III: High-Quality Fine-tuning

Up to Stage II, the Stable Video Diffusion framework focuses on improving performance through video pretraining. In the third stage, the emphasis shifts to how that pretraining affects the final performance after high-quality video fine-tuning, and to how the transition from Stage II to Stage III is made. In Stage III, the framework draws on training techniques borrowed from latent image diffusion models and increases the resolution of the training examples. To analyze the effectiveness of this approach, the framework compares three identical models that differ only in their initialization. The first model is initialized from the pretrained image model and skips video pretraining, whereas the remaining two are initialized with weights borrowed from other latent video models.

Results and Findings

It’s time to have a look at how the Stable Video Diffusion framework performs on real-world tasks, and how it compares against current state-of-the-art frameworks. The Stable Video Diffusion framework first uses the optimal data approach to train a base model, and then fine-tunes it into several state-of-the-art models, each of which performs a specific task.

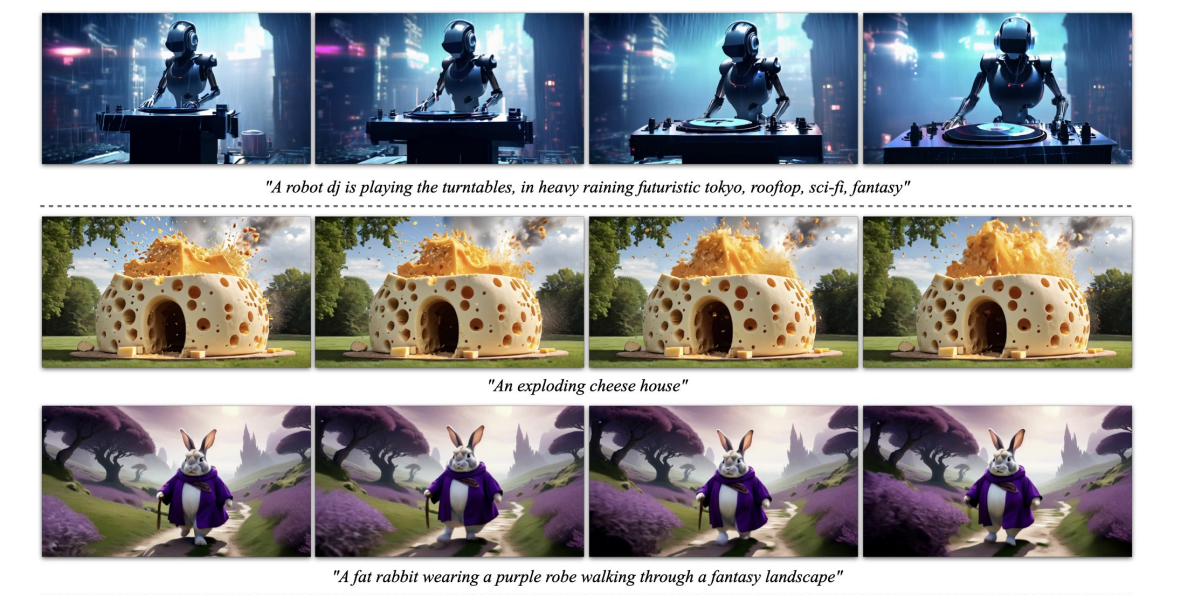

The above picture shows high-resolution image-to-video samples generated by the framework, whereas the following figure demonstrates its ability to generate high-quality text-to-video samples.

Pre-Trained Base Model

As discussed earlier, the Stable Video Diffusion model is built on the Stable Diffusion 2.1 framework. Recent findings show that, when training image diffusion models, it is crucial to adapt the noise schedule and shift it towards more noise for higher-resolution images. Thanks to this approach, the Stable Video Diffusion base model learns powerful motion representations and, in the process, outperforms baseline models for text-to-video generation in a zero-shot setting. The results are displayed in the following table.
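Shifting a noise schedule towards more noise can be illustrated with a small sampling experiment. The Python sketch below assumes that training noise levels are drawn from a log-normal distribution, which is one common parameterization and not necessarily the one used here; the `log_mean` and `log_std` values are hypothetical and chosen only to show the effect of the shift.

```python
import math
import random


def sample_noise_levels(n, log_mean, log_std, seed=0):
    """Draw n noise levels whose logarithm is normally distributed.

    Raising `log_mean` shifts the whole distribution towards more noise.
    """
    rng = random.Random(seed)
    return [math.exp(rng.gauss(log_mean, log_std)) for _ in range(n)]


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    base = sample_noise_levels(1000, log_mean=0.0, log_std=1.0)
    shifted = sample_noise_levels(1000, log_mean=1.0, log_std=1.0)
    # The shifted schedule exposes the model to higher noise levels on average.
    print(mean(base) < mean(shifted))
```

The intuition is that higher-resolution images keep more of their structure at a given noise level, so the training distribution has to include more heavily noised samples.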

Frame Interpolation and Multi-View Generation

The Stable Video Diffusion framework fine-tunes the image-to-video model on multi-view datasets to obtain multiple novel views of an object, and this model is known as SVD-MV, or the Stable Video Diffusion Multi-View model. The original SVD model is fine-tuned on two datasets so that the framework takes a single image as input and returns a sequence of multi-view images as output.

As can be seen in the following images, the Stable Video Diffusion Multi-View framework delivers performance comparable to the state-of-the-art Scratch Multi-View framework, and the results clearly demonstrate SVD-MV’s ability to use the learnings from the original SVD framework for multi-view image generation. Furthermore, the results indicate that a relatively small number of training iterations is enough to deliver optimal results, as is the case with most models fine-tuned from the SVD framework.

In the above figure, the metrics are indicated on the left-hand side, and as can be seen, the Stable Video Diffusion Multi-View framework outperforms the Scratch-MV and SD2.1 Multi-View frameworks by a decent margin. The second image shows the effect of the number of training iterations on the overall performance of the framework in terms of CLIP score, and the SVD-MV framework delivers consistent results.
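At its core, a CLIP-style score is a cosine similarity between embeddings. The Python sketch below assumes the embeddings have already been computed by some encoder and are passed in as plain lists of floats; the function names and the averaging over frames are illustrative assumptions, not the exact evaluation protocol behind the reported numbers.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def clip_style_score(frame_embeddings, reference_embedding):
    """Average similarity between each generated view and a reference embedding."""
    sims = [cosine_similarity(f, reference_embedding) for f in frame_embeddings]
    return sum(sims) / len(sims)


if __name__ == "__main__":
    views = [[1.0, 0.0], [0.0, 1.0]]
    reference = [1.0, 0.0]
    print(clip_style_score(views, reference))
```

A higher score means the generated views lie closer to the reference in the embedding space, which is why the metric can be tracked across training iterations.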

Final Thoughts

In this article, we have talked about Stable Video Diffusion, a latent video diffusion model for high-resolution, state-of-the-art image-to-video and text-to-video generation. The Stable Video Diffusion Model differs from earlier generative video models in its strategy: it relies on latent video diffusion baselines with a fixed architecture and a fixed training strategy, and then assesses the effect of curating the data.

We have talked about how latent diffusion models trained for 2D image synthesis have been turned into generative video models by adding temporal layers and fine-tuning them on small datasets of high-quality videos. To gather the pretraining data, the framework conducts a scaling study and follows systematic data collection practices, and ultimately proposes a method that curates a large amount of video data and converts noisy videos into input data suitable for generative video models.

Furthermore, the Stable Video Diffusion framework employs three distinct video model training stages that are analyzed independently to assess their impact on the framework’s performance. The framework ultimately produces a video representation powerful enough to be fine-tuned for optimal video synthesis, and the results are comparable to state-of-the-art video generation models already in use.