New Paper Argues Robots Need to Understand Human Motive

A new article by the National Centre for Nuclear Robotics, based at the University of Birmingham, argues that robots need to understand motive, as humans do. If humans and robots are to work together both effectively and safely, robots can’t just perform tasks without knowing why they are doing them.

The lead author of the piece is Dr. Valerio Ortenzi from the University of Birmingham. He says that this understanding is needed as the economy becomes increasingly automated, connected, and digitized. It also matters because interactions between humans and robots are set to increase dramatically in both factories and homes.

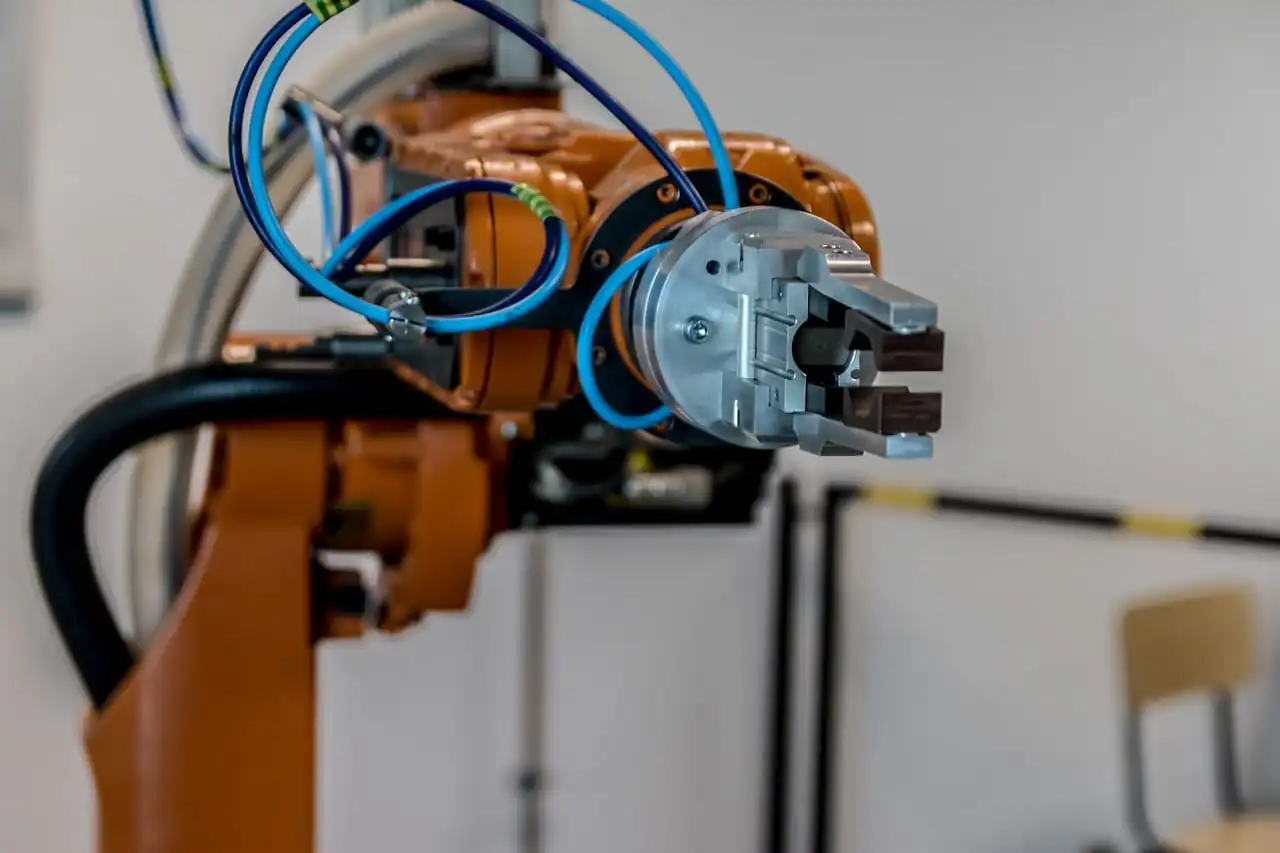

The paper was published in Nature Machine Intelligence. It focuses partly on how robots use objects, and on grasping, an action that comes easily in nature but is challenging for robots.

Current factory robots blindly pick up objects that they are already familiar with, and those objects sit in predetermined places at set times. For a machine to pick up an unfamiliar object from a random place, it would need multiple complex technologies working together. These include vision systems and advanced AI, which help the machine see the target and determine its properties. In some cases, sensors in the gripper are also needed to prevent the robot from crushing the object.

Researchers from the National Centre for Nuclear Robotics say that even with all of these technologies, the machine still doesn’t know why it is picking an object up. Because of this, what we once thought of as successful actions performed by robots can actually be real-world failures.

The paper in Nature Machine Intelligence uses the example of a robot picking up an object and delivering it to a customer. The robot picks up the object successfully without crushing it, but its grip covers an important barcode. As a result, the object cannot be tracked and there is no information confirming that it was delivered. The delivery system fails because the robot does not know the consequences of picking up the object incorrectly.
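
The barcode example can be made concrete with a small sketch. The code below is an illustration only and is not taken from the paper: the `Grasp` class, the `stability` score, and the `covers_barcode` flag are all hypothetical stand-ins. It contrasts a selection rule that looks only at how secure the grip is with one that first rules out grasps that defeat the purpose of the task.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Grasp:
    """A candidate grasp (hypothetical representation, for illustration)."""
    name: str
    stability: float       # assumed physical grasp-quality score in [0, 1]
    covers_barcode: bool   # True if the gripper would hide the barcode


def pick_by_stability(grasps: List[Grasp]) -> Grasp:
    """Task-blind choice: the most stable grasp wins, whatever the goal."""
    return max(grasps, key=lambda g: g.stability)


def pick_for_delivery(grasps: List[Grasp]) -> Optional[Grasp]:
    """Task-aware choice: drop grasps that hide the barcode, then
    pick the most stable of what remains."""
    usable = [g for g in grasps if not g.covers_barcode]
    if not usable:
        return None  # no candidate serves the delivery task
    return max(usable, key=lambda g: g.stability)


# Made-up candidates for a single parcel.
candidates = [
    Grasp("across_label", stability=0.95, covers_barcode=True),
    Grasp("side_edges", stability=0.80, covers_barcode=False),
    Grasp("corner_pinch", stability=0.40, covers_barcode=False),
]

print(pick_by_stability(candidates).name)  # across_label
print(pick_for_delivery(candidates).name)  # side_edges
```

In this toy setup the most secure grip is the one that hides the barcode, so the task-blind rule picks a grasp that succeeds physically but fails the delivery.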

Dr. Ortenzi and the co-authors of the paper spoke about other examples as well.

“Imagine asking a robot to pass you a screwdriver in a workshop. Based on current conventions, the best way for a robot to pick up the tool is by the handle. Unfortunately, that could mean that a hugely powerful machine then thrusts a potentially lethal blade towards you, at speed. Instead, the robot needs to know what the end goal is, i.e., to pass the screwdriver safely to its human colleague, in order to rethink its actions.”

“Another scenario envisages a robot passing a glass of water to a resident in a care home. It must ensure that it doesn’t drop the glass but also that water doesn’t spill over the recipient during the act of passing, or that the glass is presented in such a way that the person can take hold of it.”

“What is obvious to humans has to be programmed into a machine and this requires a profoundly different approach. The traditional metrics used by researchers, over the past twenty years, to assess robotic manipulation, are not sufficient. In the most practical sense, robots need a new philosophy to get a grip.”

Professor Rustam Stolkin, director of the National Centre for Nuclear Robotics, spoke about the organization’s role in developing this technology.

“National Centre for Nuclear Robotics is unique in working on practical problems with industry, while simultaneously generating the highest calibre of cutting-edge academic research — exemplified by this landmark paper.”

The research was carried out in collaboration with the Centre of Excellence for Robotic Vision at Queensland University of Technology, Australia; Scuola Superiore Sant’Anna, Italy; the German Aerospace Center (DLR), Germany; and the University of Pisa, Italy.