NeRF Moves Another Step Closer to Replacing CGI

Researchers at MIT and Google have taken a significant step toward overcoming one of the most fundamental obstacles facing an emerging AI-driven technology that may eventually replace CGI: separating neural radiance field (NeRF) imagery into its constituent visual components, so that the imagery can be re-textured and re-lit.

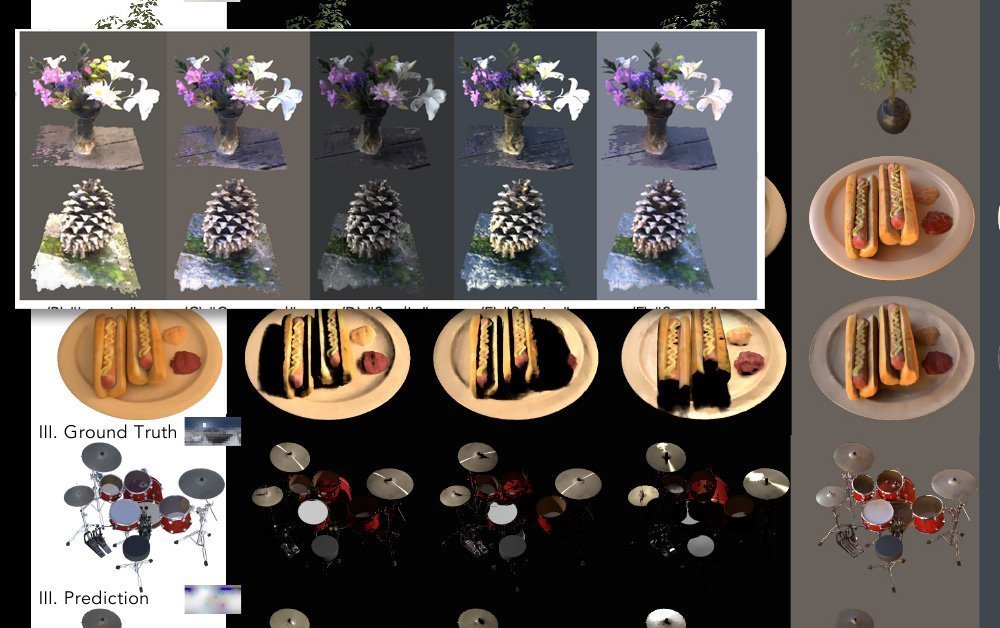

The new approach, called NeRFactor, effectively splits captured imagery into per-object normals (onto which textures can be assigned), light visibility, albedo (the proportion of incident light that’s reflected away from a surface) and Bidirectional Reflectance Distribution Functions (BRDFs).

With these facets isolated, it’s possible not only to switch textures for individual objects or object groups, but also to add novel and unique lighting sources and shadow implementations, discounting any that were captured by the multi-camera arrays that generate input for NeRF imagery.
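To illustrate why this separation matters, here is a minimal sketch of re-rendering a single surface point from its isolated factors. It assumes a purely diffuse (Lambertian) BRDF, i.e. albedo divided by π, and a light probe reduced to a handful of directional samples. The function name `shade_point` and all sample values are hypothetical and are not taken from the NeRFactor code.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))

def shade_point(albedo, normal, lights):
    """Outgoing RGB radiance at one surface point (illustrative only).

    lights: list of (direction, rgb_radiance, solid_angle, visibility),
    where direction is a unit vector from the surface towards the light
    and visibility is in [0, 1] (0 means fully shadowed).
    Assumption: Lambertian BRDF, albedo / pi.
    """
    out = [0.0, 0.0, 0.0]
    for direction, radiance, solid_angle, visibility in lights:
        cosine = max(0.0, dot(normal, direction))  # light behind the surface adds nothing
        for c in range(3):
            brdf = albedo[c] / math.pi
            out[c] += brdf * radiance[c] * visibility * cosine * solid_angle
    return out

# Hypothetical values: an orange surface facing straight up, lit from overhead.
albedo = (0.8, 0.4, 0.2)
normal = (0.0, 0.0, 1.0)
overhead = ((0.0, 0.0, 1.0), (math.pi, math.pi, math.pi), 1.0, 1.0)

lit = shade_point(albedo, normal, [overhead])
# Same light, but visibility set to 0: the point falls into shadow.
shadowed = shade_point(albedo, normal, [overhead[:3] + (0.0,)])
```

Because albedo, normal and visibility enter the sum as separate terms, any one of them can be swapped (a new texture, a new light, a new shadow) without recapturing the scene.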

Normals, visibility, albedo and BRDF separated under NeRFactor. Source: https://www.youtube.com/watch?v=UUVSPJlwhPg

The model supports soft or hard shadows from arbitrary, user-defined lighting sources, and separates the four aspects of captured video programmatically, using a reconstruction loss, a data-driven BRDF prior, and simple smoothness regularization.
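As a rough illustration of how a reconstruction loss and a smoothness regularizer can be combined into one training objective, consider the sketch below. It is a simplified stand-in, not NeRFactor’s actual objective: the BRDF prior is omitted, the smoothness term is a plain neighbour-difference penalty on a one-dimensional row of albedo samples, and the names and the weight `smooth_weight` are hypothetical.

```python
def reconstruction_loss(rendered, observed):
    """Mean squared error between re-rendered and captured pixel values."""
    return sum((r - o) ** 2 for r, o in zip(rendered, observed)) / len(rendered)

def smoothness_penalty(values):
    """Mean absolute difference between neighbouring samples."""
    if len(values) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    return sum(diffs) / len(diffs)

def total_loss(rendered, observed, albedo_samples, smooth_weight=0.1):
    """Reconstruction error plus a weighted smoothness term (assumed form)."""
    return (reconstruction_loss(rendered, observed)
            + smooth_weight * smoothness_penalty(albedo_samples))

# Hypothetical numbers for a two-pixel render and three albedo samples.
rendered = [0.5, 0.5]
observed = [0.5, 0.7]
albedo_row = [0.2, 0.2, 0.6]
loss = total_loss(rendered, observed, albedo_row, smooth_weight=0.1)
```

The reconstruction term pulls the factors towards whatever reproduces the captured pixels, while the smoothness term discourages neighbouring surface points from taking wildly different values.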

NeRFactor’s work-flow, extracting separately actionable facets of imagery derived from multiple-camera arrays. Source: https://arxiv.org/pdf/2106.01970.pdf

NeRFactor uses an HDR light probe, a well-established approach that has been widely used in the visual effects industry and the arts since its introduction in 1998, to evaluate possible routes for rays, which enables arbitrary lighting. Since this generates an unmanageable number of possible parameters, the light probe is filtered through a multi-layer perceptron (MLP), which maps the perceived geometry to the probe without attempting to calculate a complete lighting volume map for the model space.

Two neural radiance field models are used to demonstrate five lighting models possible under NeRFactor.

Cause For Reflection

The new research is perhaps most significant in separating the layers of captured imagery that control reflection. This remains one of the biggest challenges for neural radiance field imagery, since a truly novel and flexible NeRF system will need not only to be able to substitute textures, but crucially will need some way to reflect moving objects (besides just the general fixed environment) that would normally be accounted for in a CGI workflow.

This issue was noted recently in regard to Intel’s impressive new research into transforming videogame footage into photorealistic video via convolutional neural networks. In such workflows, many ‘baked’ aspects of the source material would need to become discrete and swappable, and this is arguably easier to solve for relighting (which is a function of the geometry being rendered in NeRF) than for reflections (which utilize ‘off-screen’ geometry which is entirely outside the scope of the model).

Therefore, isolating the layers in NeRF video which facilitate reflection brings NeRF one step closer to solving its ‘reflection challenge’.

The use of an HDR environment already solves the issue of generating world environment reflections (i.e. skies, landscapes, and other ‘fixed’ ambient factors), but new approaches will be needed to introduce moving and dynamic reflections.

Photogrammetry With NeRF

Neural Radiance Field imagery uses machine learning analysis to develop a completely volumetric space from a scene or object which has been captured from a number of angles.

Various NeRF-based schemes that have emerged in the last year have used widely varying numbers of contributing camera devices; some use 16 or more cameras, others as few as one or two. In all cases, the intermediate viewpoints are ‘infilled’ (i.e. interpreted) so that the scene or object can be fluidly navigated.

The resulting entity is a completely volumetric space, with an intrinsic 3D understanding that can be exploited in many ways, including the ability to generate traditional CG meshes from the 3D-parsed sum of the input images.
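For readers curious how a volumetric representation becomes a 2D pixel, the sketch below follows the standard volume rendering quadrature used in NeRF-style methods: each sample along a camera ray contributes its colour, weighted by its own opacity and by how much light survives the samples in front of it. The function name `composite_ray`, the uniform step size and the sample values are assumptions made for illustration.

```python
import math

def composite_ray(densities, colors, step):
    """Composite samples along one ray, front to back.

    densities: volume density (sigma) at each sample
    colors:    RGB colour at each sample
    step:      distance between consecutive samples (assumed uniform)
    Returns (pixel_rgb, remaining_transmittance).
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb in zip(densities, colors):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)

# An effectively opaque red sample in front completely hides the blue one.
occluded, _ = composite_ray([1e9, 1e9], [red, blue], step=0.1)

# Empty space (zero density) contributes nothing and blocks nothing.
empty, leftover = composite_ray([0.0, 0.0], [red, blue], step=0.1)
```

Rendering a new viewpoint amounts to repeating this per-ray accumulation for every pixel of a virtual camera placed anywhere in the captured volume.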

NeRF In The Context Of A ‘New CGI’

Neural radiance field imagery is drawn directly from images of the real world, including moving images of people, objects and scenes. By contrast, a CGI methodology ‘studies’ and interprets the world, requiring skilled workers to build meshes, rigs and textures that make use of real world imagery (i.e. facial and environmental captures). It remains an essentially interpretive and artisanal approach that’s expensive and laborious.

Additionally, CGI has had ongoing problems with the ‘uncanny valley’ effect in its efforts to recreate human likenesses. This presents no constraint to a NeRF-driven approach, which simply captures video or images of real people and manipulates them.

Further, NeRF can generate traditional CGI-style mesh geometry directly from photos as necessary, and in effect supplant many of the manual procedures that have always been necessary in computer-generated imagery.

Challenges For NeRF

This latest research from MIT and Google comes in the context of a veritable flood of NeRF papers over the last year, many of which have offered solutions to the various challenges thrown up by the initial 2020 paper.

In April, innovation from a Chinese research consortium provided a way to discretely isolate the individual timelines of facets in a NeRF scene, including people.

The Chinese research allows end-users to copy, paste and resize captured elements, disentangling them from the linear timeline of the original source video. Source: https://www.youtube.com/watch?v=Wp4HfOwFGP4

This approach not only enables re-imagining the scene from any angle captured by the camera array (and not just the single view represented in a typical video capture), but also enables versatile compositing – and even the ability to represent two facets from the same footage that are running in their own individual time-frames (or even running backwards, as necessary).

One of the biggest challenges for NeRF is lowering the considerable resources needed to train and render a scene, and this has been addressed in a number of recent papers. For instance, the Max Planck Institute for Intelligent Systems recently introduced KiloNeRF, which not only speeds up rendering times by a factor of 1000, but even enables NeRF to operate interactively.

KiloNeRF running an interactive environment at 50fps on a GTX 1080 Ti. Source: https://github.com/creiser/kilonerf

However, the NeRF speed innovation that truly captured the imagination of researchers and public alike in 2021 has been the PlenOctrees collaboration, led by UC Berkeley, which offers real-time rendering of Neural Radiance Fields:

The effect of PlenOctrees’ interactive capabilities has been reproduced in a live, web-based interface.

Live interactive movement of a PlenOctrees object in Firefox (movement is smoother and more dynamic than this GIF represents). Source: http://alexyu.net/plenoctrees/demo/

Additionally, Recursive-NeRF (from a May 2021 paper by researchers at Tsinghua University) offers high-quality recursive rendering on demand. Instead of obligating the user to render entire scenes, including parts which may not be seen, Recursive-NeRF provides something akin to JPEG’s lossy compression, and can generate discrete sub-NeRFs to handle extra imagery on demand – achieving a huge saving in computational resources.

Retaining detail while dumping unnecessary render calculations with Recursive-NeRF. Source: https://arxiv.org/pdf/2105.09103.pdf

Other approaches include FastNeRF, which claims to achieve high-fidelity neural rendering at 200fps.

It’s been noted that many of the optimization techniques for NeRF involve ‘baking’ the scene, by committing to aspects that are desired to be rendered and discarding other facets, which limits exploration but greatly speeds up interactivity.

The downside of this is that the stress moves from the GPU to the storage, because baked scenes take up an inordinate amount of disk space; to an extent, this can be mitigated by downsampling the baked data, though this also involves a certain commitment, in terms of closing off avenues of exploration or interactivity.
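A back-of-the-envelope calculation shows why downsampling helps so much. The sketch below assumes the baked scene is stored as a dense cubic grid with a fixed number of 32-bit values per cell; real systems often use sparse structures that need far less, so treat the function `baked_grid_bytes` and the figures as hypothetical.

```python
def baked_grid_bytes(resolution, channels, bytes_per_value=4):
    """Storage for a dense resolution^3 grid (assumed layout, no compression)."""
    return resolution ** 3 * channels * bytes_per_value

full = baked_grid_bytes(512, channels=4)  # e.g. RGB plus density per cell
half = baked_grid_bytes(256, channels=4)  # same scene at half the resolution

print(full / 2 ** 30, "GiB")  # 2.0 GiB
print(half / 2 ** 20, "MiB")  # 256.0 MiB
print(full // half)           # 8
```

Halving the resolution along each axis cuts storage by a factor of eight, but the discarded detail cannot be recovered later, which is the commitment described above.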

Regarding motion capture and rigging, a new approach from Zhejiang and Cornell universities, revealed in May, offered a method to recreate animatable humans using blend weight fields and skeleton structures interpreted from input video:

Derived skeletal structure in Animatable NeRF. Source: https://www.youtube.com/watch?v=eWOSWbmfJo4

When Will NeRF Have Its ‘Jurassic Park’ Moment?

Despite the rapid pace of progress with image synthesis via neural radiance fields, it is only in this period that any kind of ‘law of thermodynamics’ will be established for how deployable NeRF can become. In terms of a timeline analogous to the history of CGI, NeRF is currently hovering around 1973, just before the first use of CGI in Westworld.

That doesn’t mean NeRF will necessarily need to wait nine years for its equivalent Wrath Of Khan milestone, or decades for the similar breakthroughs that CGI achieved under James Cameron’s enthusiastic patronage in 1989’s The Abyss or 1991’s Terminator 2 – and then, the technology’s truly revolutionary breakout moment in 1993’s Jurassic Park.

The imaging scene has changed a great deal since the long period of stagnation for photo-chemical visual effects, which dominated film and television production from the birth of cinema to the early 1990s. The advent of the PC revolution and the acceleration of Moore’s law led to the CGI revolution, which otherwise could conceivably have taken place as early as the 1960s.

It remains to be seen if there is any barrier so implacable that it could hold up NeRF’s progress for that long – and if subsequent innovations in computer vision might not in the meantime entirely overtake NeRF as the key contender for CGI’s crown, characterizing neural radiance fields as the short-lived ‘fax machine’ of neural image synthesis.

As yet, NeRF has not been used in any context outside of academic research; but it’s notable that major players such as Google Research and many of the most prominent computer vision research labs are competing for the latest NeRF breakthrough.

Many of NeRF’s biggest obstacles have begun to be directly addressed this year; if subsequent research offers a solution to the ‘reflection issue’, and the many strands of NeRF-optimizing research coalesce into a decisive solution to the technology’s considerable processing and/or storage demands, NeRF really does have a shot at becoming ‘the new CGI’ in the next five years.