
EfficientViT: Memory Efficient Vision Transformer for High-Resolution Computer Vision

Thanks to their high model capacity, Vision Transformer models have enjoyed a great deal of success in recent years. Despite their performance, vision transformer models have one major flaw: their remarkable capability comes at a high computational cost, which is why vision transformers are rarely the first choice for real-time applications. To tackle this issue, a group of developers introduced EfficientViT, a family of high-speed vision transformers.

While working on EfficientViT, the developers observed that the speed of current transformer models is often bounded by inefficient memory operations, especially element-wise functions and tensor reshaping in the MHSA (Multi-Head Self-Attention) layers. To address these inefficient memory operations, they designed a new building block with a sandwich layout: a single memory-bound MHSA layer is placed between efficient FFN layers, which improves memory efficiency and also enhances channel communication. The developers also found that attention maps often have high similarity across heads, which leads to computational redundancy. To tackle this redundancy, EfficientViT introduces a cascaded group attention module that feeds each attention head a different split of the full feature. This method not only saves computation but also improves the attention diversity of the model.

Comprehensive experiments across different scenarios indicate that EfficientViT outperforms existing efficient models for computer vision while striking a good trade-off between accuracy and speed. Let's take a deeper dive into the EfficientViT model.

An Introduction to Vision Transformers and EfficientViT

Vision Transformers remain one of the most popular frameworks in the computer vision industry because they offer superior performance and high model capacity. However, as the accuracy and performance of vision transformer models keep improving, their operational costs and computational overhead grow as well. For example, SwinV2 and V-MoE, current models known to deliver state-of-the-art performance on ImageNet, use 3B and 14.7B parameters respectively. The sheer size of these models, coupled with their computational costs and requirements, makes them practically unsuitable for real-time devices and applications.

The EfficientViT model aims to explore how to speed up vision transformer models, and to find the principles behind designing efficient and effective transformer-based architectures. Building on existing vision transformer frameworks like Swin and DeiT, it analyzes three essential factors that affect model inference speed: computation redundancy, memory access, and parameter usage. The analysis shows that the speed of vision transformer models is memory-bound, which means that memory access delays prevent full utilization of the computing power of CPUs/GPUs, with a negative impact on the runtime speed of the transformers. Element-wise functions and tensor reshaping in MHSA are the most memory-inefficient operations. The analysis further shows that optimally adjusting the ratio between FFN (feed-forward network) and MHSA layers can significantly reduce memory access time without hurting performance. It also reveals some redundancy in the attention maps, because attention heads tend to learn similar linear projections.

EfficientViT is the culmination of these research findings. It features a new block with a sandwich layout that places a single memory-bound MHSA layer between FFN layers. This approach not only reduces the time spent on memory-bound operations in MHSA, but also makes the whole block more memory efficient by using more FFN layers to handle communication between channels. The model also uses a new Cascaded Group Attention (CGA) module that reduces computational redundancy across attention heads while also increasing network depth, which raises model capacity. Finally, the model redistributes parameters by expanding the channel width of critical components such as value projections, while shrinking less important components such as the hidden dimensions of the feed-forward networks.

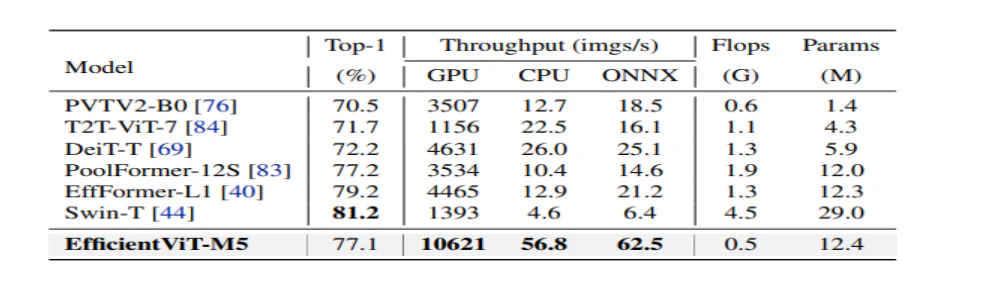

As can be seen in the above image, the EfficientViT framework outperforms current state-of-the-art CNN and ViT models in terms of both accuracy and speed. But how does EfficientViT manage to outperform these frameworks? Let's find out.

EfficientViT: Improving the Efficiency of Vision Transformers

The EfficientViT model aims to improve the efficiency of existing vision transformer models from three perspectives:

- Computational Redundancy.

- Memory Access.

- Parameter Usage.

The model examines how each of these factors affects the efficiency of vision transformer models, and how to address them to achieve better efficiency. Let's discuss each in more depth.

Memory Access and Efficiency

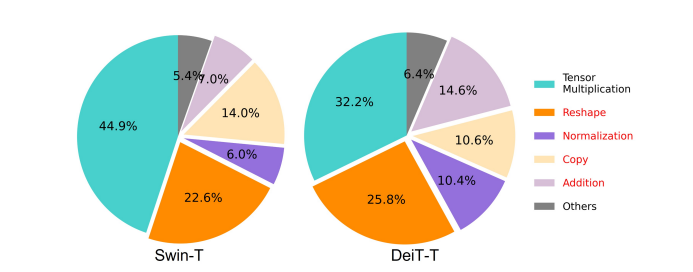

One of the essential factors affecting the speed of a model is memory access overhead (MAO). As can be seen in the image above, several operators in transformers, including element-wise addition, normalization, and frequent reshaping, are memory-inefficient, because they require access to different memory units, which is time consuming.

Although some existing methods, like low-rank approximation and sparse attention, can simplify the standard softmax self-attention computation, they often offer limited acceleration and degrade accuracy.

The EfficientViT framework instead cuts down memory access cost by reducing the proportion of memory-inefficient layers. The developers scale down DeiT-T and Swin-T into small subnetworks with 1.25× and 1.5× higher inference throughput, and compare the performance of these subnetworks with different proportions of MHSA layers. As can be seen in the image below, subnetworks in which MHSA layers make up about 20 to 40% of the layers tend to achieve better accuracy.

Computation Efficiency

MHSA layers embed the input sequence into multiple subspaces (heads) and compute the attention maps separately, an approach known to boost performance. However, attention maps are computationally expensive, so the developers explored how to reduce redundant attention in small ViT models. They trained width-downscaled DeiT-T and Swin-T models with a 1.25× inference speed-up and measured, within every block, the maximum cosine similarity between each head and the remaining heads. As can be observed in the image below, the similarity between attention heads is high, which suggests that the model incurs computation redundancy because many heads learn similar projections of the same full feature.
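
The redundancy measurement described above can be sketched in a few lines of Python. This is a minimal illustration, assuming each head's attention map is available as a small nested list; the function names and toy maps are hypothetical, and a real analysis would read the attention tensors out of a trained model.

```python
import math
from typing import List

Matrix = List[List[float]]


def flatten(matrix: Matrix) -> List[float]:
    """Turn an N x N attention map into a single vector."""
    return [value for row in matrix for value in row]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two flattened attention maps."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms


def max_similarity_per_head(attention_maps: List[Matrix]) -> List[float]:
    """For each head, the maximum cosine similarity to any other head in the same block.

    Assumes at least two heads per block.
    """
    flat = [flatten(m) for m in attention_maps]
    return [
        max(cosine_similarity(head, other) for j, other in enumerate(flat) if j != i)
        for i, head in enumerate(flat)
    ]


# Hypothetical block with three heads over two tokens: heads 0 and 1 attend identically.
maps = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
print([round(s, 3) for s in max_similarity_per_head(maps)])  # [1.0, 1.0, 0.0]
```

A score close to 1 for a head means some other head in the block is doing nearly the same work, which is the redundancy the text describes.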

To encourage the heads to learn different patterns, the model applies an intuitive solution: each head is fed only a portion of the full feature, a technique that resembles the idea of group convolution. The developers then train variants of the downscaled models with these modified MHSA layers.

Parameter Efficiency

Typical ViT models inherit their design strategies from NLP transformers, such as using an equivalent width for the Q, K, and V projections, increasing the number of heads over stages, and setting the FFN expansion ratio to 4. The configurations of these components need to be carefully redesigned for lightweight models. EfficientViT uses Taylor structured pruning to automatically find the essential components in Swin-T and DeiT-T, and further explores the underlying parameter allocation principles. Under given resource constraints, the pruning method removes unimportant channels and keeps the critical ones to preserve the highest possible accuracy. The figure below compares the ratio of channels to the input embedding dimension before and after pruning on Swin-T. The baseline accuracy is 79.1%, and the pruned model's accuracy is 76.5%.
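
A first-order Taylor importance criterion for structured (channel) pruning can be sketched as follows. This assumes the common formulation in which a channel's importance is the absolute value of the sum of weight × gradient over its parameters; the exact criterion and granularity used for EfficientViT are not specified here, and the toy weights and gradients are hypothetical.

```python
from typing import List


def taylor_importance(weights: List[List[float]], grads: List[List[float]]) -> List[float]:
    """First-order Taylor estimate of how much the loss changes if a channel is removed.

    weights[c] and grads[c] hold the parameters of output channel c and the
    gradient of the loss with respect to each of them.
    """
    return [abs(sum(w * g for w, g in zip(wc, gc))) for wc, gc in zip(weights, grads)]


def channels_to_keep(weights: List[List[float]], grads: List[List[float]], keep: int) -> List[int]:
    """Indices (in original order) of the `keep` most important channels."""
    scores = taylor_importance(weights, grads)
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    return sorted(ranked[:keep])


# Hypothetical layer with four output channels of two weights each.
weights = [[0.5, -0.2], [0.01, 0.02], [1.0, 1.0], [0.3, 0.0]]
grads = [[0.4, 0.1], [0.5, 0.5], [-0.2, 0.1], [0.0, 0.9]]
print(channels_to_keep(weights, grads, keep=2))  # [0, 2]
```

Channel 2 has the largest weights but not the largest score, since the criterion weighs each parameter by how strongly the loss reacts to it.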

The figure above indicates that the first two stages preserve more dimensions, while the last two stages preserve far fewer. This suggests that a typical channel configuration, which either doubles the channels after every stage or uses the same number of channels for all blocks, may introduce substantial redundancy in the final few blocks.

Efficient Vision Transformer: Architecture

Based on the insights from the above analysis, the developers created a new hierarchical model with fast inference speed: EfficientViT. Let's take a detailed look at its structure. The figure below gives a general idea of the EfficientViT framework.

Building Blocks of the EfficientViT Framework

The building block for the more efficient vision transformer network is illustrated in the figure below.

The building block consists of a cascaded group attention module, a memory-efficient sandwich layout, and a parameter reallocation strategy, which focus on improving the efficiency of the model in terms of computation, memory, and parameters, respectively. Let's discuss each in greater detail.

Sandwich Layout

The model uses a new sandwich layout to build a more memory-efficient block. The sandwich layout uses fewer memory-bound self-attention layers and more memory-efficient feed-forward networks for channel communication. Specifically, the model applies a single self-attention layer for spatial mixing, sandwiched between FFN layers. This design not only reduces the time spent in memory-bound self-attention layers, but also enables effective communication between channels thanks to the FFN layers. The model also applies an extra token interaction layer before each FFN layer, implemented as a depthwise convolution (DWConv), which enhances model capacity by introducing an inductive bias of local structural information.
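
The ordering of layers in a sandwich block can be sketched as follows. This is a structural illustration only: it assumes every sub-layer is wrapped in a residual connection and treats the number of FFN layers on each side, `n`, as a free parameter. The factory functions and tracing layers are hypothetical stand-ins for real DWConv, FFN, and attention modules.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]
Factory = Callable[[], Layer]


def build_sandwich(make_dwconv: Factory, make_ffn: Factory,
                   make_attention: Factory, n: int = 1) -> List[Layer]:
    """n x (DWConv token interaction -> FFN), one self-attention layer, n x (DWConv -> FFN).

    Each factory is called once per slot, so repeated layers do not share weights.
    """
    def ffn_side() -> List[Layer]:
        layers: List[Layer] = []
        for _ in range(n):
            layers.append(make_dwconv())  # local token interaction (inductive bias)
            layers.append(make_ffn())     # channel communication
        return layers

    return ffn_side() + [make_attention()] + ffn_side()


def run_block(layers: List[Layer], x: Vector) -> Vector:
    """Apply each layer with a residual connection: x <- x + layer(x)."""
    for layer in layers:
        x = [a + b for a, b in zip(x, layer(x))]
    return x


# Hypothetical tracing layers that record their name and return zeros.
trace: List[str] = []


def tracing(name: str) -> Factory:
    def factory() -> Layer:
        def layer(x: Vector) -> Vector:
            trace.append(name)
            return [0.0] * len(x)
        return layer
    return factory


block = build_sandwich(tracing("dwconv"), tracing("ffn"), tracing("attention"), n=1)
out = run_block(block, [1.0, 2.0])
print(trace)  # ['dwconv', 'ffn', 'attention', 'dwconv', 'ffn']
print(out)    # [1.0, 2.0]
```

Only one of the five sub-layers is attention, so most of the block's work goes to layers that are less memory-bound.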

Cascaded Group Attention

One of the major issues with MHSA layers is redundancy across attention heads, which makes computation less efficient. To solve this issue, the model proposes Cascaded Group Attention (CGA) for vision transformers, a new attention module inspired by group convolutions in efficient CNNs. In this approach, the model feeds each head a different split of the full features, thereby explicitly decomposing the attention computation across heads. Splitting the features instead of feeding the full features to every head saves computation and makes the process more efficient. To further improve accuracy and capacity, the output of each head is added to the input of the next head, so later heads learn projections on progressively richer features; this cascade also increases the depth of the network.
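
A minimal pure-Python sketch of cascaded group attention follows, assuming the formulation in which head j attends over its own channel split, with the output of head j-1 added to that split before the projections. It omits the final output projection and other details of the real module; all names, sizes, and random weights are hypothetical.

```python
import math
import random
from typing import Dict, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Numerically stable softmax over each row."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def cascaded_group_attention(x: Matrix, heads: List[Dict[str, Matrix]]) -> Matrix:
    """x has N tokens x C channels; head j sees only channel split j.

    heads[j]["wq"], heads[j]["wk"]: (C/h x d_qk); heads[j]["wv"]: (C/h x C/h).
    """
    split = len(x[0]) // len(heads)
    outputs: List[Matrix] = []
    for j, w in enumerate(heads):
        xj = [row[j * split:(j + 1) * split] for row in x]  # this head's feature split
        if outputs:  # cascade: add the previous head's output to this head's input
            xj = [[a + b for a, b in zip(r, p)] for r, p in zip(xj, outputs[-1])]
        q, k, v = matmul(xj, w["wq"]), matmul(xj, w["wk"]), matmul(xj, w["wv"])
        scale = 1.0 / math.sqrt(len(w["wq"][0]))
        scores = [[s * scale for s in row] for row in matmul(q, transpose(k))]
        outputs.append(matmul(softmax_rows(scores), v))
    # Concatenate head outputs along channels (final output projection omitted).
    return [[value for out in outputs for value in out[i]] for i in range(len(x))]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Hypothetical sizes: 4 tokens, 8 channels, 2 heads, Q/K dimension 2.
rng = random.Random(0)
N, C, H, D_QK = 4, 8, 2, 2
x = rand_matrix(N, C, rng)
heads = [{"wq": rand_matrix(C // H, D_QK, rng),
          "wk": rand_matrix(C // H, D_QK, rng),
          "wv": rand_matrix(C // H, C // H, rng)} for _ in range(H)]
out = cascaded_group_attention(x, heads)
print(len(out), len(out[0]))  # 4 8
```

Each head projects only C/h input channels, which is where the saving over full-width heads comes from. The V projection keeps the split width so that one head's output can be added to the next head's input.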

Parameter Reallocation

To improve parameter efficiency, the model reallocates parameters by expanding the channel width of critical modules while shrinking that of less important ones. Based on the Taylor analysis, the model sets small channel dimensions for the Q and K projections in each head at every stage, while allowing the V projection to keep the same dimension as the input embedding. The expansion ratio of the feed-forward network is also reduced from 4 to 2 to cut its parameter redundancy. This reallocation strategy assigns more channels to important modules so they can better learn representations in a high-dimensional space, which minimizes the loss of feature information. Furthermore, the redundant parameters in unimportant modules are removed to speed up inference and further improve efficiency.
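
The effect of lowering the FFN expansion ratio from 4 to 2 is easy to check with a parameter count. The sketch below assumes a plain two-layer FFN (C → rC → C) and ignores biases; the channel width of 192 is a hypothetical example, not a value taken from the EfficientViT configurations.

```python
def ffn_weights(channels: int, expansion_ratio: int) -> int:
    """Weight count of a two-layer FFN: C -> r*C -> C (biases ignored)."""
    hidden = expansion_ratio * channels
    return channels * hidden + hidden * channels


C = 192  # hypothetical channel width
before = ffn_weights(C, 4)
after = ffn_weights(C, 2)
print(before, after, after / before)  # 294912 147456 0.5
```

Halving the ratio halves the FFN weights at any width, which frees budget that the reallocation strategy can spend on more important modules such as the value projections.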

The above image gives an overview of the EfficientViT framework, showing:

- The architecture of EfficientViT.

- The sandwich layout block.

- Cascaded group attention.

EfficientViT: Network Architecture

The above image summarizes the network architecture of the EfficientViT framework. The model uses an overlapping patch embedding [20,80] that embeds 16×16 patches into tokens with C1 dimensions, which improves the model's capacity for low-level visual representation learning. The architecture comprises three stages, each stacking the proposed EfficientViT building blocks, and each subsampling layer (which subsamples the resolution by 2×) reduces the number of tokens by 4×. To make subsampling more efficient, the model uses a subsample block that also follows the sandwich layout, except that an inverted residual block replaces the attention layer to reduce information loss during subsampling. Furthermore, instead of the conventional LayerNorm (LN), the model uses BatchNorm (BN), because BN can be folded into the preceding linear or convolutional layers, which gives it a runtime advantage over LN.
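
Why BatchNorm can be folded away at inference time can be shown with a small example. At inference, BN applies a fixed per-channel affine transform using its running mean and variance, so it can be merged into the weights and bias of the preceding linear layer. LayerNorm, by contrast, computes its statistics from each input and cannot be precomputed this way. The sketch below is a minimal pure-Python illustration for a linear layer (the same per-output-channel scaling applies to convolutions); all values are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def linear(w: Matrix, b: Vector, x: Vector) -> Vector:
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def batchnorm_eval(z: Vector, gamma: Vector, beta: Vector,
                   mean: Vector, var: Vector, eps: float = 1e-5) -> Vector:
    """Inference-mode BN: uses fixed running statistics."""
    return [g * (zi - m) / math.sqrt(v + eps) + bt
            for zi, g, bt, m, v in zip(z, gamma, beta, mean, var)]


def fold_bn(w: Matrix, b: Vector, gamma: Vector, beta: Vector,
            mean: Vector, var: Vector, eps: float = 1e-5) -> Tuple[Matrix, Vector]:
    """Return (w', b') so that linear(w', b', x) matches batchnorm_eval(linear(w, b, x), ...)."""
    new_w, new_b = [], []
    for row, bi, g, bt, m, v in zip(w, b, gamma, beta, mean, var):
        scale = g / math.sqrt(v + eps)  # per-output-channel scale
        new_w.append([wi * scale for wi in row])
        new_b.append((bi - m) * scale + bt)
    return new_w, new_b


# Hypothetical layer with 2 inputs and 2 output channels.
w, b = [[1.0, 2.0], [0.5, -1.0]], [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.3]
mean, var = [0.2, -0.1], [0.9, 1.2]
x = [0.3, -0.7]

unfused = batchnorm_eval(linear(w, b, x), gamma, beta, mean, var)
fw, fb = fold_bn(w, b, gamma, beta, mean, var)
fused = linear(fw, fb, x)
print(all(math.isclose(u, f, rel_tol=1e-9, abs_tol=1e-12) for u, f in zip(unfused, fused)))  # True
```

After folding, the normalization costs nothing at runtime: the network runs one linear layer where it previously ran a linear layer plus a separate element-wise pass.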

EfficientViT Model Family

The EfficientViT model family consists of 6 models with different depth and width scales, and a set number of heads is allotted to each stage. Similar to MobileNetV3, the models use fewer blocks in the early stages than in the final stages, because processing at the larger early-stage resolutions is time consuming. The width is increased over stages by a small factor to reduce redundancy in the later stages. The table attached below provides the architectural details of the EfficientViT model family, where C, L, and H refer to the width, depth, and number of heads in a given stage.

EfficientViT: Model Implementation and Results

The EfficientViT models are built with PyTorch and Timm, and are trained from scratch on ImageNet-1K for 300 epochs on 8 Nvidia V100 GPUs, with a total batch size of 2,048, an AdamW optimizer, and a cosine learning rate scheduler. Input images are randomly cropped and resized to a resolution of 224×224. For downstream image classification, the models are finetuned for 300 epochs using the AdamW optimizer with a batch size of 256. For object detection on COCO, the model uses RetinaNet and trains the detection models for 12 epochs under the same settings.
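
The cosine learning rate schedule mentioned above can be sketched as a closed-form function of the epoch. The 300-epoch length comes from the text; the base and minimum learning rates below are hypothetical placeholders, since the values used for EfficientViT are not given here, and no warmup phase is modeled. In PyTorch, `torch.optim.lr_scheduler.CosineAnnealingLR` produces this kind of curve when attached to an optimizer such as `torch.optim.AdamW`.

```python
import math


def cosine_lr(epoch: int, total_epochs: int = 300,
              base_lr: float = 1e-3, min_lr: float = 1e-5) -> float:
    """Cosine annealing from base_lr (epoch 0) down to min_lr (epoch == total_epochs).

    base_lr and min_lr are hypothetical placeholder values.
    """
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 75, 150, 225, 300):
    print(epoch, f"{cosine_lr(epoch):.6f}")
```

The schedule decays slowly at first, fastest around the midpoint, and slowly again near the end, so the final epochs fine-tune at a learning rate close to `min_lr`.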

Results on ImageNet

To analyze its performance, EfficientViT is compared against current ViT and CNN models on ImageNet. The results are reported in the following figure. As can be seen, the EfficientViT model family outperforms current frameworks in most cases and achieves a good trade-off between speed and accuracy.

Comparison with Efficient CNNs, and Efficient ViTs

The developers first compare EfficientViT against efficient CNNs such as MobileNets and EfficientNet. As can be seen, compared to MobileNet frameworks, EfficientViT models obtain better top-1 accuracy while running 3.0× and 2.5× faster on an Intel CPU and a V100 GPU, respectively.

The above figure compares EfficientViT with state-of-the-art large-scale ViT models on ImageNet-1K.

Downstream Image Classification

To study the model's transfer learning ability, EfficientViT is applied to various downstream tasks, and the image below summarizes the results. As can be observed, the EfficientViT-M5 model achieves better or similar results across nearly all datasets while maintaining much higher throughput. The only exception is the Cars dataset, where EfficientViT is slightly less accurate.

Object Detection

To analyze its object detection ability, EfficientViT is compared against efficient models on the COCO object detection task, and the image below summarizes the results.

Final Thoughts

In this article, we have talked about EfficientViT, a family of fast vision transformer models that use cascaded group attention and memory-efficient operations. Extensive experiments have shown promising results, with EfficientViT outperforming current CNN and vision transformer models in most cases. We have also tried to provide an analysis of the factors that affect the inference speed of vision transformers.