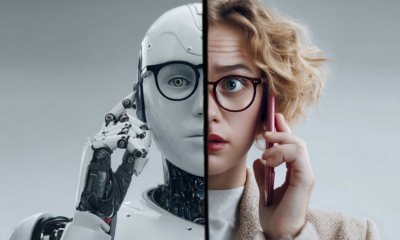

Early Warning System for Disinformation Developed with AI

Researchers at the University of Notre Dame are working on a project to combat disinformation online, including media campaigns to incite violence, sow discord, and meddle in democratic elections.

The researchers used artificial intelligence (AI) to develop an early warning system designed to identify manipulated images, deepfake videos, and disinformation online. The scalable, automated system uses content-based image retrieval and then applies computer-vision-based techniques to identify political memes across multiple social media networks.
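The article does not describe how the team implemented content-based image retrieval. As a rough illustration of the idea only, the sketch below indexes images by a perceptual "average hash" and retrieves near-duplicates by Hamming distance, so a lightly edited repost of a meme still matches its source. Perceptual hashing is a swapped-in technique chosen for brevity, not the Notre Dame system. The 8x8 hash size, the 5-bit distance threshold, the toy grayscale images, and all function names are assumptions made for this example.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]  # grayscale pixels, 0-255, row-major (toy stand-in for real images)

HASH_SIZE = 8  # assumed: 8x8 grid gives a 64-bit hash


def downscale(img: Image, size: int = HASH_SIZE) -> List[List[float]]:
    """Shrink a grayscale image to size x size by averaging blocks of pixels."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    out = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        row = []
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(img: Image) -> int:
    """One bit per cell: 1 if the cell is brighter than the mean of all cells."""
    cells = [v for row in downscale(img) for v in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for v in cells:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def search(index: Dict[str, int], query: Image, max_dist: int = 5) -> List[Tuple[str, int]]:
    """Return (image_id, distance) pairs within max_dist bits, closest first."""
    q = average_hash(query)
    hits = [(name, hamming(q, h)) for name, h in index.items()]
    return sorted([hit for hit in hits if hit[1] <= max_dist], key=lambda t: t[1])


# Usage: a brightened copy still matches its source; an inverted image does not.
gradient = [[r * 16 + c for c in range(16)] for r in range(16)]
brighter = [[min(255, v + 10) for v in row] for row in gradient]
inverted = [[255 - v for v in row] for row in gradient]

index = {"original": average_hash(gradient), "inverted": average_hash(inverted)}
print(search(index, brighter))  # [('original', 0)]
```

Because the hash compares each cell to the image's own mean, uniform brightness changes leave it unchanged, which is why the edited copy lands at distance 0. A production system may instead compare learned image features, which tolerate heavier edits such as cropping or added text.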

Tim Weninger is an associate professor in the Department of Computer Science and Engineering at Notre Dame.

“Memes are easy to create and even easier to share,” said Weninger. “When it comes to political memes, these can be used to help get out the vote, but they can also be used to spread inaccurate information and cause harm.”

Weninger worked with Walter Scheirer, an assistant professor in the Department of Computer Science and Engineering at Notre Dame, and other members of the research team.

2019 General Election in Indonesia

The team tested the system on the 2019 general election in Indonesia, collecting more than two million images and other pieces of content related to the election from various sources on Twitter and Instagram.

In the election, the left-leaning, centrist incumbent beat the conservative, populist candidate. After the election, violent protests erupted in which eight people died and hundreds more were injured. The team's study found that both spontaneous and coordinated campaigns had been launched on social media to influence the election and incite violence.

The coordinated campaigns used manipulated images that pushed false claims and misrepresented events. Fabricated news stories and memes carried the logos of legitimate news outlets, with the aim of provoking citizens and supporters of both parties.

The Rest of the World

The 2019 general election in Indonesia illustrates what can happen elsewhere in the world. Disinformation, especially when spread through social media, can threaten democratic processes.

The research team at Notre Dame included digital forensics experts and specialists in peace studies. According to the team, the system is being developed to flag manipulated content, with the goals of preventing violence and warning journalists or election monitors of potential threats.

The system is still in the research and development phase, but it is expected eventually to scale and to be personalized so that users can monitor content. Among the biggest challenges in developing it is determining how best to scale up data ingestion and processing. According to Scheirer, the system is currently being evaluated, and the next step is a transition to operational use.

The system could potentially be used to monitor the 2020 general election in the United States, which is expected to see large amounts of disinformation and manipulation.

“The disinformation age is here,” said Scheirer. “A deepfake replacing actors in a popular film might seem fun and lighthearted but imagine a video or a meme created for the sole purpose of pitting one world leader against another — saying words they didn’t actually say. Imagine how quickly that content could be shared and spread across platforms. Consider the consequences of those actions.”