
The Role of Vector Databases in Modern Generative AI Applications

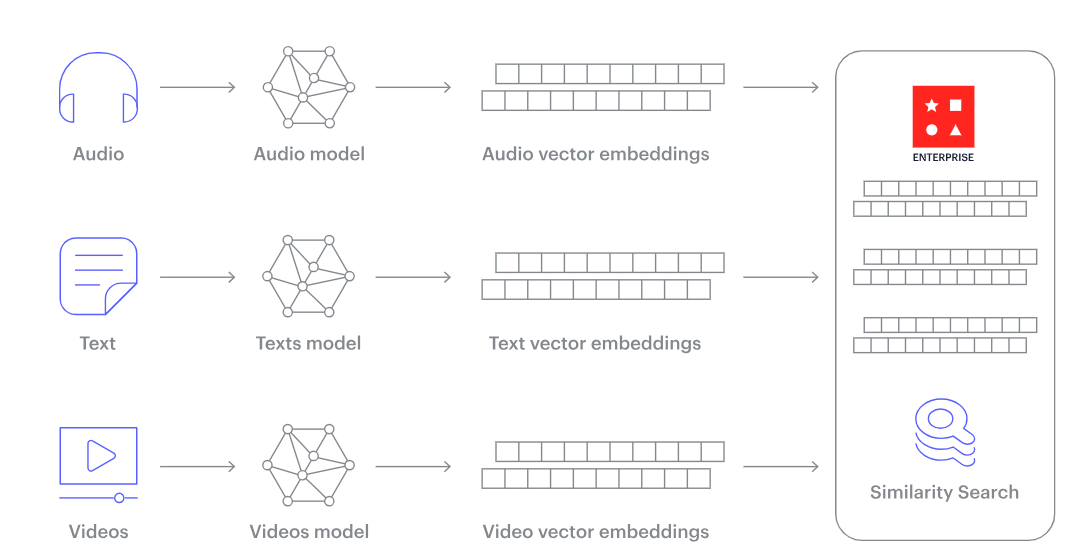

For large-scale Generative AI applications to work effectively, they need robust systems for handling large volumes of data. One such system is the vector database. What sets it apart is its ability to handle many types of data, such as text, audio, images, and video, in numeric (vector) form.

What are Vector Databases?

A vector database is a specialized storage system designed to handle high-dimensional vectors efficiently. These vectors, which can be thought of as points in a multi-dimensional space, often represent embeddings: compressed numeric representations of more complex data such as images, text, or sound.

Vector databases allow for rapid similarity searches amongst these vectors, enabling quick retrieval of the most similar items from a vast dataset.
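To make the idea concrete, here is a minimal sketch of the core operation a vector database accelerates: exact (brute-force) top-k similarity search using cosine similarity. It compares the query against every stored vector, which is fine for a few thousand items but is exactly the cost that the indexing techniques discussed later try to avoid. The function names and the toy 3-dimensional "embeddings" are illustrative assumptions, not any product's API.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], vectors: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k stored items most similar to the query (exhaustive scan)."""
    scored = ((item_id, cosine_similarity(query, vec)) for item_id, vec in vectors.items())
    # nlargest keeps only the k best scores instead of sorting everything
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])


# Hypothetical toy embeddings: 3 dimensions instead of hundreds
store = {
    "cat_photo": [0.9, 0.1, 0.0],
    "kitten_photo": [0.8, 0.2, 0.1],
    "car_photo": [0.0, 0.1, 0.9],
}
print(top_k([1.0, 0.15, 0.05], store, k=2))
```

Each query here costs time proportional to the number of stored vectors times their dimension, which is why production systems replace the exhaustive scan with an approximate index.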

Traditional Databases vs. Vector Databases

Vector Databases:

- Handles High-Dimensional Data: Vector databases are designed to manage and store data in high-dimensional spaces. This is particularly useful for applications like machine learning, where data points (such as images or text) can be represented as vectors in multi-dimensional spaces.

- Optimized for Similarity Search: One standout feature of vector databases is their ability to perform similarity searches. Instead of querying data based on exact matches, these databases allow users to retrieve data that is “similar” to a given query, making them invaluable for tasks like image or text retrieval.

- Scalable for Large Datasets: As AI and machine learning applications continue to grow, so does the amount of data they process. Vector databases are built to scale, ensuring that they can handle vast amounts of data without compromising on performance.

Traditional Databases:

- Structured Data Storage: Traditional databases, like relational databases, are designed to store structured data. This means data is organized into predefined tables, rows, and columns, ensuring data integrity and consistency.

- Optimized for CRUD Operations: Traditional databases are primarily optimized for CRUD operations. This means they are designed to efficiently create, read, update, and delete data entries, making them suitable for a wide range of applications, from web services to enterprise software.

- Fixed Schema: One of the defining characteristics of many traditional databases is their fixed schema. Once the database structure is defined, making changes can be complex and time-consuming. This rigidity ensures data consistency but can be less flexible than the schema-less or dynamic schema nature of some modern databases.

Traditional databases are not built to index and compare high-dimensional embeddings efficiently, a gap that vector databases are designed to fill.

Vector Representations

Central to the functioning of vector databases is the fundamental concept of representing diverse forms of data as numeric vectors. Let’s take an image as an example. To us, a picture of a cat might just be an adorable feline; to a machine, it can be transformed into a 512-dimensional vector such as:

[0.23, 0.54, 0.32, …, 0.12, 0.45, 0.90]

Interestingly, this method isn’t restricted to images. Text, rich in contextual and semantic meaning, can be put into vector form as well. Once data is stored this way, vector databases let Generative AI applications do more: they can find information based on meaning rather than exact wording, and they can act as a long-term memory for the application.

Generative AI and The Need for Vector Databases

Generative AI often involves embeddings. Take, for instance, word embeddings in natural language processing (NLP): words or sentences are transformed into vectors that capture semantic meaning. When generating human-like text, applications often need to rapidly compare and retrieve relevant embeddings, for example to supply context that keeps the generated text coherent and on topic.

Similarly, in image or sound generation, embeddings play a crucial role in encoding patterns and features. For these applications to work well at scale, they need a database that can retrieve similar vectors quickly, which makes vector databases an essential piece of the generative AI puzzle.

Creating embeddings for natural language usually involves using pre-trained models such as:

- GPT-3 and GPT-4: OpenAI’s GPT-3 (Generative Pre-trained Transformer 3), with 175 billion parameters, has been a monumental model in the NLP community. Its successor, GPT-4, continues to push the boundaries, although OpenAI has not disclosed its parameter count. These models are trained on diverse datasets, enabling them to capture a wide array of linguistic nuances. In practice, OpenAI exposes embeddings through dedicated embedding models such as text-embedding-ada-002.

- BERT and its Variants: BERT (Bidirectional Encoder Representations from Transformers), developed by Google, is another significant model and has inspired variants such as RoBERTa and DistilBERT. Because BERT is trained to use context from both the left and the right of each word, it is particularly adept at understanding the context surrounding a word.

- ELECTRA: A more recent model that performs comparably to larger models such as RoBERTa while requiring far less pre-training compute. Instead of only predicting masked words, ELECTRA is pre-trained to detect which tokens in its input were replaced by a small generator network, which helps it learn more refined representations.

How the process works:

Initially, an embedding model is employed to transform the desired content into vector embeddings. Once generated, these embeddings are then stored within a vector database. For easy traceability and relevance, these stored embeddings maintain a link or reference to the original content they were derived from.

Later, when a user or system poses a question to the application, the same embedding model converts the query into an embedding. That query embedding is then used to search the vector database for similar vectors. Because each match is linked to the content it was derived from, the application can return the original content most relevant to the query.
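The two phases can be sketched in a few lines. This is a toy built on stated assumptions: `toy_embed` is a hypothetical stand-in that counts words from a small fixed vocabulary, where a real application would call one of the pre-trained models above, and `ToyVectorStore` is a plain Python list rather than a real vector database. What it illustrates is that each stored vector keeps a reference to its source text, and that queries go through the same embedding function as the documents.

```python
import math

# Hypothetical fixed vocabulary standing in for a learned embedding model
VOCAB = ["cat", "dog", "pet", "food", "car", "engine", "fuel"]


def toy_embed(text: str) -> list[float]:
    """Map text to a bag-of-words count vector over VOCAB (toy stand-in)."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


class ToyVectorStore:
    """In-memory list of (vector, original_text) pairs."""

    def __init__(self, embed):
        self.embed = embed
        self.records: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        # Indexing phase: embed the content and keep a link to the original
        self.records.append((self.embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Query phase: embed the query with the SAME function, then rank by similarity
        q = self.embed(query)
        ranked = sorted(self.records, key=lambda rec: cosine(q, rec[0]), reverse=True)
        return [text for _, text in ranked[:k]]


store = ToyVectorStore(toy_embed)
store.add("my cat loves cat food")
store.add("the car engine needs fuel")
store.add("a dog is a loyal pet")
print(store.search("what food does a cat eat", k=1))
```

Word counts only capture overlapping words; a learned embedding model would also relate, say, "kitten" to "cat", which is what makes the same pipeline semantic rather than keyword-based.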

Growing Funding for Vector Database Newcomers

With AI’s rising popularity, investors are putting more money into vector databases, which help make AI applications better and faster. This can be seen in recent investments in vector database startups like Pinecone, Chroma DB, and Weaviate.

Large corporations like Microsoft have their own tools too. For example, Azure Cognitive Search lets businesses build AI tools using vector search.

Oracle also recently announced new features for its Database 23c, introducing an Integrated Vector Database. Named “AI Vector Search,” it will have a new data type, indexes, and search tools to store and search through data like documents and images using vectors. It supports Retrieval Augmented Generation (RAG), which combines large language models with business data to give better answers to natural-language questions without sharing private data.

Primary Considerations of Vector Databases

Distance Metrics

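The effectiveness of a similarity search depends on the chosen distance metric. Common metrics include Euclidean distance and cosine similarity. Cosine similarity compares only the direction of two vectors and ignores their length, while Euclidean distance is sensitive to both, so the right choice depends on how the embeddings were produced.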
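A small example shows why the choice matters. The vectors below are made up for illustration: `short_vec` and `long_vec` point in the same direction as the query but differ in length, while `other_vec` points elsewhere. Cosine similarity scores both same-direction vectors as perfect matches, whereas Euclidean distance ranks `other_vec` closest and pushes `long_vec` far away. The final assertion checks a useful identity: for unit-length vectors, the squared Euclidean distance equals 2 - 2 * cos(a, b), so after normalization both metrics produce the same ranking.

```python
import math


def euclidean(a: list[float], b: list[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


query = [1.0, 1.0]
short_vec = [2.0, 2.0]    # same direction, similar length
long_vec = [10.0, 10.0]   # same direction, much longer
other_vec = [1.0, 0.0]    # different direction, similar length

for name, v in [("short", short_vec), ("long", long_vec), ("other", other_vec)]:
    print(name, "cosine:", round(cosine_similarity(query, v), 3),
          "euclidean:", round(euclidean(query, v), 3))

# For unit vectors: ||a - b||^2 == 2 - 2 * cos(a, b)
a, b = normalize(query), normalize(other_vec)
assert math.isclose(euclidean(a, b) ** 2, 2 - 2 * cosine_similarity(a, b))
```

This is why many systems normalize embeddings at insert time: once all vectors have unit length, the cheaper inner product, cosine similarity, and Euclidean distance all agree on which neighbors are closest.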

Indexing

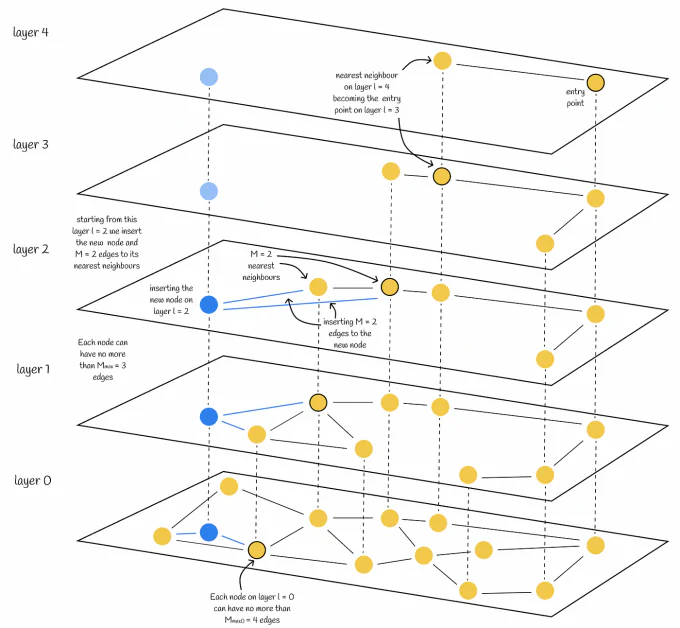

Given the high dimensionality of vectors, traditional indexing methods don’t cut it. Vector databases use approximate nearest-neighbor (ANN) techniques such as Hierarchical Navigable Small World (HNSW) graphs or the tree-based Annoy library, which trade a little accuracy for much faster nearest-neighbor searches.

Annoy tree (Source)

Annoy builds a forest of binary trees. Each tree recursively splits the data space with random hyperplanes until every leaf holds only a few points, so a query only needs to examine the points in a few leaves to find close neighbors.
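The splitting idea can be sketched as a single random projection tree in the spirit of Annoy. This is a simplified illustration, not Annoy’s implementation or API: it builds only one tree (Annoy builds many and searches them together), picks two random points at each node, and splits by the hyperplane equidistant between them. `LEAF_SIZE`, `build`, and `query` are hypothetical names. A query descends to one leaf and scans only the points stored there, so it can occasionally miss the true nearest neighbor.

```python
import math
import random

LEAF_SIZE = 4  # hypothetical: maximum number of points per leaf


def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build(points, rng):
    """Recursively split points by the hyperplane equidistant from two random samples."""
    if len(points) <= LEAF_SIZE:
        return {"leaf": points}
    p, q = rng.sample(points, 2)
    normal = [pi - qi for pi, qi in zip(p, q)]           # hyperplane normal
    midpoint = [(pi + qi) / 2 for pi, qi in zip(p, q)]   # a point on the hyperplane
    offset = sum(n * m for n, m in zip(normal, midpoint))
    left = [x for x in points if sum(n * xi for n, xi in zip(normal, x)) >= offset]
    right = [x for x in points if sum(n * xi for n, xi in zip(normal, x)) < offset]
    if not left or not right:  # degenerate split (e.g. duplicate points): stop here
        return {"leaf": points}
    return {"normal": normal, "offset": offset,
            "left": build(left, rng), "right": build(right, rng)}


def query(node, x):
    """Descend to a single leaf, then scan only the points stored there."""
    while "leaf" not in node:
        side = sum(n * xi for n, xi in zip(node["normal"], x)) >= node["offset"]
        node = node["left"] if side else node["right"]
    return min(node["leaf"], key=lambda p: dist(p, x))


rng = random.Random(0)
data = [[rng.random(), rng.random()] for _ in range(200)]
tree = build(data, rng)
print(query(tree, [0.5, 0.5]))
```

Searching several independently built trees and merging their leaves is the usual way to recover the neighbors a single tree misses when the true nearest point falls just across a split.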

Hierarchical Navigable Small World (HNSW) graphs (Source)

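HNSW graphs, on the other hand, connect each data point to a handful of its near neighbors and stack these proximity graphs into layers: upper layers are sparse and allow long jumps across the space, while lower layers are denser. A search starts at the top layer and greedily moves toward the query, descending layer by layer, which quickly narrows in on close points.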
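The core move of HNSW, greedy routing through a proximity graph, can be sketched on a single layer. This is a deliberately simplified navigable-small-world-style example, not HNSW itself: it builds the graph by brute force, linking every point to its `M` nearest neighbors (`M` here is a hypothetical setting), has no hierarchy, and uses plain greedy search without a candidate list, so it can stop at a local minimum. Real HNSW adds the layers and a wider beam search, commonly controlled by a parameter called `ef`, to make that unlikely.

```python
import math
import random

M = 5  # hypothetical: number of nearest neighbors linked to each node


def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def build_graph(points):
    """Link each point to its M nearest other points (brute force, for clarity)."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: dist(p, points[j]))
        graph[i] = set(others[:M])
    # Make edges bidirectional so the search can travel either way along them
    for i in list(graph):
        for j in graph[i]:
            graph[j].add(i)
    return graph


def greedy_search(graph, points, query, entry=0):
    """Hop to the neighbor closest to the query until no neighbor is closer."""
    current = entry
    while True:
        best = min(graph[current], key=lambda j: dist(points[j], query))
        if dist(points[best], query) >= dist(points[current], query):
            return current  # local minimum (usually, but not always, the true nearest)
        current = best


rng = random.Random(42)
points = [[rng.random(), rng.random()] for _ in range(300)]
graph = build_graph(points)
found = greedy_search(graph, points, [0.25, 0.75])
print(found, points[found])
```

Each hop strictly reduces the distance to the query, so the search always terminates; the hierarchy in real HNSW mainly serves to start that walk close to the target instead of at an arbitrary entry point.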

Scalability

As datasets grow, so does the challenge of maintaining fast retrieval times. Distributed systems, GPU acceleration, and optimized memory management are some ways vector databases tackle scalability.
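One common way to apply the distributed-systems idea is sharding with scatter-gather queries: split the vectors across several shards, ask every shard for its local top-k, then merge those partial results into a global top-k. The merge is exact because every item in the global top-k must also be in its own shard’s top-k. The sketch below simulates shards as in-memory lists searched one after another; in a real deployment each shard would live on its own machine and be queried in parallel. The shard count, round-robin assignment, and function names are illustrative assumptions.

```python
import heapq
import math


def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def make_shards(items, num_shards):
    """Assign (id, vector) pairs to shards round-robin (hypothetical policy)."""
    shards = [[] for _ in range(num_shards)]
    for i, item in enumerate(items):
        shards[i % num_shards].append(item)
    return shards


def shard_top_k(shard, query, k):
    # Each shard independently returns its own k best (distance, id) candidates
    return heapq.nsmallest(k, ((dist(vec, query), item_id) for item_id, vec in shard))


def scatter_gather(shards, query, k):
    # The global top-k is always contained in the union of the per-shard top-k lists
    partials = [shard_top_k(s, query, k) for s in shards]
    merged = heapq.nsmallest(k, (c for part in partials for c in part))
    return [item_id for _, item_id in merged]


items = [(f"v{i}", [float(i), float(i % 7)]) for i in range(50)]
shards = make_shards(items, num_shards=4)
print(scatter_gather(shards, [10.0, 3.0], k=3))
```

Because each shard only ships k candidates back, the network cost of a query grows with the number of shards times k rather than with the size of the dataset.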

Role of Vector Databases: Implications and Opportunities

1. Training Data for Cutting-Edge Generative AI Models: Generative AI models, such as DALL-E and GPT-3, are trained on vast amounts of data drawn from a myriad of sources, including images, text, code, and other domains. Once this data is represented as vectors, vector databases can help curate and manage these datasets, making it easier to identify patterns and relationships within them.

2. Advancing Few-Shot Learning: Few-shot learning is an AI technique in which models learn a new task from only a handful of examples. Vector databases can support this approach by maintaining a searchable vector index. When a model is exposed to just a few vectors, say, a few images of birds, it can extrapolate the broader concept of birds by recognizing similarities and relationships between these vectors.

3. Enhancing Recommender Systems: Recommender systems use vector databases to suggest content closely aligned with a user’s preferences. By analyzing a user’s behavior, profile, and queries, the system derives vectors that represent their interests. It then searches the vector database for content vectors that closely resemble these interest vectors to produce relevant recommendations.

4. Semantic Information Retrieval: Traditional search methods rely on exact keyword matches. However, vector databases empower systems to understand and retrieve content based on semantic similarity. This means that searches become more intuitive, focusing on the underlying meaning of the query rather than just matching words. For instance, when users input a query, the corresponding vector is compared with vectors in the database to find content that resonates with the query’s intent, not just its phrasing.

5. Multimodal Search: Multimodal search is an emerging technique that integrates data from multiple sources, like text, images, audio, and video. Vector databases serve as the backbone of this approach by allowing for the combined analysis of vectors from diverse modalities. This results in a holistic search experience, where users can retrieve information from a variety of sources based on a single query, leading to richer insights and more comprehensive results.
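The recommender case from item 3 can be sketched in a few lines. The assumptions are simple and stated plainly: the user’s interest vector is the average of the vectors of items they interacted with (one heuristic among many), similarity is cosine, and already-seen items are filtered out. The `catalog` entries and their 2-D vectors are hypothetical.

```python
import math

# Hypothetical item embeddings (2-D for readability)
catalog = {
    "sci-fi movie A": [0.9, 0.1],
    "sci-fi movie B": [0.8, 0.3],
    "space documentary": [0.7, 0.2],
    "romantic comedy": [0.1, 0.9],
    "cooking show": [0.2, 0.7],
}


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def interest_vector(history):
    """Average the vectors of the items the user interacted with."""
    vecs = [catalog[item] for item in history]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def recommend(history, k=2):
    profile = interest_vector(history)
    candidates = [name for name in catalog if name not in history]  # skip seen items
    return sorted(candidates, key=lambda n: cosine(profile, catalog[n]), reverse=True)[:k]


print(recommend(["sci-fi movie A", "sci-fi movie B"]))
```

In a production system the `sorted` call over the whole catalog would be replaced by a nearest-neighbor query against the vector database, with the interest vector as the query.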

Conclusion

The AI world is changing fast, touching many industries and bringing both benefits and new challenges. The rapid advancements in Generative AI underscore the vital role of vector databases in managing and analyzing multi-dimensional data.

These specialized storage systems, adept at handling high-dimensional vectors from varied data forms like images, text, or sound, stand as the linchpin in the effective functioning of modern AI applications, particularly in the realm of similarity searches.