Anderson's Angle

The Advantages of Getting Fat Through AI

A new AI system can realistically reshape people’s bodies in photos, making them fatter, thinner, or more muscular, without changing their face, clothes, or background. The system is trained on a fully synthetic dataset that shows each identity across multiple body types.

Besides the increasingly common use of AI as a method of refining body shape on social networks, or (potentially) for altering body types for VFX purposes, the use of machine learning to alter the appearance of individuals can serve a more important function: helping individuals with eating disorders to understand their own dysmorphic interpretation of their appearance, as well as offering a potential motivational tool for more general sports and fitness purposes:

From the paper ‘Body size estimation in women with anorexia nervosa and healthy controls using 3D avatars’, a GUI to visualize body shape changes. Individuals with body dysmorphia may struggle to associate a realistic interpretation of their body with a similar image, thus giving clinicians a metric for dysmorphic responses, among other purposes. Source: https://www.nature.com/articles/s41598-017-15339-z.pdf

Additionally, the much-pursued fashion try-on sub-strand in computer vision research also has an interest in providing accurate visualizations across a range of body shapes. Meanwhile, frameworks such as the 2024 DiffBody offering from Japan’s University of Tsukuba have created some eye-popping functionality in this field:

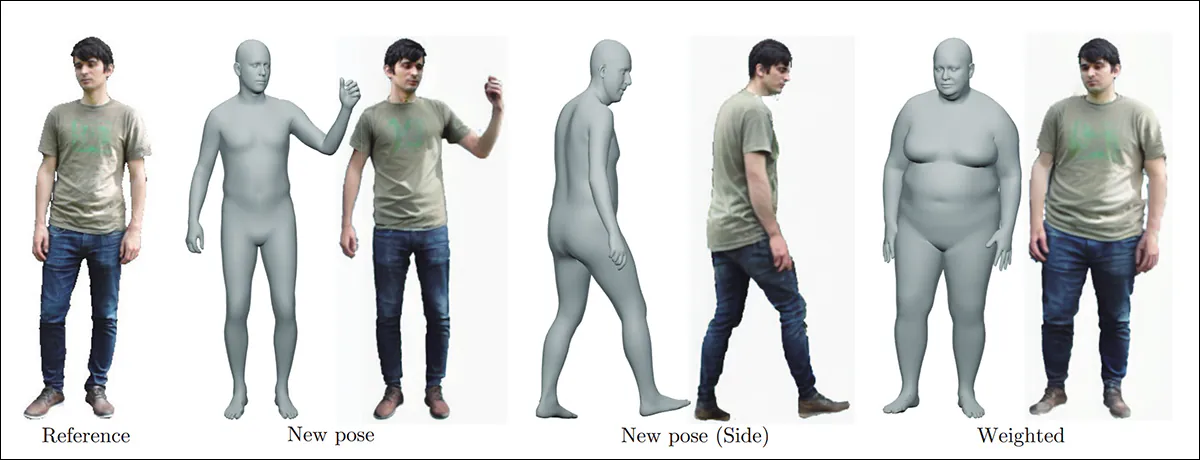

Some of the transformations possible using the prior DiffBody technique. Source: https://arxiv.org/pdf/2401.02804

Since AI foundation models are optimized towards conventionally-attractive or otherwise common body shapes, unusual sizes such as ‘obese’ are either minimally available in standard models, or else come with some punishing biases.

Pair Necessities

One of the biggest challenges in creating AI systems that can realistically add fat and muscle to, or subtract them from, pictures of individuals – without altering their identity, environments or clothes – is that this involves paired training, where the AI system effectively learns from ‘before’ and ‘after’ images that define whatever transformation the model is intended to perform.

This kind of training has come back into prominence over the summer due to the success of Black Forest Labs’ Kontext series of image editing models, where this kind of paired data was used to teach a range of transformations to the models:

From the Flux Kontext site, an example of a transformation that reflects the kind of source data needed to train a model capable of retaining image integrity when imposing major changes. Source: https://bfl.ai/models/flux-kontext

Obviously, in the case of developing a model that can significantly alter the appearance of a person (without re-imagining the entire image), one needs something that’s totally impossible in the real world: radical ‘before’ and ‘after’ pictures taken only seconds apart.

The only recourse is synthetic data. Some projects of this type have used individual, high-effort contrastive pairs created manually in Photoshop; however, this is unrealistic at scale, and an automated or semi-automated, AI-driven process for generating the pairs is now increasingly thought to be preferable.

The trouble with GAN-based and most SMPL/X-based approaches (where a virtual CGI figure is used as a kind of exchange mechanism between real images and the desired transformations), and with approaches that use image-warping, is that the background and identity tend to suffer in the process.

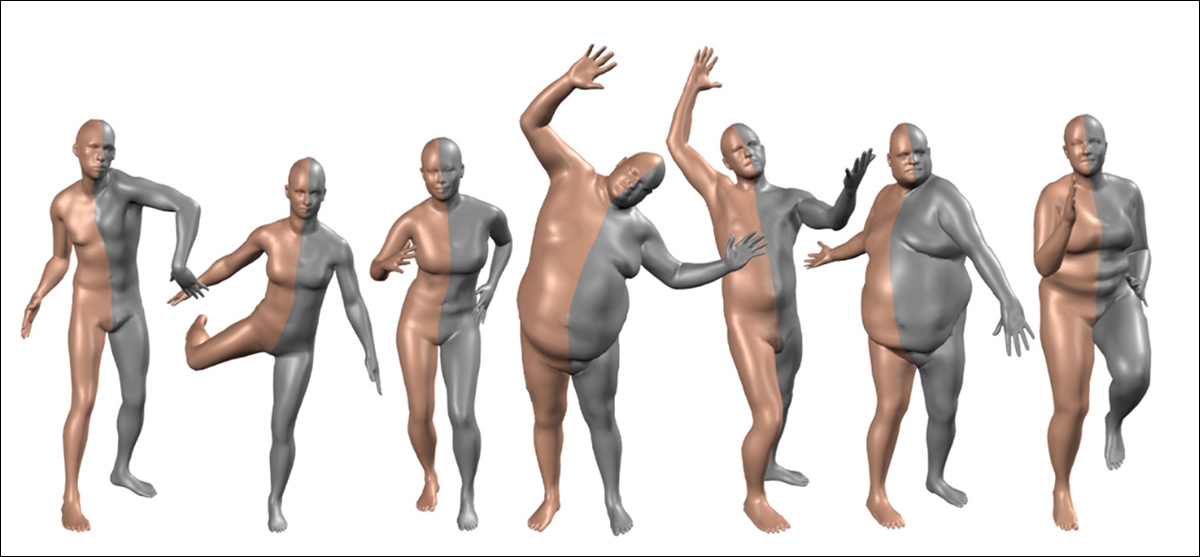

Parametric, vector-based CGI models such as SMPL and SMPL-X (among others) provide defined conventional physical 3D coordinates which can be interpreted and incorporated into computer vision frameworks. Source: https://files.is.tue.mpg.de/black/papers/SMPL2015.pdf

Since it’s important that the AI learn to change only the desired aspects, instead of learning to warp backgrounds and to replicate other undesired errors, no body-altering system has yet arrived at a perfect solution.

One recent paper from India, however, does propose a notable advance on the state-of-the-art through the use of the older Flux diffusion model framework, augmented with a number of secondary approaches that enable a superior and more consistent paired dataset:

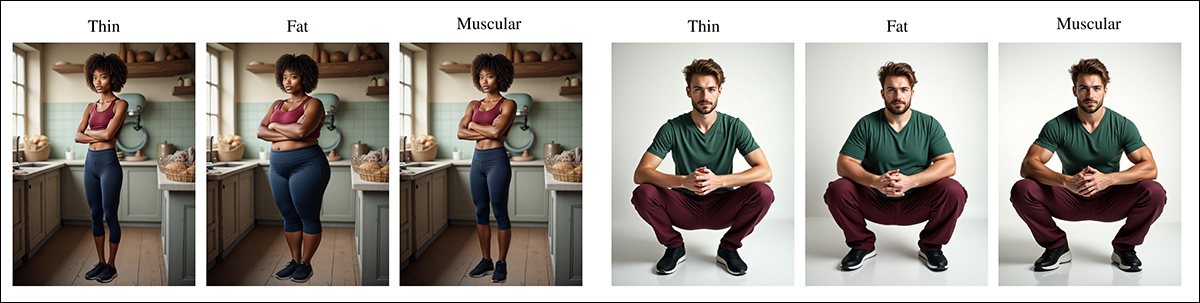

Dataset examples from the new project. Source: https://arxiv.org/pdf/2508.13065

The project comprises a new and extensive paired dataset; Odo, a generative diffusion model trained on this data; and a bespoke new benchmark designed to quantitatively assess human shape-editing performance. In tests, the authors claim a notable advance on standards achieved by comparable models.

The new paper is titled Odo: Depth-Guided Diffusion for Identity-Preserving Body Reshaping, and comes from three researchers at Fast Code AI Pvt. Ltd in Bangalore.

Data and Method

The dataset created by the researchers features 7,615 high-resolution (960×1280px) images for each target body type (fat, thin, and muscular).

Initially, 1,523 human faces were generated with the 12-billion parameter FLUX.1-dev diffusion model, drawing on an unspecified number of license-free reference faces from Pexels and Unsplash to increase diversity.

To generate full-body images incorporating these faces, the researchers used ByteDance’s 2024 offering PuLID, a checkpoint fine-tuned over base Flux, and featuring a contrastive ID loss designed to help conserve facial identity during transformational processes:

Examples from the PuLID project. Source: https://arxiv.org/pdf/2404.16022

The model received a facial image and a standardized prompt specifying gender, clothing, pose, and scene, as well as a body type of thin, fat, or muscular.

The three body-type images for each identity sometimes exhibited minor shifts in background alignment and perceived subject size, arising from the stochastic behavior of diffusion models, where each generation begins from a new noise seed. Even slight changes to the prompt, such as modifying the body type description, can influence the model’s trajectory in latent space, and cause visual drift.

To correct for this variation, a four-stage automatic post-processing pipeline was applied, with the thin image in each triplet selected as the reference, since its smaller silhouette exposed more background.

Person detection was performed using RT-DETRv2, followed by segmentation with SAM 2.1 to extract subject masks for all three body types. The thin reference image was then passed to FLUX.1 Kontext Pro (the newer image-editing system) for background inpainting, producing a clean version of the scene, with the subject removed.

The fat and muscular variants were resized using uniform scaling to match the height of the thin reference mask, and composited onto the clean background at the same bottom alignment, ensuring consistent framing across all images.

The authors state:

‘The resulting transformation triplets (thin, fat and muscle) have an identical background and uniform subject scale. This removes irrelevant variations that could negatively affect subsequent training or evaluation.’
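The scale-and-align step can be illustrated in a few lines. This is a minimal sketch rather than the authors' code: the function names (`mask_bbox`, `alignment_for`, `rect_mask`) are hypothetical, masks are plain nested lists of 0/1 instead of SAM 2.1 outputs, and horizontal centering on the reference subject is an assumption, since the description above only specifies height matching and bottom alignment.

```python
def mask_bbox(mask):
    """Return (top, left, bottom, right) of the nonzero region; bottom/right are exclusive."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask is empty")
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


def alignment_for(variant_mask, reference_mask):
    """Uniform scale, paste offset and scaled size for a variant subject.

    The variant is scaled so its height equals the reference subject's
    height, then placed so both subjects share the same bottom edge.
    """
    v_top, v_left, v_bottom, v_right = mask_bbox(variant_mask)
    r_top, r_left, r_bottom, r_right = mask_bbox(reference_mask)

    scale = (r_bottom - r_top) / (v_bottom - v_top)
    scaled_w = round((v_right - v_left) * scale)
    scaled_h = r_bottom - r_top

    # Assumption: centre horizontally on the reference subject.
    ref_centre_x = (r_left + r_right) / 2
    paste_left = round(ref_centre_x - scaled_w / 2)
    paste_top = r_bottom - scaled_h  # bottoms coincide
    return scale, (paste_left, paste_top), (scaled_w, scaled_h)


def rect_mask(height, width, top, left, bottom, right):
    """Toy rectangular 'subject' mask used only for this example."""
    return [
        [1 if top <= y < bottom and left <= x < right else 0 for x in range(width)]
        for y in range(height)
    ]


thin = rect_mask(12, 12, 2, 5, 10, 7)  # reference subject: 8 px tall, 2 px wide
fat = rect_mask(12, 12, 1, 3, 5, 7)    # variant subject: 4 px tall, 4 px wide

scale, offset, size = alignment_for(fat, thin)
print(scale, offset, size)  # 2.0 (2, 2) (8, 8)
```

In a real pipeline the returned scale and offset would drive an image resize and an alpha-composite onto the inpainted background; those steps are omitted here to keep the sketch dependency-free.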

Each triplet of thin, fat, and muscular images allowed six possible transformation pairs, resulting in 45,690 theoretical combinations across 7,615 identities.

After filtering out examples with mismatched clothing, unnatural poses, distorted limbs, identity drift, or minimal shape change, 18,573 high-quality pairs were retained. Though some minor pose differences remained, the model would prove robust to these variations.
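The pair counts quoted above follow from simple combinatorics, since each ordered (source, target) pairing counts separately: thin-to-fat is a different training example from fat-to-thin. The short check below uses only the figures given in this article; the variable names are illustrative.

```python
from itertools import permutations

BODY_TYPES = ("thin", "fat", "muscular")

# Ordered (source, target) pairs within one triplet.
pairs_per_triplet = list(permutations(BODY_TYPES, 2))

num_triplets = 7_615     # triplets, as quoted in the article
retained_pairs = 18_573  # pairs kept after filtering, as quoted in the article

theoretical_pairs = num_triplets * len(pairs_per_triplet)
retention_rate = retained_pairs / theoretical_pairs

print(len(pairs_per_triplet))  # 6
print(theoretical_pairs)       # 45690
print(round(retention_rate, 2))  # 0.41
```

In other words, the filtering stage kept roughly two in five of the theoretically available pairs.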

Training and Tests

The resulting images were used to train the Odo model – a diffusion-based approach to reshaping humans, with the use of Skinned Multi-Person Linear Model (SMPL, i.e., intermediate CGI) maps.

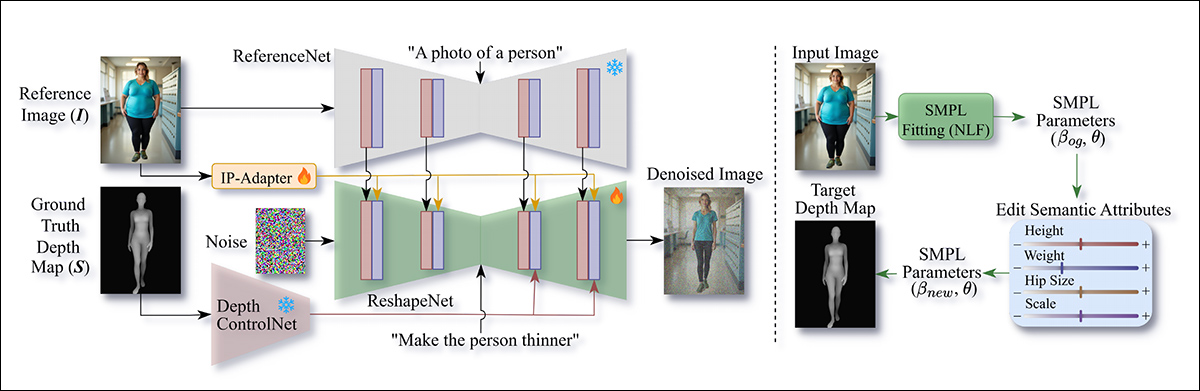

Informed by the 2024 Neural Localizer’s methods, the data was conformed to the SMPL figure on a per-individual basis, with the resulting optimized parameters capable of producing depth maps from which the altered images would be derived:

Schema for the training pipeline. The left side shows the training setup, where SMPL depth maps from the target image guide ReshapeNet via ControlNet to perform body transformation. Features from the source image are extracted by ReferenceNet and merged into ReshapeNet using spatial self-attention. The right side shows inference, where SMPL parameters are estimated from the input image, modified by semantic attributes, and rendered into a target depth map that conditions ReshapeNet during denoising, to produce the final transformed image.

The model (see schema above) comprises the ReshapeNet module, supported by three ancillary modules: ReferenceNet; an IP-Adapter module; and a depth-based ControlNet module.

The ReferenceNet extracts detailed features such as background, clothing, and identity from the input image, and passes them to ReshapeNet. IP-Adapter contributes high-level feature guidance, while the Depth ControlNet applies SMPL-based conditioning to guide the body transformation. In line with previous works, an SDXL-based frozen UNet was used to extract intermediate features.

As for the IP-Adapter module, this encodes the input image via CLIP, with the resulting embeddings integrated back into ReshapeNet via cross-attention.

As for the Depth ControlNet module, this guides the middle and decoder layers of ReshapeNet using residual connections. Subsequently, it takes a depth map rendered from the target SMPL parameters, and aligns it with the target image.

ReshapeNet, based on the SDXL UNet, is the core network of Odo. During training, target images are encoded into latent space with a variational autoencoder, noised over time, and then denoised by ReshapeNet using features from ControlNet and ReferenceNet.
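The 'noised over time, then denoised' loop can be made concrete with a toy example. The sketch below assumes the generic DDPM forward process, a linear beta schedule, and a noise-prediction loss; none of these specifics are confirmed by the article, and the four-element list stands in for a real VAE latent. The `dummy_prediction` is a placeholder for what a network such as ReshapeNet would output.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Generic DDPM linear schedule (an assumption, not the paper's setting)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for all s <= t."""
    alpha_bars, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(latent, t, alpha_bars, rng):
    """Forward process: x_t = sqrt(a_bar_t) * x_0 + sqrt(1 - a_bar_t) * eps."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in latent]
    noisy = [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
        for x, e in zip(latent, noise)
    ]
    return noisy, noise


def mse(prediction, target):
    """Mean squared error between predicted and true noise."""
    return sum((p - q) ** 2 for p, q in zip(prediction, target)) / len(target)


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))

latent = [0.5, -1.0, 0.25, 2.0]     # stand-in for an encoded target image
t = rng.randrange(len(alpha_bars))  # random timestep, as in training
noisy, noise = add_noise(latent, t, alpha_bars, rng)

# A real denoiser would predict `noise` from `noisy`, the timestep and the
# conditioning features; zeros are used here purely as a placeholder.
dummy_prediction = [0.0] * len(noise)
loss = mse(dummy_prediction, noise)
```

The conditioning signals described above (ReferenceNet features, the ControlNet depth residuals, and the text prompt) would enter as extra inputs to the denoiser, which this sketch deliberately leaves out.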

Category-specific text prompts such as ‘Make the person fatter,’ ‘Make the person thinner,’ or ‘Make the person muscular’ were added, to guide transformations. While the depth maps captured coarse body shapes, the prompts provided the semantic detail needed for changes such as muscle definition, allowing the model to produce more accurate and realistic modifications.

Training Implementation

Odo was trained on the project’s synthetic dataset, combined with a subset of the DeepFashion-MultiModal dataset, yielding a total of 20,000 image pairs.

The DeepFashion-MultiModal data provided variety in garments and facial features, with images paired against themselves during training. With all SMPL depth maps precomputed for efficiency, training ran for 60 epochs on a single NVIDIA A100 GPU with 80GB of VRAM.

With the input images resized to 768×1024, the Adam optimizer was used, at a learning rate of 1×10⁻⁵. ReshapeNet was initialized with SDXL UNet weights, and fine-tuned jointly with the IP-Adapter from its checkpoint.

ReferenceNet was initialized with SDXL weights and kept frozen, while the Depth ControlNet used pre-trained weights and also remained frozen.

The final model required about 23GB of GPU memory, and took 18 seconds for single-image inference.

A Novel Metric

The lack of datasets of the kind required for this type of project meant that no existing metrics really addressed the challenge. Therefore the authors devised a novel benchmark, comprising 3,600 image pairs, featuring real face images and background descriptions, along with diverse body shape variations.

Other metrics used were Structural Similarity Index (SSIM); Peak Signal-to-Noise Ratio (PSNR); Learned Perceptual Image Patch Similarity (LPIPS); and Scale Corrected Per-Vertex Euclidean error in neutral (T-)pose (PVE-T-SC).
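Of these metrics, PSNR is the simplest to state: it is a log-scaled ratio between the maximum possible pixel value and the mean squared error between two images, with higher values indicating a closer match. The sketch below is a generic stdlib implementation over flattened pixel lists, not the authors' evaluation code; SSIM and LPIPS need considerably more machinery (LPIPS relies on a learned network) and are not shown.

```python
import math


def psnr(image_a, image_b, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel lists."""
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


black = [0.0, 0.0, 0.0, 0.0]
white = [255.0, 255.0, 255.0, 255.0]

print(psnr(black, black))  # inf
print(psnr(black, white))  # 0.0
```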

First, the authors tested their method qualitatively against in-the-wild images (images not seen by the model during training):

Qualitative tests. The examples show conversions from the original image to thinner, overweight, and muscular body types across different poses, including sitting and standing. Please refer to the source paper for better definition and detail.

Of these results, the paper states:

‘[Our] method effectively handles diverse poses, backgrounds, and clothing while preserving person identity.

‘In addition to SMPL target shapes, we provide textual prompts – ‘Make the person fatter,’ ‘Make the person thinner,’ or ‘Make the person muscular’– to explicitly guide the desired transformations…

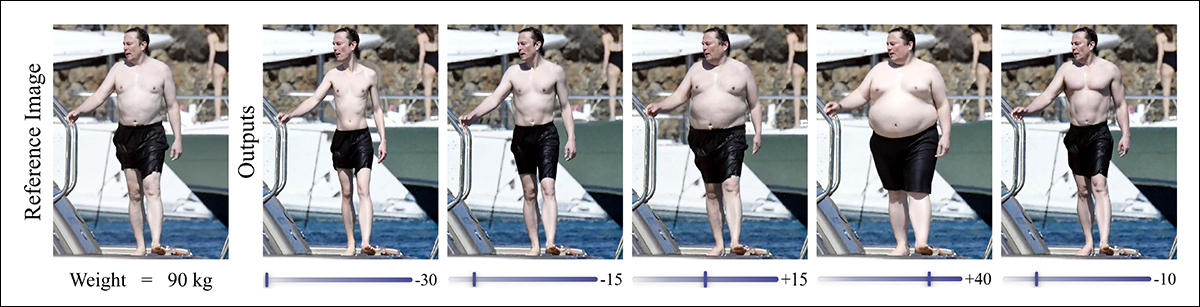

…'[The image below] further demonstrates our model’s capability to perform diverse shape transformations. The model accurately follows SMPL depth maps to generate multiple variations of thinner and fatter versions from the reference image.’

Further qualitative tests covering the range of target body types. Please refer to the source paper for better definition and detail.

The authors further comment:

‘Our results demonstrate more realistic transformations according to the target weight, as our model simultaneously adjusts overall body shape, limb proportions, and clothing, resulting in anatomically consistent and visually convincing modifications.’

For quantitative tests, the authors pitted their system against the open source FLUX.1 Kontext [dev] model, and the 2022 offering Structure-Aware Flow Generation for Human Body Reshaping.

For FLUX.1 Kontext [dev], prompts were designed to instruct ‘Make the person fatter,’ ‘Make the person thinner,’ or ‘Make the person muscular’, with target weights specified – though the lack of fine-grained controls limited performance:

![Comparison of Odo with Structure-Aware Flow Generation for Human Body Reshaping and FLUX.1 Kontext [dev] on the test set, along with ablation results for models trained without prompt conditioning in ReshapeNet, without ReferenceNet (using only IP-Adapter), and with training restricted to the BR-5K dataset. The table also includes material related to ablation studies (BR-5K), which we are not covering here.](https://www.unite.ai/wp-content/uploads/2025/08/table-2-1.jpg)

Comparison of Odo with Structure-Aware Flow Generation for Human Body Reshaping and FLUX.1 Kontext [dev] on the test set, along with ablation results (not covered in this article) for models trained without prompt conditioning in ReshapeNet, without ReferenceNet (using only IP-Adapter), and with training restricted to the BR-5K dataset.

Conclusion

The advent of Flux Kontext this year, and even more recently the release of the unquantized weights for Qwen Image Edit, have brought paired image data back to the forefront of the hobbyist and professional communities. In the climate of growing criticism of and impatience regarding the imprecision of generative AI, models of this kind are designed for much higher fidelity to the input source images (though smaller-scale models are sometimes hamstrung by their very specific training goals).

In this case, the utility of a body-shaping system would seem to lie in psychological, medical, and fashion-based fields. Nonetheless, it remains possible that systems of this kind will achieve a higher level of prominence, and perhaps a more casual and even potentially concerning set of uses.

First published Monday, August 25, 2025