
A Full Guide to Fine-Tuning Large Language Models

By Aayush Mittal

Large language models (LLMs) like GPT-4, LaMDA, PaLM, and others have taken the world by storm with their remarkable ability to understand and generate human-like text on a vast range of topics. These models are pre-trained on massive datasets comprising billions of words from the internet, books, and other sources.

This pre-training phase imbues the models with extensive general knowledge about language, topics, reasoning abilities, and even certain biases present in the training data. However, despite their incredible breadth, these pre-trained LLMs often lack specialized expertise for specific domains or tasks.

This is where fine-tuning comes in – the process of adapting a pre-trained LLM to excel at a particular application or use case. By further training the model on a smaller, task-specific dataset, we can tune its capabilities to align with the nuances and requirements of that domain.

Fine-tuning is analogous to channeling the wide-ranging knowledge of a highly educated generalist into the expertise of a specialist in a particular field. In this guide, we’ll explore the whats, whys, and hows of fine-tuning LLMs.

What is Fine-Tuning?

At its core, fine-tuning involves taking a large pre-trained model and updating its parameters using a second training phase on a dataset tailored to your target task or domain. This allows the model to learn and internalize the nuances, patterns, and objectives specific to that narrower area.

While pre-training captures broad language understanding from a huge and diverse text corpus, fine-tuning specializes that general competency. It’s akin to taking a Renaissance man and molding them into an industry expert.

The pre-trained model’s weights, which encode its general knowledge, are used as the starting point or initialization for the fine-tuning process. The model is then trained further, but this time on examples directly relevant to the end application.

By exposing the model to this specialized data distribution and tuning the model parameters accordingly, we make the LLM more accurate and effective for the target use case, while still benefiting from the broad pre-trained capabilities as a foundation.
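The idea of reusing pre-trained weights as the starting point can be illustrated with a toy model. The sketch below is hypothetical and deliberately tiny, not an LLM: a one-feature linear model is first "pre-trained" on a broad synthetic dataset, then trained further on a small task dataset whose relationship is slightly different. The function names, data, learning rate, and epoch counts are all assumptions made for illustration.

```python
import copy


def train(params, data, lr=0.05, epochs=200):
    """Gradient descent on mean squared error for the toy model y = w * x + b.

    Returns an updated copy, so the input dict is left unchanged.
    """
    params = copy.deepcopy(params)
    n = len(data)
    for _ in range(epochs):
        # Compute all errors first so w and b are updated from the same state.
        errors = [(params["w"] * x + params["b"] - y, x) for x, y in data]
        grad_w = sum(2 * err * x for err, x in errors) / n
        grad_b = sum(2 * err for err, _ in errors) / n
        params["w"] -= lr * grad_w
        params["b"] -= lr * grad_b
    return params


def mse(params, data):
    return sum((params["w"] * x + params["b"] - y) ** 2 for x, y in data) / len(data)


# "Pre-training": a broad dataset following y = 2x + 1 (hypothetical).
general_data = [(i / 10, 2 * (i / 10) + 1) for i in range(-20, 21)]
pretrained = train({"w": 0.0, "b": 0.0}, general_data)

# "Fine-tuning": a small task dataset following y = 2.5x + 1 (hypothetical).
task_data = [(0.0, 1.0), (0.5, 2.25), (1.0, 3.5), (1.5, 4.75)]
finetuned = train(pretrained, task_data, epochs=300)  # initialised from pre-trained weights

print("pre-trained error on task:", mse(pretrained, task_data))
print("fine-tuned error on task: ", mse(finetuned, task_data))
```

The fine-tuning call starts from `pretrained` rather than from zeros, which mirrors the role the pre-trained weights play as the initialization for an LLM's second training phase.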

Why Fine-Tune LLMs?

There are several key reasons why you may want to fine-tune a large language model:

- Domain Customization: Every field, from legal to medicine to software engineering, has its own nuanced language conventions, jargon, and contexts. Fine-tuning allows you to customize a general model to understand and produce text tailored to the specific domain.

- Task Specialization: LLMs can be fine-tuned for various natural language processing tasks like text summarization, machine translation, question answering and so on. This specialization boosts performance on the target task.

- Data Compliance: Highly regulated industries like healthcare and finance have strict data privacy requirements. Fine-tuning a model on infrastructure the organization controls allows training on proprietary data without handing it to a third-party service, although sensitive information still needs its own safeguards.

- Limited Labeled Data: Obtaining large labeled datasets for training models from scratch can be challenging. Fine-tuning allows achieving strong task performance from limited supervised examples by leveraging the pre-trained model’s capabilities.

- Model Updating: As new data becomes available over time in a domain, you can fine-tune models further to incorporate the latest knowledge and capabilities.

- Mitigating Biases: LLMs can pick up societal biases from broad pre-training data. Fine-tuning on curated datasets can help reduce and correct these undesirable biases.

In essence, fine-tuning bridges the gap between a general, broad model and the focused requirements of a specialized application. It enhances the accuracy, safety, and relevance of model outputs for targeted use cases.

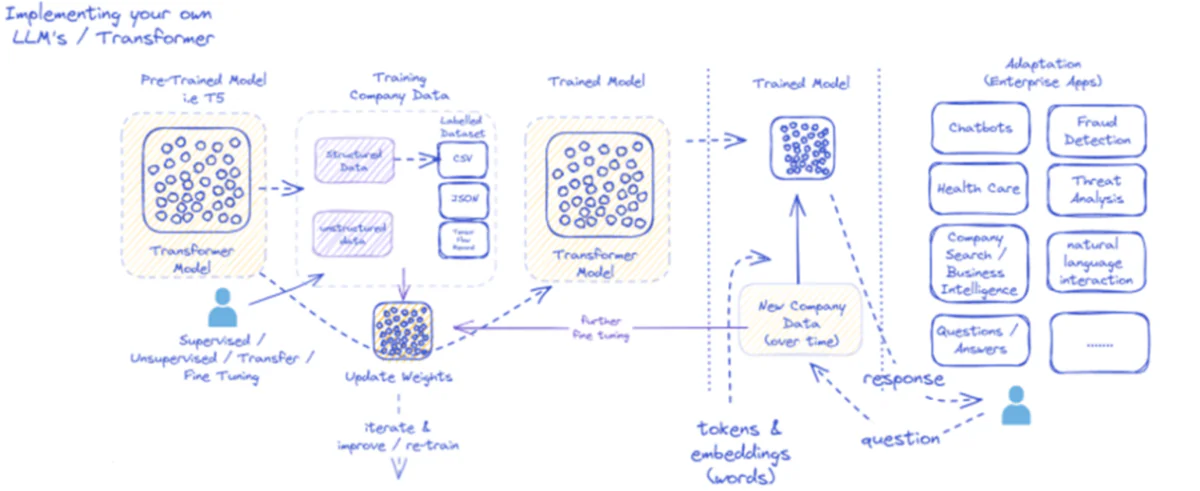

The provided diagram outlines the process of implementing and utilizing large language models (LLMs), specifically for enterprise applications. Initially, a pre-trained model like T5 is fed structured and unstructured company data, which may come in various formats such as CSV or JSON. This data undergoes supervised, unsupervised, or transfer fine-tuning processes, enhancing the model’s relevance to the company’s specific needs.

Once the model is fine-tuned with the company data, its weights are updated accordingly. The trained model then iterates through further training cycles, continually improving its responses over time with new company data. The process is iterative and dynamic, with the model learning and retraining to adapt to evolving data patterns.

The trained model's outputs (tokens, and embeddings representing words) then power a range of enterprise applications, from general-purpose chatbots to domain-specific tools, each requiring the model to understand and respond to industry-specific queries. In finance, applications include fraud detection and threat analysis; in healthcare, models can assist with patient inquiries and diagnostics.

Because the model can keep being retrained on new company data, its usefulness can be sustained and can grow over time. Enterprise users interact with the model through these applications, asking questions and receiving informed responses that reflect its training and fine-tuning on domain-specific data.

This infrastructure supports a broad range of enterprise applications, showcasing the versatility and adaptability of LLMs when properly implemented and maintained within a business context.

Fine-Tuning Approaches

There are two primary strategies when it comes to fine-tuning large language models:

1) Full Model Fine-tuning

In the full fine-tuning approach, all the parameters (weights and biases) of the pre-trained model are updated during the second training phase. The model is exposed to the task-specific labeled dataset, and the standard training process optimizes the entire model for that data distribution.

This allows the model to make more comprehensive adjustments and adapt holistically to the target task or domain. However, full fine-tuning has some downsides:

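- It requires significant computational resources and memory. Although the training run is far shorter than pre-training, gradients and optimizer states must be kept for every parameter, so per-step memory demands are comparable to those of pre-training.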

- The storage requirements are high, as you need to maintain a separate fine-tuned copy of the model for each task.

- There is a risk of “catastrophic forgetting”, where fine-tuning causes the model to lose some general capabilities learned during pre-training.

Despite these limitations, full fine-tuning remains a powerful and widely used technique when resources permit and the target task diverges significantly from general language.
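To put the storage downside of full fine-tuning in numbers, the short calculation below estimates the disk space needed to keep one full copy of the weights per task. The 7-billion-parameter size, 16-bit (2-byte) weights, and task counts are illustrative assumptions, not figures for any specific model.

```python
def full_finetune_storage_gb(num_params, num_tasks, bytes_per_param=2):
    """Storage for one full copy of the weights per fine-tuned task, in GB (10**9 bytes)."""
    return num_params * bytes_per_param * num_tasks / 10**9


# Hypothetical setup: a 7B-parameter model stored in 16-bit precision.
print(full_finetune_storage_gb(7_000_000_000, 1))  # 14.0 GB for one task
print(full_finetune_storage_gb(7_000_000_000, 5))  # 70.0 GB for five tasks
```

This counts only the saved weights. During training, memory needs are higher still, since gradients and optimizer states (Adam, for instance, keeps two extra values per parameter) must also be held.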

2) Efficient Fine-Tuning Methods

To overcome the computational challenges of full fine-tuning, researchers have developed efficient strategies that only update a small subset of the model’s parameters during fine-tuning. These parameter-efficient techniques strike a balance between specialization and reduced resource requirements.

Some popular efficient fine-tuning methods include:

Prefix-Tuning: Here, a small number of task-specific vectors or “prefixes” are introduced and trained to condition the pre-trained model’s attention for the target task. Only these prefixes are updated during fine-tuning.
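A minimal sketch of the prefix idea for a single attention query, in plain Python. It assumes single-head scaled dot-product attention with tiny hand-picked vectors; the `attention` helper and the prefix values are hypothetical. The trainable prefix vectors are prepended to the keys and values that the frozen model computes, so the query can attend to them as if they were extra tokens, while the model's own weights stay untouched. A real implementation does this at every layer.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Keys/values produced by the frozen pre-trained model for the real input tokens.
frozen_keys = [[1.0, 0.0], [0.0, 1.0]]
frozen_values = [[1.0, 2.0], [3.0, 4.0]]
query = [0.5, 0.5]

# Trainable prefix: extra key/value vectors (hand-set here; learned during prefix-tuning).
prefix_keys = [[2.0, 2.0]]
prefix_values = [[10.0, 10.0]]

base_output = attention(query, frozen_keys, frozen_values)
prefixed_output = attention(query, prefix_keys + frozen_keys, prefix_values + frozen_values)
print(base_output, prefixed_output)
```

The prefix shifts the attention output toward its own values without modifying `frozen_keys` or `frozen_values`, which is how it conditions the pre-trained model on the target task.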

LoRA (Low-Rank Adaptation): LoRA freezes the pre-trained weights and injects trainable low-rank matrices alongside selected weight matrices, typically the attention projections in each transformer layer. These low-rank updates specialize the model with far fewer trainable parameters than full fine-tuning.

LoRA is a popular parameter-efficient fine-tuning (PEFT) technique that has gained significant traction for adapting large language models (LLMs). Below is a closer look at how it works, including its mathematical formulation and a code example.

What is LoRA?

LoRA is a fine-tuning method that introduces a small number of trainable parameters to the pre-trained LLM, allowing for efficient adaptation to downstream tasks while preserving the majority of the original model’s knowledge. Instead of fine-tuning all the parameters of the LLM, LoRA injects task-specific low-rank matrices into the model’s layers, enabling significant computational and memory savings during the fine-tuning process.

Mathematical Formulation

LoRA introduces a low-rank update to the model's weight matrices. For a pre-trained weight matrix $W_{0} \in \mathbb{R}^{d \times k}$, LoRA adds the product $BA$ of two small matrices $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, where the rank $r \ll \min(d, k)$. During fine-tuning $W_{0}$ stays frozen and only $A$ and $B$ are trained, which sharply reduces the number of trainable parameters and enables efficient adaptation to downstream tasks with minimal computational resources. The updated weight matrix is

$$W = W_{0} + BA$$

This low-rank update can be interpreted as modifying the original weight matrix $W_{0}$ by adding a low-rank matrix $BA$. The key advantage of this formulation is that instead of updating all $d \times k$ parameters in $W_{0}$, LoRA only needs to optimize $r \times (d + k)$ parameters in $A$ and $B$, significantly reducing the number of trainable parameters.
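As a worked example of this parameter count, take a single square projection matrix with $d = k = 4096$ and rank $r = 8$. These values are illustrative, not tied to a specific model.

```python
def lora_param_counts(d, k, r):
    """Return (full, lora) trainable parameter counts for one d x k weight matrix."""
    full = d * k        # every entry of W0 is updated in full fine-tuning
    lora = r * (d + k)  # B is d x r and A is r x k
    return full, lora


full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```

For this one matrix, LoRA trains 256 times fewer parameters; the savings grow as $r$ shrinks relative to $d$ and $k$.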

Here’s an example in Python that uses the Hugging Face peft library to apply LoRA to a pre-trained BERT model for text classification:

```python
from transformers import AutoModelForSequenceClassification
from peft import get_peft_model, LoraConfig, TaskType

# Load a pre-trained BERT model with a 2-class classification head
model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)

# Define the LoRA configuration
peft_config = LoraConfig(
    task_type=TaskType.SEQ_CLS,
    r=8,                                # Rank of the low-rank update
    lora_alpha=16,                      # Scaling factor for the low-rank update
    target_modules=["query", "value"],  # BERT's attention query and value projections
)

# Create the LoRA-enabled model: base weights are frozen, LoRA matrices are trainable
model = get_peft_model(model, peft_config)
model.print_trainable_parameters()

# Fine-tune the model with LoRA
# ... (training code omitted for brevity)
```

In this example, we load a pre-trained BERT model for sequence classification and define a LoRA configuration. The r parameter specifies the rank of the low-rank update, and lora_alpha is a scaling factor: by default peft scales the update by lora_alpha / r, so r=8 and lora_alpha=16 give a scaling of 2. The target_modules parameter lists which submodules receive the low-rank updates; these names depend on the architecture, so they must match the layer names of the model being loaded. After creating the LoRA-enabled model, we can proceed with fine-tuning using the standard training procedure.
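To make the roles of `r` and `lora_alpha` concrete, here is a framework-free sketch of a LoRA-adapted linear layer. It applies the scaling factor $\alpha / r$ to the update, so the layer computes $W_0 x + \frac{\alpha}{r} B A x$, and, following the LoRA paper, $B$ starts at zero so the adapted layer initially behaves exactly like the pre-trained one. The matrices are tiny hand-picked examples and the class and helper names are hypothetical, not the peft API. Because the update is an ordinary matrix, it can be merged into $W_0$ after training.

```python
def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


class LoRALinear:
    """y = W0 x + (alpha / r) * B (A x), with W0 frozen and A, B trainable."""

    def __init__(self, W0, A, alpha):
        self.W0 = W0                      # frozen d x k pre-trained weight
        self.A = A                        # trainable r x k
        r = len(A)
        self.B = [[0.0] * r for _ in W0]  # trainable d x r, zero-initialised
        self.scaling = alpha / r

    def forward(self, x):
        base = matvec(self.W0, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def merged_weight(self):
        """Fold the scaled low-rank update into W0 so inference needs one matmul."""
        BA = matmul(self.B, self.A)
        return [[w + self.scaling * ba for w, ba in zip(w_row, ba_row)]
                for w_row, ba_row in zip(self.W0, BA)]


W0 = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # d = 2, k = 3
A = [[0.1, 0.2, 0.3]]                     # r = 1
layer = LoRALinear(W0, A, alpha=2)
x = [1.0, 2.0, 3.0]

print(layer.forward(x) == matvec(W0, x))  # True: B is zero, so the output is unchanged

layer.B = [[0.5], [-0.5]]  # pretend training has updated B
print(layer.forward(x), matvec(layer.merged_weight(), x))  # equal up to rounding
```

Merging is why a LoRA model can be served with no extra inference latency: after training, the adapted layer is again a single $d \times k$ matrix.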

Adapter Layers: Similar to LoRA, but instead of low-rank updates, thin “adapter” layers are inserted within each transformer block of the pre-trained model. Only the parameters of these few new compact layers are trained.
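A minimal sketch of a bottleneck adapter in plain Python: a down-projection to a small dimension, a nonlinearity, an up-projection back to the model dimension, and a residual connection. The sizes (768 and 16), the ReLU, the initialization scheme, and the function names are illustrative assumptions, and biases are omitted for brevity. Initializing the up-projection to zero is one common choice that makes the adapter start as an identity map, so inserting it does not change the frozen model's behavior before training.

```python
import random


def relu(v):
    return [max(0.0, x) for x in v]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def make_adapter(hidden_dim, bottleneck_dim, seed=0):
    """Create the adapter's weights: the only new parameters that get trained."""
    rng = random.Random(seed)
    down = [[rng.gauss(0.0, 0.02) for _ in range(hidden_dim)] for _ in range(bottleneck_dim)]
    up = [[0.0] * bottleneck_dim for _ in range(hidden_dim)]  # zero init: identity at start
    return {"down": down, "up": up}


def adapter_forward(adapter, h):
    """h + up(relu(down(h))), applied to the output of a frozen transformer sub-layer."""
    z = relu(matvec(adapter["down"], h))
    delta = matvec(adapter["up"], z)
    return [a + b for a, b in zip(h, delta)]


hidden_dim, bottleneck_dim = 768, 16
adapter = make_adapter(hidden_dim, bottleneck_dim)
h = [0.1] * hidden_dim  # stand-in for a hidden state from the frozen model

print(adapter_forward(adapter, h) == h)  # True before any training
print(2 * hidden_dim * bottleneck_dim)   # 24576 adapter weights vs. 589824 in one 768 x 768 matrix
```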

Prompt Tuning: This approach keeps the pre-trained model frozen completely. Instead, trainable “prompt” embeddings are introduced as input to activate the model’s pre-trained knowledge for the target task.
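A sketch of the core prompt-tuning mechanism: a small matrix of trainable "soft prompt" vectors is prepended to the frozen model's token embeddings, and only that matrix would receive gradient updates. The toy vocabulary, embedding size, prompt length, and helper names are hypothetical; no real model is involved.

```python
import random

EMBED_DIM = 4
NUM_VIRTUAL_TOKENS = 3  # soft prompt length (a tunable hyperparameter)

rng = random.Random(0)

# Frozen embedding table of the pre-trained model (toy vocabulary).
vocab = {"the": 0, "movie": 1, "was": 2, "great": 3}
frozen_embeddings = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in vocab]

# Trainable soft prompt: the only parameters prompt tuning updates.
soft_prompt = [[rng.uniform(-0.5, 0.5) for _ in range(EMBED_DIM)]
               for _ in range(NUM_VIRTUAL_TOKENS)]


def build_model_input(tokens):
    """Prepend the soft prompt to the frozen embeddings of the real input tokens."""
    token_vectors = [frozen_embeddings[vocab[t]] for t in tokens]
    return soft_prompt + token_vectors


inputs = build_model_input(["the", "movie", "was", "great"])
print(len(inputs))                     # 7: 3 virtual tokens + 4 real tokens
print(NUM_VIRTUAL_TOKENS * EMBED_DIM)  # 12 trainable values; the model stays untouched
```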

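These efficient methods can cut the number of trainable parameters by orders of magnitude and substantially reduce memory requirements compared to full fine-tuning, while still achieving competitive performance on many tasks. They also reduce storage needs, since each task only requires its small set of new parameters rather than a full copy of the model.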

However, their performance may lag behind full fine-tuning for tasks that are vastly different from general language or require more holistic specialization.

The Fine-Tuning Process

Regardless of the fine-tuning strategy, the overall process for specializing an LLM follows a general framework:

- Dataset Preparation: You’ll need to obtain or create a labeled dataset that maps inputs (prompts) to desired outputs for your target task. For text generation tasks like summarization, this would be input text to summarized output pairs.

- Dataset Splitting: Following best practices, split your labeled dataset into train, validation, and test sets. This separates data for model training, hyperparameter tuning, and final evaluation.

- Hyperparameter Tuning: Parameters like learning rate, batch size, and training schedule need to be tuned for the most effective fine-tuning on your data. This usually involves a small validation set.

- Model Training: Using the tuned hyperparameters, run the fine-tuning optimization process on the full training set until the model’s performance on the validation set stops improving (early stopping).

- Evaluation: Assess the fine-tuned model’s performance on the held-out test set, ideally comprising real-world examples for the target use case, to estimate real-world efficacy.

- Deployment and Monitoring: Once satisfactory, the fine-tuned model can be deployed for inference on new inputs. It’s crucial to monitor its performance and accuracy over time for concept drift.
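The splitting and early-stopping steps above can be sketched in plain Python. The 80/10/10 ratios, the `patience` value, and the helper names are illustrative assumptions, the summarization pairs are placeholders, and the validation losses are made-up numbers standing in for real evaluations. In practice a training framework would usually supply both utilities.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle once with a fixed seed, then cut into train / validation / test sets."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def early_stopping_epoch(val_losses, patience=2):
    """Return the best epoch, stopping once `patience` epochs pass without improvement."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss stopped improving
    return best_epoch


pairs = [{"input": f"document {i}", "output": f"summary {i}"} for i in range(100)]
train, val, test = split_dataset(pairs)
print(len(train), len(val), len(test))  # 80 10 10

# Hypothetical validation losses recorded after each epoch.
print(early_stopping_epoch([0.90, 0.71, 0.65, 0.66, 0.67, 0.60]))  # 2
```

With `patience=2`, training stops after two epochs without improvement and keeps the checkpoint from epoch 2, even though a later epoch might have improved; larger patience values trade extra compute for a lower risk of stopping too early.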

While this outlines the overall process, many nuances can impact fine-tuning success for a particular LLM or task. Strategies like curriculum learning, multi-task fine-tuning, and few-shot prompting can further boost performance.

Additionally, efficient fine-tuning methods involve extra considerations. For example, LoRA requires choosing the rank, the scaling factor, and which weight matrices to adapt, as well as deciding whether to merge the learned update into the base weights for deployment. Prompt tuning needs a well-chosen soft prompt length and initialization to activate the right behaviors.

Advanced Fine-Tuning: Incorporating Human Feedback

While standard supervised fine-tuning using labeled datasets is effective, an exciting frontier is training LLMs directly using human preferences and feedback. This human-in-the-loop approach leverages techniques from reinforcement learning:

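PPO (Proximal Policy Optimization): PPO is the reinforcement learning algorithm most commonly used in this setting. The LLM is treated as a policy whose generated outputs are “actions”. A reward model, trained to predict human ratings or preferences, scores these outputs, and PPO updates the LLM to generate outputs that maximize the reward model’s scores, usually with a penalty that keeps it from drifting too far from the original model.

RLHF (Reinforcement Learning from Human Feedback): This is the overall framework that brings these pieces together. Humans rate or compare the LLM’s outputs, a reward model is trained on those judgments, and the LLM is then optimized against the reward model, typically with PPO. Collecting fresh human feedback on the updated model’s outputs and repeating the cycle lets the reward model keep pace with the changing policy.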
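The optimization step in this setup can be sketched with two small functions. The first is PPO's clipped surrogate objective for a single action; the second is a common RLHF reward shaping in which the reward-model score is reduced by a penalty proportional to how far the fine-tuned policy's log-probability drifts from the original (reference) model's, a per-sample estimate of the KL divergence. The clip range of 0.2, the coefficient `beta`, and all numbers are illustrative assumptions; real implementations operate on batches of token-level log-probabilities.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """PPO surrogate for one action: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A).

    Training maximizes this value (equivalently, minimizes its negative).
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped_ratio * advantage)


def kl_shaped_reward(reward_model_score, logp_policy, logp_reference, beta=0.1):
    """Reward-model score minus a KL-style penalty that keeps the policy near the reference."""
    return reward_model_score - beta * (logp_policy - logp_reference)


# A well-rewarded response that became 50% more likely under the new policy:
# the ratio of 1.5 is clipped to 1.2, which caps how far one update can push it.
print(ppo_clipped_objective(math.log(0.3), math.log(0.2), advantage=2.0))
print(kl_shaped_reward(1.0, logp_policy=-1.0, logp_reference=-1.5))
```

The clipping limits how much any single update can change the policy, and the KL-style penalty discourages the model from exploiting quirks of the reward model by drifting far from its pre-trained behavior.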

While computationally intensive, these methods allow molding LLM behavior more precisely based on desired characteristics evaluated by humans, beyond what can be captured in a static dataset.

Companies like Anthropic used RLHF to imbue their language models like Claude with improved truthfulness, ethics, and safety awareness beyond just task competence.

Potential Risks and Limitations

While immensely powerful, fine-tuning LLMs is not without risks that must be carefully managed:

Bias Amplification: If the fine-tuning data contains societal biases around gender, race, age, or other attributes, the model can amplify these undesirable biases. Curating representative and de-biased datasets is crucial.

Factual Drift: Even after fine-tuning on high-quality data, language models can “hallucinate” incorrect facts or produce outputs inconsistent with the training examples, especially over longer conversations or prompts. Retrieval-augmented generation, which grounds responses in retrieved documents, may be needed.

Scalability Challenges: Full fine-tuning of huge models like GPT-3 requires immense compute resources that may be infeasible for many organizations. Efficient fine-tuning partially mitigates this but has trade-offs.

Catastrophic Forgetting: During full fine-tuning, models can lose some of the general capabilities learned during pre-training. Multi-task learning, such as mixing general-purpose examples into the fine-tuning data, or parameter-efficient methods that leave the base weights frozen, can help.

IP and Privacy Risks: Models can memorize proprietary or personal data used for fine-tuning and reproduce it in their outputs, a risk that grows when the model is released publicly. Differential privacy and other mitigation techniques are active areas of research.
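One simple form of the multi-task mitigation for catastrophic forgetting is to mix a fraction of general-purpose examples back into the fine-tuning data, often called replay or data mixing. The sketch below assumes a 20% replay share and placeholder example lists; the helper name is hypothetical, and the right ratio is task-dependent and would normally be chosen by checking both task and general performance on validation data.

```python
import random


def mix_with_replay(task_examples, general_examples, replay_ratio=0.2, seed=0):
    """Return a shuffled training set in which about `replay_ratio` of examples are general-domain."""
    rng = random.Random(seed)
    # Solve n_replay / (n_task + n_replay) = replay_ratio for n_replay.
    n_replay = round(len(task_examples) * replay_ratio / (1.0 - replay_ratio))
    n_replay = min(n_replay, len(general_examples))
    mixed = list(task_examples) + rng.sample(general_examples, n_replay)
    rng.shuffle(mixed)
    return mixed


task = [("task", i) for i in range(800)]
general = [("general", i) for i in range(5000)]
mixed = mix_with_replay(task, general)
share = sum(1 for source, _ in mixed if source == "general") / len(mixed)
print(len(mixed), share)  # 1000 0.2
```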

Overall, while exceptionally useful, fine-tuning is a nuanced process requiring care around data quality, bias and fairness, risk mitigation, and the performance-efficiency trade-offs of each use case.

The Future: Language Model Customization At Scale

Looking ahead, advancements in fine-tuning and model adaptation techniques will be crucial for unlocking the full potential of large language models across diverse applications and domains.

More efficient methods enabling fine-tuning even larger models like PaLM with constrained resources could democratize access. Automating dataset creation pipelines and prompt engineering could streamline specialization.

Self-supervised techniques to fine-tune from raw data without labels may open up new frontiers. And compositional approaches to combine fine-tuned sub-models trained on different tasks or data could allow constructing highly tailored models on-demand.

Ultimately, as LLMs become more ubiquitous, the ability to customize and specialize them seamlessly for every conceivable use case will be critical. Fine-tuning and related model adaptation strategies are pivotal steps in realizing the vision of large language models as flexible, safe, and powerful AI assistants augmenting human capabilities across every domain and endeavor.

I have spent the past five years immersing myself in the fascinating world of Machine Learning and Deep Learning. My passion and expertise have led me to contribute to over 50 diverse software engineering projects, with a particular focus on AI/ML. My ongoing curiosity has also drawn me toward Natural Language Processing, a field I am eager to explore further.
