InstantStyle: Style-Preservation in Text-to-Image Generation

Over the past few years, tuning-based diffusion models have made remarkable progress across a wide range of image personalization and customization tasks. Despite this progress, they still struggle to generate style-consistent images, for three likely reasons. First, the concept of style remains underdetermined: it spans a combination of elements including atmosphere, structure, design, material, color, and more. Second, inversion-based methods are prone to style degradation and frequently lose fine-grained details. Finally, adapter-based approaches require weight tuning for each reference image to balance text controllability against style intensity.

Furthermore, the primary goal of most style transfer or stylized image generation approaches is to apply the specific style of a given reference image, or a reference subset of images, to a target content image. However, the sheer number of attributes that make up style makes it difficult for researchers to collect stylized datasets, represent style correctly, and evaluate the success of the transfer. Previously, fine-tuning-based diffusion frameworks fine-tuned the model on a dataset of images that share a common style, a process that is time-consuming and generalizes poorly to real-world tasks, since it is difficult to gather a set of images that share the same or nearly identical style.

In this article, we will talk about InstantStyle, a framework designed with the aim of tackling the issues faced by the current tuning-based diffusion models for image generation and customization. We will talk about the two key strategies implemented by the InstantStyle framework:

- A simple yet effective approach to decouple style and content from reference images within the feature space, predicated on the assumption that features within the same feature space can be either added to or subtracted from one another.

- Preventing style leaks by injecting the reference image features exclusively into the style-specific blocks, which avoids the cumbersome weight tuning that often characterizes more parameter-heavy designs.

This article aims to cover the InstantStyle framework in depth: we explore its mechanism, methodology, and architecture, and compare it with state-of-the-art frameworks. We will also talk about how the InstantStyle framework delivers strong visual stylization results and strikes a good balance between the controllability of textual elements and the intensity of style. So let’s get started.

InstantStyle: Style Preservation in Text-to-Image Generation

Diffusion-based text-to-image generative AI frameworks have achieved remarkable success across a wide range of customization and personalization tasks, particularly consistent image generation tasks such as object customization, image preservation, and style transfer. However, despite the recent boost in performance, style transfer remains challenging because style is underdetermined, spanning elements such as atmosphere, structure, design, material, color, and more. The primary goal of stylized image generation or style transfer is to apply the specific style of a given reference image, or a reference subset of images, to the target content image. The wide range of style attributes makes it difficult for researchers to collect stylized datasets, represent style correctly, and evaluate the success of the transfer. Previously, fine-tuning-based diffusion frameworks fine-tuned the model on a dataset of images that share a common style, a process that is time-consuming and generalizes poorly to real-world tasks, since it is difficult to gather a set of images that share the same or nearly identical style.

Given the challenges of this approach, researchers have taken an interest in developing tuning-free approaches for style transfer or stylized image generation, and these frameworks can be split into two groups:

- Adapter-free Approaches: Adapter-free approaches leverage the power of self-attention within the diffusion process. By implementing a shared attention operation, these models extract essential features, including keys and values, directly from the given reference style images.

- Adapter-based Approaches: Adapter-based approaches, on the other hand, incorporate a lightweight model designed to extract detailed image representations from the reference style images. The framework then integrates these representations into the diffusion process using cross-attention mechanisms. The goal of this integration is to guide generation so that the resulting image aligns with the stylistic nuances of the reference image.
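To make the adapter-free idea more concrete, here is a minimal NumPy sketch of a shared attention operation in which the queries of the image being generated attend over its own keys and values together with those of the reference image. This is only an illustration under stated assumptions: the function name `shared_self_attention`, the random toy tensors, and the choice to concatenate (rather than swap) keys and values are all hypothetical and are not taken from any specific framework.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def shared_self_attention(q_tgt, k_tgt, v_tgt, k_ref, v_ref):
    """Target queries attend over target AND reference keys/values.

    Assumption: keys/values are concatenated along the token axis.
    Other adapter-free methods replace the target keys/values instead.
    """
    k = np.concatenate([k_tgt, k_ref], axis=0)
    v = np.concatenate([v_tgt, v_ref], axis=0)
    scores = q_tgt @ k.T / np.sqrt(q_tgt.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

# Toy tensors standing in for self-attention features (hypothetical sizes).
rng = np.random.default_rng(0)
n_tgt, n_ref, dim = 4, 6, 8
q_tgt, k_tgt, v_tgt = (rng.normal(size=(n_tgt, dim)) for _ in range(3))
k_ref, v_ref = (rng.normal(size=(n_ref, dim)) for _ in range(2))

out, weights = shared_self_attention(q_tgt, k_tgt, v_tgt, k_ref, v_ref)
print(out.shape, weights.shape)
```

Each target token ends up with one attention distribution spread over both its own tokens and the reference tokens, which is how reference features can flow into the generated image without any trained adapter.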

However, despite their promise, tuning-free methods often encounter a few challenges. First, the adapter-free approach requires an exchange of keys and values within the self-attention layers, and pre-caches the key and value matrices derived from the reference style images. When applied to natural images, it demands inverting the image back to latent noise using techniques like DDIM (Denoising Diffusion Implicit Models) inversion. However, DDIM and other inversion approaches can lose fine-grained details like color and texture, diminishing the style information in the generated images. Furthermore, the additional inversion step is time-consuming, which is a significant drawback in practical applications.

On the other hand, the primary challenge for adapter-based methods lies in striking the right balance between content leakage and style intensity. Content leakage occurs when an increase in style intensity causes non-style elements from the reference image to appear in the generated output; the core difficulty is separating style from content within the reference image effectively. To address this issue, some frameworks construct paired datasets that represent the same object in different styles, facilitating the extraction of disentangled style and content representations. However, owing to the inherently underdetermined nature of style, creating large-scale paired datasets is resource-intensive, and the resulting datasets are limited in the diversity of styles they can capture.

To tackle these limitations, the InstantStyle framework is introduced: a novel tuning-free mechanism built on existing adapter-based methods that integrates seamlessly with other attention-based injection methods and decouples content and style effectively. Furthermore, the InstantStyle framework introduces not one but two effective ways to decouple style and content, achieving better style migration without needing additional decoupling methods or paired datasets.

Prior adapter-based methods widely use CLIP as an image feature extractor, and some works have explored feature decoupling within the feature space. Compared with the underdetermined nature of style, content is easier to describe with text. Since images and texts share a feature space in CLIP-based methods, simply subtracting the content text features from the image features can reduce content leakage significantly. Furthermore, in most diffusion models, particular layers in the architecture are responsible for injecting style information, so the decoupling of content and style can be accomplished by injecting image features only into those specific style blocks. By implementing these two simple strategies, the InstantStyle framework is able to solve the content leakage problems encountered by a majority of existing frameworks while maintaining the strength of style.

To sum up, the InstantStyle framework employs two simple, straightforward yet effective mechanisms to disentangle content and style from reference images. The InstantStyle framework is a model-independent, tuning-free approach that demonstrates remarkable performance in style transfer tasks, with great potential for downstream tasks.

InstantStyle: Methodology and Architecture

As demonstrated by previous approaches, injecting style conditions in tuning-free diffusion models is a balancing act. If the intensity of the image condition is too high, it might result in content leakage, whereas if it is too low, the style may not be obvious enough. A major reason is that style and content are coupled within an image, and because style attributes are inherently underdetermined, it is difficult to decouple style from content. As a result, weights are often meticulously tuned for each reference image in an attempt to balance text controllability and style strength.

In inversion-based methods, given an input reference image and its corresponding text description, inversion approaches like DDIM are applied to the image to obtain the inverted diffusion trajectory, a process that approximates the inversion equation to transform an image into a latent noise representation. Starting from the inverted diffusion trajectory along with a new set of prompts, these methods then generate new content whose style aligns with the input. However, as shown in the following figure, DDIM inversion for real images is often unstable because it relies on local linearization assumptions, which causes errors to propagate and leads to loss of content and incorrect image reconstruction.

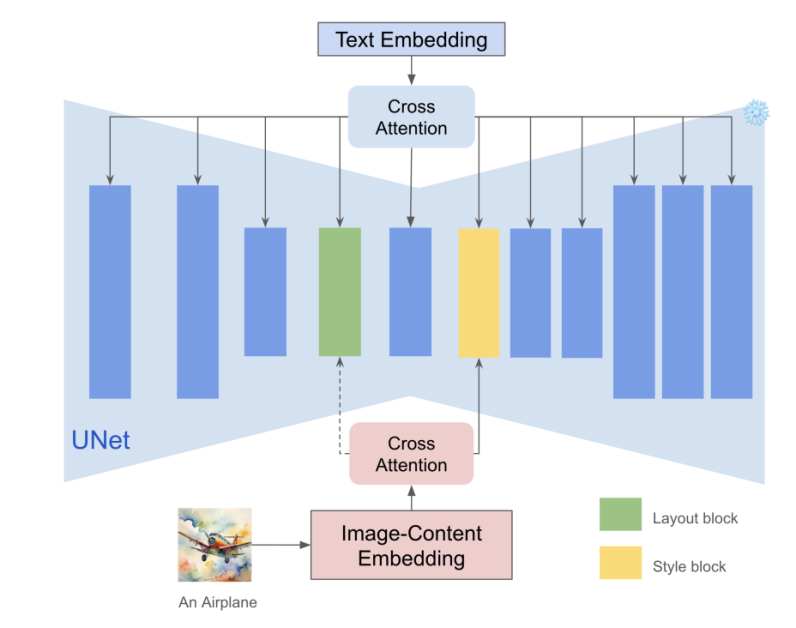
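The source of this instability can be sketched in a few lines. The snippet below uses the standard deterministic DDIM update, once in the inversion direction and once in the sampling direction. The noise predictor `toy_eps`, the two noise levels, and the sample values are hypothetical stand-ins chosen purely for illustration; a real pipeline would call a trained network over many steps.

```python
import numpy as np

def ddim_move(x, eps, abar_from, abar_to):
    """Deterministic DDIM update between two cumulative-alpha noise levels,
    given a noise estimate eps for the current sample x."""
    x0_pred = (x - np.sqrt(1.0 - abar_from) * eps) / np.sqrt(abar_from)
    return np.sqrt(abar_to) * x0_pred + np.sqrt(1.0 - abar_to) * eps

def toy_eps(x, abar):
    """Hypothetical stand-in for the trained noise predictor."""
    return np.tanh(x) * np.sqrt(1.0 - abar)

abar_clean, abar_noisy = 0.9, 0.5   # assumed noise levels
x_t = np.array([0.3, -1.2, 0.8])    # assumed latent values

# Inversion step: the noise is estimated at the current (cleaner) sample,
# although the exact inverse would need it at the unknown noisier sample.
x_next = ddim_move(x_t, toy_eps(x_t, abar_clean), abar_clean, abar_noisy)

# Sampling step back: the noise is now estimated at the noisier sample.
x_back = ddim_move(x_next, toy_eps(x_next, abar_noisy), abar_noisy, abar_clean)

recon_error = np.abs(x_back - x_t).max()
print(recon_error)
```

If the predictor returned the same noise estimate in both directions, the round trip would be exact. The reconstruction error appears only because the estimate is evaluated at two different points of the trajectory, and over many steps such errors accumulate.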

Coming to the methodology, instead of employing complex strategies to disentangle content and style from images, the InstantStyle framework takes the simplest approach that achieves similar performance. Compared with the underdetermined style attributes, content can be represented by natural text, which allows the InstantStyle framework to use the CLIP text encoder to extract the features of the content text as the content representation. At the same time, the framework uses the CLIP image encoder to extract the features of the reference image. Taking advantage of the characterization of CLIP global features, the framework subtracts the content text features from the image features and thereby decouples style and content explicitly. Although the strategy is simple, it is quite effective in keeping content leakage to a minimum.
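The subtraction step can be illustrated with a toy example. The sketch below does not load CLIP: the vectors are random stand-ins, and the image embedding is constructed as an exact sum of a content direction and a style direction, which is an idealized assumption made only so the effect is easy to see. The helper names `subtract_content` and `cosine` are hypothetical.

```python
import numpy as np

def subtract_content(image_embed, content_text_embed):
    """Remove the content component by subtraction in the shared space."""
    return image_embed - content_text_embed

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
dim = 16  # toy size, not the real CLIP embedding dimension

content_dir = rng.normal(size=dim)
style_dir = rng.normal(size=dim)
# Make the toy style direction orthogonal to the content direction.
style_dir -= (style_dir @ content_dir) / (content_dir @ content_dir) * content_dir

# Idealized assumption: the image embedding is content plus style.
image_embed = content_dir + style_dir
# Stand-in for the text embedding of the content description, e.g. "a cat".
content_text_embed = content_dir

style_embed = subtract_content(image_embed, content_text_embed)

print(cosine(image_embed, content_dir))   # clearly positive
print(cosine(style_embed, content_dir))   # approximately zero
```

In practice the two embeddings would come from the CLIP image and text encoders, and the decomposition would hold only approximately, so the subtraction reduces content leakage rather than removing it exactly.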

Furthermore, each layer within a deep network captures different semantic information, and the key observation from previous models is that two attention layers play specific roles. Specifically, the up_blocks.0.attentions.1 and down_blocks.2.attentions.1 layers capture style (color, material, atmosphere) and spatial layout (structure and composition), respectively. The InstantStyle framework uses these layers implicitly to extract style information, and prevents content leakage without losing style strength. The strategy is simple yet effective: once the style blocks have been located, the model injects the image features into these blocks alone to achieve seamless style transfer. Furthermore, since this greatly reduces the number of adapter parameters, the text control ability of the framework is enhanced, and the mechanism is also applicable to other attention-based feature injection models for editing and other tasks.
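Block-selective injection can be pictured as a per-layer scale table in which every attention block is muted except the style block. The sketch below is plain Python and does not use any real library API: the function `injection_scales`, the sample list of layer names, and the underscore spelling of the two block identifiers are assumptions made for illustration.

```python
# Block identifiers as named in the text (underscore spelling assumed).
STYLE_BLOCK = "up_blocks.0.attentions.1"
LAYOUT_BLOCK = "down_blocks.2.attentions.1"

def injection_scales(layer_names, style_scale=1.0, layout_scale=0.0):
    """Return an image-feature scale per attention block.

    Only the style block (and optionally the layout block) receives
    reference image features; every other block is muted with 0.0.
    """
    scales = {}
    for name in layer_names:
        if STYLE_BLOCK in name:
            scales[name] = style_scale
        elif LAYOUT_BLOCK in name:
            scales[name] = layout_scale
        else:
            scales[name] = 0.0
    return scales

# Hypothetical subset of attention block names in a UNet.
layer_names = [
    "down_blocks.1.attentions.0",
    "down_blocks.2.attentions.1",
    "mid_block.attentions.0",
    "up_blocks.0.attentions.1",
    "up_blocks.1.attentions.0",
]

scales = injection_scales(layer_names)
active = [name for name, scale in scales.items() if scale > 0]
print(active)
```

Raising `layout_scale` above zero would also pass the reference features to the layout block, which is the kind of choice one might make when the composition of the reference image should be carried over as well as its style.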

InstantStyle: Experiments and Results

The InstantStyle framework is implemented on Stable Diffusion XL. It uses the commonly adopted pre-trained IP-Adapter as its exemplar to validate its methodology, and mutes all blocks except the style blocks for image features. The InstantStyle model also trains the IP-Adapter from scratch on 4 million large-scale text-image pairs, updating only the style blocks instead of training all blocks.

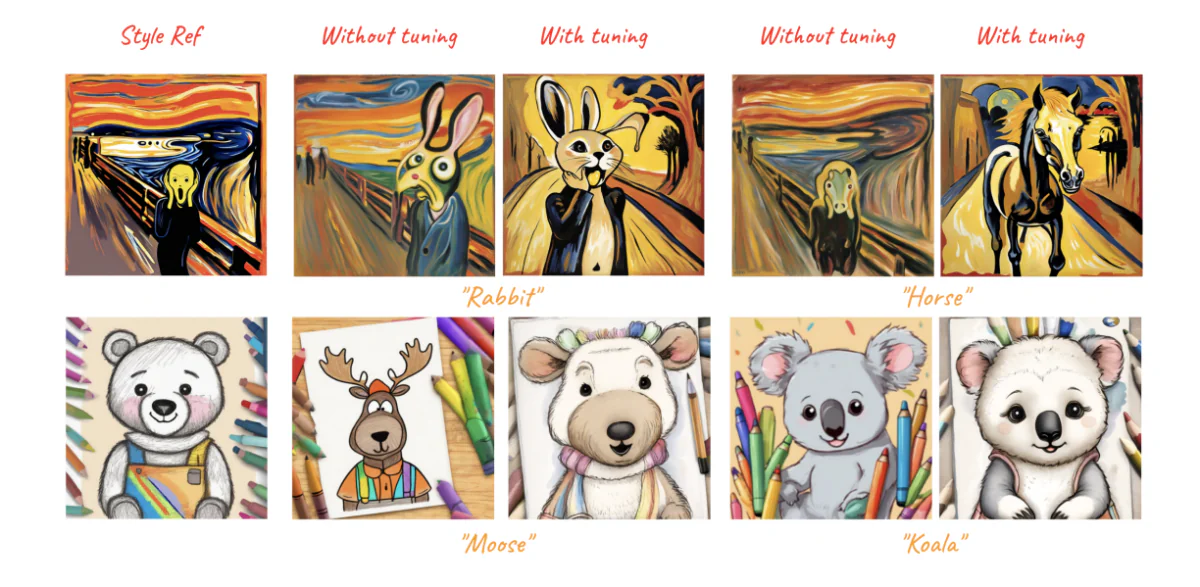

To assess its generalization capabilities and robustness, the InstantStyle framework conducts numerous style transfer experiments with various styles across different content, and the results can be observed in the following images. Given a single style reference image along with varying prompts, the InstantStyle framework delivers high-quality, style-consistent image generation.

Furthermore, since the model injects image information only in the style blocks, it is able to mitigate the issue of content leakage significantly, and therefore, does not need to perform weight tuning.

Moving along, the Instant-Style framework also adopts the ControlNet architecture to achieve image-based stylization with spatial control, and the results are demonstrated in the following image.

When compared against previous state-of-the-art methods including StyleAlign, B-LoRA, Swapping Self Attention, and IP-Adapter, the InstantStyle framework demonstrates the best visual effects.

Final Thoughts

In this article, we have talked about InstantStyle, a general framework that employs two simple yet effective strategies to disentangle content and style from reference images. The InstantStyle framework is designed to tackle the issues faced by current tuning-based diffusion models for image generation and customization. It implements two vital strategies. First, a simple yet effective approach to decouple style and content from reference images within the feature space, predicated on the assumption that features within the same feature space can be either added to or subtracted from one another. Second, preventing style leaks by injecting the reference image features exclusively into the style-specific blocks, which avoids the cumbersome weight tuning that often characterizes more parameter-heavy designs.