How Robots Are Learning to Ask for Help

A collaboration between Princeton University and Google has produced a method that teaches robots a crucial skill: recognizing when they need help and asking for it. The work aims to bridge the gap between autonomous operation and human-robot interaction.

Progress toward more capable, independent robots has long been hindered by one challenge: the complexity and ambiguity of human language. Unlike computer code, human language is full of nuance and implicit context, which makes it hard for robots to interpret. For instance, a command as simple as “pick up the bowl” becomes ambiguous when multiple bowls are present. Robots that can sense their environment and respond to language often struggle when faced with such linguistic uncertainty.

Quantifying Uncertainty

To address this challenge, the Princeton and Google team has introduced an approach that quantifies the ‘fuzziness’ of human language. The technique measures the level of uncertainty in a language command and uses that measurement to guide the robot's actions. When a command could be interpreted in more than one way, the robot gauges how uncertain it is and decides whether to seek clarification. In an environment with multiple bowls, for instance, a higher degree of uncertainty would prompt the robot to ask which bowl to pick up, avoiding potential errors or wasted effort.
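The decision rule described above can be pictured with a minimal Python sketch. The article does not say how the uncertainty is computed, so everything here is an assumption made for illustration: the function names, the 0.3 threshold, and the idea that each candidate action already comes with a confidence between 0 and 1. It is not the researchers' implementation.

```python
from typing import Dict, List


def plausible_options(confidences: Dict[str, float], threshold: float) -> List[str]:
    """Return every candidate action whose confidence meets the threshold."""
    return [action for action, p in confidences.items() if p >= threshold]


def decide(confidences: Dict[str, float], threshold: float = 0.3) -> str:
    """Act when exactly one option is plausible; otherwise ask for help.

    The threshold is a hypothetical tuning knob: raising it makes the robot
    consider fewer options plausible, lowering it makes the robot ask more often.
    """
    options = plausible_options(confidences, threshold)
    if len(options) == 1:
        return f"ACT: {options[0]}"
    if not options:
        return "ASK: I am not sure what to do. Can you clarify?"
    return "ASK: Which one did you mean? " + " / ".join(options)


# Two bowls on the table: both readings of "pick up the bowl" are plausible.
two_bowls = {
    "pick up the metal bowl": 0.48,
    "pick up the plastic bowl": 0.46,
    "pick up the cup": 0.06,
}
print(decide(two_bowls))

# Only one bowl on the table: the command is unambiguous.
one_bowl = {"pick up the bowl": 0.9, "pick up the cup": 0.1}
print(decide(one_bowl))
```

In the two-bowl case more than one option clears the threshold, so the sketch asks a question; in the one-bowl case a single option remains and the robot acts without interrupting the user.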

The approach is intended to make robots both safer and more efficient at carrying out tasks. It builds on large language models (LLMs) like those behind ChatGPT, with the aim of aligning robotic actions more closely with human expectations and needs.

Role of Large Language Models

LLMs are central to the new approach. They process and interpret the human-language commands given to the robot, and in this context they are also used to evaluate and measure the uncertainty in those commands.
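As a rough illustration of how a language model's output might be turned into a measure of uncertainty, the sketch below assumes the model can assign a raw score to each candidate action and converts those scores into confidences with a softmax. The article does not describe the team's actual procedure; the function name and the example scores are hypothetical.

```python
import math
from typing import Dict


def normalize_scores(raw_scores: Dict[str, float]) -> Dict[str, float]:
    """Softmax: map raw per-action scores to confidences that sum to 1."""
    peak = max(raw_scores.values())  # subtract the max for numerical stability
    exps = {action: math.exp(score - peak) for action, score in raw_scores.items()}
    total = sum(exps.values())
    return {action: value / total for action, value in exps.items()}


# Hypothetical scores for the command "pick up the bowl" with two bowls present.
scores = {
    "pick up the metal bowl": 2.1,
    "pick up the plastic bowl": 2.0,
    "pick up the cup": -1.0,
}
for action, confidence in normalize_scores(scores).items():
    print(f"{confidence:.2f}  {action}")
```

When two actions receive nearly equal scores, their confidences come out nearly equal as well, which is the kind of signal a robot could use to decide that a command is ambiguous.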

However, the reliance on LLMs isn’t without its challenges. As pointed out by the research team, outputs from LLMs can sometimes be unreliable.

Anirudha Majumdar, an assistant professor at Princeton, explains why this matters:

“Blindly following plans generated by an LLM could cause robots to act in an unsafe or untrustworthy manner, and so we need our LLM-based robots to know when they don’t know.”

This highlights the necessity for a nuanced approach, where LLMs are used as tools for guidance rather than infallible decision-makers.

Practical Application and Testing

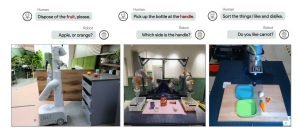

The practicality of this method has been tested in various scenarios, illustrating its versatility and effectiveness. One such test involved a robotic arm, tasked with sorting toy food items into different categories. This simple setup demonstrated the robot’s ability to navigate tasks with clear-cut choices effectively.

Image: Princeton University

The complexity increased significantly in another experiment featuring a robotic arm mounted on a wheeled platform in an office kitchen. Here, the robot faced real-world challenges like identifying the correct item to place in a microwave when presented with multiple options.

Through these tests, the robots successfully demonstrated their ability to use the quantified uncertainty to make decisions or seek clarification, thereby validating the practical utility of this method.

Future Implications and Research

Looking ahead, the implications of this research extend far beyond the current applications. The team, led by Majumdar and graduate student Allen Ren, is exploring how this approach can be applied to more complex problems in robot perception and AI. This includes scenarios where robots need to combine vision and language information to make decisions, further closing the gap between robotic understanding and human interaction.

The ongoing research aims not only to help robots perform tasks more accurately but also to let them navigate the world with an understanding closer to human cognition. It could pave the way for robots that are more efficient, safer, and more in tune with the nuanced demands of human environments.

You can find the published research here.