Google Details Security Architecture for Chrome’s AI Agent Features

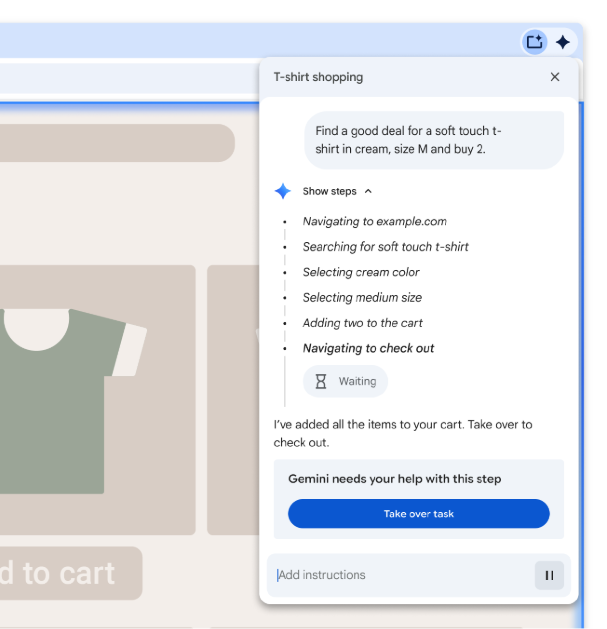

Google published a detailed security framework for Chrome’s upcoming agentic AI features, introducing multiple defense layers designed to protect users when Gemini-powered agents perform autonomous browsing tasks.

The announcement from Chrome security engineer Nathan Parker outlines four core security pillars that will govern how AI agents interact with websites on users’ behalf. The architecture addresses risks that have plagued early agentic systems, including prompt injection attacks, unauthorized data access, and fraudulent transactions.

Google’s approach arrives as competitors race to ship browser-based AI agents. OpenAI launched ChatGPT Atlas in October with agent mode capabilities, while Perplexity shipped its Comet browser in July. The security framework signals Google’s intent to move more cautiously than rivals whose agentic features researchers have already shown to be vulnerable to exploitation.

Four Pillars of Agent Security

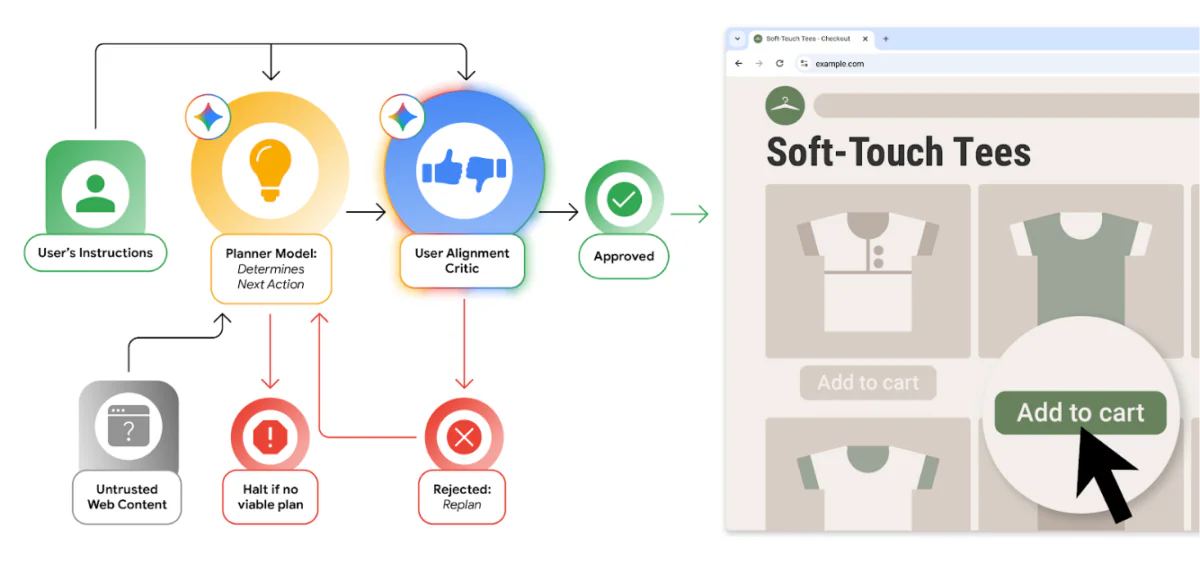

The User Alignment Critic forms the first defense layer—a separate Gemini model that vets every action the primary AI agent proposes. This critic operates in isolation and examines only metadata about proposed actions rather than full page content, reducing its exposure to malicious inputs. If an action appears risky or irrelevant to the user’s stated goal, the critic can order a retry or return control to the user.
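
The approve, retry, or hand-back flow described above can be sketched roughly as follows. This is an illustrative outline only, not Chrome's implementation: `ActionMetadata`, `Verdict`, `run_with_critic`, and the `max_retries` limit are hypothetical names and assumptions, and the `critic` callable stands in for the separate Gemini model, which is not modeled here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Iterable, Optional


class Verdict(Enum):
    APPROVE = "approve"
    REJECT = "reject"


@dataclass(frozen=True)
class ActionMetadata:
    """What the critic is allowed to see: a description of the action,
    never the raw page content (hypothetical fields)."""
    kind: str            # e.g. "click", "type", "navigate"
    target_origin: str   # where the action would take effect
    summary: str         # the planner's own description of the step


def run_with_critic(
    proposals: Iterable[ActionMetadata],
    critic: Callable[[ActionMetadata], Verdict],
    max_retries: int = 2,  # assumed limit; the article gives no number
) -> Optional[ActionMetadata]:
    """Return the first approved action, or None to hand control back to the user."""
    rejected = 0
    for action in proposals:
        if critic(action) is Verdict.APPROVE:
            return action
        rejected += 1
        if rejected > max_retries:
            break  # too many rejected proposals: stop and return control
    return None
```

A caller would pass the planner's successive proposals as `proposals`; a `None` result corresponds to the case where the critic returns control to the user.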

Origin Sets restrict which websites and page elements an agent can access during any given task. The system distinguishes between read-only origins, where the agent can consume content, and read-writable origins, where it can also take actions. Content from unrelated websites and iframes is withheld from the agent entirely, and a trusted gating function must approve access to any new origin. This prevents cross-site data leakage and limits the potential damage from a compromised agent.
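
As a rough illustration of the origin-set rules described above, the following sketch tracks read-only and writable origins and routes every new origin through a gate. The `OriginSet` class, its method names, and the `gate` callable are hypothetical and are not Chrome APIs. The origin comparison is also simplified: it compares scheme and host[:port] strings and does not normalize default ports.

```python
from dataclasses import dataclass, field
from typing import Callable, Set
from urllib.parse import urlsplit


def origin_of(url: str) -> str:
    """Return scheme://host[:port] for a URL (a simplified notion of origin)."""
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}".lower()


@dataclass
class OriginSet:
    read_only: Set[str] = field(default_factory=set)   # agent may read content
    read_write: Set[str] = field(default_factory=set)  # agent may read and act

    def can_read(self, url: str) -> bool:
        origin = origin_of(url)
        return origin in self.read_only or origin in self.read_write

    def can_act(self, url: str) -> bool:
        return origin_of(url) in self.read_write

    def request_origin(self, url: str, writable: bool,
                       gate: Callable[[str], bool]) -> bool:
        """Add a new origin only if the trusted gate approves it."""
        origin = origin_of(url)
        if not gate(origin):
            return False
        (self.read_write if writable else self.read_only).add(origin)
        return True


# Example task: compare reviews on one site, buy on another.
origins = OriginSet(
    read_only={"https://reviews.example"},
    read_write={"https://shop.example"},
)
```

In this sketch, a page on an origin outside both sets is neither readable nor actionable until `request_origin` succeeds, which mirrors the article's point that unrelated sites are withheld from the agent by default.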

User Oversight requires manual confirmation for sensitive operations. When an agent encounters banking portals, medical data sites, or needs to access stored credentials from Google Password Manager, Chrome pauses and prompts users to approve the action. The same applies before making purchases or sending messages—the agent cannot complete these actions autonomously.
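
The confirmation rule above amounts to a check that runs before any action is performed. A minimal sketch follows, assuming hypothetical action and site-category labels; Chrome's real categories and detection logic are not described in the announcement coverage.

```python
from typing import Callable

# Hypothetical labels derived from the examples in the article.
SENSITIVE_ACTIONS = {"purchase", "send_message", "use_saved_password"}
SENSITIVE_SITE_CATEGORIES = {"banking", "medical"}


def needs_confirmation(action_kind: str, site_category: str) -> bool:
    """True when either the action or the site is on a sensitive list."""
    return (action_kind in SENSITIVE_ACTIONS
            or site_category in SENSITIVE_SITE_CATEGORIES)


def run_action(action_kind: str, site_category: str,
               ask_user: Callable[[str], bool],
               perform: Callable[[], None]) -> bool:
    """Perform the action unless it is sensitive and the user declines."""
    if needs_confirmation(action_kind, site_category) and not ask_user(action_kind):
        return False  # user declined: nothing is performed
    perform()
    return True
```

Here `ask_user` stands in for the browser prompt and `perform` for the agent's action; routine steps such as form-filling on a shopping site proceed without a prompt.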

Prompt Injection Detection uses a dedicated classifier that scans pages in real time for indirect prompt injection attempts. This system operates alongside Chrome’s existing Safe Browsing infrastructure and on-device scam detection to block suspected malicious content before the agent can act on it.

Automated Red-Teaming and Bug Bounties

Google developed automated red-teaming systems that generate test sites and LLM-driven attacks to continuously validate the security architecture. The company prioritizes testing attack vectors that could cause lasting harm, particularly those targeting financial transactions or credential theft.

Chrome’s auto-update mechanism will deliver fixes rapidly as new vulnerabilities are discovered. To encourage external security research, Google announced bounty payments of up to $20,000 for researchers who identify weaknesses in the agentic browsing framework.

The defensive measures reflect lessons learned from early AI browser extensions and chatbot integrations, where prompt injection attacks proved surprisingly effective at manipulating AI behavior. By isolating the critic model and restricting origin access at the browser level, Google aims to prevent the web page itself from becoming an attack surface.

Implications for the AI Browser Race

Google’s detailed security disclosure contrasts with the relative opacity around competing agentic browser systems. The company appears to be betting that enterprise and security-conscious users will value transparent safeguards over first-mover features.

The architecture also suggests what Google considers acceptable autonomy for AI agents. Shopping, research, and form-filling can proceed with oversight, but anything touching financial accounts, healthcare data, or stored credentials requires explicit human approval. This draws a clear line that other vendors have been less willing to define publicly.

For developers building on Chrome’s platform, the origin set restrictions will require careful consideration of how agentic features interact with multi-site workflows. Applications that expect agents to freely navigate across domains may need architectural changes to work within Google’s security model.

Google has not announced a specific launch date for agentic browsing features in Chrome, but the detailed security framework suggests deployment is approaching. The company’s willingness to publish defensive architecture before launch indicates confidence in the approach—and an implicit challenge to competitors to match its transparency.