
We’re Not Aligning AIs Anymore. We’re Aligning Civilizations

For many years, the main question in artificial intelligence (AI) was how to align AI models with human values. Researchers tried to define safety rules, ethical principles, and control mechanisms to guide AI decisions, and this work made significant progress. But as AI systems grow more capable and widespread, the focus of alignment is beginning to shift. What started as the challenge of aligning a single model with a single set of instructions has become the much larger challenge of aligning entire civilizations that use these systems on a global scale. AI is no longer just a tool. It is evolving into an environment in which societies work, communicate, negotiate, and compete. As a result, alignment is no longer only a technical problem. It has become a civilizational problem.

In this article, I explain why alignment is no longer just about models and why we must begin thinking in terms of civilizations instead of machines. I also discuss how this shift affects our responsibilities and what it means for global cooperation.

Whose Values Does AI Really Represent?

If you ask an AI about a sensitive historical event, its answer depends on the data it was trained on. If that data comes mostly from the Western internet, the answer will tend to carry a Western bias. It will prioritize individualism, open speech, and democratic ideals. Many people consider these good values. But they are not the only values in the world.

A user in Singapore, a user in Riyadh, and a user in San Francisco have different definitions of what is “helpful” and what is “harmful.” In some cultures, social harmony is more important than absolute freedom of speech. In others, respect for hierarchy is more important than disruption.

When a Silicon Valley company uses “Reinforcement Learning from Human Feedback” (RLHF), it hires humans to rate the AI’s answers. But who are these humans? What are their beliefs? If they align the model with American standards, the company is inadvertently building an American cultural export. It is building a digital diplomat that enforces one specific civilization’s rules on the rest of the world.

The Rise of Feedback Loops

The challenge is not just that AI reflects the beliefs of one culture. It is how those beliefs can change us. Modern AI systems have the potential to shape the behavior of individuals, organizations, and even nations. They can influence how we think, work, trust, and compete. This creates feedback loops between AI systems and human societies: we train AI, and AI shapes how we think and act. These loops grow stronger as AI becomes more pervasive. To see how this feedback loop works, here are two examples:

- Large-scale AI deployment changes social behavior, and social behavior changes the data that trains new systems. For example, a recommendation algorithm can shape what people watch, read, and believe. Productivity tools reshape how teams collaborate and how students learn. These shifts in behavior change data patterns in the form of different viewing habits, workplace communication patterns, or writing styles. When this data flows into future training datasets, the models adjust their assumptions and outputs accordingly. Human behavior shapes the models, and the models, in turn, shape human behavior.

- Automated decision tools influence public policy, and public policy influences future model training. For example, some governments now use AI to suggest where to allocate resources, such as by identifying which neighborhoods need more healthcare support or which areas might see higher crime risk. When policymakers act on these recommendations, the results of those decisions later become part of new datasets. Over time, policy decisions shaped by AI end up shaping the next generation of AI models.
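The loop in these two examples can be illustrated with a toy simulation. This is only a sketch under strong assumptions, not a model of any real system: a whole society's behavior and a model's output are each reduced to a single number between 0 and 1, and the function name and the `influence` and `learning_rate` parameters are hypothetical.

```python
def simulate_feedback_loop(population_pref, model_pref,
                           influence=0.2, learning_rate=0.5, rounds=10):
    """Toy feedback loop between a population and a model.

    population_pref and model_pref are illustrative scalars in [0, 1],
    e.g. the share of one kind of content that people choose and that
    the model recommends. All parameters are assumptions for illustration.
    """
    history = []
    for _ in range(rounds):
        # Deployment: behavior drifts toward what the model recommends.
        population_pref += influence * (model_pref - population_pref)
        # Retraining: the model is refit on the behavior it just shaped.
        model_pref += learning_rate * (population_pref - model_pref)
        history.append((population_pref, model_pref))
    return history


if __name__ == "__main__":
    for step, (people, model) in enumerate(simulate_feedback_loop(0.3, 0.8), 1):
        print(f"round {step}: population={people:.3f} model={model:.3f}")
```

In this sketch the gap between the population and the model shrinks every round, which is the point of the two examples: neither side stays fixed, and each pulls the other toward itself.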

Once you recognize this feedback loop, it becomes clear that AI is gradually shaping and aligning civilizations. As a result, nations are beginning to ask: How can they safeguard their values while integrating AI into their societies and institutions?

The Emergence of Sovereign AI

This alignment challenge has prompted a significant reaction from governments worldwide. Nations have realized that relying on imported AI is a risk to their sovereignty. They cannot afford to have their citizens educated, informed, and advised by a black box that thinks like a foreigner.

This realization has led to the rise of “Sovereign AI.” France is investing heavily to build models that speak French and understand French law and culture. India is building indigenous AI models to reflect and preserve its cultural values. The United Arab Emirates and China are building AI models aligned with their own national visions.

This is the new arms race. It is a race for narrative control. A civilization that does not have its own AI will eventually lose its own memory. If your children ask a machine a question, and the machine answers with the logic of a different culture, your culture begins to erode. This realization, however, may lead to the formation of distinct digital blocs. We might end up with a Western AI, a Chinese AI, an Indian AI, and so on. These systems will operate with different facts and different moral compasses. These developments make it clear that if we want to create a single, truly aligned AI model, we must first find a way to align civilizations.

Need for a Diplomatic AI

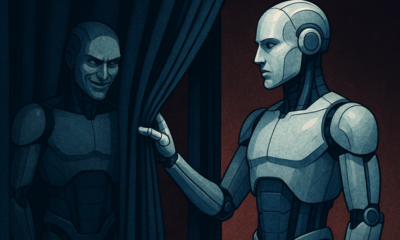

Traditional alignment assumes that a model can be aligned through careful training, prompting, and guardrails. This thinking comes from the technical mindset of early AI safety research. But even perfect model-level alignment cannot solve the challenge of aligning civilizations. Alignment cannot remain stable when societies pull in opposite directions. If countries, companies, and communities have conflicting goals, they will push AI systems to reflect those conflicts. These limits show that alignment is not only a technical problem. It is a governance, cultural, and coordination problem. And such problems cannot be left to experts and developers alone. They involve entire civilizations.

So, how do we move forward? If we accept that universal alignment is impossible, we must change our strategy. We need to stop looking for a technical fix to a philosophical problem. We need to start thinking like diplomats. We will need to build protocols for “Civilizational Alignment.” We need to figure out how AI can respect a society’s beliefs and values without imposing on it the beliefs of other cultures. In other words, we need a digital United Nations for our algorithms.

This requires transparency. Currently, we do not really know what values are hidden inside the deep layers of a neural network. We only see the output. To align civilizations, we need to be clear about the “Constitution” of each model. A model should be able to declare its bias. It should be able to say, “I am trained on this data, with these safety rules, prioritizing these values.” Only when the bias is visible can we trust the system. A user should be able to switch between perspectives. You should be able to ask a question and see how a “Western” model answers versus how an “Eastern” model answers. This would turn AI into a tool for understanding, rather than a tool for indoctrination.
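One way to picture such a declaration is as a small, machine-readable record attached to each model. The sketch below is purely illustrative: `ModelDeclaration`, its fields, and `compare_perspectives` are hypothetical names, not an existing standard or API, and the "models" are stand-in functions rather than real systems.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ModelDeclaration:
    """Hypothetical 'constitution' record that a model ships with."""
    name: str
    data_sources: List[str]
    safety_rules: List[str]
    prioritized_values: List[str]

    def declare(self) -> str:
        # Mirrors the declaration sentence described in the text above.
        return (
            f"I am {self.name}. "
            f"I am trained on {', '.join(self.data_sources)}, "
            f"with these safety rules: {', '.join(self.safety_rules)}, "
            f"prioritizing these values: {', '.join(self.prioritized_values)}."
        )


def compare_perspectives(
    question: str,
    models: List[Tuple[ModelDeclaration, Callable[[str], str]]],
) -> Dict[str, str]:
    """Ask every model the same question and key the answers by model name."""
    return {decl.name: answer(question) for decl, answer in models}


if __name__ == "__main__":
    # Placeholder declarations and answer functions, for illustration only.
    a = ModelDeclaration("model-a", ["corpus A"], ["rule set A"], ["open speech"])
    b = ModelDeclaration("model-b", ["corpus B"], ["rule set B"], ["social harmony"])
    print(a.declare())
    print(compare_perspectives(
        "How should this event be described?",
        [(a, lambda q: "answer from model-a"), (b, lambda q: "answer from model-b")],
    ))
```

Keeping the declaration separate from the answers lets a user read each model's stated priorities first and then compare the answers side by side.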

The Bottom Line

We have spent too much time worrying about the Terminator. The real risk is not that a robot destroys us. The real risk is that a robot makes us forget who we are. Alignment is not code we can write once and forget. It is a constant negotiation with AI models to keep them aligned with our beliefs and values. It is a political act. As we move into this next phase of the intelligence age, we must look beyond the screen. We must consider how AI interprets our history, our borders, and our beliefs. We are building the minds that will help us run the world. We must make sure those minds respect the differences between civilizations.