
The New Era of Executive Impersonation Won’t Involve Executives at All

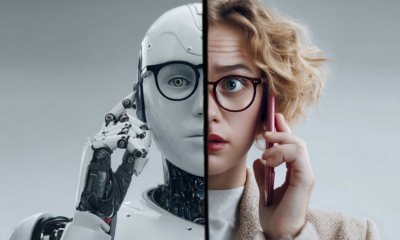

For the last few years, the biggest digital threat facing brands was executive impersonation, often executed through spoofed email addresses or imposter social media accounts. Today, we’re facing a much more complex and convincing enemy: AI agents.

The rise of autonomous agents powered by large language models (LLMs), designed to operate with little or no human intervention, has unlocked new possibilities and generated excitement reminiscent of the early Web 2.0 and mobile app eras.

While truly autonomous agents have not quite made the leap from vision to reality, experts warn they may be able to roam corporate networks as soon as next year. The excitement and adoption that follow will inevitably land on the radar of ever-adapting cybercriminals, who see autonomous agents as a new frontier for digital fraud. So, what happens when these agents are impersonated? Or worse, when they are the impersonators?

Just as mobile apps once faced a deluge of counterfeit versions built to extract login credentials or install malware, AI agents are now poised to face a similar wave of copycats. This time, however, the risk will be harder to detect, and the damage potentially more widespread.

From Executive Impersonation to Agent Spoofing

AI has already ushered in new avenues of fraud and deception. Voice cloning scams, for example, can recreate an executive’s speech patterns using just seconds of audio, and more recently there have been reports of real-time deepfake video and voice-based impersonations. Now, imagine an autonomous agent, supposedly representing your company, engaging with customers, investors, or partners. That agent could be embedded on a website, integrated into a chatbot, or even interacting via email, offering plausible, professional, and completely fraudulent interactions.

The predecessor to copycat agents may lie in “jailbreaking,” in which threat actors manipulate LLMs, often through prompt injections or multi-turn techniques, to produce harmful or false outputs. But this is only the beginning.

According to data from PitchBook, AI companies raised $131.5 billion in 2024, accounting for more than a third of total global venture capital deals. The AI arms race, fueled by ambitious founders, hungry investors, and eager governments, will quickly accelerate the capabilities of this emerging technology, whose legitimate use cases will continue to be exploited for nefarious means.

Generative AI has democratized the creation of such agents, and it will continue to do so. As platforms race to integrate them, few guardrails exist to verify their authenticity. This opens the door to a new class of impersonation: not just of people but also of software.

A Wake-Up Call for Eager Brands and Curious Consumers

Consumers and brands alike are only beginning to understand what it means to trust an agent. But trust is the linchpin of any digital interaction, and when it’s broken, the consequences can be devastating.

As observed with deepfake videos and voice cloning, fake agents can be used to:

- Solicit payments or sensitive data under the guise of an established, or otherwise trustworthy, brand

- Redirect users to malware-ridden websites via social media DMs or paid ads

- Erode customer confidence and trigger reputational damage at scale

According to a recent Federal Trade Commission report, impersonation scams were responsible for nearly $3 billion in reported losses in the U.S. alone – a number expected to rise sharply as AI impersonation becomes more seamless and scalable.

The Proliferation of Fake Agents: Ads, Search, and Social

What’s especially dangerous is how copycat agents will be distributed. They don’t need their own websites or domains to gain traction. Bad actors can, and do, use paid search results and social media platforms to promote malicious clones of trusted brands, executives or products. A sponsored link on Google or a promoted post on Instagram, for example, is enough to funnel unsuspecting users to a fake agent or support portal.

This ecosystem-level risk, where bad actors hijack legitimate digital real estate to facilitate scams, requires a new school of thought for cybersecurity. It’s no longer enough to secure your network, your cloud, and your employees’ credentials. Your brand’s threat perimeter now includes every ad network, search platform, and third-party tool your customers interact with.

Taking Action Before It’s Too Late

We are entering a world where the line between humans and agents is increasingly blurred. The threat is no longer just the impersonation of people, which is growing exponentially thanks to readily available AI tools; it is also the impersonation of digital personas.

So, how can businesses prepare?

- Map your brand’s digital presence: Know where your customers interact with your company and your agents – whether on your site, via API, or through third parties – and actively monitor those entry points for spoofing attempts.

- Monitor emerging agent ecosystems: As open agent marketplaces and platforms (like Hugging Face Spaces) gain popularity, be vigilant about lookalikes or forks of your tools appearing elsewhere.

- Shorten time-to-detect (TTD) and time-to-remediate (TTR): Use solutions that swiftly identify and remove impersonators, especially those abusing paid ads or social platforms. Speed is critical to limiting damage.

- Educate your users: Build transparency into your agent experiences. Your users should understand where and how they should expect to interact with your tools, and how to spot red flags.

- Establish agent authenticity protocols: Work toward cryptographic or metadata-based verification that can confirm an agent’s origin and legitimacy.
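To make the last recommendation more concrete, here is a minimal sketch of what metadata-based verification could look like. It is an illustration under stated assumptions, not an established protocol: the manifest fields, the function names `sign_manifest` and `verify_manifest`, and the shared secret are all hypothetical. It also uses a keyed hash (HMAC) from Python’s standard library as a stand-in; a real scheme meant for verification by third parties would more likely rely on public-key signatures.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, for demonstration only. A real deployment would
# more likely use public-key signatures so that verifiers never hold a secret.
SECRET_KEY = b"demo-only-secret"


def sign_manifest(manifest: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON form of the manifest."""
    # Sorting keys and fixing separators gives the same bytes for the same data.
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_manifest(manifest: dict, signature: str, key: bytes) -> bool:
    """Check that the manifest matches the signature issued by the brand."""
    expected = sign_manifest(manifest, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, signature)


# Hypothetical manifest describing a legitimate agent.
manifest = {"agent_id": "support-bot", "publisher": "example.com", "version": "1.0"}
signature = sign_manifest(manifest, SECRET_KEY)

# A copycat that reuses the signature but changes the publisher to a lookalike.
tampered = dict(manifest, publisher="examp1e.com")

print(verify_manifest(manifest, signature, SECRET_KEY))
print(verify_manifest(tampered, signature, SECRET_KEY))
```

The point of the sketch is that any change to the agent’s declared identity, even a single swapped character in the publisher name, invalidates the signature, so a spoofed agent cannot simply copy a legitimate agent’s credentials.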

The solution lies in securing the AI agent ecosystem, not avoiding it. Just as we’ve learned to verify domains, authenticate emails, and vet mobile apps in decades past, we now need to extend the same degree of vigilance to the world of AI agents. If we don’t get ahead of agent impersonation now, we may soon find ourselves talking to a familiar-looking assistant that’s working for the other side.