Anderson's Angle

AI Can Help Identify ‘Pink Slime’ News

Agenda-driven opinion mills, designed more to sway public opinion than serve the public, might be harder to spot if AI is used to make them sound more original and rational. So the race is on to stay ahead in the ‘pink slime detection’ game.

The defunding of traditional local media outlets over the last twenty years, due both to evolving media trends and, lately, to US government policy, has left a void in regional reporting that has been eagerly filled by partisan organizations using AI to drive their agendas.

To put the term ‘partisan’ into context (given that no news organization is without political tendencies of some kind), we are talking about oil companies running regional news sites from distant locations, without any real local resources, but with a remit to defend the company’s public reputation; politically-motivated news sites bereft of any revenue stream gearing up before elections; and entire networks of pro-Republican news sites likewise appearing from nowhere, close to polling time.

In 2024, it was estimated that AI-driven pink slime news had finally come to outnumber genuine news outlets; at that time, an Australian survey found that 41% of consumers preferred pink slime sources over ‘real’ sources.

This kind of covert electioneering, it could be argued, has evolved from a mere dark art to an existential threat to democracy (with regard to politically-motivated outlets) and to the public trust in reasonable standards of fairness in reporting.

Therefore, methods of distinguishing the characteristic output of pink slime publishers and broadcasters from more traditional media organizations would be of great help at least in understanding who the players and driving forces are in the current information climate.

As it stands, the tropes and templates of real news organizations are very easy to mimic, and AI makes scalable publishing a current and affordable reality, using many of the same tricks being adopted by budget-stricken ‘old media’ publishers and broadcasters.

Signal and Noise

A new study from the US addresses this issue by investigating the growing use of Large Language Models to make pink slime websites sound less generic and harder to spot, and by creating a learning framework designed to keep up with changes in pink slime (PS) output.

Titled Exposing Pink Slime Journalism: Linguistic Signatures and Robust Detection Against LLM-Generated Threats, the new work comes from five researchers at the University of Texas.

The new work investigates how mass-produced PS local news articles differ from legitimate reporting, focusing on their reliance on short, repetitive structures and templated phrasing with minimal variation. The authors note that PS articles tend to reuse identical templates designed to manipulate public opinion, with appeals to emotion uppermost in the content:

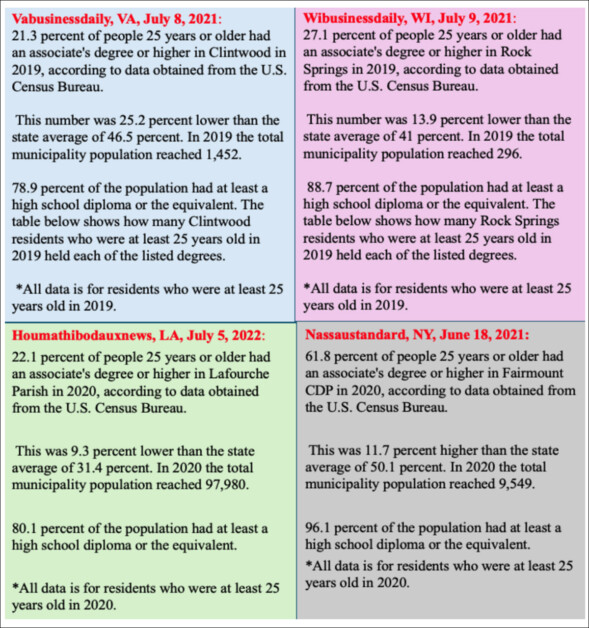

From the new paper – multiple outlets publish near-identical articles with only location details changed, revealing a copy-paste strategy used to mass-produce content that mimics legitimate local news.

Traditional detection models trained on these traits perform well against such content, but fail when the articles are rewritten using AI chatbots to appear more natural or sophisticated.

The authors’ own tests indicate that even minor stylistic changes introduced by large language models can reduce detection accuracy by up to 40%. To mitigate this, they propose a continual learning framework that incrementally retrains detection models on both original and AI-rewritten articles, to adapt to shifting linguistic patterns.
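The idea of incrementally retraining on both original and AI-rewritten articles can be illustrated with a toy sketch. This is not the paper's framework: the tiny online logistic regression, the `continual_update` helper, the replay of earlier examples, and the two-number feature vectors are all hypothetical stand-ins, chosen only to show a model being updated on new rewritten samples while still seeing older ones.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineLogisticRegression:
    """Minimal classifier that can be updated in place (label 1 = pink slime)."""

    def __init__(self, n_features, lr=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        return sigmoid(sum(w * v for w, v in zip(self.w, x)) + self.b)

    def partial_fit(self, X, y, epochs=200):
        # Plain stochastic gradient descent on the logistic loss,
        # continuing from the current weights rather than restarting.
        for _ in range(epochs):
            for x, label in zip(X, y):
                err = self.predict_proba(x) - label
                self.w = [w - self.lr * err * v for w, v in zip(self.w, x)]
                self.b -= self.lr * err


def continual_update(model, new_X, new_y, replay_X, replay_y,
                     replay_size=4, seed=0):
    """Update the model on new data mixed with a sample of earlier data."""
    idx = list(range(len(replay_X)))
    random.Random(seed).shuffle(idx)
    keep = idx[:replay_size]
    X = list(new_X) + [replay_X[i] for i in keep]
    y = list(new_y) + [replay_y[i] for i in keep]
    model.partial_fit(X, y)
    return model


# Hypothetical, hand-made feature vectors (not taken from the paper).
original_X = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9]]
original_y = [1, 1, 0, 0]           # two pink slime, two legitimate
rewritten_X = [[0.9, 0.2], [0.95, 0.1]]
rewritten_y = [1, 1]                # pink slime after an LLM rewrite

model = OnlineLogisticRegression(n_features=2)
model.partial_fit(original_X, original_y)   # round 1: original articles
continual_update(model, rewritten_X, rewritten_y, original_X, original_y)
```

In this toy setup the rewritten samples resemble legitimate news on the first feature, so the second round has to shift the decision boundary toward the second feature; replaying earlier examples is one common way to avoid forgetting the original patterns while doing so.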

Method

To establish data for the project, the authors used the Pink Slime Dataset, featuring 7.9 million articles covering 1,093 outlets during 2021-2023, from which they obtained 9,472 pink slime articles after filtering. They also used the LIAR dataset, which contains annotated fake news, as well as the NELA-GT-2021 collection, which contains solely US articles*.

To prepare their training and testing sets, the authors first used the t-distributed Stochastic Neighbor Embedding (t-SNE) algorithm to reduce the article embeddings to two dimensions. They then applied the data clustering algorithm Density-Based Spatial Clustering of Applications with Noise (DBSCAN) to isolate clusters of similar pink-slime articles.

Each cluster was treated as a group of related stories, many of which still followed the same template, despite a concerted effort to address duplicates.

To prevent similar articles from appearing in both training and test sets, entire clusters were randomly selected, with 80% used for training and 20% for testing. Because the legitimate news articles did not form clear clusters, a random split was applied instead.

This process was repeated three times, to ensure consistency, and to reduce sampling bias.
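A cluster-level split of this kind can be sketched in a few lines. The function below is a minimal illustration, not the authors' code: it assumes cluster labels have already been produced by a clustering step, that the 80/20 ratio applies to clusters rather than to individual articles, and that unclustered points (labeled `-1`, as in DBSCAN's noise convention) are each treated as their own group. The name `cluster_level_split` and its parameters are hypothetical.

```python
import random
from collections import defaultdict


def cluster_level_split(cluster_labels, test_fraction=0.2, seed=0):
    """Split article indices so that no cluster appears in both sets.

    cluster_labels[i] is the cluster id of article i; -1 marks a point
    that the clustering step left unassigned.
    """
    groups = defaultdict(list)
    for idx, label in enumerate(cluster_labels):
        # Assumption: each noise point is its own one-article group.
        key = ("noise", idx) if label == -1 else ("cluster", label)
        groups[key].append(idx)

    keys = list(groups)
    random.Random(seed).shuffle(keys)

    # Assumption: the 80/20 ratio is applied to clusters, not articles.
    n_test = max(1, round(len(keys) * test_fraction))
    test_keys = set(keys[:n_test])

    train = sorted(i for k in keys if k not in test_keys for i in groups[k])
    test = sorted(i for k in keys[:n_test] for i in groups[k])
    return train, test


# Three repetitions with different seeds, mirroring the repeated splits.
labels = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4]
splits = [cluster_level_split(labels, seed=s) for s in range(3)]
```

Because whole clusters move together, two near-identical templated articles can never end up on opposite sides of the split, which is the leakage this procedure is meant to prevent.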

Characteristics of Pink Slime

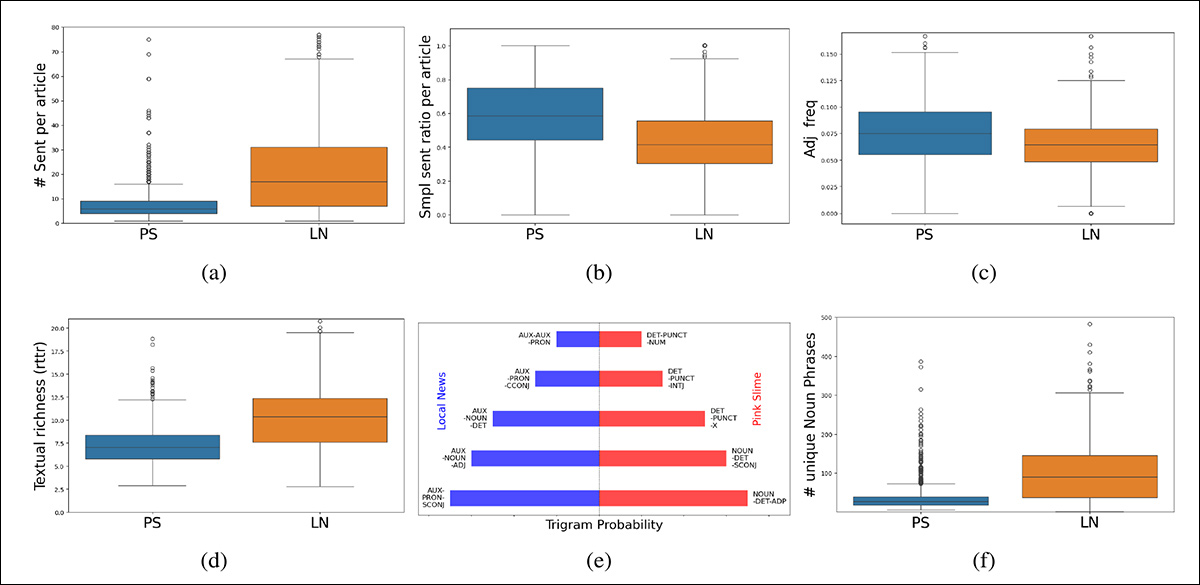

Commenting on the distinguishing traits of PS vs. regular news, the researchers assert that PS-style local news articles are significantly shorter and simpler than legitimate reporting, averaging fewer than nine sentences per article.

A higher proportion of simple sentences and a heavier reliance on adjectives are further hallmarks of pink slime, according to the paper, and indicate a penchant for repetitive, emotionally charged language.

Lexical richness was measured using the Root Type-Token Ratio (RTTR), and found to be notably lower in the PS articles, which also exhibited far fewer unique noun phrases.
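For reference, RTTR divides the number of distinct words (types) by the square root of the total word count (tokens); the related Corrected TTR (CTTR) divides by the square root of twice the token count. The sketch below uses a deliberately naive regex tokenizer, which is an assumption; the paper's own tokenization is not described here, and the two sample sentences are invented.

```python
import math
import re


def tokenize(text):
    # Naive lowercase word tokenizer (an assumption, for illustration only).
    return re.findall(r"[a-z0-9']+", text.lower())


def rttr(tokens):
    # Root Type-Token Ratio: types / sqrt(tokens).
    return len(set(tokens)) / math.sqrt(len(tokens)) if tokens else 0.0


def cttr(tokens):
    # Corrected Type-Token Ratio: types / sqrt(2 * tokens).
    return len(set(tokens)) / math.sqrt(2 * len(tokens)) if tokens else 0.0


templated = tokenize(
    "Crime rose in Austin. Crime rose in Dallas. Crime rose in Houston."
)
varied = tokenize(
    "The council approved a budget after residents questioned rising water fees."
)
```

On these two invented samples the repetitive text scores lower than the varied one, which is the direction of the difference the paper reports for pink slime versus legitimate local news.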

These patterns denote a limited vocabulary and formulaic style, in contrast to legitimate local news, which is characterized by complex part-of-speech patterns built around auxiliary verbs, pronouns, and conjunctions. Instead, the fake articles favor basic noun-preposition structures, with frequent use of punctuation-based trigrams, suggesting a less formal, more fragmented writing style.
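Part-of-speech trigram statistics of this sort can be computed once each article has been tagged. The sketch below assumes the tagging has already been done by some external tagger and that tags follow the Universal POS naming scheme (`NOUN`, `ADP`, `AUX`, and so on); it computes simple relative frequencies, which may differ from the exact co-occurrence probabilities used in the paper. The function name and the sample tag sequences are hypothetical.

```python
from collections import Counter


def pos_trigram_frequencies(tag_sequences):
    """Relative frequency of each POS trigram across tagged sentences."""
    counts = Counter()
    for tags in tag_sequences:
        # Slide a window of three consecutive tags over the sentence.
        counts.update(zip(tags, tags[1:], tags[2:]))
    total = sum(counts.values())
    return {tri: n / total for tri, n in counts.items()} if total else {}


# Invented tag sequences: a noun-preposition fragment and a fuller clause.
tagged = [
    ["NOUN", "ADP", "NOUN", "PUNCT"],
    ["PRON", "AUX", "VERB", "CCONJ", "PRON", "AUX", "VERB"],
]
freqs = pos_trigram_frequencies(tagged)
```

Comparing such frequency tables between the two classes is one way to surface the contrast described above, with auxiliary, pronoun and conjunction patterns on one side and noun-preposition or punctuation-heavy patterns on the other.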

Tests

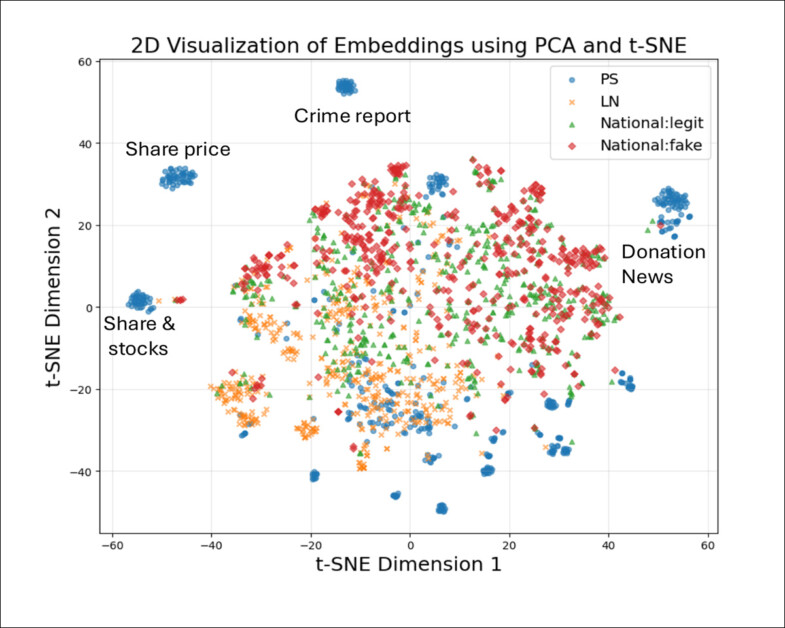

To examine the associations between different types of news articles, based on linguistic and structural features, embeddings were generated using the 435-million-parameter stella_en_400M_v5 model, then reduced with Principal Component Analysis (PCA) and projected with t-SNE for visualization.

When projected into two dimensions, the fake local news articles formed small, dense clusters, each corresponding to narrowly focused topics such as crime statistics, stock updates, or charitable donations:

Clustering patterns from a t-SNE projection reveal that pink slime articles form tight, repetitive groupings, while legitimate news displays broader, more varied distributions aligned with topic and style diversity.

As we can see, to some extent, in the visualization above, this pattern suggests a rigid, template-driven format, with minimal variation between articles.

Interestingly, articles labeled as ‘fake news’ diverged from the fake local content, showing a distribution more in line with real news, indicating that mass-produced local fakes may not be simply less truthful, but may also be mechanically distinct in form and composition.

By contrast, ‘legitimate’ local news forms fewer and more widely spaced clusters, consistent with more diverse language and subject matter, while national news articles show even greater dispersion, reflecting broader topical range and looser stylistic consistency.

Feature comparisons between legitimate local news and pink slime content, indicating that PS articles are shorter, use simpler sentence structures, contain more adjectives, exhibit lower lexical richness, favor basic part-of-speech trigrams, and contain fewer unique noun phrases.

Detection

The researchers evaluated two main approaches to detect pink slime content: classification, based on handcrafted linguistic features; and transformer-based fine-tuning.

For the handcrafted approach, structural rather than semantic characteristics were emphasized, using sentence count; lexical richness; syntactic depth; part-of-speech co-occurrence probabilities; dependency tag co-occurrence probabilities; readability; and part-of-speech counts.

Three models were tested on this feature set: XGBoost; Random Forest; and Support Vector Machine (SVM) – with Random Forest showing slightly stronger results overall.

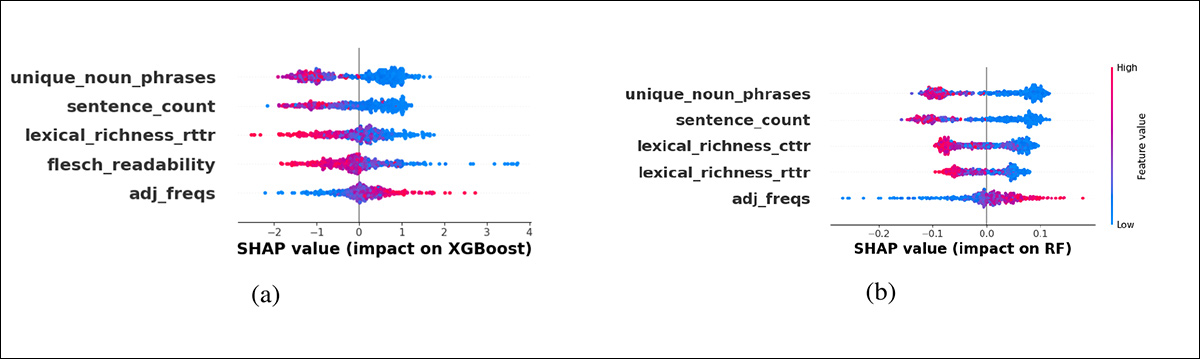

Both XGBoost and Random Forest assigned high predictive importance to features such as sentence count and the number of unique noun phrases. Readability and lexical richness measures also influenced classification strongly, though the models weighted these differently, with XGBoost favoring Flesch and RTTR, while Random Forest leaned on CTTR:

Feature importance scores based on SHAP (SHapley Additive exPlanations) highlight how each input feature influences the model’s output across samples. In this case, SHAP values reveal that both XGBoost and Random Forest relied most heavily on sentence count and unique noun phrases to distinguish pink slime from real news, while assigning varying weight to measures of lexical richness and readability.

As seen in the earlier feature comparisons (above), pink slime articles favor sensationalism over detail, with a lower lexical richness, and with fewer distinct noun phrases – reinforcing the conclusion that this kind of content is highly templated and repetitive.

Part-of-speech trigram patterns confirm that legitimate local news tends to use more structurally complex forms, involving auxiliaries, pronouns, and conjunctions, while pink slime favors fragmented or minimal syntax. These patterns suggest a reliable foundation for distinguishing synthetic local content from real journalism.

A second round of testing involved fine-tuning transformer models on full article text, to capture both semantic content and syntactic structure.

BERT, XLNet, and Flan-T5 were tested, with BERT achieving the highest F1-score at 89.31% – although the paper notes that performance differences were not statistically significant. Unlike the handcrafted classifiers, these models update all weights during training, allowing them to learn task-specific representations directly from the data.
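As a reminder of what the F1-score measures, it is the harmonic mean of precision and recall for the positive class. The sketch below computes the binary form, treating pink slime as the positive label; whether the paper reports binary, macro or weighted F1 is not stated here, so that choice is an assumption, and the sample labels are invented.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Invented labels: 1 = pink slime, 0 = legitimate news.
y_true = [1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0]
score = f1_score(y_true, y_pred)
```

Because F1 ignores true negatives, it penalizes a detector both for missing pink slime articles and for flagging legitimate ones, which makes it a reasonable headline metric for this task.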

An additional embedding-based method, which used a fully-connected downstream classifier, performed better than the handcrafted models, but did not match the accuracy of full fine-tuning.

These approaches benefited from broader linguistic context – advantageous, given that pink slime articles often recycle content across outlets. Handcrafted features, by contrast, rely on surface-level syntax alone, limiting their ability to generalize across sources.

Boosting Pink Slime with LLMs

To test whether pink slime articles could be made harder to detect, the researchers rewrote them using large language models. Prompts were designed to improve the writing style by expanding content, adding sentence complexity, reducing overuse of adjectives, enforcing a neutral tone, using more varied vocabulary, and increasing the number of unique noun phrases, with the aim of hiding the surface-level traits that most detection systems rely on.

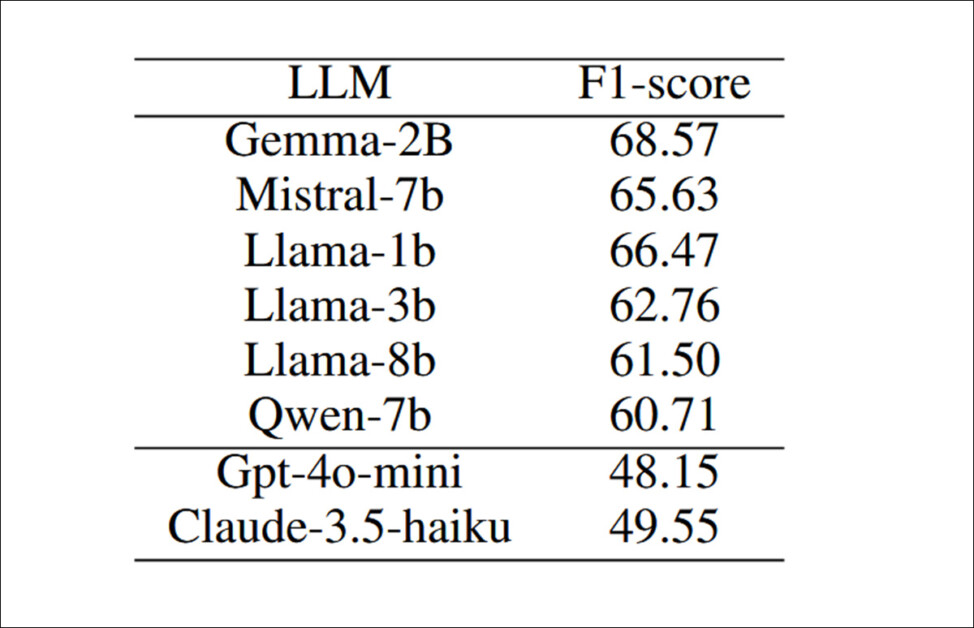

The models tested were Gemma‑2B; Mistral‑7B; Llama‑1B; Llama‑3B; Llama‑8B; Qwen‑7B; GPT‑4o mini; and Claude‑3.5‑Haiku.

Detection performance (F1) of a fine-tuned BERT classifier when pink slime articles are rewritten by different LLMs. Lower scores indicate more successful obfuscation.

Of these results, the authors comment:

‘[Among] open-source models, Qwen was the most effective at evading detection: when tested against a previously fine-tuned BERT classifier (baseline F1-score: 89%), it led to a 28.34% drop in detection [performance].

‘Closed-source models were significantly more potent, with both GPT-4o-mini and Claude-3.5-Haiku reducing the F1-score by an average of 40%, highlighting the challenge posed by high-quality LLM-generated obfuscation.’

These results, the authors contend, show how easily LLMs can disguise pink slime content, making it much harder for current tools to catch**.

Conclusion

Opinion: This line of research contains some interesting quandaries, not the least of which is that so many people (according to at least one survey, mentioned earlier) support PS content, knowing what it is, that it brings the pejorative context into question. It’s as if people know that ‘Soylent Green is people’, but they shrug and keep eating; or so it may appear, from a liberal sensibility.

This public indifference to algorithmic news may evolve and even backtrack – but for the moment, it appears to be deepening.

Another thing that struck me, reading the paper, was the way that the simpler prose and reductionism of pink slime output was treated as a shortcoming with a possible technological solution, when the minimalism, emotionalism and limited vocabulary are arguably all quite intentional.

If the various interest groups behind PS want to extend their reach to a more intellectual or liberal audience (though this would perhaps not play to their strengths), it seems more likely that they would set up camp nearer their target demographic, rather than change a style of language and a tone that already seem to be achieving their goals, on existing platforms.

* Due to some unfortunate formatting in the paper, the extra source of local news articles does not have a clear attribution. Please refer to the source paper and guess which of the ‘Horne’ references applies.

** Here we refer the reader to the source paper for details of the secondary, supplementary experiments that close out the results section of the new paper.

First published Friday, December 12, 2025