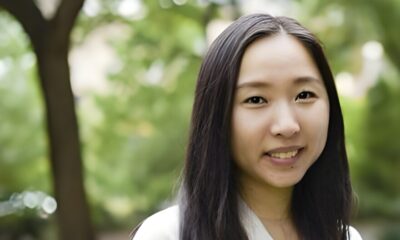

Zuzanna Stamirowska, Co-Founder and CEO of Pathway – Interview Series

Zuzanna Stamirowska, Co-Founder and CEO of Pathway, is a researcher turned builder who previously worked on emergent phenomena and large-scale network evolution. Her projects were recognized by the U.S. National Academy of Sciences, and she holds a PhD in Complex Systems. Zuzanna, along with CTO Jan Chorowski and CSO Adrian Kosowski, leads a team that has already built open AI tools with over 62,000 stars on GitHub.

Pathway is reimagining how AI models think, aiming to create systems that operate continuously and adaptively rather than in batches. Behind the scenes, its tooling and architecture enable models to process, learn, and evolve in real time. The company has attracted backing from luminaries such as Lukasz Kaiser (co-inventor of Transformers) and prominent venture firms.

What motivated you to launch Pathway, and how did your background in complex systems, game theory, and emergent phenomena shape the company’s vision and technical direction?

We wanted to make AI that thinks and adapts like humans. We realized that this means AI systems (Deep Learning models, or Machine Learning pipelines built from a larger number of bricks) that would be capable of learning continuously based on data from the environment, getting better with experience, and sometimes “correcting” their previous views of the world. We actually got quite a bit of coverage on the topic of “machine unlearning”. On top of models, such systems needed an entire layer of engineering to capture data at the source and feed it immediately into dynamic systems.

Having worked on emergence in complex networks, I developed a number of hypotheses which we ended up applying directly or almost directly in BDH. Funnily enough, I made a couple of bets with my colleagues (Adrian, CSO, and Jan, CTO) and I won a couple of bottles of really good cognac. First, we believed that natural intelligence results from an emergent structure and action of neurons in the brain (this is obvious, no bet here). Second, from my previous research, I knew that, in general, function shapes the network (won this bet). In past work, I discovered this for trade, and neuroscientists had studied this for nerves and senses in mice, etc. Third was the fact that this shaping of network structure must follow some very local rules directly linked to the so-called “neighbourhood” of each network location (in this case a node is a neuron) (won this bet). The other bits came from my knowledge of particle interaction systems (think, for example, of magnetism), where particles adjust their spins to an external field and create some sort of a “spontaneous order”. This was the sort of math that I used while working on game theory on graphs.

Putting all this together, from the first day of the company’s existence, we had a strong conviction that sparsity (graph-like structures) would be a key stepping stone in the advancement of AI.

Accounting for a notion of time was critical and also pretty characteristic of my background, because emergence usually happens over time.

When you launched the company in 2020, what were your earliest hypotheses about what future AI systems should do differently, and how have those views evolved?

We had a strong conviction from the start that AI needed to be live, adaptive, and integrated in large-scale processes. It should learn directly from the source, with the rawest data possible.

First we did it for more classical machine learning approaches, and along the way, built layers of engineering that allow such systems to be deployed easily in the real world. Now, we have brought this approach to deep learning.

We knew that the elements of time and structure (networks) would be key for the advancement towards AGI. We have this written in some of our founding documents from 2020.

Can you walk us through the “post-Transformer” architecture you’re introducing and how it differs from current Transformer-based systems?

Our new architecture, called Baby Dragon Hatchling (BDH), formally connects how Transformers process information with how reasoning emerges in the brain.

BDH behaves like a physical system: a brain-like computing model in which neurons make a collaborative effort to discover the next, most relevant fact. Contextual reasoning is not limited by engineering limitations, such as the fixed context length of the Transformer, but rather scales with the number of neurons of the model.

Putting all of this in more technical terms, unlike in the Transformer, in BDH we have linear attention, sparse key-query vectors, and no limits on the context window size.
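As an editorial illustration of the terms above, here is a minimal sketch of generic causal linear attention with sparse, non-negative keys and queries. It is not Pathway's BDH implementation: the function names, the ReLU feature map, and the unnormalized scores are all assumptions chosen for brevity. The point it shows is that the streaming version keeps a state whose size depends only on the key and value dimensions, not on how many tokens have been seen, which is why this family of methods has no fixed context window.

```python
def relu(vec):
    """Hypothetical feature map: zeroes out negatives, giving sparse non-negative vectors."""
    return [max(0.0, x) for x in vec]


def linear_attention_stream(queries, keys, values):
    """Causal linear attention as a recurrence over a fixed-size state.

    The state is a d_k x d_v matrix holding the running sum of
    outer(relu(k_s), v_s) for all steps s seen so far.
    """
    d_k, d_v = len(keys[0]), len(values[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q, k, v in zip(queries, keys, values):
        fk = relu(k)
        for i in range(d_k):
            if fk[i] != 0.0:  # a sparse key only touches a few rows of the state
                for j in range(d_v):
                    state[i][j] += fk[i] * v[j]
        fq = relu(q)
        outputs.append(
            [sum(fq[i] * state[i][j] for i in range(d_k)) for j in range(d_v)]
        )
    return outputs


def linear_attention_quadratic(queries, keys, values):
    """Reference version: the same outputs via explicit pairwise scores."""
    outputs = []
    for t, q in enumerate(queries):
        fq = relu(q)
        out = [0.0] * len(values[0])
        for s in range(t + 1):  # causal: attend only to steps up to t
            score = sum(a * b for a, b in zip(fq, relu(keys[s])))
            for j in range(len(out)):
                out[j] += score * values[s][j]
        outputs.append(out)
    return outputs
```

Calling both functions on the same short sequence should give matching outputs. The streaming form does work per token that is independent of sequence length, while the reference form revisits every earlier token at each step.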

This approach opens the door to systems which learn while performing, sustain long chains of reasoning, and adapt continuously in context.

A core feature of the system is locality: important data is located just next to the sites at which it is being processed. This minimizes communication, and eliminates the most painful of all bottlenecks for reasoning models during inference: memory-to-core bandwidth.

How does your approach draw inspiration from human reasoning, particularly in enabling adaptability and continuous learning without retraining?

BDH brings artificial intelligence and natural intelligence closer.

This architecture is inspired by the way neurons and synapses work in the brain. It maps biological attention mechanisms to the computational notion of attention in machine learning, creating a scalable bridge between Transformers and the brain.

BDH brings attention close to model parameters, presenting them as two reflections of the same brain-like system dynamics, with attention changing at a fast rate as new facts become known during reasoning, and model parameters changing more slowly as the system changes its long-term habits. This is closer to how we believe reasoning works in the brain.

We see BDH as a milestone towards the design of AI systems which reason mid-task, improve with experience, and adapt without retraining – properties we associate with human reasoning.

One of the challenges in AI is balancing stability with adaptability — how do you ensure systems can learn in real time without losing past knowledge?

BDH relies on its scale-free structure and localized neuron states to sustain reasoning over long time horizons, balancing stability and the ability to integrate new knowledge and observations. This natural, healthy balance is easy to extract and follow over the lifetime of a model.

With BDH we are placing reasoning as the cornerstone of intelligence. With the current work, we make progress on a hypothesis about the role of knowledge in Large Language Models: it is not so much about what is “true”, but what is “useful” in a given context to progress in a given chain of reasoning. For instance, if knowledge is contextualized, all of a sudden there is no contradiction between our world following laws of nature, and the fact that we know a folk tale which admits the existence of fairies and magic. In the same way there is no contradiction for a model considering several different forecasting hypotheses, with more and less optimistic assumptions, in a single chain of thought.

Models based on BDH acquire new facts during their lifetime in a contextualized way. They can self-correct because of it. By exposing easy-to-follow statistics of neuron activity and granular control over the transfer of knowledge from context into model parameters, the architecture helps to curb the risk of “outdated” contexts resurfacing at an inopportune moment.

What are the engineering trade-offs in building a live data processing engine that can sustain these capabilities at scale?

Pathway’s enterprise offering relies on the fastest data processing engine on the market. That engine underpins our ability to handle real-time inputs, and to react to new information with low latency. With the current BDH breakthrough, we ensure that this real-time adaptability extends as far as the foundational AI models used in deployments. Our overarching goal for large-scale deployments is moving away from static optimization toward building infrastructure that can handle long-horizon reasoning.

What are the most compelling use cases that truly demand this next stage of AI, and where do current Transformer-based systems fall short?

Many innovations have extended the functionality of generative AI to adapt quickly to new information and to rely deeply on ‘time on task,’ but nothing yet has been able to replace hiring a highly talented person.

The very quick and straightforward answer is that we are talking about any task that currently requires more than 2 hours and 17 minutes of coherent work by a human expert. This is the current limit of GPT-5 according to METR.

We have had great discussions with design partners in the enterprise who require deep personalization, models that learn on the job from scarce data, and the security of deployment.

BDH makes it relatively straightforward for an enterprise to tackle highly complex processes such as:

- Closing a quarter for a public company

- Dynamically generating the next best courses of action in high-stakes environments, in both sales and defense

- Investment management

NATO is already using Pathway’s technology to process live military and social data, enabling planning systems that adapt as situations evolve. La Poste is utilizing Pathway’s Live AI to dynamically manage its operations in real time. Industries such as finance and healthcare, where data is scarce or sensitive, can benefit from models that require less data but deliver deeper insights and more reliable judgments.

Can you share examples of how organizations like NATO, La Poste, or Formula 1 have applied your technology and the impact it’s delivered?

NATO, La Poste, and Formula 1 teams are already early adopters of Pathway technology.

Pathway offers NATO robust and innovative data processing technology to unlock new capabilities for critical use at scale. With our functional demonstrator, the Reinforcement Enablement Simulation Tool (REST), we developed the cornerstone for further development of AI-supported solutions for NATO. Using Pathway to connect open source data has sped up situational awareness and brought it to the level that NATO requires to operate successfully in the 2020s.

La Poste utilizes Pathway to improve processes related to its transport units. With Pathway Framework, La Poste anticipates its operations automatically in real time and generates live qualitative analysis of its transport operations. With Pathway, the company has been able to optimize its logistics dynamically, reducing ETAs and processing and lead times, and increasing reliability. Furthermore, it has achieved significant reductions in operational costs (50% reduction in TCO in some cases).

A Formula 1 racing team leverages Pathway to adapt strategy under high-pressure, real-time conditions. The company wanted a platform for end-users to create User-Defined Functions (UDFs) independently, and to serve various business needs, from e-sports and sim-racing to cars and formula racing. Pathway’s Live Data Framework enables advanced data transformations for the F1 racing team at the lowest latency (90x faster processing speeds than before).

What are the biggest obstacles to deploying adaptive systems in industries such as healthcare or defense, and how do you address them?

By design, current LLMs generate content that is “new” without real judgment – call it the ‘gen’ in gen AI. Many highly regulated industries need governance by definition, and are reluctant to deploy AI-driven business processes without repeatability, trust, and observability. Ironically, to deploy AI, these enterprises often ‘dumb down’ functionality and introduce additional complexity to facilitate compliance with regulations.

By design, BDH enables an enterprise to observe and adapt to what is happening inside the model. This observability over long time periods of ‘reasoning’ gives the enterprise the trust to tackle longer, more complex business processes. BDH is, by design, both observable and time-axis business-process aware. No need for the perfect, enormous data sets for learning, no need for extremely long context windows, or glue logic for observability.

What ethical considerations or safeguards are essential as AI systems begin to learn and adapt in real time?

Systems that learn with experience in fact have a better chance of being safe than the current, Transformer-based ones. One element of this is the fact that with continuous learning they have a chance to self-correct and update their preconceptions if they were wrong.

In order to ensure the safety of such systems, they need to get feedback over time, which means we need to keep feeding them fresh data, and potentially ensure feedback loops for them to understand the effects of their own functioning. This is close to Reinforcement Learning.

Second, a model based on BDH offers interpretability, which makes it easier to understand how it works, giving humans better control over it.

What would it take for a “post-Transformer” paradigm to become mainstream across the AI community?

A model out in the market that is extremely useful and has significantly lower costs of inference while being faster. We believe that there is a market win to achieve, especially in the enterprise.

Thank you for the great interview. Readers who wish to learn more should visit Pathway.