
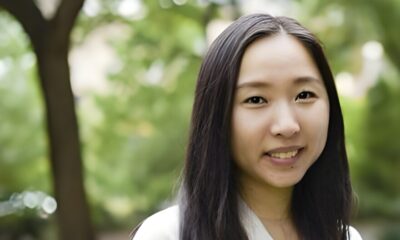

Olga Megorskaya, Founder and CEO of Toloka – Interview Series

Olga Megorskaya, founder and CEO of Toloka, has led the company since 2020, steering it from its original crowd-labeling roots into a major player in AI data services. Before founding and leading Toloka, she spent more than a decade at Yandex, culminating in a role as Head of Crowdsourcing and Platforms, where she built and scaled crowdsourced data-labeling infrastructure for machine-learning products including search, voice, speech, self-driving, content moderation, and more. Under her leadership at Toloka, the firm has positioned itself as a global data partner for AI — combining machine-learning technology with human expertise to support training, evaluation, and safety for large-scale AI models and agents.

Toloka is a Netherlands-based company headquartered in Amsterdam that provides high-quality, expertly curated data for AI development, ranging from annotation and labeling to custom datasets for supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). The company supports a wide variety of modalities — text, image, audio, video — and engages domain experts alongside a global crowd to deliver scalable, accurate datasets for training and evaluating advanced AI agents and large language models. Their services help improve capabilities such as reasoning, multilingual understanding, image/audio generation, and AI safety across industries and applications.

You spent years building human-in-the-loop systems at Yandex before co-founding Toloka in 2020. What was the moment that convinced you this needed to become its own company, and how did that experience shape your vision for Toloka’s role in AI development?

At a certain point, we realized that Toloka had outgrown its company of origin. AI researchers from across the world were using the platform, and we recognized that the company could achieve more as an independent entity. Consequently, Toloka was able to rely on the cumulative experience of hundreds of AI teams collecting training data for the full spectrum of AI solutions—from web search and voice assistants to GenAI chatbots and AI Agents. We have since combined our expertise in managing human effort with building and training AI Agents to create our new product, Tendem.

You’ve said the real disruption ahead won’t come from fully autonomous agents. What led you to conclude that hybrid agents—combining AI automation with human judgment—are the model that will ultimately win?

While I believe that AI-only solutions in many areas will soon reach an amazing level of quality to fully satisfy customers’ needs, there will always be a long tail of use cases that basic models will never be optimized for. This is where the hybrid approach will outperform both AI-only and human-only solutions for the foreseeable future. We’ve seen the same pattern play out repeatedly over the past decade: AI can scale tasks beautifully, but it struggles with nuance, context, and the kinds of judgment calls that matter in real business settings. Professionals want speed, but they also need reliability, which fully autonomous agents simply can’t guarantee today. Hybrid systems win because they solve both sides of the equation. AI handles what it’s best at: speed, structure, and repeatability. Human expertise fills the gaps: ambiguity, edge cases, and interpretation. When you combine the two natively, you get a workflow that is both fast and trustworthy. That’s the model professionals will ultimately rely on.

You’ve noted that AI breaks on ambiguity and multi-document reasoning. What kinds of tasks reveal these limitations most clearly?

Long-horizon tasks – those that require synthesizing multiple sources, reconciling conflicting information, or interpreting context, and which would take humans hours or even days to complete – tend to expose the current limits of AI. Examples include market research, competitive analysis, summarizing long-form documents, drafting original content from mixed inputs, or pulling out insights that hinge on human nuance versus pattern recognition. AI is excellent at producing a first pass. But the moment a task requires prioritization, judgment, or reconciling ambiguity, reliability drops sharply. That’s where human experts are essential.

Many professionals use AI but still don’t fully trust it. How does your approach rebuild confidence in delegating real work to AI-assisted systems?

Trust improves when people stop having to fix AI output. Our approach rebuilds confidence by integrating humans into the workflow from the start, not as an escalation path when the AI fails. Every task in Tendem flows through an AI project manager that decides what should be automated and what requires human expertise. After that, both automated checks and human QA ensure the final output is accurate, complete, and business-ready. For professionals, this means they can delegate work and actually expect a verified result, not a draft they have to repair.

What mechanisms ensure that human experts in the loop maintain high-quality output without slowing down the workflow?

Two things make this possible:

- Specialization at scale. Experts in Tendem’s network are pre-vetted, trained, and matched based on domain expertise. They aren’t general freelancers trying to adapt on the fly; they’re already qualified for specific types of tasks.

- Layered QA and smart routing. AI handles the bread-and-butter steps so human experts focus only on the parts that require judgment. Then there’s a second layer of automated QA and human verification where needed. This keeps humans engaged where they add the most value, not where they create bottlenecks.

The result is speed that rivals automation, with accuracy that rivals expert review.

In industries demanding accuracy and compliance, how can hybrid systems offer verifiability and auditability that businesses can rely on?

Hybrid systems inherently allow for auditability because every step in the workflow is tracked, attributed, and quality checked. You know which parts were automated, which were performed by vetted experts, and what QA steps validated the output. In highly regulated industries, this process becomes essential. You’re no longer relying on a black-box AI decision; you have a verifiable chain of judgment, corrections, and approvals. That’s what makes hybrid systems suitable for domains where accuracy and compliance cannot be optional.

Which categories of knowledge work do you expect to shift to hybrid agents first, and which will be slowest to adopt this model?

Overall, I think the hybrid approach is much easier to adopt than an AI-only approach, so we anticipate almost any domain can be covered with this model—the difference will just be in the proportion of AI versus human involvement. The earliest adopters are roles where speed and accuracy both matter, yet the work is still highly repetitive. We’re already seeing strong pull from consulting, marketing, sales operations, research, and content creation. The most complex (and probably the most interesting) areas to solve are those closer to the physical world, such as architecture and construction. But I do see a clear technological path to getting us there as well.

From an operational standpoint, how do organizations benefit when shifting from freelancer-managed work to hybrid agents?

Hybrid agents eliminate two of the biggest inefficiencies in freelancer-managed work: management overhead and inconsistent quality. Organizations and professionals no longer have to recruit, vet, brief, manage, or correct freelancers. The hybrid agent handles workflow orchestration automatically, assigning the right combination of AI and human expertise for each step. This reduces turnaround time, standardizes output quality, and scales work without scaling headcount. In short: you get the speed of automation with the reliability of an expert team, but without managing either.

As hybrid systems gain mainstream traction, what impact do you expect this shift to have on the broader freelance and gig economy?

We think it will reshape the freelance economy, not replace it. Instead of freelancers spending time on low-level, repetitive tasks, they can plug into hybrid systems as specialists in specific domains. The work becomes more structured: higher-value, more predictable, and more stable. Rather than chasing inconsistent projects, experts can contribute at scale through platforms like Tendem, where their skills are matched to the right tasks and supported by AI systems that remove busywork.

Looking ahead a few years, what does “delegation to AI” look like once hybrid agents become standard across digital work?

Delegation will feel less like “using a tool” and more like handing work to a capable team. You’ll describe the outcome you want, and the hybrid agent will break it down, route subtasks, apply the right mix of AI and human expertise, verify the results, and deliver a finished product, all within hours. The shift will be from using AI as a drafting assistant to trusting AI as a project manager that oversees an entire workflow. That’s when true delegation happens: when professionals can trust the system to deliver the final output, not just a starting point.

Thank you for the great interview. Readers who wish to learn more should visit Toloka.