
From Math Exams to Machine Reasoning: AI’s Latest Struggles

Recently, Artificial Intelligence (AI) reached a historic milestone at one of the world’s toughest math contests, the International Mathematical Olympiad (IMO). Google DeepMind’s Gemini Deep Think and an experimental OpenAI model each solved five of the six problems, scoring 35 out of 42 points, the threshold for a gold medal. DeepMind’s result was officially graded by IMO markers. OpenAI’s result, produced under the same time and tool restrictions as human contestants, was validated by former IMO gold medalists. Both systems wrote detailed natural-language proofs, demonstrating remarkable progress in AI’s mathematical reasoning.

Despite these contest results, AI still struggles with tasks that require creativity, abstract thinking, and in-depth logical analysis. These systems handle familiar problem types well, but they often fail on unfamiliar or highly complex tasks that demand original insight. This gap reveals the current limits of AI’s reasoning abilities and points to key areas for future research.

From Basic Calculators to AI Cognitive Contenders in Math

AI in mathematics began with simple rule-based tools. Early digital calculators could perform only basic arithmetic. Later, symbolic solvers and software such as Wolfram Alpha automated algebra and calculus. These systems followed strict rules and provided exact answers, but they could not explain their reasoning in natural language.

Large language models (LLMs) changed this approach. Unlike symbolic systems, LLMs learn from large collections of text. Initially, their math skills were limited, and they often failed on basic word problems. Fine-tuning gradually improved performance: training on datasets such as GSM8K and MATH helped them follow a step-by-step problem-solving approach. Chain-of-thought prompting further encouraged models to write out their full reasoning instead of giving short answers.
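To make the prompting idea concrete, the snippet below contrasts a direct prompt with a few-shot chain-of-thought prompt. It is a minimal sketch: the worked example, the `Answer:` convention, and the function names `build_cot_prompt` and `direct_prompt` are hypothetical, and no particular model or API is assumed. The closing cue "Let's think step by step." is the widely used zero-shot chain-of-thought phrase.

```python
# Sketch: a direct prompt vs. a few-shot chain-of-thought prompt.
# The worked example, the "Answer:" convention, and both function names
# are hypothetical illustrations, not any vendor's prompt format.

WORKED_EXAMPLE = (
    "Q: A shop sells pencils at 3 for $1. How much do 12 pencils cost?\n"
    "A: 12 pencils is 12 / 3 = 4 groups of 3 pencils. "
    "Each group costs $1, so the total is 4 * $1 = $4.\n"
    "Answer: 4\n"
)

def direct_prompt(question: str) -> str:
    """Ask for the answer immediately, with no requested reasoning."""
    return f"Q: {question}\nAnswer:"

def build_cot_prompt(question: str, few_shot: bool = True) -> str:
    """Ask the model to write out its reasoning before answering."""
    parts = []
    if few_shot:
        parts.append(WORKED_EXAMPLE)  # demonstrate one fully worked solution
    parts.append(f"Q: {question}\n")
    parts.append("A: Let's think step by step.")  # zero-shot CoT cue
    return "\n".join(parts)

print(build_cot_prompt("A train travels 90 km in 1.5 hours. What is its average speed?"))
```

The few-shot example shows the model the expected shape of a worked solution, while the closing cue invites it to continue in that step-by-step style rather than jumping straight to a number.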

By 2023 and 2024, top AI models matched human-level scores on many math benchmarks. They could explain multi-step solutions and solve Olympiad-style practice problems. In 2025, AI reached a new milestone: experimental systems from Google DeepMind and OpenAI achieved gold-medal-level scores at the IMO, each solving five of the six proof-based problems under the same time limits and tool restrictions as human participants. DeepMind’s result marked the first time an AI system earned a gold-medal score in official IMO grading, placing it at the level of the world’s top young mathematicians.

Why AI Still Struggles with Mathematical Reasoning

AI shows strong performance on many math tasks, yet its ability to reason deeply remains limited. The following sections explore the factors behind these limitations.

Overestimation from Standard Benchmarks

Many popular tests give an overly optimistic view of AI’s capabilities. Their problem sets often reuse questions or closely resemble tasks in the models’ training data. As a result, a model can score well by recognizing familiar patterns rather than by actually reasoning through new problems.
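One common way to screen for this kind of reuse is to measure how many word n-grams of a test problem also appear in training text. The sketch below assumes an 8-word window and a 50% threshold, both illustrative choices rather than values any specific benchmark uses; `ngrams`, `overlap_ratio`, and `is_contaminated` are hypothetical helpers, and the example problems are made up.

```python
# Sketch of a word n-gram overlap check for benchmark contamination.
# n=8 and threshold=0.5 are illustrative assumptions, not standard values.
import re

def ngrams(text: str, n: int) -> set:
    """Lower-cased word n-grams of a text."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def overlap_ratio(problem: str, corpus: list, n: int = 8) -> float:
    """Fraction of the problem's n-grams that also occur in the corpus."""
    problem_grams = ngrams(problem, n)
    if not problem_grams:
        return 0.0  # problem shorter than n words: nothing to compare
    corpus_grams = set()
    for doc in corpus:
        corpus_grams |= ngrams(doc, n)
    return len(problem_grams & corpus_grams) / len(problem_grams)

def is_contaminated(problem: str, corpus: list, n: int = 8,
                    threshold: float = 0.5) -> bool:
    """Flag a problem whose n-gram overlap reaches the threshold."""
    return overlap_ratio(problem, corpus, n) >= threshold

corpus = ["Find all positive integers n such that n squared plus one "
          "divides n cubed plus five, and prove your answer."]
reused = corpus[0]
reworded = "Determine every integer k > 0 for which k^2 + 1 is a factor of k^3 + 5."
print(is_contaminated(reused, corpus))    # True
print(is_contaminated(reworded, corpus))  # False
```

A verbatim reuse scores 1.0, while a reworded version of the same problem scores zero. That is also why simple overlap checks can miss paraphrased contamination, and why benchmarks built from new, unpublished problems are more trustworthy.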

FrontierMath Benchmark

To test AI more rigorously, researchers introduced FrontierMath in 2024. This benchmark contains hundreds of original problems created by expert mathematicians, including IMO gold medalists and a Fields Medalist. The problems cover advanced topics, including number theory, real analysis, algebraic geometry, and category theory. Because the problems are new and unpublished, FrontierMath avoids data contamination: AI models cannot simply recall answers they saw during training. When the benchmark was released, even the most advanced systems solved fewer than 2% of these problems. This is a dramatic drop from their scores on older benchmarks and highlights the gap between superficial success and genuine understanding.

RIMO and Olympiad-Style Challenges

RIMO, another benchmark, tests AI on Olympiad-style mathematics. It contains problems that require precise and verifiable proofs. The questions are adapted from past International Mathematical Olympiad problems and rewritten to avoid data contamination.

RIMO has two parts. One focuses on proof-based questions graded by experts, while the other uses problems with unique numeric answers for automatic scoring. Both formats demand logical precision.
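Problems with a unique numeric answer are what make automatic scoring possible. As a rough illustration, assuming a hypothetical output convention in which the model ends with a line `Answer: <integer>`, a grader only needs to extract that integer and compare it with the reference. The function names are illustrative, not RIMO’s actual grading code.

```python
# Sketch of automatic scoring for problems with a unique integer answer.
# The "Answer: <integer>" convention is a hypothetical output format.
import re
from typing import Optional

ANSWER_LINE = re.compile(r"^Answer:\s*(-?\d+)\s*$", re.MULTILINE)

def extract_final_answer(output: str) -> Optional[int]:
    """Return the integer on the last 'Answer:' line, or None if absent."""
    matches = ANSWER_LINE.findall(output)
    return int(matches[-1]) if matches else None

def accuracy(outputs: list, references: list) -> float:
    """Exact-match accuracy; missing or unparsable answers count as wrong."""
    correct = sum(extract_final_answer(out) == ref
                  for out, ref in zip(outputs, references))
    return correct / len(references)

outputs = ["Summing the cases gives 42.\nAnswer: 42",
           "Answer: 7",
           "I could not finish the argument."]
print(accuracy(outputs, [42, 8, 3]))  # 0.3333333333333333
```

Note what this grader cannot see: a correct integer reached through a broken argument still counts as correct, which is why the proof-based part relies on expert review.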

AI models that perform well on benchmarks like GSM8K often struggle on RIMO. They produce long proofs that look correct but contain hidden errors. This highlights a key limitation: AI can generate reasoning that seems convincing yet lacks a firm logical foundation.

Routine Problems vs Reasoning Problems

The distinction between routine and reasoning problems helps explain AI’s challenges in mathematics. Routine problems follow familiar patterns or templates. Many word problems or algebra exercises can be solved through pattern recognition. AI performs well on these tasks, often matching or even surpassing human accuracy.

Reasoning problems require more than pattern recognition. They demand creativity, abstract thinking, and flexible planning. Olympiad-style proofs, for example, test the ability to generate new ideas rather than repeat known solutions. AI can produce text that resembles proofs, but expert reviewers often find gaps in logic. Key steps may be missing or weakly justified, and some claims lack support. These shortcomings indicate that AI has not yet mastered true mathematical reasoning.

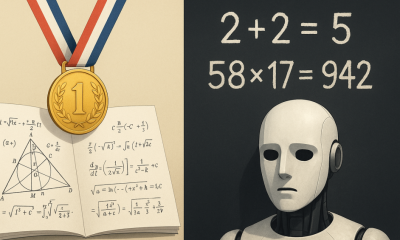

Limitations of Current AI Models

Current AI models have additional limitations. LLMs generate text by predicting the next token (roughly, the next word or word fragment) rather than by strictly applying symbolic or mathematical rules. This can lead to errors such as dropped signs or invalid algebraic manipulations. AI models also hallucinate, confidently presenting incorrect solutions. In education or research, these mistakes can mislead users or spread false knowledge.

Benchmark Scoring and Evaluation Issues

Evaluation methods add to these weaknesses. Many benchmarks check only the final answer and ignore the reasoning process. This rewards shortcuts over careful, step-by-step problem solving: a model can receive full credit for a correct answer reached through flawed or lucky reasoning, and the score reveals nothing about whether its logic is reliable.
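The gap between answer-only and step-level scoring shows up even on a trivial task. In this hypothetical sketch, each step is recorded as `(left, operator, right, claimed_result)`, a simplification assumed for illustration, since real graders must interpret free-form text. Two compensating arithmetic errors still yield the right final answer.

```python
# Sketch contrasting answer-only scoring with step-level scoring.
# Steps are hypothetical (left, operator, right, claimed_result) records.
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}

def answer_only_score(steps, reference):
    """1.0 if the last claimed result matches the reference, else 0.0."""
    return 1.0 if steps[-1][3] == reference else 0.0

def step_level_score(steps, reference):
    """Fraction of arithmetically correct steps, given a correct answer."""
    if steps[-1][3] != reference:
        return 0.0
    valid = sum(OPS[op](a, b) == claimed for a, op, b, claimed in steps)
    return valid / len(steps)

# Task: compute (3 + 5) * 2, whose correct value is 16.
flawed = [(3, "+", 5, 9), (9, "*", 2, 16)]  # two errors that cancel out
sound = [(3, "+", 5, 8), (8, "*", 2, 16)]

print(answer_only_score(flawed, 16), step_level_score(flawed, 16))  # 1.0 0.0
print(answer_only_score(sound, 16), step_level_score(sound, 16))    # 1.0 1.0
```

Answer-only scoring gives both solutions full marks; step-level scoring gives the flawed one zero.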

Real-World Impact of AI’s Reasoning Limits

AI has demonstrated strong results in math contests and benchmarks, but these achievements do not tell the full story. Weaknesses in AI’s reasoning create serious challenges in real-world applications.

In education, AI tutoring systems provide explanations and practice problems to support students. However, flawed reasoning can mislead learners. Students may adopt incorrect ideas, and teachers must spend additional time verifying and correcting AI outputs. This reduces the usefulness of AI as a teaching aid.

In scientific research, accuracy in reasoning is essential. Even small mistakes can disrupt experiments, waste resources, and lead to false conclusions. Such errors reduce confidence in AI as a research tool and slow progress in scientific work.

In medicine, both accuracy and clarity are critical. AI systems used for diagnosis or treatment must explain their recommendations accurately. If those explanations are incomplete or misleading, doctors and patients may lose trust in the system, and decisions based on flawed reasoning can lead to poor medical choices with serious consequences.

In law and finance, errors in reasoning can cause legal disputes or financial losses. Professionals in these fields require AI systems that adhere to consistent and logical rules to ensure fairness and reliability.

More broadly, public trust in AI is at risk. Reports of AI’s success in contests can create the impression that it has solved reasoning altogether. When it later fails on complex problems, public confidence declines, which limits the adoption of AI in areas where it could still provide value. For this reason, it is essential to communicate both AI’s abilities and its limitations clearly.

Strategies for Improving AI’s Reasoning Abilities

Researchers are investigating several approaches to address the reasoning challenges faced by AI. One important direction is neuro-symbolic AI, which combines neural networks with symbolic reasoning systems. Neural models are effective at processing and generating natural language, while symbolic solvers apply strict logical and algebraic rules. Their integration helps ensure correctness in complex tasks such as algebra and logic, reducing errors that arise in purely statistical models.
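A toy propose-and-verify loop illustrates this division of labor. In this sketch, `propose_roots` is a hypothetical stand-in for a neural model and simply returns hard-coded guesses, while exact rational arithmetic from Python’s `fractions` module stands in for a full symbolic solver.

```python
# Sketch of a neuro-symbolic propose-and-verify loop.
# propose_roots is a hypothetical stand-in for a neural model.
from fractions import Fraction

def propose_roots(coeffs):
    """Hypothetical 'neural' guesser for roots of a polynomial.
    Hard-coded here (a real model would condition on coeffs);
    one guess is deliberately wrong."""
    return [Fraction(2), Fraction(3), Fraction(-1, 2)]

def evaluate_poly(coeffs, x):
    """Evaluate the polynomial exactly with Horner's rule.
    coeffs are listed from the highest degree down."""
    result = Fraction(0)
    for c in coeffs:
        result = result * x + c
    return result

def verified_roots(coeffs):
    """Keep only proposals that the exact check confirms."""
    return [r for r in propose_roots(coeffs) if evaluate_poly(coeffs, r) == 0]

# x^2 - 5x + 6 = (x - 2)(x - 3)
print(verified_roots([1, -5, 6]))  # [Fraction(2, 1), Fraction(3, 1)]
```

Only the guesses that survive the exact check are kept, so an error in the proposer cannot reach the final output.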

Another approach is step verification. In this method, AI produces proofs step by step, and separate verification systems check each step for consistency. This process reduces false reasoning and hallucinations, making AI outputs more reliable in tasks requiring rigorous proofs.
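A simplified version of step checking can be sketched for algebraic derivations. Assuming each step is available as both a display string and a Python function of `x` (a hypothetical representation chosen for illustration), the checker below compares consecutive steps at several exact rational points and reports the first step that breaks equivalence.

```python
# Sketch of step verification for an algebraic derivation.
# Consecutive steps are compared at exact rational sample points;
# this identity test is an assumed stand-in for a real proof checker.
from fractions import Fraction

SAMPLE_POINTS = [Fraction(n, 7) for n in (-9, -2, 1, 3, 11)]

def first_invalid_step(steps):
    """Index of the first step not equivalent to the one before it,
    or None if every step agrees at all sample points."""
    for i in range(1, len(steps)):
        _, prev_f = steps[i - 1]
        _, f = steps[i]
        if any(prev_f(x) != f(x) for x in SAMPLE_POINTS):
            return i
    return None

# Simplifying (x + 1)^2 - (x - 1)^2, with a sign error in step 1.
derivation = [
    ("(x + 1)^2 - (x - 1)^2", lambda x: (x + 1) ** 2 - (x - 1) ** 2),
    ("(x^2 + 2x + 1) - (x^2 + 2x + 1)",
     lambda x: (x ** 2 + 2 * x + 1) - (x ** 2 + 2 * x + 1)),
    ("0", lambda x: 0 * x),
]

bad = first_invalid_step(derivation)
print(bad, derivation[bad][0])  # 1 (x^2 + 2x + 1) - (x^2 + 2x + 1)
```

For polynomial steps of degree at most four, agreement at these five distinct points actually proves the two sides are identical, since a nonzero polynomial of degree at most four has at most four roots. For other expressions it is only strong evidence, and a production system would rely on a proof assistant or CAS instead.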

Challenging benchmarks such as FrontierMath and RIMO also play a vital role. Because their problems are original, they resist memorization and require genuine reasoning. Using them to evaluate models pushes development beyond pattern recognition toward deeper understanding.

The use of external tools further supports AI reasoning. Some systems connect with Computer Algebra Systems (CAS) to perform precise calculations and manipulations. This reduces arithmetic mistakes and increases accuracy in multi-step problem solving.
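As a minimal sketch of this tool-use pattern, assume a hypothetical `exact_eval` tool that the model calls instead of doing arithmetic itself. Python’s standard `ast` and `fractions` modules can then evaluate simple arithmetic exactly and reject anything else; a real deployment would call a full CAS.

```python
# Sketch of an exact-arithmetic "calculator tool" an LLM could call.
# exact_eval is hypothetical; fractions.Fraction stands in for a CAS.
import ast
import operator
from fractions import Fraction

_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def exact_eval(expression: str) -> Fraction:
    """Evaluate +, -, *, / and parentheses over integers, exactly."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Anything else (names, calls, attributes...) is refused.
        raise ValueError(f"unsupported syntax: {ast.dump(node)}")
    return walk(ast.parse(expression, mode="eval"))

print(exact_eval("1/3 + 1/6"))      # 1/2
print(exact_eval("-(2 - 5) * 4"))   # 12
```

Restricting the accepted syntax tree keeps the tool safe to run on model-generated text, and returning a `Fraction` avoids floating-point rounding: `0.1 + 0.2 != 0.3` in floats, while `exact_eval("1/10 + 2/10")` is exactly 3/10.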

Reinforcement learning offers another promising strategy. By rewarding correct intermediate reasoning steps rather than only the final answer, an approach often called process supervision, this method guides models to focus on logical process and reliability.
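The difference between rewarding only the outcome and rewarding each step shows up in how credit is assigned. This sketch assumes a single sampled solution whose step-validity labels (hypothetical here) would come from a verifier or human annotators, and it uses illustrative reward values of +1 and -1.

```python
# Sketch: credit assigned to each reasoning step under two reward schemes.
# Validity labels and the +1/-1 reward values are illustrative assumptions.

def outcome_credit(num_steps: int, final_correct: bool) -> list:
    """Outcome supervision: with one undiscounted terminal reward, the
    return-to-go is the same for every step, so all steps get equal credit."""
    reward = 1.0 if final_correct else 0.0
    return [reward] * num_steps

def process_credit(step_is_valid: list) -> list:
    """Process supervision: each step is rewarded for its own validity."""
    return [1.0 if ok else -1.0 for ok in step_is_valid]

# A three-step solution whose second step is wrong but whose answer is right.
validity = [True, False, True]
print(outcome_credit(len(validity), final_correct=True))  # [1.0, 1.0, 1.0]
print(process_credit(validity))                            # [1.0, -1.0, 1.0]
```

Under outcome supervision, the invalid second step is reinforced just as much as the valid ones because the final answer happened to be right; process supervision penalizes it directly.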

Human-AI collaboration is also essential to overcoming these limitations. AI can generate lemmas or draft reasoning paths, while humans verify and refine results. In education, AI may provide practice problems and hints, but teachers ensure accuracy and context. In research, medicine, and law, experts critically review AI outputs before making decisions. This combination of AI speed and human judgment strengthens reliability.

Developers also need to improve evaluation protocols. This includes testing with unpublished datasets, adversarial problems, and scoring methods that assess reasoning steps in addition to final answers. Such evaluations encourage careful and detailed proofs rather than shortcuts.

The Bottom Line

The progress of AI in mathematics reflects both historic advances and unresolved challenges. From basic calculators to modern language models, AI has evolved into systems capable of performing at the level of top human contestants in international competitions. However, these successes do not mean that AI has mastered mathematical reasoning.

Rigorous benchmarks such as FrontierMath and RIMO expose persistent weaknesses in creativity, abstraction, and logical precision. These gaps raise serious concerns when AI is applied in education, research, medicine, law, or finance, where accuracy and trust are essential. Moving forward, combining symbolic logic, step-by-step verification, human collaboration, and more robust evaluation methods will be necessary for AI to achieve reliable reasoning and effectively address complex real-world problems.