
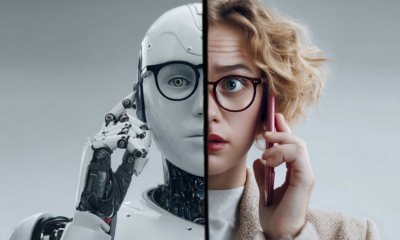

Expert Says “Perfectly Real” DeepFakes Will Be Here In 6 Months

DeepFakes, images and videos manipulated or generated by deep neural networks, are already impressive and controversial, and they are likely to become more of both in the near future, according to Hao Li, Director of the Vision and Graphics Lab at the University of Southern California. Li is an expert in computer vision and DeepFakes, and in a recent interview with CNBC he said that "perfectly real" DeepFakes are likely to arrive within half a year.

Li explained that most DeepFakes are still recognizable as fake to the naked eye, and that even the more convincing ones require substantial effort from their creators to look realistic. However, Li believes that as the algorithms grow more sophisticated, DeepFakes that look perfectly real are likely to emerge within six months.

Li initially predicted, at a recent conference hosted at the Massachusetts Institute of Technology, that it would take two to three years for extremely convincing DeepFakes to become commonplace. He revised that timeline after the emergence of the Chinese app Zao and other recent developments in DeepFake technology. Li told CNBC that the methods needed to create realistic DeepFakes are more or less the methods already in use, and that the main missing ingredient is more training data.

Anticipating the arrival of extremely convincing DeepFakes, Li and his fellow researchers have been hard at work on detection technology. Li and colleagues such as Hany Farid of the University of California, Berkeley have experimented with state-of-the-art DeepFake algorithms to understand how the technology that creates them works.

Li explained to CNBC:

“If you want to be able to detect deepfakes, you have to also see what the limits are. If you need to build A.I. frameworks that are capable of detecting things that are extremely real, those have to be trained using these types of technologies, so in some ways, it’s impossible to detect those if you don’t know how they work.”

Li and his colleagues are invested in creating tools to detect DeepFakes in acknowledgment of the potential issues and dangers that the technology poses. Li and colleagues are far from the only group of AI researchers concerned about the possible effects of DeepFakes and interested in creating countermeasures to them.

Recently, Facebook partnered with MIT, Microsoft and the University of Oxford to launch the DeepFake Detection Challenge, which aims to produce tools that can detect when images or videos have been altered. These tools will be open source and available to companies, media organizations, and governments. Meanwhile, researchers from the University of Southern California's Information Sciences Institute recently created a series of algorithms that can distinguish fake videos with around 96% accuracy.

Li also stressed that the problem with DeepFakes lies in how they can be misused, not in the technology itself. He noted several legitimate uses for DeepFake technology, including in the entertainment and fashion industries.

DeepFake techniques have also been used to replicate the facial expressions of people whose faces are obscured in images. Researchers used generative adversarial networks (GANs) to create an entirely new face with the same expression as the subject in an original image. The technique, developed at the Norwegian University of Science and Technology, could help convey facial expressions during interviews with people who need privacy, such as whistleblowers. A stand-in could lend their face to the person who needs anonymity, while that person's facial expressions could still be read.

As DeepFake technology grows more sophisticated, its legitimate use cases will multiply. So will its dangers, which makes the detection work done by Li and others even more important.