Enhancing AI Efficiency with Shorter Reasoning Chains in Large Language Models

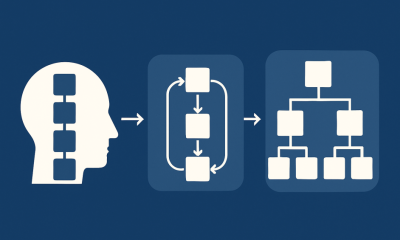

Large Language Models (LLMs) have transformed Artificial Intelligence (AI) by generating human-like text and solving complex problems in various industries. For years, AI experts believed that longer and more detailed reasoning chains would lead to higher accuracy. The assumption was that more steps would result in better and more reliable answers.

However, a 2025 study by Meta’s FAIR team and The Hebrew University of Jerusalem has questioned this belief. The research found that, for a given question, shorter reasoning chains can be up to 34.5% more accurate than the longest chain sampled, and that favoring them can reduce computational costs by up to 40%. This finding suggests that concise, focused reasoning can improve results while speeding up processing. These results could change how LLMs are trained, deployed, and scaled.

Why Shorter Reasoning Chains Matter in AI

For a long time, longer reasoning chains were assumed to produce better outcomes. The logic was simple: the more steps a model takes, the more information it processes, and the more likely it is to reach an accurate solution. As a result, many AI systems were designed to maximize the number of reasoning steps.

However, this approach has significant limitations. Longer reasoning chains require much more computational power, so the model needs more time and energy for each task. This leads to slower responses and higher operational costs, a major issue in real-time applications where fast responses are critical. Longer chains also leave more room for error: each additional step is another opportunity for a mistake to enter and carry through to the answer. The result is a model that is less efficient and harder to scale, which creates challenges in industries that require both speed and accuracy.

The research conducted by Meta and its collaborators challenges this traditional belief. Their study found that shorter reasoning chains can improve accuracy while reducing computational overhead. This means AI models can process tasks faster and at a lower cost without losing accuracy.

These findings suggest a change in AI development. The focus should shift from increasing the number of reasoning steps to optimizing the reasoning process. By using shorter reasoning chains, AI models can be more efficient. They can also offer more reliable results and complete tasks in less time.

Advancements in Reasoning Efficiency with the short-m@k Inference Framework

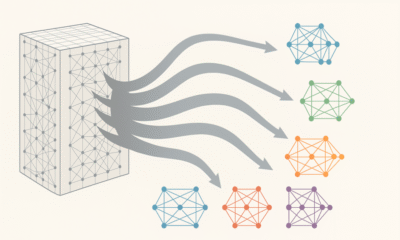

The study by Meta’s FAIR team and The Hebrew University of Jerusalem introduces the short-m@k inference framework, a new approach designed to optimize multi-step reasoning in LLMs. This framework moves away from traditional sequential reasoning and exhaustive majority-voting methods, instead leveraging parallelism combined with early termination criteria to improve efficiency and reduce computational costs.

In the short-m@k methodology, k reasoning chains are started in parallel. Generation halts as soon as the first m chains finish, the remaining k - m chains are cut off, and the final prediction is determined by majority vote over the m completed chains. Because the chains that finish first are also the shortest, this mechanism cuts down on unnecessary token generation, reducing computational overhead and latency while maintaining prediction accuracy.
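The selection rule can be sketched in a few lines of Python. This is a minimal illustration, not the study's implementation: `Chain`, `short_m_at_k`, and `tokens_generated` are hypothetical names, chain length is used as a proxy for finishing order (all chains are assumed to decode at the same rate), and breaking ties in favor of the chain that finished first is an assumption.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Chain:
    tokens: int  # reasoning tokens the chain needs before it answers
    answer: str  # final answer extracted from the chain


def short_m_at_k(chains: list[Chain], m: int) -> tuple[str, int]:
    """Vote over the first m chains to finish; return (answer, stop_point)."""
    if not 1 <= m <= len(chains):
        raise ValueError("m must be between 1 and k")
    # Assumption: equal decoding speed, so the shortest chains finish first.
    finished = sorted(chains, key=lambda c: c.tokens)[:m]
    votes = Counter(c.answer for c in finished)
    top = max(votes.values())
    # Assumed tie-break: among tied answers, keep the earliest finisher's.
    winner = next(c.answer for c in finished if votes[c.answer] == top)
    return winner, finished[-1].tokens


def tokens_generated(chains: list[Chain], stop_point: int) -> int:
    """Total tokens produced if every chain is cut off at stop_point."""
    return sum(min(c.tokens, stop_point) for c in chains)


# Made-up example data with k = 5 chains.
chains = [Chain(120, "42"), Chain(300, "41"), Chain(150, "42"),
          Chain(90, "17"), Chain(500, "41")]
answer, stop_point = short_m_at_k(chains, m=3)
saved = sum(c.tokens for c in chains) - tokens_generated(chains, stop_point)
print(answer, stop_point, saved)
```

With this made-up data, the three shortest chains finish at 90, 120, and 150 tokens, so every chain stops at 150 tokens instead of the longest one running to 500.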

The short-m@k framework includes two key variants, each optimized for different environments:

- short-1@k: This variant selects the first completed reasoning chain from the k parallel attempts. It is particularly effective in low-resource or latency-sensitive situations, achieving comparable or better accuracy with minimal computational costs.

- short-3@k: This version aggregates the results of the first three completed chains. It consistently outperforms traditional majority-voting methods in both accuracy and throughput, making it ideal for large-scale production environments that require high performance and efficiency.
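Both variants share the same control flow and differ only in the value of m. The sketch below shows that flow with Python's `asyncio`. It rests on stated assumptions: `fake_chain` is a hypothetical stand-in for a model call whose delay mimics chain length, `run_short_m_at_k` is an illustrative name rather than an API from the study, and ties go to the earliest finisher.

```python
import asyncio
from collections import Counter


async def fake_chain(delay: float, answer: str) -> str:
    """Hypothetical stand-in for one reasoning chain; delay mimics length."""
    await asyncio.sleep(delay)
    return answer


async def run_short_m_at_k(specs: list[tuple[float, str]], m: int) -> str:
    """Start k chains, keep the first m answers, cancel the rest, then vote."""
    if not 1 <= m <= len(specs):
        raise ValueError("m must be between 1 and k")
    tasks = [asyncio.create_task(fake_chain(d, a)) for d, a in specs]
    answers: list[str] = []
    try:
        for next_done in asyncio.as_completed(tasks):
            answers.append(await next_done)
            if len(answers) == m:
                break  # early termination: stop waiting for slower chains
    finally:
        for task in tasks:
            task.cancel()  # no effect on tasks that already finished
        await asyncio.gather(*tasks, return_exceptions=True)
    # most_common lists tied answers in first-seen order, so a tie goes to
    # the chain that finished earliest (an assumed tie-break rule).
    return Counter(answers).most_common(1)[0][0]


# Made-up workload: k = 5 chains as (delay in seconds, answer).
specs = [(0.03, "B"), (0.01, "A"), (0.02, "A"), (0.50, "C"), (0.60, "C")]
short_1 = asyncio.run(run_short_m_at_k(specs, m=1))  # short-1@k
short_3 = asyncio.run(run_short_m_at_k(specs, m=3))  # short-3@k
print(short_1, short_3)
```

In both calls the two slowest chains are cancelled long before they would have finished, which is where the latency and compute savings come from.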

Additionally, the short-m@k findings have implications for fine-tuning. Training on shorter, more effective reasoning sequences can help a model converge faster, improving both inference accuracy and the efficient use of computational resources during training and deployment.
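One way to act on this is to build a fine-tuning set from the shortest available chain for each question. The sketch below is an assumption about how such a filter could look, not the study's data pipeline: `Sample` and `shortest_correct_per_question` are hypothetical names, word count stands in for token count, and restricting the set to correct chains is an added design choice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    question_id: str
    reasoning: str  # the reasoning chain as text
    answer: str
    correct: bool   # whether the answer matched the reference


def length(sample: Sample) -> int:
    """Word count, used here as a rough proxy for token count."""
    return len(sample.reasoning.split())


def shortest_correct_per_question(samples: list[Sample]) -> list[Sample]:
    """Keep, for each question, the shortest chain that reached a correct answer."""
    best: dict[str, Sample] = {}
    for sample in samples:
        if not sample.correct:
            continue  # assumed design choice: never train on wrong answers
        current = best.get(sample.question_id)
        if current is None or length(sample) < length(current):
            best[sample.question_id] = sample
    return list(best.values())


# Made-up samples: three chains for q1, one for q2.
samples = [
    Sample("q1", "First add two and two, then double-check the sum", "4", True),
    Sample("q1", "2 + 2 = 4", "4", True),
    Sample("q1", "guess", "5", False),
    Sample("q2", "Paris is the capital of France", "Paris", True),
]
selected = shortest_correct_per_question(samples)
print([s.reasoning for s in selected])
```

The one-word chain for q1 is the shortest overall but is skipped because its answer is wrong, so the filter keeps the five-word chain instead.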

Implications for AI Development and Industry Adoption

Using shorter reasoning chains has a significant impact on AI model development, deployment, and long-term sustainability.

From a training perspective, shorter reasoning chains reduce computational complexity and resource use. This makes training LLMs less expensive and faster. It allows for quicker updates and more frequent improvements without needing more infrastructure.

In deployment, especially in applications that need fast responses, such as chatbots, trading platforms, and real-time decision systems, shorter reasoning chains improve processing speed. Each request occupies the hardware for less time, so the same infrastructure can handle more requests at once and scale more easily under heavy use.

Energy efficiency is another key benefit. By reducing the number of tokens and computations needed during training and inference, AI systems use less power. This lowers costs and helps the environment. As AI becomes more widespread and data centres face pressure to reduce energy consumption, this efficiency becomes more critical.

Finally, these efficiencies help speed up the entire AI development process. With shorter training times and faster inference, organizations can bring AI products and services to market more quickly. This helps them stay competitive and agile in a fast-moving tech world.

Overcoming Implementation Challenges and Strategic Recommendations for Shorter Reasoning Chains

While adopting shorter reasoning chains in LLMs brings clear benefits, there are practical challenges to overcome to make this approach fully effective.

One of the main challenges is the traditional design of AI systems, which have long focused on using longer reasoning chains. These systems were built on the belief that more steps would lead to better results. Moving to shorter chains requires revisiting model architectures, training methods, and optimization techniques. This change demands both technical skills and a willingness to adapt within organizations.

The quality and structure of the data also play a significant role. AI models that were trained on datasets designed for longer reasoning might struggle when switched to shorter reasoning paths. To make shorter chains effective, datasets need to be curated and structured in a way that supports quick, targeted reasoning steps. This is essential to ensure the model can maintain accuracy and performance.

Scalability is another challenge. Shorter reasoning chains work well in controlled environments, but applying them at scale, such as on e-commerce websites or in customer support systems, requires solid infrastructure. The system must handle high volumes of requests without slowing down or losing accuracy, which calls for careful planning and resource management.

To overcome these challenges, AI developers can consider the following strategies:

- Adopt the short-m@k inference framework: This approach uses parallel processing and early termination to balance speed and accuracy, making it ideal for real-time, latency-sensitive applications.

- Prioritize concise reasoning during training: Incorporate training methods that focus on shorter reasoning chains to reduce resource use and improve speed.

- Monitor reasoning chain metrics: Regularly track the length of reasoning chains and the model’s performance in real time. This helps make quick adjustments to keep the system efficient and accurate.
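A minimal sketch of the monitoring strategy keeps a rolling window of recent requests and reports mean chain length alongside accuracy. `ChainMonitor`, its window size, and the `max_mean_tokens` budget are hypothetical choices for illustration; a production system would more likely feed the same numbers into its existing metrics stack.

```python
from collections import deque
from statistics import mean


class ChainMonitor:
    """Rolling statistics over the most recent reasoning chains."""

    def __init__(self, window: int = 1000, max_mean_tokens: float = 800.0):
        # deque(maxlen=...) silently drops the oldest record when full.
        self.records = deque(maxlen=window)
        self.max_mean_tokens = max_mean_tokens  # hypothetical length budget

    def record(self, tokens: int, correct: bool) -> None:
        """Store the chain length and whether the answer was correct."""
        self.records.append((tokens, correct))

    def summary(self) -> dict:
        """Return mean length, accuracy, and a budget flag for the window."""
        if not self.records:
            raise ValueError("no chains recorded yet")
        mean_tokens = mean(tokens for tokens, _ in self.records)
        accuracy = mean(1.0 if ok else 0.0 for _, ok in self.records)
        return {
            "mean_tokens": mean_tokens,
            "accuracy": accuracy,
            "over_budget": mean_tokens > self.max_mean_tokens,
        }


# Made-up traffic: five requests into a window that holds four.
monitor = ChainMonitor(window=4, max_mean_tokens=400.0)
for tokens, correct in [(100, True), (200, True), (300, False),
                        (400, True), (1000, False)]:
    monitor.record(tokens, correct)
stats = monitor.summary()
print(stats)
```

Here the oldest request has already dropped out of the window, and the single 1,000-token chain is enough to push the mean length over the assumed budget.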

By following these strategies, AI developers can successfully implement shorter reasoning chains, leading to faster, more accurate, and scalable AI systems that meet both operational needs and cost-efficiency goals.

The Bottom Line

The research on shorter reasoning chains brings a new approach to AI development. Using shorter chains helps AI models work faster, more accurately, and with lower costs. This change is essential for industries where speed and cost are key.

By using shorter reasoning chains, AI systems can improve without needing more resources. This can help companies to develop and use AI more efficiently. Moving forward, this approach will help AI become even more valuable and adaptable to different needs. AI developers and companies should explore these new methods to stay ahead in a fast-changing technological world.