
What the GPT-5 Reaction Can Teach Us About the Future of LLMs

The debut of GPT-5 didn’t just spark headlines about smarter reasoning and bigger benchmarks. It also lit up forums, feeds, and communities with frustration. Some users felt blindsided by abrupt model swaps, others lamented the disappearance of familiar behaviors in 4o, and many worried that their workflows had been upended overnight.

That reaction is more than noise—it’s a signal. If language models are becoming infrastructure, then stability isn’t optional. It’s a feature. The rollout of GPT-5 shows us that the future of LLMs won’t only be judged on IQ tests and benchmarks but also on whether people can trust the foundation under their tools.

The Reaction to GPT-5: More Than Excitement

When GPT-5 landed, the narrative most expected was one of technical triumph. Better reasoning, improved memory, smoother interactions—the standard story of incremental but impressive progress. Yet what quickly emerged online was something different: a wave of irritation from everyday users.

They weren’t doubting the advances of the model; they were questioning the disruption it caused. Teams that had calibrated prompt strategies around GPT-4o found them breaking.

Developers who had built fine-tuned workflows around specific quirks suddenly had to rethink them. For them, GPT-5 was progress wrapped in instability. They didn’t care about the improved ability to review contracts with AI or fancy one-prompt three.js webpages; they cared about continuity.

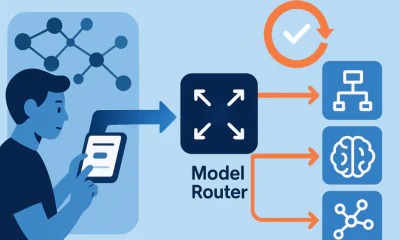

This points to a broader truth: people don’t use LLMs in isolation; they embed them into systems, products, and daily routines. Each model version becomes a piece of infrastructure. Just as a cloud provider can’t casually change how its servers behave, a model provider can’t simply swap out models without ripple effects.

The initial reaction to GPT-5 was therefore less about the science of AI and more about the social contract of product trust. It revealed that progress must be measured not just in raw intelligence but in reliability and predictability.

Stability as the New Frontier

The GPT-5 moment underscored that in AI, stability is as valuable as novelty. Every time a model changes, it risks breaking the invisible scaffolding that supports countless user applications. Think of translation services that suddenly output differently structured text, or customer support systems where tone shifts break alignment with brand voice. These disruptions may look minor from a distance but have outsized effects on operations.
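One way a team might catch this kind of silent drift is a contract check on model output, run against a new model version before switching over. The following is only a minimal sketch, assuming an application that expects JSON replies with a few fixed fields; `REQUIRED_KEYS`, `check_reply`, and the sample replies are hypothetical, and no real provider API is called.

```python
import json

# Hypothetical contract: the fields a downstream support tool expects.
REQUIRED_KEYS = {"answer": str, "tone": str, "sources": list}

def check_reply(raw: str) -> list[str]:
    """Return a list of contract violations for one model reply (empty = OK)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["reply is not valid JSON"]
    if not isinstance(data, dict):
        return ["reply is not a JSON object"]
    problems = []
    for key, expected_type in REQUIRED_KEYS.items():
        if key not in data:
            problems.append(f"missing key: {key}")
        elif not isinstance(data[key], expected_type):
            problems.append(f"wrong type for {key}")
    return problems

# Illustrative replies: one in the old structure, one with new chatty framing.
old_style = '{"answer": "Reset it in Settings.", "tone": "friendly", "sources": []}'
new_style = 'Sure! Here is the answer:\n{"answer": "Reset it in Settings."}'

print(check_reply(old_style))
print(check_reply(new_style))
```

A check like this says nothing about answer quality. It only flags the structural changes that tend to break integrations, which is the kind of disruption described above.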

Users voiced frustration because they expect LLMs to act like infrastructure, not experiments. That expectation reframes how future development must be approached. Benchmark wins are still celebrated, but they’re no longer the sole measure of success.

As OpenAI learned firsthand, trust is now a performance metric. The companies shaping this space will need to consider stability guarantees, backward compatibility, and clearer communication around changes. The future of LLMs may look less like a parade of new releases and more like the gradual refinement of stable platforms.

The reaction to GPT-5 shows that raw intelligence has diminishing returns if it comes with unpredictability. A model can solve harder logic puzzles, but if it breaks an API integration overnight, users may feel it’s a step backward. The future belongs to those who balance capability with consistency.

Deprecation and Loss as Breaking Points

The most emotional reactions weren’t about GPT-5’s capabilities at all—they were about the deprecation of 4o. For many, GPT-4o wasn’t just a version; it was a trusted collaborator. People had built habits, systems, even identities around how it behaved. Losing access felt like losing an essential tool.

This mirrors patterns from software history. Deprecating a library or API without a reliable alternative has always sparked backlash. The same dynamics apply here, only magnified by the fact that these models aren’t just tools—they feel conversational, almost alive. Their quirks become familiar, their responses predictable, and their sudden absence jarring.

The lesson is clear: future LLM rollouts need more graceful transitions. Deprecations must be accompanied by long runway periods, especially when OpenAI still has to walk back critical privacy blunders. Otherwise, each upgrade risks alienating the very communities that championed earlier models. The backlash against GPT-5 was mourning for the old, not rejection of the new. Developers and users alike need continuity, not rupture, if LLMs are to truly integrate into everyday infrastructure. Sure, maintaining multiple models, especially less efficient ones, can be cumbersome, but is it worth sacrificing your customer base in favor of blind accelerationism? I didn’t think so.

Trust as Infrastructure

What became clear from the GPT-5 discourse is that LLMs are now treated as critical, real-world infrastructure. And infrastructure runs on trust. A power grid isn’t judged solely on innovation in energy generation; it’s judged on uptime. The same will be true of LLMs. Users will care less about abstract benchmarks and more about whether the model will work tomorrow as it does today.

That means the future of large models will require new forms of product management. Stability roadmaps, communication strategies, and guarantees of backward compatibility will matter as much as breakthroughs in architecture. Just as cloud providers advertise “five nines” of reliability, LLM providers may need to define and publish behavioral consistency metrics. Trust, not novelty, becomes the value proposition.
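What a behavioral consistency metric would look like in practice is an open question. As a rough illustration only, one could replay a fixed set of prompts against two model versions and average how similar the replies are. The sketch below assumes the replies have already been collected into dictionaries keyed by prompt; `consistency_score` and the sample data are hypothetical, and surface-level text similarity from Python's `difflib` is used as a crude stand-in for whatever comparison a provider might actually choose.

```python
from difflib import SequenceMatcher

def consistency_score(baseline: dict[str, str], candidate: dict[str, str]) -> float:
    """Mean text similarity (0.0 to 1.0) over prompts present in both runs."""
    shared = baseline.keys() & candidate.keys()
    if not shared:
        raise ValueError("no shared prompts to compare")
    ratios = [
        SequenceMatcher(None, baseline[p], candidate[p]).ratio()
        for p in shared
    ]
    return sum(ratios) / len(ratios)

# Illustrative data: replies from an older and a newer model to the same prompts.
old_replies = {
    "greet": "Hi there! How can I help you today?",
    "refund": "You can request a refund within 30 days.",
}
new_replies = {
    "greet": "Hello. What do you need?",
    "refund": "You can request a refund within 30 days.",
}

print(round(consistency_score(old_replies, new_replies), 2))
```

A score near 1.0 would suggest the new version answers the tracked prompts much as the old one did. Two replies can differ in wording yet agree in substance, so a real metric would likely need a semantic or rubric-based comparison rather than string similarity.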

This doesn’t mean innovation slows down. It means innovation must be layered on top of stable foundations. Experimental models can still push the frontier, but production-grade models must act like infrastructure—predictable, stable, and boring in the best possible way. GPT-5’s rocky reception was a reminder that the audience has grown up. They’re not just marveling at magic tricks anymore; they’re depending on reliability.

Conclusion

The GPT-5 rollout was supposed to be about progress, but it revealed something deeper: people now expect language models to behave like stable infrastructure. The backlash wasn’t against intelligence gains but against the erosion of trust. If models are to become the backbone of software and daily workflows, they must earn reliability as much as they earn benchmarks. The future of LLMs will belong to those who understand that stability, communication, and continuity are features in their own right. Progress without trust is fragility. GPT-5’s reception made that lesson impossible to ignore.