The New Digital Divide in AI: Why Edge-Ready, CPU-First Models Will Win the Cost War

The global artificial intelligence (AI) market is expanding at a staggering pace. In 2024, it was valued at $257.68 billion, with projections placing it at $371.71 billion by the end of 2025 and surging to an eye-popping $2.4 trillion by 2032. That is nearly a tenfold increase in less than a decade, a trajectory that rivals some of the most transformative technological booms in modern history.

Over the past decade, roughly 1,500 newly founded AI companies have each secured investments exceeding $1.5 million, signaling not just a wave of innovation but also fierce competition. Established companies are not sitting on the sidelines either. According to a January McKinsey industry report, a striking 92% of organizations plan to increase their AI spending over the next three years.

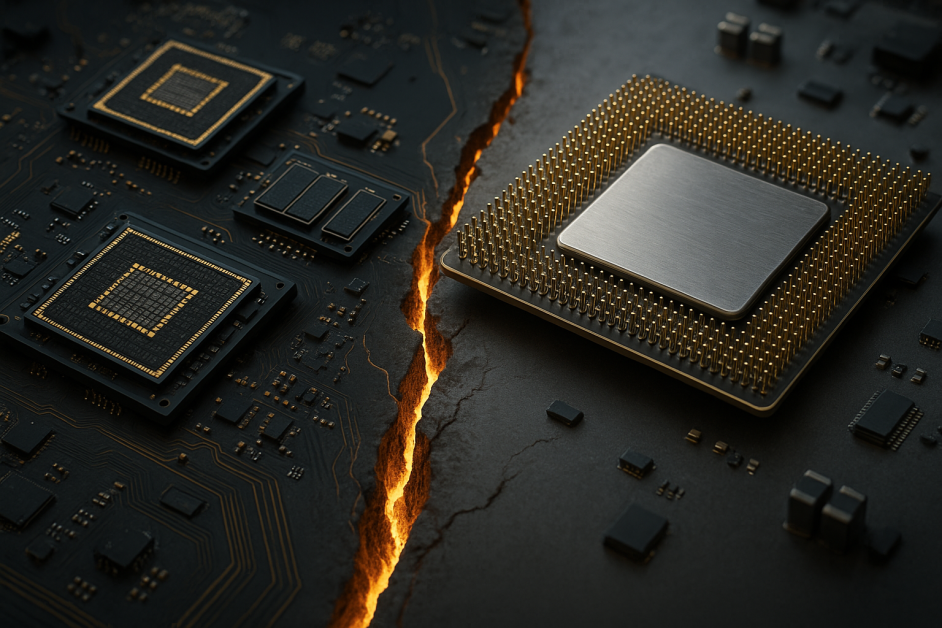

But as AI adoption accelerates, the infrastructure supporting it is showing cracks. Over the last two years, AI has shifted from eye-catching demos to persistent, real-world workloads.

The true bottleneck isn’t just model quality; it’s where and how those models run. A new digital divide is forming, not around access to data or talent, but around computing strategy. Organizations face a crucial fork in the road: continue relying on cloud-centric systems built around graphics processing units (GPUs), or embrace leaner, edge-ready architectures that put central processing units (CPUs) first and are cheaper to run at scale, easier to deploy in diverse environments, and better aligned with privacy and latency needs.

These architectural choices matter because the real strain isn’t in building models, but in running them day after day. This is where inference costs quickly eclipse training and define the economics of AI at scale.

Inference Is Eating AI Budgets

While headlines often highlight the massive expense of training frontier models, inference is the bill that never stops. Stanford’s 2025 AI Index notes that rapid advances in small models have driven down the cost of achieving “GPT-3.5-level” performance by more than 280× between late 2022 and late 2024. Yet the same report underscores the industry’s obsession with optimizing inference efficiency.

Cloud GPU pricing has only heightened the pressure. Renting high-end GPU instances can, over a three-to-five-year horizon, cost nearly double the price of owning the same hardware outright. Elasticity is useful for spiky workloads, but long-running inference “leases” quietly bleed budgets. Even NVIDIA, whose business depends on accelerators, has spent the past year aggressively optimizing inference across its stack. This is evidence that the real battleground is shifting from training performance to serving economics.
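The rent-versus-own comparison above can be sketched as simple arithmetic. The snippet below is a minimal illustration, not a pricing model: every figure in it (hourly rental rate, purchase price, operating cost) is a hypothetical placeholder, and it assumes operating costs scale with running hours and ignores resale value, staffing, and financing.

```python
from dataclasses import dataclass
from typing import Optional

HOURS_PER_YEAR = 24 * 365


@dataclass
class GpuCostAssumptions:
    """Hypothetical inputs; replace with real quotes before drawing conclusions."""
    rent_per_hour: float      # cloud price for one GPU instance, USD/hour
    purchase_price: float     # up-front cost of equivalent hardware, USD
    own_opex_per_hour: float  # power, cooling, hosting while running, USD/hour
    utilization: float = 1.0  # fraction of wall-clock hours the workload runs


def rental_cost(a: GpuCostAssumptions, years: float) -> float:
    """Cumulative cost of renting for the given horizon."""
    return a.rent_per_hour * HOURS_PER_YEAR * a.utilization * years


def ownership_cost(a: GpuCostAssumptions, years: float) -> float:
    """Purchase price plus running costs over the same horizon."""
    return a.purchase_price + a.own_opex_per_hour * HOURS_PER_YEAR * a.utilization * years


def break_even_years(a: GpuCostAssumptions) -> Optional[float]:
    """Years after which owning becomes cheaper than renting, or None if never."""
    hourly_saving = (a.rent_per_hour - a.own_opex_per_hour) * a.utilization
    if hourly_saving <= 0:
        return None
    return a.purchase_price / (hourly_saving * HOURS_PER_YEAR)


if __name__ == "__main__":
    # Illustrative numbers only, not taken from any vendor's price list.
    example = GpuCostAssumptions(rent_per_hour=2.0, purchase_price=30_000.0,
                                 own_opex_per_hour=0.5)
    horizon = 4
    print(f"Rent for {horizon} years: ${rental_cost(example, horizon):,.0f}")
    print(f"Own for {horizon} years:  ${ownership_cost(example, horizon):,.0f}")
    print(f"Break-even after:  {break_even_years(example):.2f} years")
```

Lowering `utilization` pushes the break-even point out, which is the sense in which elasticity favors spiky workloads while steady, always-on inference favors ownership.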

This emerging cost crunch means that organizations unwilling or unable to rethink their compute strategies risk being left behind.

Why the Edge (and CPUs) Change the Cost Curve

The harsh reality is that GPU-centric inference creates unsustainable economics. Running large, real-time AI workloads on expensive GPUs not only drives up costs but also accelerates hardware depreciation. Innovation cycles move so fast, often with less than 18 months between chip generations, that infrastructure investments lose value quickly. Analysts are now warning about depreciation costs tied to AI chip purchases, and some are already slashing earnings estimates. For instance, Alphabet is projected to absorb $28 billion in depreciation costs by 2026.

Factories, clinics, retail stores, and mobile devices are where AI will increasingly need to operate. Shipping every request to a centralized GPU cluster is often the wrong tool for the job because it’s expensive, energy-intensive, and prone to latency and privacy concerns.

Edge environments aren’t homogeneous GPU farms. They’re diverse fleets of CPUs: servers, ruggedized PCs, laptops, and handheld devices. This diversity makes CPUs a natural foundation for cost-efficient AI deployment.

In this new landscape, CPUs are not just a fallback; they are the cost-smart path to scalable, accessible AI.

GPUs as the “Private Jet” of AI

As models become larger and more complex, they demand more GPU power, which not only drives up infrastructure and energy costs but also concentrates advanced AI capabilities in the hands of those who can afford them.

Studies show that large, general-purpose generative models often use vastly more energy and generate significantly higher carbon emissions per 1,000 inferences than smaller, task-specific systems. Even when controlling for parameter count, GPU-heavy architectures amplify both financial and operational barriers. Over time, this creates a bottleneck, making it disproportionately difficult for startups, researchers, and under-resourced communities to access cutting-edge AI tools.

It’s an exclusivity problem. GPUs are like the private jets of AI: fast and powerful, but accessible only to a small circle of well-funded organizations.

But acknowledging these limits does not mean dismissing GPUs altogether. They remain exceptional for certain model classes and throughput patterns. A CPU-first strategy is not anti-GPU. It is a cost-smart solution.

This approach broadens access and ensures that AI deployment is driven by efficiency, not prestige. Instead of a future defined by GPU exclusivity, CPUs open the door to scalable, sustainable, and inclusive AI deployment.

The Necessary Switch to CPU-Driven Models

If the AI economy is to scale sustainably, the solution is to reimagine how models are trained and deployed. One approach is to prioritize high-entropy data and edge cases during training. These inputs drive meaningful progress and can reduce the need for massive datasets, allowing models to run with fewer parameters while remaining highly effective.

By being compact enough to operate on commodity CPUs, whether in laptops, smartphones, servers, or Internet of Things (IoT) devices, these models drastically reduce inference costs and energy usage. They also enable real-time processing directly on-device, cutting latency and enhancing privacy by keeping sensitive data local.

This shift is not only about cost; it’s also about equity. In sectors like healthcare, where “deserts” of access already exist, edge-ready CPU deployment could bridge gaps by delivering advanced AI tools directly into clinics, call centers, or field devices without reliance on scarce, centralized compute. The result is broader adoption, improved resilience, and a more inclusive distribution of AI benefits.

From Power to Access: CPUs as the Great Equalizer in AI

The coming years will not just test who can build the most powerful AI models, but who can deliver them efficiently, sustainably, and at scale. CPU-optimized, edge-ready models offer a way forward. By enabling AI to run effectively on commodity hardware, they lower barriers for startups and researchers, reduce dependence on fragile supply chains, and bring advanced applications into environments where centralized GPU clusters are impractical.

Evaluating AI infrastructure through metrics like total cost per transcribed hour, deployability scores, and edge readiness ensures that solutions are judged not only by benchmark accuracy but also by their ability to scale affordably and inclusively in the real world.
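As one illustration, a metric such as total cost per transcribed hour could be computed along the following lines. This is a sketch under stated assumptions: the article does not define the metric, so the formula here (instance cost divided by hours of audio processed per wall-clock hour) is an assumed definition, and the function name, parameters, and all sample figures are hypothetical.

```python
def cost_per_transcribed_hour(instance_cost_per_hour: float,
                              real_time_factor: float,
                              concurrent_streams: int = 1) -> float:
    """Assumed definition: USD spent per hour of audio transcribed.

    instance_cost_per_hour: what the machine costs to run, USD per wall-clock hour.
    real_time_factor: processing seconds needed per second of audio (lower is faster).
    concurrent_streams: audio streams the machine handles in parallel at that speed.
    """
    if instance_cost_per_hour < 0:
        raise ValueError("instance_cost_per_hour must be non-negative")
    if real_time_factor <= 0:
        raise ValueError("real_time_factor must be positive")
    if concurrent_streams < 1:
        raise ValueError("concurrent_streams must be at least 1")
    # Hours of audio the machine can transcribe in one wall-clock hour.
    audio_hours_per_hour = concurrent_streams / real_time_factor
    return instance_cost_per_hour / audio_hours_per_hour


if __name__ == "__main__":
    # Hypothetical deployments; the figures are placeholders, not benchmarks.
    candidates = {
        "commodity CPU server": dict(instance_cost_per_hour=0.40,
                                     real_time_factor=0.5, concurrent_streams=4),
        "cloud GPU instance": dict(instance_cost_per_hour=3.00,
                                   real_time_factor=0.05, concurrent_streams=2),
    }
    for name, params in candidates.items():
        cost = cost_per_transcribed_hour(**params)
        print(f"{name}: ${cost:.3f} per transcribed hour")
```

Which option comes out cheaper depends entirely on the measured throughput and prices plugged in, which is the point of judging infrastructure on a per-unit-of-work basis rather than on raw speed.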

The stakes are high. If the industry continues to treat GPUs as the default, access will remain exclusive, innovation will be concentrated, and diffusion into public services, healthcare, and underserved sectors will lag. But if CPU-first, edge-ready strategies take hold, AI can become more resilient, private, and sustainable. This doesn’t just level the playing field; it redefines it.