Soft Robot Sweats to Regulate Temperature

Researchers at Cornell University have developed a soft robotic muscle that can regulate its temperature by sweating. It is one of many recent developments transforming the field of soft robotics.

This kind of thermal management is fundamental to creating untethered, high-powered robots that can operate for long periods without overheating.

The project was led by Rob Shepherd, an associate professor of mechanical and aerospace engineering at Cornell.

The team’s paper, titled “Autonomic Perspiration in 3D-Printed Hydrogel Actuators,” was published in Science Robotics.

One of the most difficult aspects of developing enduring, adaptable and agile robots is managing their internal temperature. According to Shepherd, if the high-torque-density motors and exothermic engines that power a robot overheat, the robot will malfunction or stop completely.

The problem is especially acute in soft robots, which are made of synthetic materials. These materials make soft robots more flexible, but they also hold heat, whereas metals dissipate heat much faster. Internal cooling hardware such as a fan is not a good fit either, because it would take up too much space inside the robot and add weight.

With these challenges in mind, Shepherd’s team turned to mammals and their natural ability to sweat for inspiration for a cooling system.

“The ability to perspire is one of the most remarkable features of humans,” said co-lead author T.J. Wallin, a research scientist at Facebook Reality Labs. “Sweating takes advantage of evaporated water loss to rapidly dissipate heat and can cool below the ambient environmental temperature. … So as is often the case, biology provided an excellent guide for us as engineers.”

Shepherd’s team partnered with the lab of Cornell engineering professor Emmanuel Giannelis. Together, they created the nanopolymer materials needed for sweating. They made these materials with a 3D-printing technique called multi-material stereolithography, which uses light to cure resin into predesigned shapes.

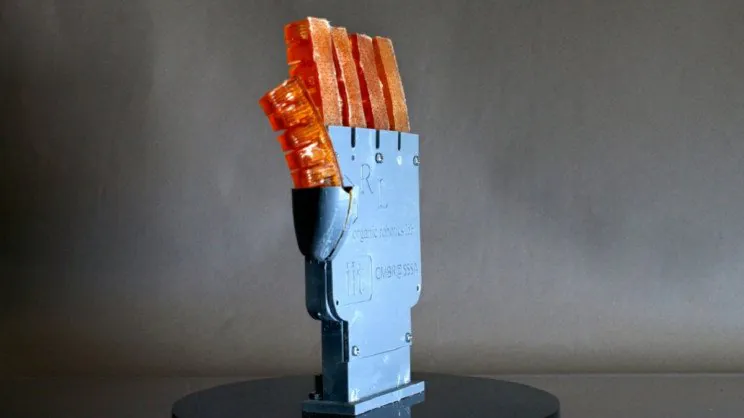

The researchers then fabricated fingerlike actuators composed of two hydrogel materials that retain water and respond to temperature. In effect, the actuators are “smart” sponges. The base layer, made of poly-N-isopropylacrylamide, shrinks when the temperature rises above 30°C (86°F). This contraction squeezes water up into a top layer of polyacrylamide perforated with micron-sized pores. The pores respond to the same temperature range: they dilate automatically to release the “sweat,” then close again when the temperature drops below 30°C.

As the water evaporates, the actuator’s surface temperature drops by 21°C within 30 seconds. According to the researchers, this cooling process is three times more efficient than sweating in humans. When exposed to wind from a fan, the actuators cool off about six times faster.

The technology has drawbacks. Sweating can affect a robot’s mobility, and the robots must replenish their water supply. For this reason, Shepherd envisions soft robots that will eventually both perspire and drink like mammals.

This development follows a clear pattern in the robotics industry: technology is increasingly modeled on the natural world. Whether it is the sweat-based cooling of mammals, neural networks based on moon jellyfish, or artificial skin, robotics in many ways builds on what already exists in nature.