New Research Makes Breakthrough in Quantum Computing

A team at Los Alamos National Laboratory has made a breakthrough in quantum computing. A new theorem shows that quantum convolutional neural networks are guaranteed to be trainable on quantum computers, overcoming a problem known as “barren plateaus” that hampers the optimization of many quantum neural networks.

The research was published in Physical Review X.

Barren Plateaus – Fundamental Solvability Problem

Convolutional neural networks running on quantum computers could analyze data better than classical computers can. However, a fundamental solvability problem known as “barren plateaus” has challenged researchers by limiting the application of these networks to large data sets.

Marco Cerezo, a Los Alamos physicist who specializes in quantum computing, quantum machine learning, and quantum information, is a co-author of the paper, titled “Absence of Barren Plateaus in Quantum Convolutional Neural Networks.”

“The way you construct a quantum neural network can lead to a barren plateau — or not,” said Cerezo. “We proved the absence of barren plateaus for a special type of quantum neural network. Our work provides trainability guarantees for this architecture, meaning that one can generically train its parameters.”

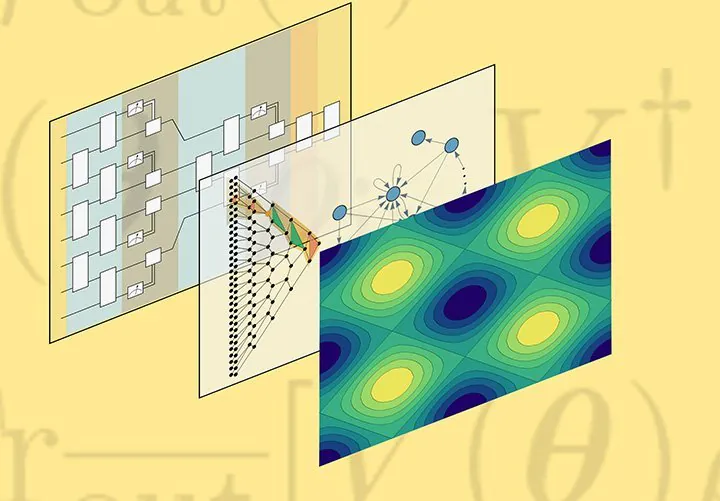

A quantum convolutional neural network consists of a series of convolutional layers interleaved with pooling layers, which reduce the dimension of the data while keeping its important features.
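
A minimal sketch of how these layers shrink the register, assuming that each pooling layer keeps one qubit from every neighbouring pair (a common choice in QCNN designs, not necessarily the exact layout used in the paper). The function name `qcnn_schedule` is hypothetical, and the code only tracks which qubits the gates act on; it does not simulate a quantum state.

```python
def qcnn_schedule(n_qubits):
    """Return, for each conv+pool layer, the active qubits, the qubit pairs
    touched by the convolution, and the qubits kept after pooling.

    Assumption: pooling keeps every other active qubit (halving the register),
    and the loop stops when one qubit remains to be measured as the output.
    """
    if n_qubits < 1 or n_qubits & (n_qubits - 1):
        raise ValueError("this sketch assumes n_qubits is a power of two")
    active = list(range(n_qubits))
    layers = []
    while len(active) > 1:
        # Convolution: a two-qubit gate on every neighbouring pair of active
        # qubits (QCNNs typically share one set of parameters across them).
        conv_pairs = list(zip(active[:-1], active[1:]))
        # Pooling: keep one qubit from each neighbouring pair, drop the other.
        kept = active[1::2]
        layers.append({"active": active, "conv_pairs": conv_pairs, "kept": kept})
        active = kept
    return layers


if __name__ == "__main__":
    for layer in qcnn_schedule(8):
        print(len(layer["active"]), "->", len(layer["kept"]))
    # 8 -> 4
    # 4 -> 2
    # 2 -> 1
```

Because every pooling layer halves the register under this assumption, an input of n qubits needs only log2(n) convolution-plus-pooling layers, so the network's depth grows logarithmically with the input size.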

These networks could serve a wide range of applications, such as image recognition and materials discovery. To realize the full potential of quantum computers in AI applications, however, barren plateaus must be overcome.

According to Cerezo, researchers in quantum machine learning have so far studied how to mitigate the effects of barren plateaus, but they lacked a theoretical basis for avoiding the problem altogether. The team’s paper begins to change this by proving that some quantum neural networks are immune to barren plateaus.

Patrick Coles, a quantum physicist at Los Alamos, is also a co-author of the paper.

“With this guarantee in hand, researchers will now be able to sift through quantum-computer data about quantum systems and use that information for studying material properties or discovering new materials, among other applications,” said Coles.

Vanishing Gradient

The core problem is a “vanishing gradient” in the optimization landscape, which can be pictured as a terrain of hills and valleys. Training adjusts the model’s parameters to explore this terrain in search of a solution, which usually lies at the bottom of the lowest valley. When the landscape is flat, however, the gradient gives no indication of which direction leads downhill, so the solution cannot be found.
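
Why a flat landscape stalls training can be sketched with standard gradient descent, written here in our own notation (cost C, parameters θ, learning rate η, N measurement shots per gradient estimate; none of these symbols come from the paper). On a quantum computer each partial derivative is estimated from repeated measurements, so it carries shot noise of order N^(-1/2). Assuming, as is usual in these analyses, that the gradient averages to zero over random parameters, its typical size is the square root of its variance, which gives the shot count in the last line.

```latex
\begin{aligned}
\theta_{t+1} &= \theta_t - \eta\,\nabla_{\theta} C(\theta_t)
  && \text{(one gradient-descent step)}\\
\widehat{\partial_k C} &= \partial_k C + \mathcal{O}\big(N^{-1/2}\big)
  && \text{(estimate from } N \text{ shots)}\\
N &\gtrsim \frac{1}{\operatorname{Var}_{\theta}[\partial_k C]}
  && \text{(shots needed to resolve a typical gradient)}
\end{aligned}
```

If the gradient variance shrinks exponentially as the problem grows, the number of shots needed to tell the slope apart from zero grows exponentially, which is why a flat landscape blocks training in practice and not only in principle.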

The problem worsens as the number of data features grows: the landscape becomes exponentially flatter as the feature size increases. This exponential flattening is what defines a barren plateau, and it prevents the quantum neural network from being scaled up.
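
Formally, a landscape is usually said to have a barren plateau when the variance of the gradient, taken over random parameter choices, shrinks exponentially with the number of qubits n; the paper’s result is stated as this variance decaying no faster than polynomially in n for quantum convolutional neural networks. The sketch below illustrates what “exponentially flat” means using a toy model that is an assumption for illustration only: unentangled qubits, each rotated by RY(θ_i), scored with a global cost that looks at all qubits at once versus a local cost that averages single-qubit terms. It is not the QCNN analyzed in the paper and not the team’s graphical method, and all function names are hypothetical.

```python
import math
import random

# Toy model (illustrative assumption, not the paper's QCNN): n unentangled
# qubits, each prepared as RY(theta_i)|0>. Qubit i reads 0 with probability
# cos^2(theta_i / 2).


def global_cost_grad(thetas):
    """Derivative w.r.t. theta_0 of C_G = 1 - prod_i cos^2(theta_i / 2)."""
    rest = 1.0
    for t in thetas[1:]:
        rest *= math.cos(t / 2) ** 2
    return 0.5 * math.sin(thetas[0]) * rest


def local_cost_grad(thetas):
    """Derivative w.r.t. theta_0 of C_L = 1 - (1/n) sum_i cos^2(theta_i / 2)."""
    return 0.5 * math.sin(thetas[0]) / len(thetas)


def sample_variance(grad_fn, n_qubits, samples=20000, seed=0):
    """Monte Carlo estimate of Var[dC/dtheta_0] over uniform random parameters."""
    rng = random.Random(seed)
    grads = [
        grad_fn([rng.uniform(0.0, 2 * math.pi) for _ in range(n_qubits)])
        for _ in range(samples)
    ]
    mean = sum(grads) / samples
    return sum((g - mean) ** 2 for g in grads) / (samples - 1)


def exact_global_variance(n_qubits):
    # For theta ~ U[0, 2*pi): E[sin^2] = 1/2 and E[cos^4(theta/2)] = 3/8,
    # so the variance shrinks exponentially in n_qubits.
    return (1 / 8) * (3 / 8) ** (n_qubits - 1)


def exact_local_variance(n_qubits):
    # Shrinks only polynomially in n_qubits.
    return 1 / (8 * n_qubits ** 2)


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        print(
            f"n={n:2d}  global {sample_variance(global_cost_grad, n):.2e}"
            f" (exact {exact_global_variance(n):.2e})"
            f"  local {sample_variance(local_cost_grad, n):.2e}"
            f" (exact {exact_local_variance(n):.2e})"
        )
```

Here the number of qubits plays the role of the feature size. At larger n the Monte Carlo estimate of the global-cost variance itself becomes noisy, which is another face of the same problem: the signal is too small to resolve with a fixed sampling budget.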

To address this, the team developed a novel graphical approach for analyzing the scaling within a quantum neural network and used it to prove that quantum convolutional neural networks are trainable. This type of network is expected to find application in analyzing data from quantum simulations.

“The field of quantum machine learning is still young,” Coles said. “There’s a famous quote about lasers, when they were first discovered, that said they were a solution in search of a problem. Now lasers are used everywhere. Similarly, a number of us suspect that quantum data will become highly available, and then quantum machine learning will take off.”

A scalable quantum neural network could enable a quantum computer to sift through a vast data set about the various states of a given material. Those states could then be correlated with phases, which would help identify the optimal state for high-temperature superconductivity.