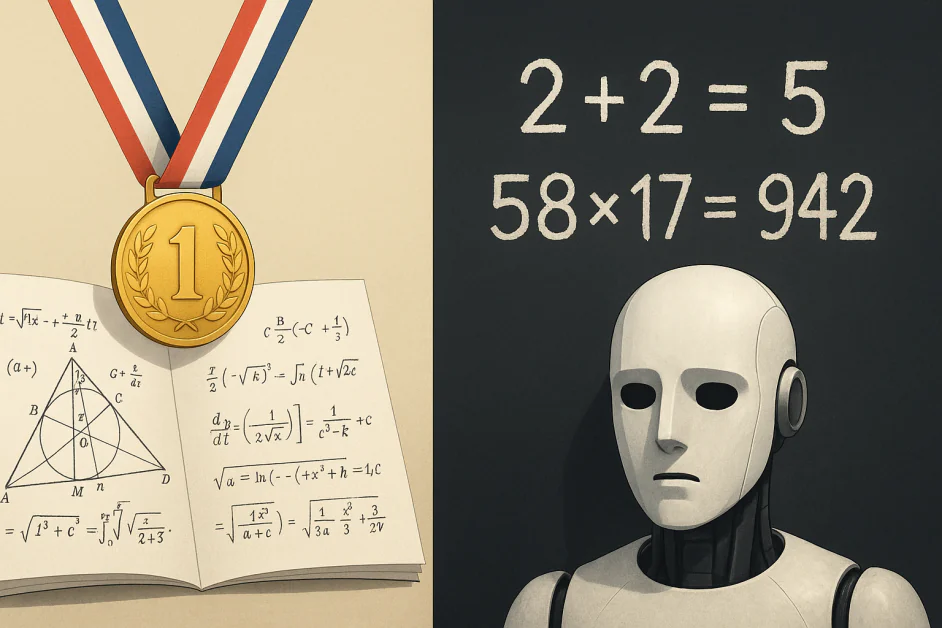

Jagged Intelligence: Why AIs Ace Olympiad Problems but Flounder on School Math

The artificial intelligence community celebrated a remarkable milestone in 2025 when systems from both Google DeepMind and OpenAI achieved gold medal performance at the International Mathematical Olympiad. These AI models solved problems that challenge even the world’s brightest young mathematicians. Yet the same kinds of systems often stumble when asked to perform basic arithmetic that any middle school student could handle with ease. This striking paradox reveals something fundamental about the nature of artificial intelligence today. We are witnessing the emergence of what has been called jagged intelligence, where machines display superhuman abilities in certain domains while failing at tasks we consider elementary.

The Olympiad Triumph

The International Mathematical Olympiad is the most prestigious pre-university mathematics competition. Each year, the brightest young mathematicians from around the world tackle six problems that require deep insight, creative thinking, and high-level proof techniques. In 2025, AI systems from both Google DeepMind and OpenAI scored 35 out of 42 points, enough to earn gold medals. A year earlier, DeepMind’s AlphaGeometry 2 had solved a complex geometry problem in just 19 seconds, while AlphaProof solved problems in number theory and algebra that stumped most human contestants.

These achievements build on years of incremental progress. Systems such as AlphaProof use formal mathematical languages like Lean to construct rigorous, machine-checkable proofs. They employ techniques like curriculum learning, where the AI trains on problems of increasing difficulty. This training enables the AI to model complex relationships between mathematical objects, recognize subtle patterns, and construct elegant proofs.
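To give a sense of what a machine-checkable proof looks like, here is a minimal Lean 4 sketch. It is far simpler than any Olympiad problem and is not taken from any of the systems mentioned above. The theorem name `sum_swap` is made up for illustration, and the proof relies only on the core library lemma `Nat.add_comm`.

```lean
-- Illustrative only: a statement and a proof that Lean can verify mechanically.
theorem sum_swap (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A concrete arithmetic fact, checked by evaluating both sides.
example : 2 + 2 = 4 := rfl
```

If either proof were wrong, Lean would reject it. That guarantee is what makes formal languages attractive for automated theorem proving.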

The Elementary Struggle

The same kinds of AI systems that earn gold on Olympiad problems often fail at tasks that seem trivial. For instance, if you ask them to multiply large numbers, they might confidently produce incorrect answers. Similarly, if you ask them to perform other basic arithmetic operations, their performance becomes unpredictable. The problem is not limited to simple calculation. These systems often struggle with word problems that require tracking multiple quantities, understanding real-world context, or applying basic mathematical operations in sequence.

This weakness stems from how these AI models work. Large language models predict what text should come next based on patterns they have seen in training data. When they encounter “2 + 2”, they recognize this pattern and correctly predict “4” not because they understand addition, but because this sequence appears countless times in their training data. When you present them with unusual calculations that rarely appear in text, their performance deteriorates rapidly. They are essentially pattern-matching machines that excel when patterns are clear and consistent but struggle when forced to compute the answer to an unseen problem.
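The difference between recalling a pattern and computing an answer can be made concrete with a deliberately crude Python sketch. It is a toy, not a description of how any real model works: real language models generalize far better than a lookup table. The training lines and the function names (`build_memory`, `recall`, `compute_sum`) are all invented for illustration.

```python
# Toy illustration only: a lookup table of memorized arithmetic strings
# versus a procedure that actually performs the addition.

TRAINING_TEXT = ["2 + 2 = 4", "3 + 5 = 8", "10 + 10 = 20"]

def build_memory(lines):
    """Map each seen prompt (the text left of '=') to the answer that followed it."""
    memory = {}
    for line in lines:
        prompt, answer = line.split("=")
        memory[prompt.strip()] = answer.strip()
    return memory

def recall(memory, prompt):
    """Return the memorized continuation, or None when the pattern was never seen."""
    return memory.get(prompt.strip())

def compute_sum(prompt):
    """Parse the two operands and add them, regardless of whether the sum was seen before."""
    left, right = prompt.split("+")
    return str(int(left) + int(right))

memory = build_memory(TRAINING_TEXT)
print(recall(memory, "2 + 2"))        # 4
print(recall(memory, "4817 + 9256"))  # None
print(compute_sum("4817 + 9256"))     # 14073
```

The lookup succeeds only on sums it has already seen, while the procedure works on any pair of integers. Actual models sit somewhere between these two extremes, which is part of why their arithmetic is unpredictable rather than uniformly wrong.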

The Architecture Paradox

The contradiction between Olympiad success and arithmetic failure reveals a deeper architectural issue. Modern AI systems excel at problems that can be solved through pattern recognition, logical deduction, and systematic search through solution spaces. Olympiad problems, despite their difficulty, often have elegant structures that AI can exploit. The systems can explore different proof strategies, verify logical steps, and build upon established mathematical frameworks. They operate in a world of symbols and rules where consistency and logic dominate.

Basic arithmetic, paradoxically, poses a different kind of challenge. It requires precise manipulation of quantities, not pattern matching. It demands an exact grasp of numerical magnitude and relationships; an approximate answer is simply a wrong one. When an AI system approaches arithmetic through language modeling, it treats numbers as tokens to be predicted rather than quantities to be computed. This fundamental mismatch between the task requirements and the model architecture creates the performance gap we observe.

Training Data and Its Limitations

AI capabilities largely depend on the quality and nature of training data. Mathematical proofs and advanced problems often appear in well-structured formats online. Academic papers, textbooks, and educational resources provide clear examples of mathematical reasoning. The internet contains extensive discussions of mathematical concepts, proof techniques, and problem-solving strategies. This rich corpus enables AI systems to learn advanced mathematical thinking.

Elementary mathematics, however, suffers from a different problem. While basic arithmetic appears frequently online, it rarely comes with the detailed reasoning chains that help AI understand underlying processes. Simple calculations are stated as facts rather than explained as procedures. The training data contains the results of computation but not the computational process itself. This creates a fundamental gap in understanding that manifests as poor performance on basic tasks.
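To illustrate the gap between a stated result and the procedure behind it, the following Python sketch writes out the partial products of a long multiplication, the way a student might on paper. It is only an illustration of what "showing the process" could look like. The function name `long_multiplication_steps` and the output format are invented here, and the sketch assumes non-negative integers.

```python
def long_multiplication_steps(a, b):
    """Return (steps, total): each partial product of a * b, then their sum.

    Assumes a and b are non-negative integers.
    """
    steps = []
    total = 0
    # Walk the digits of b from least significant to most significant.
    for place, digit_char in enumerate(reversed(str(b))):
        digit = int(digit_char)
        partial = a * digit * 10 ** place
        total += partial
        steps.append(f"{a} x {digit} x 10^{place} = {partial}")
    steps.append(f"sum of partial products = {total}")
    return steps, total

steps, total = long_multiplication_steps(347, 26)
for line in steps:
    print(line)
# 347 x 6 x 10^0 = 2082
# 347 x 2 x 10^1 = 6940
# sum of partial products = 9022
```

Text found online typically contains only the final line, "347 x 26 = 9022", without the intermediate steps that make the procedure learnable.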

Implications for AI Development

This uneven pattern of intelligence has crucial implications for the way we design and use AI systems. We cannot assume that success in complex tasks means competence in simpler ones. An AI capable of proving mathematical theorems might fail at balancing a checkbook. A system that writes computer code might struggle with basic counting. This reality demands careful consideration of AI capabilities and limitations in real world applications.

The phenomenon also reveals the importance of hybrid approaches. Instead of expecting a single model to handle every task, we might need specialized systems for different types of tasks. For example, combining symbolic computation for arithmetic with language models for reasoning could create more reliable solutions. The future of AI may lie in coordinating multiple specialized systems rather than pursuing monolithic general intelligence.

The Path Forward

Recognizing jagged intelligence provides a clearer direction for building more capable AI systems. Researchers are developing methods to integrate computational tools into language models, enabling them to delegate arithmetic to calculators. New training strategies focus on teaching models when to use external tools instead of trying to internalize every skill. This approach mirrors human intelligence, where we rely on calculators for computation and reserve our mental effort for higher-level reasoning.
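A minimal Python sketch of this delegation idea follows. It is a simplification under stated assumptions: in real tool-using systems the model itself decides when to call a tool, whereas here a simple router tries to parse the prompt as integer arithmetic and falls back to the model otherwise. `language_model_stub` is a hypothetical placeholder rather than a real API, and the calculator supports only `+`, `-`, `*`, and `//` on integers.

```python
import ast
import operator

# Operators the calculator tool is allowed to evaluate.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.FloorDiv: operator.floordiv,
}

def _evaluate(node):
    """Recursively evaluate a parsed expression of integers and allowed operators."""
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
        return _BINARY_OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_evaluate(node.operand)
    raise ValueError("not a plain integer expression")

def calculator_tool(expression):
    """Exact integer arithmetic; raises ValueError for any other input."""
    try:
        tree = ast.parse(expression, mode="eval")
    except SyntaxError as exc:
        raise ValueError("not a plain integer expression") from exc
    return _evaluate(tree.body)

def language_model_stub(prompt):
    """Hypothetical stand-in for a call to a language model."""
    return f"[model response to: {prompt}]"

def answer(prompt):
    """Route arithmetic to the calculator and everything else to the model."""
    try:
        return str(calculator_tool(prompt))
    except (ValueError, ZeroDivisionError):
        return language_model_stub(prompt)

print(answer("48271 * 90317"))         # computed exactly by the calculator
print(answer("Why is the sky blue?"))  # falls through to the model stub
```

Parsing with `ast` instead of calling `eval` keeps the calculator restricted to arithmetic, so the router cannot be tricked into executing arbitrary code.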

The paradox of jagged intelligence ultimately teaches us humility about artificial intelligence. These systems are neither universally superior nor uniformly limited. Instead, they display a complex mix of strengths and weaknesses that we must be aware of to effectively use and improve AI capabilities. Success requires not just expanding what AI can do but also addressing its fundamental gaps. The machines that can prove theorems but fail at basic addition show that intelligence, whether artificial or human, remains a multifaceted phenomenon that is not easy to define.

The Bottom Line

AI’s success at solving Olympiad problems but failure at simple math shows that intelligence does not develop evenly. These systems can be brilliant in one area and weak in another. Understanding this uneven pattern is important for how we design and use AI. Instead of expecting one model to do everything, we may need to combine different approaches that play to each system’s strengths. Real progress will come from building AI that works reliably in practice, not from assuming it will be good at every task.