Decoding Opportunities and Challenges for LLM Agents in Generative AI

Generative AI applications powered by large language models (LLMs) are progressing from prompts to retrieval-augmented generation (RAG) to agents. Agents are heavily discussed in industry and research circles, mainly for their potential to transform enterprise applications and deliver superior customer experiences. Common patterns for building agents are emerging, and they may mark first steps towards artificial general intelligence (AGI).

In my previous article, we saw a ladder of intelligence: a series of patterns for building LLM-powered applications. It starts with prompts that capture the problem domain and rely on the LLM’s internal memory to generate output. With RAG, we augment the prompt with external knowledge retrieved from a vector database to control the outputs. Next, by chaining LLM calls, we can build workflows that realize complex applications. Agents take this to the next level by automatically determining how these LLM chains should be formed. Let’s look at this in detail.

Agents – Under the hood

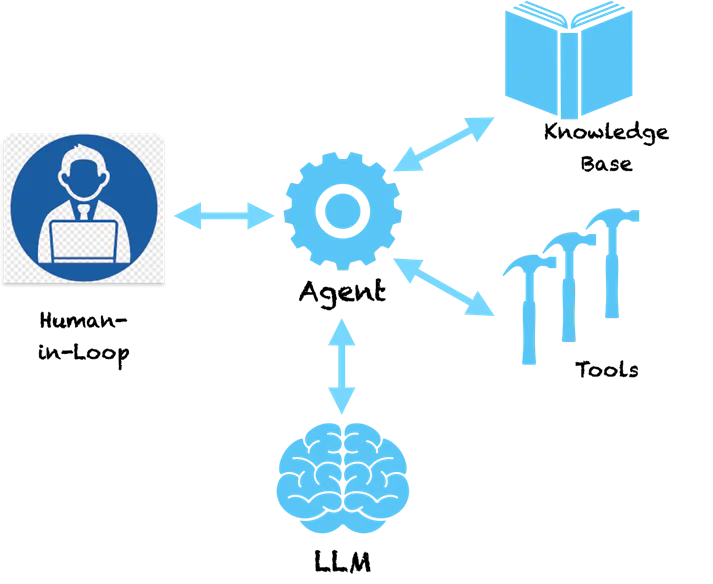

A key pattern with agents is that they use the language understanding power of the LLM to make a plan for solving a given problem. The LLM understands the problem and gives us a sequence of steps to solve it. However, it doesn’t stop there. Agents are not a pure support system that gives you recommendations and then passes the baton to you to carry out the recommended steps. Agents are equipped with tools to go ahead and take the action themselves. Scary, right!?

If we ask an agent a basic question like this:

Human: Which company did the inventor of the telephone start?

The following is a sample of the thinking steps an agent might take.

Agent (THINKING):

- Thought: I need to search for the inventor of the telephone.

- Action: Search [inventor of telephone]

- Observation: Alexander Graham Bell

- Thought: I need to search for a company that was founded by Alexander Graham Bell

- Action: Search [company founded by Alexander Graham Bell]

- Observation: Alexander Graham Bell co-founded the Bell Telephone Company in 1877, a forerunner of the American Telephone and Telegraph Company (AT&T)

- Thought: I have found the answer. I will return.

Agent (RESPONSE): Alexander Graham Bell co-founded the Bell Telephone Company, a forerunner of AT&T, in 1877

You can see that the agent methodically breaks the problem down into subproblems that can be solved by taking specific actions. The actions are proposed by the LLM, and we can map them to specific tools that implement them. For example, we could give the agent a search tool so that, whenever the LLM proposes Search as an action, the agent calls this tool with the parameters the LLM provided. The search here is on the internet, but it could just as well be redirected to an internal knowledge base such as a vector database. The system now becomes self-sufficient and can figure out how to solve complex problems by following a series of steps. Frameworks like LangChain and LlamaIndex give you an easy way to build these agents and connect them to tools and APIs. Amazon recently launched Agents for Amazon Bedrock, which provides a visual interface for designing agents.
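The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how LangChain or any other framework implements it: `scripted_llm` is a hypothetical stand-in that replays fixed steps instead of calling a real model, `CANNED_RESULTS` is made-up lookup data standing in for a web or vector search, and the `Final Answer:` marker is an assumed convention for signalling that the agent is done.

```python
import re
from typing import Callable, Dict

# Hypothetical canned data standing in for a real web or vector-database search.
CANNED_RESULTS: Dict[str, str] = {
    "inventor of telephone": "Alexander Graham Bell",
    "company founded by Alexander Graham Bell":
        "Bell Telephone Company (1877), a predecessor of AT&T",
}


def search(query: str) -> str:
    """Toy search tool: exact-match lookup in the canned data."""
    return CANNED_RESULTS.get(query, "no result")


# Tool registry: maps the action name the LLM emits to a Python callable.
TOOLS: Dict[str, Callable[[str], str]] = {"Search": search}

ACTION_RE = re.compile(r"^Action:\s*(\w+)\s*\[(.+)\]\s*$", re.MULTILINE)
FINAL_RE = re.compile(r"^Final Answer:\s*(.+)$", re.MULTILINE)


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM call: chooses the next step from the
    number of observations already present in the transcript."""
    seen = transcript.count("Observation:")
    if seen == 0:
        return ("Thought: I need to search for the inventor of the telephone.\n"
                "Action: Search [inventor of telephone]")
    if seen == 1:
        return ("Thought: I need to search for a company founded by "
                "Alexander Graham Bell.\n"
                "Action: Search [company founded by Alexander Graham Bell]")
    last_observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have found the answer.\nFinal Answer: {last_observation}"


def run_agent(question: str,
              llm: Callable[[str], str] = scripted_llm,
              max_steps: int = 5) -> str:
    """Thought-Action-Observation loop: ask the LLM for a step, run the
    requested tool, append the observation, and repeat until done."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = FINAL_RE.search(step)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(step)
        if action is None:
            raise ValueError(f"could not parse an action from: {step!r}")
        tool_name, tool_input = action.group(1), action.group(2).strip()
        tool = TOOLS.get(tool_name)
        observation = tool(tool_input) if tool else f"unknown tool {tool_name}"
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("Which company did the inventor of the telephone start?"))
```

Swapping `scripted_llm` for a function that calls an actual model, and `search` for a real search client, would leave the loop itself unchanged. The `max_steps` cap is a simple safeguard against an agent that never reaches an answer.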

Under the hood, agents send specially structured prompts to the LLM that make it generate an action plan. The Thought-Action-Observation pattern above is popular in a type of agent called ReAct (Reasoning and Acting). Other types of agents include MRKL and Plan & Execute, which mainly differ in their prompting style.
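To make the prompting style concrete, here is a rough sketch of what a ReAct-style prompt might look like. The wording of `REACT_TEMPLATE`, the `build_react_prompt` helper, and the `Final Answer:` marker are all illustrative assumptions; real frameworks ship their own templates, which differ in detail.

```python
from typing import Dict

# Illustrative template only: it tells the model which tools exist and
# which Thought/Action/Observation format to follow.
REACT_TEMPLATE = """Answer the question using the tools below.

Tools:
{tool_list}

Use this format:
Thought: reason about what to do next
Action: ToolName [input]
Observation: result of the action
(Thought/Action/Observation may repeat)
Thought: I have found the answer.
Final Answer: the answer to the question

Question: {question}
{scratchpad}Thought:"""


def build_react_prompt(question: str,
                       tools: Dict[str, str],
                       scratchpad: str = "") -> str:
    """Fill the template with tool descriptions, the user question, and
    the steps taken so far (the scratchpad)."""
    tool_list = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    return REACT_TEMPLATE.format(
        tool_list=tool_list, question=question, scratchpad=scratchpad
    )


if __name__ == "__main__":
    prompt = build_react_prompt(
        "Which company did the inventor of the telephone start?",
        {"Search": "look up a fact on the internet"},
    )
    print(prompt)
```

The prompt ends with `Thought:` so that the model continues from there. On each turn the agent appends the latest Thought, Action, and Observation to the scratchpad and sends the prompt again.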

For more complex agents, the actions may be tied to tools that cause changes in source systems. For example, we could connect the agent to a tool that checks an employee’s vacation balance and applies for leave in an ERP system. Now we could build a chatbot that interacts with users and applies for leave in the system through a chat command. No more complex screens for applying for leave; just a simple, unified chat interface. Sounds exciting!?
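As a rough illustration of such state-changing tools, the sketch below wraps a leave system as two tools an agent could call. Everything here is hypothetical: `FakeERP` is an in-memory stand-in rather than a real ERP API, and the tool names `CheckBalance` and `ApplyLeave` and the comma-separated input format are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class FakeERP:
    """In-memory stand-in for an ERP leave module (not a real API)."""
    balances: Dict[str, int]
    requests: List[Tuple[str, int]] = field(default_factory=list)

    def vacation_balance(self, employee_id: str) -> int:
        return self.balances[employee_id]

    def apply_leave(self, employee_id: str, days: int) -> str:
        # Validate before changing any state in the source system.
        if days <= 0:
            return "rejected: days must be positive"
        available = self.balances[employee_id]
        if days > available:
            return f"rejected: only {available} days available"
        self.balances[employee_id] = available - days
        self.requests.append((employee_id, days))
        return f"approved: {days} days booked, {available - days} remaining"


def make_leave_tools(erp: FakeERP) -> Dict[str, Callable[[str], str]]:
    """Expose the ERP operations as string-in, string-out tools, the
    shape an agent expects when it turns an LLM action into a call."""

    def check_balance(employee_id: str) -> str:
        return f"{erp.vacation_balance(employee_id.strip())} days available"

    def apply_for_leave(args: str) -> str:
        # Assumed input format from the LLM: "<employee_id>, <days>"
        employee_id, days = (part.strip() for part in args.split(","))
        return erp.apply_leave(employee_id, int(days))

    return {"CheckBalance": check_balance, "ApplyLeave": apply_for_leave}


if __name__ == "__main__":
    tools = make_leave_tools(FakeERP(balances={"E42": 10}))
    print(tools["CheckBalance"]("E42"))
    print(tools["ApplyLeave"]("E42, 3"))
```

Note that the validation lives in the tool, not in the LLM. The model only proposes the action; the tool decides whether the request is acceptable.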

Caveats and need for Responsible AI

Now, what if we have a tool that executes stock trades using a pre-authorized API? You build an application where the agent studies stock movements (using tools) and decides for you when to buy and sell. What if the agent sells the wrong stock because it hallucinated and made a wrong decision? Because LLMs are huge models, it is difficult to pinpoint why they make particular decisions, and hallucinations are common in the absence of proper guardrails.

While agents are fascinating, you have probably guessed how dangerous they can be. If they hallucinate and take a wrong action, it could cause huge financial losses or major issues in enterprise systems. Hence Responsible AI is becoming critically important in the age of LLM-powered applications. The principles of Responsible AI around reproducibility, transparency, and accountability aim to put guardrails on the decisions agents take and call for risk analysis to decide which actions need a human in the loop. As more complex agents are designed, they need more scrutiny, transparency, and accountability so that we know what they are doing.
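One simple way to picture a human-in-the-loop guardrail is a gate in front of every tool call. The sketch below is an assumption-laden illustration rather than a standard Responsible AI API: the `Tool` class, the `needs_approval` flag, the `approve` callback, and the audit log format are all hypothetical. Which tools get flagged would come out of the risk analysis mentioned above.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    name: str
    run: Callable[[str], str]
    needs_approval: bool  # set from a risk analysis of what the tool can do


def guarded_call(tool: Tool,
                 tool_input: str,
                 approve: Callable[[str, str], bool],
                 audit_log: List[str]) -> str:
    """Run a tool on the agent's behalf. High-risk tools run only if the
    human approver says yes, and every decision is logged for accountability."""
    if tool.needs_approval and not approve(tool.name, tool_input):
        audit_log.append(f"BLOCKED {tool.name}[{tool_input}]")
        return "Action blocked: human approval was not granted."
    result = tool.run(tool_input)
    audit_log.append(f"RAN {tool.name}[{tool_input}] -> {result}")
    return result


if __name__ == "__main__":
    log: List[str] = []
    # Read-only tool: low risk, runs without asking.
    quote = Tool("GetQuote", lambda symbol: f"{symbol}: 101.5", needs_approval=False)
    # State-changing tool: high risk, requires a human decision.
    sell = Tool("SellStock", lambda order: f"sold {order}", needs_approval=True)

    def ask_human(name: str, tool_input: str) -> bool:
        return input(f"Allow {name}[{tool_input}]? (y/n) ").strip().lower() == "y"

    print(guarded_call(quote, "ACME", ask_human, log))
    print(guarded_call(sell, "ACME x10", ask_human, log))
    print(log)
```

The blocked message is returned to the agent as an ordinary observation, so the LLM can see that the action did not happen, while the audit log gives reviewers a trace of what the agent tried to do.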

Closing thoughts

The ability of agents to generate a path of logical steps with actions brings them close to human reasoning. Equipping them with more powerful tools can give them superpowers. Patterns like ReAct try to emulate how humans solve problems, and we will see better agent patterns tailored to specific contexts and domains (banking, insurance, healthcare, industrial, etc.). The future is here, and the technology behind agents is ready for us to use. At the same time, we need to pay close attention to Responsible AI guardrails to make sure we are not building Skynet!