Artificial Intelligence

An Introduction To Vertex AI

Given the rapidly evolving landscape of Artificial Intelligence, one of the biggest hurdles tech leaders face is moving AI from “experimental” to “enterprise-ready”. Consumer chatbots and interactive playgrounds have captured the public imagination, but businesses cannot succeed with a chat interface alone. In an era where competition is more aggressive than ever, businesses need a robust, scalable, and secure ecosystem, and this is what Google aims to offer with Vertex AI, Google Cloud’s unified Artificial Intelligence & Machine Learning platform.

Vertex AI aims to establish itself as the backbone for integrating Generative AI with modern cloud infrastructure, offering a comprehensive suite of features that bridges the gap between raw foundation models and production-grade applications. It is not merely a wrapper for large language models (LLMs); rather, it is a unified Machine Learning and Artificial Intelligence (ML/AI) ecosystem that treats Generative AI as a first-class citizen.

At the heart of Vertex AI sits Model Garden, a central marketplace that provides access to over 200 curated foundation models, including the multimodal powerhouse Gemini 2.5 Pro, with its context window of up to 1 million tokens. In this article, we will dissect the architecture of Vertex AI, explore how Model Garden serves as the industry’s “App Store” for intelligence, and look at the technical pillars that make this platform the backbone of the next generation of enterprise software.

The Core Architecture: A Unified Platform

Vertex AI is not a loosely coupled collection of tools but a unified data and AI ecosystem designed to overcome the fragmentation of data, tools, and teams that still plagues machine learning. Traditionally, AI development takes place in isolated environments, and data is often scattered across multiple repositories. For example, an organization might store customer data in a SQL warehouse while unstructured documents are dumped into a data lake. When data is siloed, the AI sees only a “partial truth,” leading to biased results or high hallucination rates because it lacks the full context of the enterprise.

Vertex AI integrates the entire lifecycle, from raw data ingestion in BigQuery and Cloud Storage to production monitoring, acting as the “connective tissue” between these silos. Because it connects natively to Cloud Storage and BigQuery, models can retrieve data without complex Extract, Transform, Load (ETL) pipelines.

The Foundation: Google’s AI Hypercomputer

The GenAI layer of Vertex AI sits on top of Google’s AI Hypercomputer architecture, an integrated supercomputing system that consists of:

TPU v5p & v5e (Tensor Processing Units)

Google’s Tensor Processing Units are custom-built ASICs (Application-Specific Integrated Circuits) designed specifically for the matrix multiplication that defines deep learning.

- TPU v5p (Performance): This is the flagship accelerator for massive-scale training. A single TPU v5p pod scales to 8,960 chips connected by Google’s highest-bandwidth Inter-Chip Interconnect (ICI), at 4,800 Gbps per chip. For a tech lead, this means up to 2.8x faster training of a GPT-3-sized model (175B parameters) compared to the previous generation, TPU v4, drastically reducing time-to-market.

- TPU v5e (Efficiency): Designed for cost-optimized performance, the v5e is the workhorse for medium-scale training and high-throughput inference. It offers up to 2.5x better inference price-performance than TPU v4, making it a strong choice for businesses that need to run inference 24/7 without a massive budget.
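To make these multipliers concrete, here is a back-of-the-envelope sketch. It assumes the quoted “up to” factors (2.8x training speed for v5p, 2.5x inference price-performance for v5e, both relative to TPU v4) apply uniformly to the whole workload, which real jobs rarely achieve; the function names and baseline figures are hypothetical.

```python
def training_days_after_speedup(baseline_days: float, speedup: float) -> float:
    """Wall-clock days for the same training job if throughput improves by `speedup`x."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days / speedup


def cost_after_price_performance_gain(baseline_cost: float, gain: float) -> float:
    """Cost of serving the same traffic if performance-per-dollar improves by `gain`x."""
    if gain <= 0:
        raise ValueError("gain must be positive")
    return baseline_cost / gain


if __name__ == "__main__":
    # Hypothetical baselines: a 28-day training run and a $100,000/month inference bill.
    print(f"training: {training_days_after_speedup(28, 2.8):.1f} days")
    print(f"inference: ${cost_after_price_performance_gain(100_000, 2.5):,.0f} per month")
```

Under these idealized assumptions, a 28-day run shrinks to about 10 days and a $100,000 monthly inference bill to about $40,000; treat such figures as upper bounds and benchmark your own workload.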

NVIDIA H100/A100 GPUs for Flexibility

While TPUs are specialized, many development teams rely on the NVIDIA CUDA ecosystem. Vertex AI provides first-class support for NVIDIA’s latest hardware:

- NVIDIA H100 (Hopper): Ideal for fine-tuning the largest open-source models (like Llama 3.1 405B) that require massive memory bandwidth.

- Jupiter Networking: To prevent network bottlenecks, Google uses its Jupiter data center network fabric. It moves data between GPUs with high bandwidth and low latency, and supports RDMA (Remote Direct Memory Access) to bypass CPU overhead and deliver near-local performance across distributed nodes.

Dynamic Orchestration

The most critical technical shift in Vertex AI is Dynamic Orchestration. In a legacy environment, if a GPU node fails during a 3-week training run, the entire job might crash.

- Automated Resiliency: Vertex AI, often running on Google Kubernetes Engine (GKE) under the hood, features “self-healing” nodes: if a hardware fault is detected, the platform automatically moves the workload to a healthy node.

- Dynamic Workload Scheduler: This tool lets teams request capacity according to urgency. You can opt for Flex Start mode (cheaper, starts when capacity becomes available) or Calendar mode, which reserves capacity for a fixed start time and duration, for mission-critical releases.

- Serverless Training: For teams that want zero infrastructure management, Vertex AI Serverless Training allows you to submit your code and data; the platform provides the cluster, runs the job, and tears it down—charging you only for the compute seconds used.
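Automatic rescheduling only preserves progress if the job itself can resume. The sketch below shows the checkpoint-and-resume pattern that turns a node failure into a short restart instead of a lost multi-week run. It is a toy: a JSON file stands in for real model and optimizer state, a retry loop plays the platform’s role, all names are hypothetical, and it does not reflect how Vertex AI or GKE implement recovery internally.

```python
import json
import os
import tempfile
from typing import Optional


class SimulatedHardwareFault(RuntimeError):
    """Stands in for a GPU/TPU failure that kills the training process."""


def train(total_steps: int, ckpt_path: str, fail_at_step: Optional[int] = None) -> dict:
    """Toy training loop that resumes from the latest checkpoint if one exists."""
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # resume instead of starting over
    while state["step"] < total_steps:
        if state["step"] == fail_at_step:
            raise SimulatedHardwareFault(f"node failed at step {state['step']}")
        state["step"] += 1
        state["loss"] *= 0.9  # stand-in for one optimizer update
        # Write to a temp file, then rename, so a crash mid-write cannot corrupt the checkpoint.
        tmp_path = ckpt_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, ckpt_path)
    return state


def run_with_restarts(total_steps: int, ckpt_path: str, fail_at_step: int, max_restarts: int = 3):
    """Plays the scheduler's role: on a fault, relaunch the job on a 'healthy node'."""
    restarts = 0
    fault: Optional[int] = fail_at_step
    while True:
        try:
            return train(total_steps, ckpt_path, fault), restarts
        except SimulatedHardwareFault:
            restarts += 1
            if restarts > max_restarts:
                raise
            fault = None  # the replacement node does not fail


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        state, restarts = run_with_restarts(10, os.path.join(tmp, "ckpt.json"), fail_at_step=6)
        print(state["step"], restarts)
```

Real jobs checkpoint every N steps rather than every step, trading checkpoint overhead against the amount of work repeated after a failure.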

The Three Entry Points: Discovery, Experimentation, and Automation

To accommodate different technical personas—from data scientists to application developers—Vertex AI provides three primary entry points:

- Model Garden: The Marketplace for Discovery.

- Vertex AI Studio: The Playground for Experimentation.

- Vertex AI Agent Builder: The Factory for Automation.

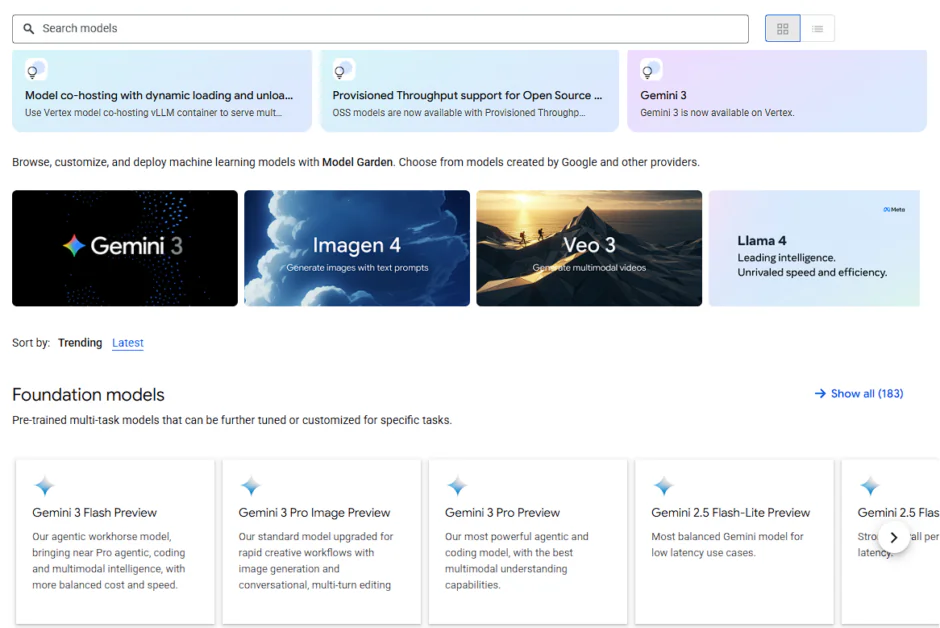

Model Garden: The Marketplace for Discovery

Vertex AI Model Garden is a centralized hub within Google Cloud for discovering, testing, customizing, and deploying a wide range of first-party, open-source, and third-party AI models, including multimodal ones (vision, text, code). It integrates with Vertex AI’s tools for streamlined MLOps and acts as a comprehensive library that helps developers and businesses select the right model for the task, from large foundation models to specialized ones, whether for text generation, image analysis, or code completion, and then deploy it efficiently within their Google Cloud environment.

Model Garden categorizes its 200+ models into three distinct tiers, allowing architects to balance performance, cost, and control:

- First-Party (Google) Models: These are Google’s flagship multimodal Gemini models, offered in several sizes, from Pro for complex reasoning to Flash for low-latency, high-volume workloads, so developers can match the model to their use case.

- Third-Party (Proprietary) Models: Through strategic partnerships, Vertex AI offers “Model-as-a-Service” (MaaS) access to titans like Anthropic (Claude 3.5) and Mistral AI. Instead of managing separate billing and security credentials for five different AI providers, a tech team can access all of them through its existing Google Cloud project, with one set of IAM credentials, one bill, and a consistent endpoint scheme.

- Open-Source & Open-Weight Models: This tier includes Meta’s Llama 3.2, Mistral, and Google’s own Gemma. These are ideal for organizations that want to self-deploy models within their own VPC (Virtual Private Cloud) to ensure maximum data isolation.
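One way to see what “unified” means in practice: Google and partner models on Vertex AI are addressed through the same regional REST URL scheme under your own project. The hypothetical helper below assembles that URL; the project ID, regions, and model IDs in the example are illustrative, so check Model Garden for current values.

```python
def publisher_model_url(project: str, location: str, publisher: str, model: str, method: str) -> str:
    """Regional Vertex AI REST URL for a model served by Google or a partner publisher."""
    return (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/"
        f"publishers/{publisher}/models/{model}:{method}"
    )


if __name__ == "__main__":
    # Illustrative project, regions, and model IDs; verify against Model Garden.
    print(publisher_model_url("my-project", "us-central1", "google", "gemini-2.5-pro", "generateContent"))
    print(publisher_model_url("my-project", "us-east5", "anthropic", "claude-3-5-sonnet-v2@20241022", "rawPredict"))
```

What is shared is the URL scheme, authentication with the project’s IAM credentials, and billing. The JSON request bodies still follow each publisher’s own format: Gemini uses `contents` and `parts`, while Claude models take Anthropic’s Messages format with an `anthropic_version` field.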

In a non-unified environment, deploying an open-source model like Llama requires setting up a PyTorch environment, configuring CUDA drivers, and managing a Flask or FastAPI wrapper.

Model Garden eliminates this setup overhead through Unified Managed Endpoints:

- One-Click Deployment: For many models, clicking “Deploy” automatically provisions the necessary TPU/GPU resources, wraps the model in a production-ready container, and provides a REST API endpoint.

- Hugging Face Integration: Vertex AI lets developers deploy models directly from the Hugging Face Hub to a Vertex endpoint, vastly expanding the catalog of deployable models.

- Private Service Connect (PSC): For highly regulated industries, models can be deployed using Private Service Connect, ensuring the model endpoint is never exposed to the public internet—keeping the data traffic strictly within the corporate network.
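After a one-click deployment, the endpoint is called with an ordinary authenticated REST request. This hypothetical helper builds the URL and JSON body for the `:predict` method of a deployed endpoint. The shape of each instance depends on the serving container, so the `prompt` and `max_tokens` fields in the example are assumptions (typical of LLM serving containers such as vLLM), and endpoints deployed with Private Service Connect are reached through a private address rather than this public URL.

```python
import json
from typing import Optional


def endpoint_predict_request(project: str, location: str, endpoint_id: str,
                             instances: list, parameters: Optional[dict] = None) -> tuple:
    """Build the URL and JSON body for an online prediction call to a deployed endpoint."""
    url = (
        f"https://{location}-aiplatform.googleapis.com/v1/"
        f"projects/{project}/locations/{location}/endpoints/{endpoint_id}:predict"
    )
    body: dict = {"instances": instances}
    if parameters is not None:
        body["parameters"] = parameters  # optional, container-specific settings
    return url, json.dumps(body)


if __name__ == "__main__":
    # Hypothetical project and endpoint ID; instance fields depend on the serving container.
    url, body = endpoint_predict_request(
        "my-project", "us-central1", "1234567890",
        [{"prompt": "Summarize our refund policy.", "max_tokens": 256}],
    )
    print(url)
    print(body)
```

Send the body as an HTTP POST with an `Authorization: Bearer` header, for example using a token from `gcloud auth print-access-token`.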

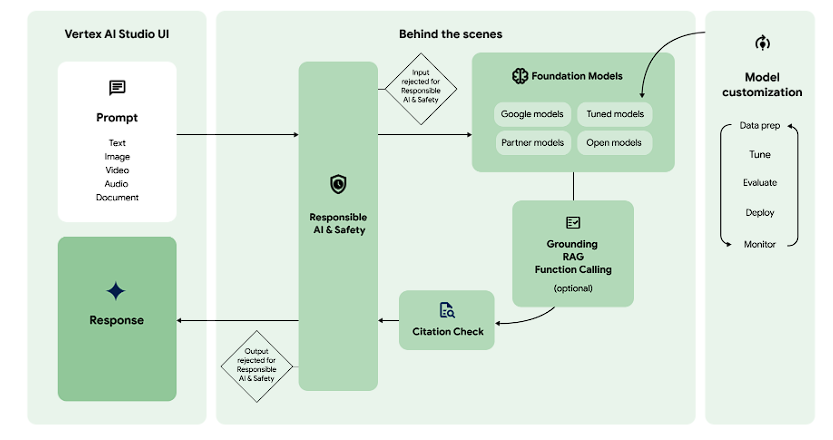

Vertex AI Studio: The Playground for Experimentation

While Model Garden is about selection, Vertex AI Studio is about precision. Vertex AI Studio is comparable to the compilers and debuggers of traditional software development: it is the workspace where raw models are sculpted into specific business tools through a combination of prompt engineering, multimodal testing, and advanced tuning.

Multimodal Prototyping: Beyond Text

One of the Studio’s standout features is native support for multimodality. Where other platforms often require custom code to handle non-text data, Vertex AI Studio lets you drop files directly into the interface to test Gemini 2.5’s reasoning capabilities.

- Video Intelligence: You can upload a 45-minute technical keynote and ask the model to “identify every time a specific API is mentioned and provide a timestamped summary.”

- Document Analysis: Instead of just reading text, the model can analyze the visual layout of a 1,000-page PDF, understanding the relationship between charts, tables, and the surrounding prose.

- Code Execution: Studio supports code execution in the playground. If you ask a model to solve a complex math problem or analyze a CSV, the model can write and run Python code in a secure sandbox and base its answer on the computed result.
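The Studio experiments described here can also be reproduced programmatically. Below is a sketch of a request body for Gemini’s `generateContent` REST method that pairs a file in Cloud Storage with a question and, optionally, enables the code execution tool. The field names (`contents`, `parts`, `fileData`, `codeExecution`) follow the Vertex AI REST reference as we understand it; the bucket, object, and helper name are hypothetical.

```python
import json


def multimodal_request(file_uri: str, mime_type: str, question: str,
                       code_execution: bool = False) -> dict:
    """Body for a Gemini generateContent call that pairs a Cloud Storage file with a question."""
    body = {
        "contents": [{
            "role": "user",
            "parts": [
                {"fileData": {"mimeType": mime_type, "fileUri": file_uri}},
                {"text": question},
            ],
        }]
    }
    if code_execution:
        # Lets the model write and run Python in Google's sandbox before answering.
        body["tools"] = [{"codeExecution": {}}]
    return body


if __name__ == "__main__":
    req = multimodal_request(
        "gs://my-bucket/keynote.mp4",  # hypothetical bucket and object
        "video/mp4",
        "Identify every time a specific API is mentioned and provide a timestamped summary.",
    )
    print(json.dumps(req, indent=2))
```

The resulting JSON is POSTed to the model’s `:generateContent` method with the same bearer-token authentication used for other Vertex AI calls.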

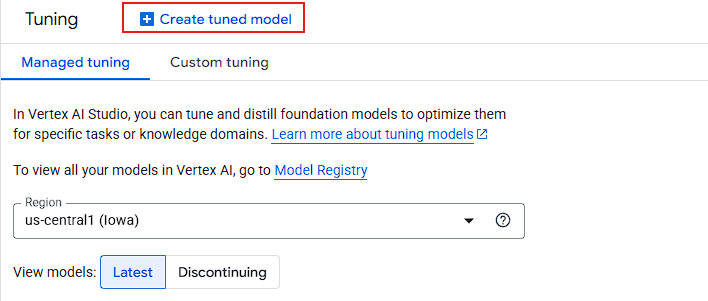

Advanced Customization: The Tuning Pathway

When prompt engineering (Zero-shot or Few-shot) hits a ceiling, Vertex AI Studio provides the heavy machinery: Model Tuning.

- Supervised Fine-Tuning (SFT): Developers provide a dataset of “Prompt/Response” pairs (ideally 100+ examples). This teaches the model to adopt a specific brand voice, output format (like specialized JSON), or domain-specific jargon.

- Context Caching: For enterprises working with large, static datasets (like a legal library or a codebase), Vertex AI supports Context Caching. You “pre-load” up to a million tokens of data into a cache once, and subsequent queries reuse it, significantly reducing latency and cost.

- Distillation (Teacher-Student): This is a higher-level architectural move. You use a large model (Gemini 2.5 Pro) to “teach” a smaller, faster model (Gemini 2.0 Flash). The result is a lightweight model that approaches “Pro”-level quality on the target task while running at “Flash” speed and cost.
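To make “teaching” concrete, here is the classic soft-label distillation loss (Hinton et al., 2015) in plain Python: the student is penalized by the KL divergence between the teacher’s and its own temperature-softened output distributions. This is a sketch of the general technique with hypothetical function names; Vertex AI’s managed distillation may use a different recipe, such as fine-tuning the student on teacher-generated responses.

```python
import math


def softmax(logits: list, temperature: float = 1.0) -> list:
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list, student_logits: list, temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2 as in Hinton et al."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft targets"
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [3.0, 1.0, 0.0]
    print(distillation_loss(teacher, [2.5, 1.0, 0.0]))  # student close to teacher: small loss
    print(distillation_loss(teacher, [0.0, 1.0, 3.0]))  # student disagrees: large loss
```

The softened targets carry the teacher’s relative confidence across all outputs, not just its top answer, which is what lets a small student absorb more than hard labels alone would give it.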

Vertex AI Agent Builder: The Factory for Automation

Vertex AI Agent Builder is a high-level orchestration framework that lets developers build AI agents (applications that can reason, plan, and act on a user’s behalf) by combining foundation models with enterprise data and external APIs.

The Architecture of “Truth”: Grounding & RAG

The primary technical barrier to enterprise AI is hallucination. Agent Builder mitigates it with a grounding engine that ties the agent’s answers to verifiable sources.

- Grounding with Google Search: For queries requiring real-time world knowledge (e.g., “What are the current mortgage rates in New York?”), the agent can perform a Google Search, extract the facts, and cite its sources.

- Vertex AI Search (RAG-as-a-Service): Instead of manually building a vector database (Pinecone, Weaviate), developers can use Vertex AI Search to index their own documents (PDFs, HTML, BigQuery tables). It handles the “chunking,” “embedding,” and “retrieval” steps automatically, so the agent answers from your internal “Source of Truth.”

- Vertex AI RAG Engine: For high-scale, custom implementations, this managed service allows for hybrid search (combining vector-based and keyword-based results) to improve accuracy by up to 30% over standard LLM outputs.
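Hybrid search has to merge two ranked lists, one from vector search and one from keyword search, into a single ranking. A common, simple technique for this is Reciprocal Rank Fusion (RRF), sketched below in plain Python with hypothetical document IDs. It is shown for illustration only; RAG Engine may weight dense and sparse results differently, so consult its documentation for the settings it actually exposes.

```python
def reciprocal_rank_fusion(rankings: list, k: int = 60) -> list:
    """Merge several ranked lists of document IDs with RRF.

    Each document scores sum(1 / (k + rank)) over the lists it appears in;
    k = 60 is the constant commonly used in the literature.
    """
    scores: dict = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties broken by document ID for a stable order.
    return sorted(scores, key=lambda d: (-scores[d], d))


if __name__ == "__main__":
    vector_results = ["doc_a", "doc_b", "doc_c"]   # semantic (embedding) matches
    keyword_results = ["doc_c", "doc_a", "doc_d"]  # exact-term matches
    print(reciprocal_rank_fusion([vector_results, keyword_results]))
```

Here `doc_a` ends up first because both retrievers rank it near the top, while `doc_b` and `doc_d`, each found by only one retriever, fall to the bottom.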

Multi-Agent Orchestration (A2A Protocol)

Advanced enterprise workflows often require multiple specialized agents working together. For this, Vertex AI supports the Agent2Agent (A2A) protocol, an open standard introduced by Google that enables:

- Agent Collaboration: A “Travel Agent” can ask a “Finance Agent” to confirm that a flight booking is within the corporate budget.

- Interoperability: Because it uses an open protocol, agents built on Vertex can communicate with those built on other frameworks like LangChain or CrewAI.
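A2A messages travel as JSON-RPC 2.0 requests over HTTP. The sketch below builds the request a “Travel Agent” might send to a “Finance Agent”. The `message/send` method and the message fields reflect our reading of recent versions of the A2A specification and have changed between versions, so treat them as assumptions and verify against the current spec; the helper name is hypothetical.

```python
import json
import uuid
from typing import Optional


def a2a_send_message(text: str, request_id: int = 1, message_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 request asking a remote agent to handle a text message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "message/send",  # assumed method name; check the current A2A spec
        "params": {
            "message": {
                "role": "user",
                "parts": [{"kind": "text", "text": text}],
                "messageId": message_id or str(uuid.uuid4()),
            }
        },
    }


if __name__ == "__main__":
    req = a2a_send_message("Is a $640 flight to Chicago within the Q3 travel budget for CC-123?")
    print(json.dumps(req, indent=2))  # POST this to the Finance Agent's A2A endpoint
```

Because the envelope is plain JSON-RPC, any framework that speaks the protocol can receive it, which is what makes cross-framework collaboration possible.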

The Developer Stack: ADK and Agent Engine

For technical teams, Agent Builder offers two distinct paths:

- No-Code Console: A visual drag-and-drop interface for rapid prototyping and business-user configuration.

- Agent Development Kit (ADK): A code-first Python toolkit for engineers. It allows for “Prompt-as-Code,” version control integration, and the ability to deploy to the Vertex AI Agent Engine—a managed runtime that handles session persistence, scaling, and state management automatically.
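In ADK, an agent’s tools can be ordinary Python functions, with the function’s name, type hints, and docstring serving as the tool description the model sees. The sketch below defines a hypothetical budget-checking tool backed by an in-memory table and shows, in comments, how it might be attached to an ADK agent. The registration follows the pattern in ADK’s quickstart, but confirm class and parameter names against the current documentation.

```python
# Hypothetical in-memory budget table standing in for a real finance system.
_REMAINING_BUDGET_USD = {"CC-123": 1500.0, "CC-456": 200.0}


def check_travel_budget(cost_center: str, amount_usd: float) -> dict:
    """Checks whether a proposed travel expense fits a cost center's remaining budget.

    Args:
        cost_center: Internal cost-center identifier, for example "CC-123".
        amount_usd: Proposed expense in US dollars.

    Returns:
        A dict with a "status" key ("approved", "rejected", or "error") and details.
    """
    remaining = _REMAINING_BUDGET_USD.get(cost_center)
    if remaining is None:
        return {"status": "error", "message": f"Unknown cost center: {cost_center}"}
    if amount_usd <= remaining:
        return {"status": "approved", "remaining_usd": remaining - amount_usd}
    return {"status": "rejected", "remaining_usd": remaining}


# Registering the tool with ADK might look like this (requires `pip install google-adk`;
# verify names against the current ADK documentation):
#
# from google.adk.agents import Agent
#
# finance_agent = Agent(
#     name="finance_agent",
#     model="gemini-2.0-flash",
#     instruction="Answer budget questions by calling check_travel_budget.",
#     tools=[check_travel_budget],
# )
```

Keeping the tool, its docstring, and the agent’s instruction in the same repository is what “Prompt-as-Code” buys you: every change to agent behavior goes through version control and code review.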

Conclusion: From “What if” to “What’s Next”

The transition from a flashy AI demo to a production-grade enterprise application has long been the “valley of death” for digital transformation projects. As we have explored, Vertex AI is designed specifically to bridge this gap. By unifying the fragmented silos of data, infrastructure, and model orchestration, Google Cloud has moved the conversation away from the raw power of Large Language Models and toward the operational maturity of the AI lifecycle.