
AI Models Stumble on Basic Clock Reading While Humans Excel

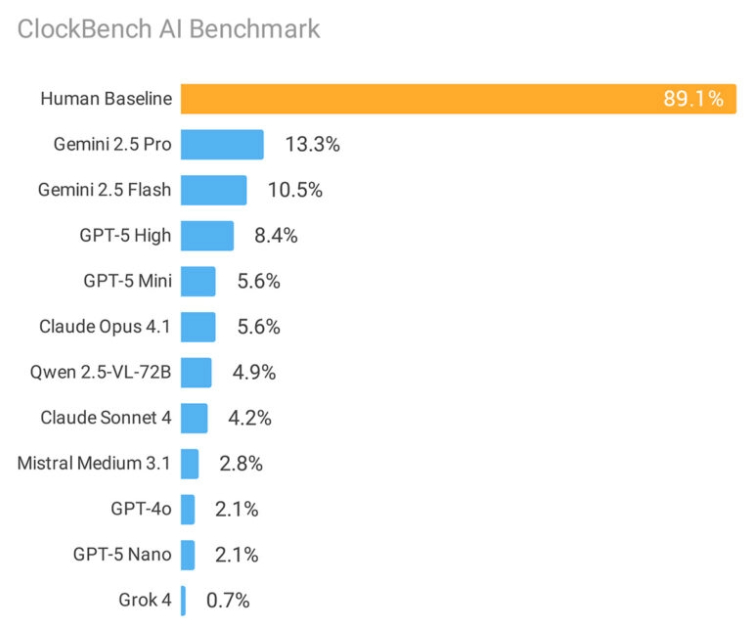

A study pitting 11 leading AI models against humans at reading analog clocks has exposed a surprising weakness in current artificial intelligence systems. While humans achieved 89.1% accuracy in telling time, the best-performing model, Google’s Gemini 2.5 Pro, managed just 13.3% on the same test.

The ClockBench study, conducted by researcher Alek Safar, demonstrates that even the most advanced AI systems struggle with visual tasks that most people master as children. The benchmark tested systems from Google, OpenAI, Anthropic, and other major AI labs using 180 custom-designed analog clocks.

This goes beyond clocks. The results highlight fundamental limitations in how AI systems process and reason about visual information. “Reading analog clocks sets a high bar for doing reasoning within the visual space,” Safar notes in the research paper. The task requires models to identify clock hands, understand their relationships, and translate visual positioning into numerical time.
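To make the last of those three steps concrete, here is a minimal sketch of how hand positions translate into a time once the hands have already been located. It is an illustration only, not code from the ClockBench paper: the function name `hands_to_time` and the convention of measuring angles in degrees clockwise from the 12 o'clock position are assumptions.

```python
def hands_to_time(hour_angle: float, minute_angle: float) -> tuple[int, int]:
    """Convert hand angles (degrees clockwise from 12) to (hour, minute).

    Hypothetical helper for illustration; assumes the hands were already
    identified and measured correctly, which is the hard part for models.
    """
    # The minute hand sweeps 360 degrees in 60 minutes: 6 degrees per minute.
    minute = round(minute_angle / 6) % 60

    # The hour hand sweeps 30 degrees per hour plus 0.5 degrees per minute.
    # Subtracting the minute contribution avoids off-by-one hours when the
    # hour hand sits close to the next numeral.
    hour = round((hour_angle - 0.5 * minute) / 30) % 12

    # A 12-hour dial shows 12 rather than 0.
    return (12 if hour == 0 else hour, minute)


print(hands_to_time(90.0, 0.0))     # hour hand on 3, minute hand on 12
print(hands_to_time(305.0, 60.0))   # hour hand just past 10, minute hand on 2
```

The arithmetic is short, which fits the article's point that the difficulty lies in the visual steps that come before it.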

The performance gap becomes even more striking when examining error patterns. When humans made mistakes, the median error was just three minutes. AI models, by contrast, missed the mark by one to three hours – roughly equivalent to random guessing on a 12-hour clock.
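The comparison to random guessing can be checked with a short simulation. The sketch below assumes error is measured as the shortest distance around a 12-hour dial, in minutes; that metric, the function names, and the trial count are illustrative assumptions and may not match how the study scored answers.

```python
import random
import statistics

MINUTES_ON_DIAL = 12 * 60  # a 12-hour face wraps around every 720 minutes


def circular_error_minutes(predicted: int, actual: int) -> int:
    """Shortest distance between two dial times, in minutes (0 to 360)."""
    diff = abs(predicted - actual) % MINUTES_ON_DIAL
    return min(diff, MINUTES_ON_DIAL - diff)


def simulate_random_guessing(trials: int = 100_000, seed: int = 0) -> tuple[float, float]:
    """Return (mean, median) error when both guess and truth are uniform."""
    rng = random.Random(seed)
    errors = [
        circular_error_minutes(
            rng.randrange(MINUTES_ON_DIAL), rng.randrange(MINUTES_ON_DIAL)
        )
        for _ in range(trials)
    ]
    return statistics.mean(errors), statistics.median(errors)


mean_error, median_error = simulate_random_guessing()
print(f"mean error: {mean_error / 60:.2f} h, median error: {median_error / 60:.2f} h")
```

Under these assumptions a uniformly random guess lands about three hours from the true time on average, since the wrapped error is spread evenly between zero and six hours. That puts the upper end of the reported one-to-three-hour range at roughly chance level.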

Specific Weaknesses Revealed

AI systems particularly struggled with:

- Roman numerals (3.2% accuracy)

- Mirrored or backwards clock faces

- Colorful backgrounds or complex designs

- Clocks with second hands requiring precise readings

Interestingly, when AI models did successfully read a clock, they performed well on follow-up tasks like adding time or converting time zones. This suggests the core challenge lies in initial visual recognition rather than mathematical reasoning.

Industry Performance Breakdown

Google’s models led the pack, with Gemini 2.5 Pro achieving 13.3% accuracy and Gemini 2.5 Flash reaching 10.5%. OpenAI’s GPT-5 scored 8.4%, while Anthropic’s Claude models scored lower, with Claude 4.1 Opus at 5.6% and Claude 4 Sonnet at 4.2%.

xAI’s Grok 4 posted surprisingly poor results at 0.7% accuracy, because the model incorrectly flagged 63% of all clocks as showing impossible times when only 20.6% actually did.

Source: Alek Safar

Broader Implications for AI Development

The study builds on the “easy for humans, hard for AI” benchmark approach seen in tests like ARC-AGI and SimpleBench. While AI systems have rapidly conquered knowledge-intensive tasks and even surpassed human performance on many standardized tests, basic visual reasoning remains problematic.

The research suggests current scaling approaches may not solve visual reasoning challenges. Safar hypothesizes that analog clocks may be underrepresented in training data and that translating visual clock representations into text for reasoning creates additional complications.

ClockBench joins a growing collection of benchmarks designed to identify AI limitations that aren’t immediately obvious from performance on traditional tests. The full dataset remains private to prevent contamination of future AI training, with only small samples made public for testing.

The results raise questions about whether existing AI development paradigms can address these visual reasoning gaps or whether entirely new approaches will be needed – similar to how test-time compute unlocked progress in other domains.

For now, the humble analog clock stands as an unexpected fortress against artificial intelligence, readable by virtually any human but confounding the world’s most sophisticated AI systems.