Artificial Intelligence

Model Routers and the Feedback Trap: How AI Learns from Itself

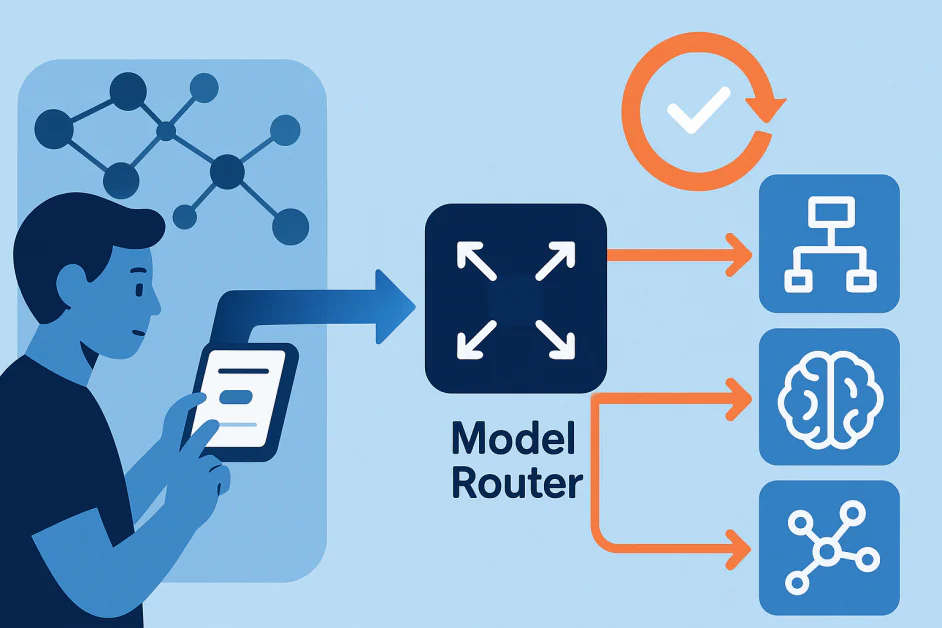

Modern AI systems no longer rely on a single model that handles every task. Instead, they combine several models, each built for a specific purpose. At the center of this setup sits the model router, a component that interprets a user's request and decides which model should handle it. In systems such as OpenAI's GPT-5, for example, a router can send a simple query to a lightweight model for the sake of speed while forwarding complex reasoning tasks to a more advanced model.

Routers are more than traffic managers. They learn from user behavior, for instance when users switch models or prefer certain answers. This creates a cycle: the router assigns the request, the model produces an answer, user reactions provide feedback, and the router updates its decisions. When these cycles run unnoticed in the background, hidden feedback loops can form. Such loops can amplify biases, reinforce flawed patterns, or degrade performance gradually in ways that are hard to detect.

This article examines how model routers work, how feedback loops arise, and what risks they pose as AI systems continue to evolve.

Understanding Model Routers in AI

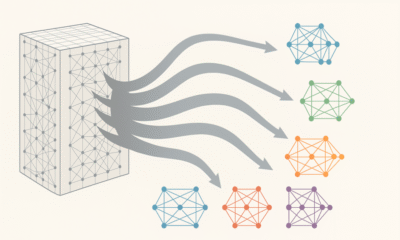

A router model is the decision layer in a multi-model AI system. Its job is to determine the model best suited to a task. The choice depends on factors such as query complexity, user intent, context, and the trade-off between cost, accuracy, and speed.
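To make the trade-off concrete, here is a minimal sketch of a rule-based router. Everything in it is an assumption made for illustration: the model names, the profile numbers, the keyword list, and the utility formula are invented, and a production router would typically be a trained model rather than a hand-written heuristic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical description of one candidate model."""
    name: str
    quality: float   # assumed answer quality on hard queries, 0..1
    cost: float      # assumed relative cost per request, 0..1
    latency: float   # assumed relative response time, 0..1


def estimate_complexity(query: str) -> float:
    """Toy proxy: longer queries and reasoning keywords score higher (0..1)."""
    keywords = ("why", "prove", "compare", "step by step", "analyze")
    length_score = min(len(query.split()) / 50.0, 1.0)
    keyword_score = 1.0 if any(k in query.lower() for k in keywords) else 0.0
    return 0.5 * length_score + 0.5 * keyword_score


def route(query: str, models: list[ModelProfile]) -> ModelProfile:
    """Pick the model with the best quality/cost/speed trade-off for this query."""
    complexity = estimate_complexity(query)

    def utility(m: ModelProfile) -> float:
        # Quality counts more as complexity rises; cost and latency count
        # more when the query is simple.
        overhead = 0.5 * (m.cost + m.latency)
        return complexity * m.quality - (1.0 - complexity) * overhead

    return max(models, key=utility)


MODELS = [
    ModelProfile("light", quality=0.4, cost=0.1, latency=0.1),
    ModelProfile("advanced", quality=0.95, cost=0.6, latency=0.6),
]

print(route("What time is it?", MODELS).name)
print(route("Prove step by step why the algorithm terminates", MODELS).name)
```

With these invented numbers, the short query goes to the light model and the reasoning query goes to the advanced one. The weights are fixed here; in a learning router they would be updated from feedback, which is where the loops discussed in this article come from.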

Unlike systems that follow fixed rules, most model routers are themselves machine learning systems. They are trained on real-world signals and adapt over time. They can learn from user behavior, such as switching between models, rating answers, or rephrasing prompts, as well as from automated evaluations that measure output quality.

This adaptability makes routers powerful but also risky. They increase efficiency and improve the user experience, yet the same feedback processes that refine their decisions can also create self-reinforcing loops. Over time, these loops can shape not only routing strategies but also the behavior of the larger AI system.

How Feedback Loops Arise

A feedback loop occurs when a system's output influences the data it later learns from. A simple example is a recommendation system: if you click on a sports video, the system shows you more sports content, which in turn influences what you watch next. Over time, the system reinforces its own patterns.

Predictive policing is another example. An algorithm may predict higher crime in certain neighborhoods, which can lead to more patrols there. More patrols uncover more incidents, which then appear to confirm the algorithm's prediction. The system looks accurate, but the data is skewed by its own influence.

Feedback loops can be direct or hidden. Direct loops are easy to spot, such as a recommendation system that is retrained on its own suggestions. Hidden loops are subtler because they arise when different parts of a system influence one another indirectly.

Model routers can create similar loops. A router's decision determines which model provides the answer. That answer shapes user behavior, which in turn serves as feedback for the router. Over time, the router may begin to reinforce patterns that worked in the past instead of consistently choosing the best model. These loops are hard to detect and can quietly push AI systems in unintended directions.

Why Feedback Loops in Routers Are Risky

Feedback loops help routers improve task assignment, but they also carry risks that can distort system behavior. One risk is the amplification of initial biases. If a router repeatedly sends a certain type of query to Model A, most of the feedback comes from Model A's outputs. The router may then conclude that Model A is always the best choice and sideline Model B, even when B could sometimes perform better. This uneven usage can reinforce itself. Models that do well on the tasks routed to them attract more requests, which further strengthens their position. Underused models get fewer opportunities to improve, which leads to imbalance and less diversity.
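The Model A versus Model B scenario can be reproduced in a toy simulation. The sketch below assumes a purely greedy router, two hypothetical models whose true quality values are invented, and feedback that is only observed for the model that actually answered. It is an illustration of the lock-in effect, not a description of any real router.

```python
import random

# Hypothetical true quality: Model B is actually better than Model A.
TRUE_QUALITY = {"A": 0.6, "B": 0.8}


def simulate_greedy(steps: int = 1000, seed: int = 0) -> tuple[dict[str, int], dict[str, float]]:
    """Route greedily and return (traffic per model, final quality estimates)."""
    rng = random.Random(seed)
    # Initial bias: the router starts out slightly favoring A.
    estimate = {"A": 0.5, "B": 0.4}
    observations = {"A": 1, "B": 1}  # each prior counts as one observation
    traffic = {"A": 0, "B": 0}

    for _ in range(steps):
        # Greedy routing: always pick the model that looks best so far.
        chosen = max(estimate, key=estimate.get)
        traffic[chosen] += 1
        # Feedback exists only for the model that answered; noise is small.
        feedback = TRUE_QUALITY[chosen] + rng.uniform(-0.05, 0.05)
        observations[chosen] += 1
        # Incremental running mean of observed feedback.
        estimate[chosen] += (feedback - estimate[chosen]) / observations[chosen]

    return traffic, estimate


traffic, estimate = simulate_greedy()
print(traffic)   # all traffic goes to A
print(estimate)  # B's estimate never moves from its biased starting value
```

Because A's observed feedback never drops below the stale estimate for B, the router never tries B and never learns that B is better. The router's statistics look consistent, yet they only describe the part of the system it chose to observe.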

Biases can also stem from the evaluation models used to judge correctness. If the "judge" model has blind spots, its biases pass directly to the router, which then optimizes for the judge's scores rather than for actual user needs. User behavior adds further complexity. If a router tends to return certain response styles, users may adapt their requests to those patterns and reinforce them even more. Over time, this can narrow both user behavior and system responses. Routers can also learn to associate certain query patterns or demographic characteristics with particular models. This can lead to systematically different experiences across groups and potentially amplify existing societal biases.

Another major problem is long-term drift. The decisions a router makes today influence tomorrow's training data. If models are retrained on outcomes shaped by routing, they may learn the router's preferences rather than independent approaches. This can make responses from different models more uniform and embed biases that persist over time.

Strategies for Breaking the Loop

Reducing the risk of hidden loops requires deliberate design and monitoring. Training should draw on diverse data sources, not just user clicks or model switches. Occasional random routing can also prevent one model from monopolizing a task type. Monitoring is essential. Regular audits can show whether a router is falling into fixed patterns or relying too heavily on a single model. Transparency about router decisions helps researchers spot biases early.
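One common way to implement occasional random routing is an epsilon-greedy policy: with a small probability the router ignores its estimates and picks a model at random. The sketch below is a toy simulation under invented assumptions (two hypothetical models, made-up quality values, an initial bias toward the weaker model); the parameter name `epsilon` and its default are illustrative choices.

```python
import random

# Hypothetical true quality: Model B is actually better than Model A.
TRUE_QUALITY = {"A": 0.6, "B": 0.8}


def simulate_epsilon_greedy(steps: int = 2000, epsilon: float = 0.1, seed: int = 0) -> dict[str, int]:
    """Return traffic per model when a fraction `epsilon` of routing is random."""
    rng = random.Random(seed)
    estimate = {"A": 0.5, "B": 0.4}  # the router starts out biased toward A
    observations = {"A": 1, "B": 1}  # each prior counts as one observation
    traffic = {"A": 0, "B": 0}

    for _ in range(steps):
        if rng.random() < epsilon:
            # Exploration: route to a random model regardless of estimates.
            chosen = rng.choice(sorted(estimate))
        else:
            # Exploitation: route to the model that currently looks best.
            chosen = max(estimate, key=estimate.get)
        traffic[chosen] += 1
        feedback = TRUE_QUALITY[chosen] + rng.uniform(-0.05, 0.05)
        observations[chosen] += 1
        # Incremental running mean of observed feedback.
        estimate[chosen] += (feedback - estimate[chosen]) / observations[chosen]

    return traffic


print(simulate_epsilon_greedy(epsilon=0.0))  # no exploration: A keeps all traffic
print(simulate_epsilon_greedy(epsilon=0.1))  # exploration lets the router discover B
```

With `epsilon=0.0` the router stays locked on A. With a small exploration rate, B receives enough traffic for its estimate to catch up, after which it wins most requests. The cost is that a fraction of users get a randomly chosen model, so the rate is a trade-off rather than a free fix.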

Routers should also be retrained regularly on current, balanced data so that old biases do not become entrenched. Involving human oversight, especially in sensitive domains, adds accountability. Humans can recognize when a router systematically favors one model or misclassifies certain queries.
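A simple automated check can support that kind of review. The sketch below assumes routing decisions are available as a plain list of model names, computes each model's share of traffic and a normalized entropy, and flags the log when one model exceeds a threshold. The function name, the 0.9 threshold, and the log format are hypothetical choices for illustration.

```python
import math
from collections import Counter


def audit_routing(log: list[str], models: list[str], max_share: float = 0.9) -> dict:
    """Summarize how evenly a routing log spreads traffic across known models."""
    if not log:
        raise ValueError("routing log is empty")
    counts = Counter(log)
    # Include models that never appear, so starved models show up with share 0.
    shares = {m: counts[m] / len(log) for m in models}
    # Shannon entropy in bits; zero-share models contribute nothing.
    entropy = sum(p * math.log2(1 / p) for p in shares.values() if p > 0)
    max_entropy = math.log2(len(models)) if len(models) > 1 else 1.0
    dominant = max(shares, key=shares.get)
    return {
        "shares": shares,
        "normalized_entropy": entropy / max_entropy,  # 1.0 means perfectly even
        "dominant": dominant,
        "flagged": shares[dominant] > max_share,
    }


skewed_log = ["A"] * 95 + ["B"] * 5
report = audit_routing(skewed_log, models=["A", "B"])
print(report["dominant"], report["flagged"], round(report["normalized_entropy"], 2))
```

A flag is only a prompt for human review, not proof of a problem: one model dominating a task type can be the right outcome. Tracking these numbers per query category and over time is what makes a slow drift visible.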

The key is to treat the router as a model that is itself subject to feedback, not as a fixed or neutral component. By recognizing how routers are shaped by the data they generate, researchers and developers can design systems that remain fair, adaptable, and reliable over the long term.

Conclusion

Model routers offer clear advantages in efficiency and adaptability, but they also carry hidden risks. Feedback loops within these systems can amplify biases, limit response diversity, and lock models into narrow behavioral patterns. As these architectures become more common, recognizing and addressing these risks early is critical to building fair, reliable, and truly adaptable AI systems.