
Human-Robot Collaboration System Based on AI Ready for Factory Floor

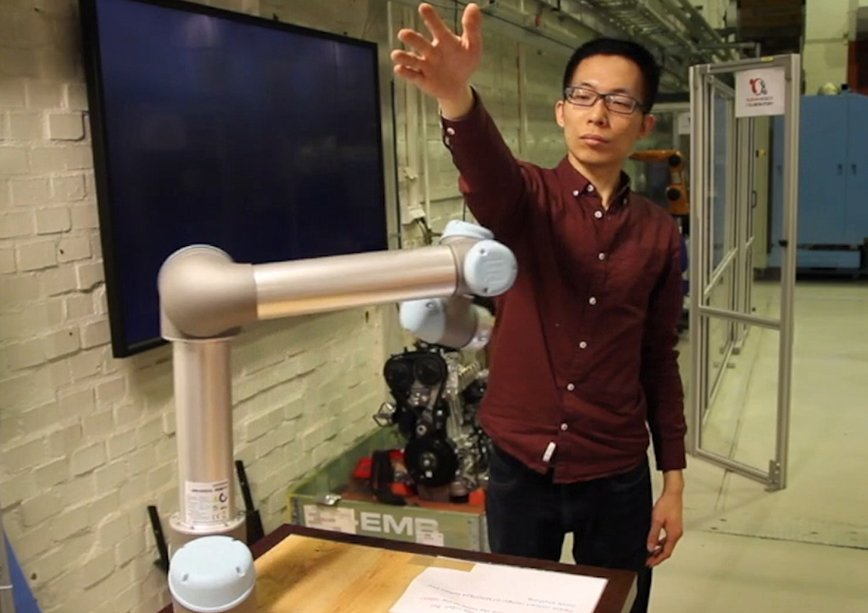

A new human-robot collaboration system based on artificial intelligence (AI) is ready for the factory floor. The system gives robots context about their working environment and circumstances so they can predict what people will do next, letting them work alongside humans on assembly lines more closely than before.

Conventional systems can only judge the distance between the robot and its human co-workers, but the new system can identify each individual worker and that person's skeletal model. According to Hongyi Liu, a researcher at KTH Royal Institute of Technology, the robot can use this information to recognize the worker's current pose and predict the next one. In short, the robot becomes aware of context while interacting with human workers.
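The article does not say how the system classifies or forecasts poses. As a rough illustration only, the Python sketch below matches an observed skeleton (a few joint coordinates) against hypothetical pose templates by nearest distance, then looks up the next pose in a hypothetical assembly-task sequence. `POSE_TEMPLATES`, `NEXT_POSE`, and all coordinates are invented for this example; this is not the KTH method.

```python
import math

# Hypothetical pose templates: (x, y) positions in metres of three tracked joints
# (shoulder, elbow, wrist). The values are invented for illustration.
POSE_TEMPLATES = {
    "reach": [(0.0, 1.4), (0.3, 1.3), (0.6, 1.2)],
    "grasp": [(0.0, 1.4), (0.2, 1.1), (0.3, 0.9)],
    "place": [(0.0, 1.4), (-0.2, 1.2), (-0.4, 1.0)],
}

# Hypothetical assembly sequence: which pose usually follows which.
NEXT_POSE = {"reach": "grasp", "grasp": "place", "place": "reach"}

def skeleton_distance(a, b):
    """Sum of Euclidean distances between corresponding joints."""
    return sum(math.dist(p, q) for p, q in zip(a, b))

def recognize_pose(skeleton):
    """Name of the template pose closest to the observed skeleton."""
    return min(POSE_TEMPLATES,
               key=lambda name: skeleton_distance(skeleton, POSE_TEMPLATES[name]))

def predict_next_pose(skeleton):
    """Predict the worker's next pose from the recognized current one."""
    return NEXT_POSE[recognize_pose(skeleton)]

observed = [(0.0, 1.4), (0.21, 1.12), (0.31, 0.92)]  # one noisy camera frame
print(recognize_pose(observed), "->", predict_next_pose(observed))
```

With this frame the sketch prints `grasp -> place`. The fixed lookup table is the simplest possible predictor and only stands in for whatever learned model the real system uses.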

KTH Professor Lihui Wang co-authored the study, which was published in Robotics and Computer-Integrated Manufacturing.

Artificial Intelligence and Transfer Learning

The system uses a form of artificial intelligence that requires less computational power and smaller datasets than traditional machine learning methods. Specifically, it relies on transfer learning, in which knowledge a model gains from training on one task is reused and adapted into an operational model instead of training from scratch.
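To make the idea of transfer learning concrete, here is a minimal sketch under heavily simplified assumptions: a two-feature logistic-regression model is first pretrained on a large synthetic "source" dataset, and its weights are then reused as the starting point for brief fine-tuning on a tiny "target" dataset. The data, thresholds, and helper names (`train`, `make_data`, and so on) are all hypothetical; the KTH system works on real sensor data with a far larger model.

```python
import math
import random

# Toy logistic-regression "model": w = [w1, w2, bias].
# Data, thresholds, and model size are hypothetical, chosen only to
# illustrate the pretrain-then-fine-tune workflow.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    """Probability that point x = (x1, x2) belongs to class 1."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + w[2])

def mean_log_loss(w, data):
    """Average binary cross-entropy over (x, y) pairs."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = predict(w, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def accuracy(w, data):
    return sum((predict(w, x) > 0.5) == (y == 1) for x, y in data) / len(data)

def train(w, data, lr=0.5, steps=200):
    """Full-batch gradient descent on the log loss, starting from weights w."""
    w = list(w)  # copy so the caller's weights are left untouched
    n = len(data)
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        for x, y in data:
            err = predict(w, x) - y
            grad[0] += err * x[0] / n
            grad[1] += err * x[1] / n
            grad[2] += err / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

def make_data(rng, n, threshold):
    """Points in [-1, 1]^2 labelled 1 when x1 + x2 > threshold."""
    points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]
    return [(p, 1 if p[0] + p[1] > threshold else 0) for p in points]

rng = random.Random(0)
source = make_data(rng, 500, threshold=0.0)  # large dataset for a related task
target = make_data(rng, 10, threshold=0.3)   # small dataset for the task we care about

# Step 1: pretrain on the large source dataset, starting from scratch.
pretrained = train([0.0, 0.0, 0.0], source)

# Step 2: reuse the pretrained weights and fine-tune briefly on the small target set.
fine_tuned = train(pretrained, target, steps=50)

print("target loss before fine-tuning:", mean_log_loss(pretrained, target))
print("target loss after fine-tuning: ", mean_log_loss(fine_tuned, target))
```

The point of the sketch is the second call to `train`: it starts from `pretrained` rather than from zeros, so only ten labelled target examples and a few dozen cheap update steps are needed to adapt the model.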

The technology is ahead of today's International Organization for Standardization (ISO) requirements for collaborative robot safety, so putting it into use will require action from industry.

Because it is context-aware, the new system enables more efficient collaboration than the one-dimensional interaction workers currently have with robots.

“Under the ISO standard and technical specification, when a human approaches a robot it slows down, and if he or she comes close enough it will stop. If the person moves away it resumes. That’s a pretty low level of context awareness,” Liu says.

“It jeopardizes efficiency. Production is slowed and humans cannot work closely to robots,” he continues.
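The distance-only behavior Liu describes (slow down, stop, resume) can be sketched in a few lines. This is a simplified illustration, not the procedure in ISO/TS 15066, which derives the protective separation distance from robot and human speeds, reaction times, and stopping times; the two fixed thresholds and the linear speed ramp below are hypothetical placeholders.

```python
def speed_scale(distance_m, stop_dist_m=0.5, slow_dist_m=1.5):
    """Fraction of full robot speed allowed, based only on human-robot distance.

    The two thresholds are hypothetical placeholders, not values from any standard.
    """
    if distance_m <= stop_dist_m:
        return 0.0  # person is close enough: protective stop
    if distance_m >= slow_dist_m:
        return 1.0  # person is far away: full speed
    # In between, slow down linearly as the person approaches.
    return (distance_m - stop_dist_m) / (slow_dist_m - stop_dist_m)

# A worker walks up to the robot and then away again.
for d in [2.0, 1.0, 0.4, 1.0, 2.0]:
    print(f"distance {d:.1f} m -> speed {speed_scale(d):.0%}")
```

The loop prints speeds of 100%, 50%, 0%, 50%, and 100%: the robot slows, stops, and resumes. Because the only input is distance, the rule reacts the same way whether the worker is reaching for a part or just walking past, which is the low level of context awareness Liu criticizes.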

The context-aware robot system can be compared to a self-driving car that recognizes how long a stoplight has been red. The car can then anticipate when the light will change, so instead of braking or downshifting, it adjusts its speed and cruises toward the intersection, sparing its brakes and transmission excessive wear.

In experiments, the new system demonstrated that a robot can operate more safely and efficiently, without slowing down production, thanks to its ability to understand context.

In one test, a person's hand unexpectedly blocked the path of the robot's arm. Instead of stopping, the robot predicted the hand's future trajectory and moved its arm to a different position.

“This is safety not just from the technical point of view in avoiding collisions, but being able to recognize the context of the assembly line,” Liu says. “This gives an additional layer of safety.”
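The hand-avoidance test can be illustrated with a deliberately simple sketch. The article does not describe the system's trajectory predictor, so this example assumes constant-velocity extrapolation of the hand position and a hypothetical list of candidate arm waypoints; the robot takes the first waypoint that stays clear of every predicted hand position and stops only if none does. All positions, the time step, and the clearance value are invented.

```python
import math

def predict_positions(prev, curr, dt, horizon_steps):
    """Extrapolate a tracked point (e.g. a hand) assuming constant velocity."""
    vx = (curr[0] - prev[0]) / dt
    vy = (curr[1] - prev[1]) / dt
    return [(curr[0] + vx * dt * k, curr[1] + vy * dt * k)
            for k in range(1, horizon_steps + 1)]

def choose_waypoint(candidates, predicted_hand, clearance):
    """Return the first candidate that keeps at least `clearance` from every
    predicted hand position, or None if no candidate is safe (i.e. stop)."""
    for wp in candidates:
        if all(math.dist(wp, h) >= clearance for h in predicted_hand):
            return wp
    return None

# Hand observed at two instants 0.1 s apart, moving along x (units: metres).
hand_path = predict_positions(prev=(0.0, 0.0), curr=(0.1, 0.0), dt=0.1, horizon_steps=3)
planned, detour = (0.3, 0.05), (0.3, 0.4)  # hypothetical arm waypoints
print(choose_waypoint([planned, detour], hand_path, clearance=0.15))
```

Here the planned waypoint lies in the hand's predicted path, so the sketch prints `(0.3, 0.4)` and picks the detour instead of stopping. Returning `None` when every candidate is blocked keeps the distance-based stop as a fallback.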