
Direct Preference Optimization: A Complete Guide

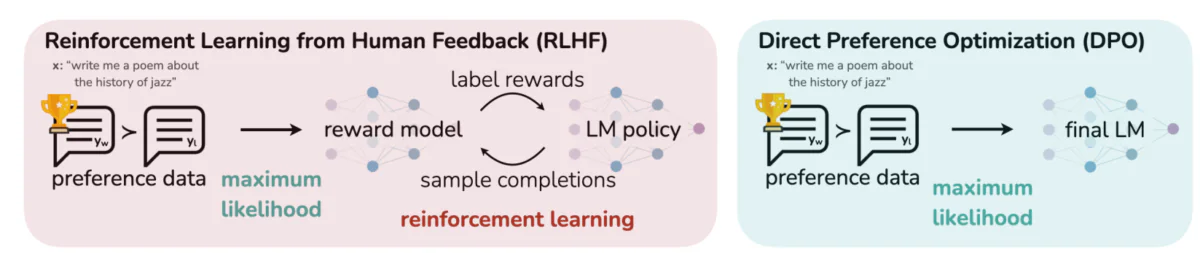

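Aligning large language models (LLMs) with human values and preferences is difficult. Traditional methods such as reinforcement learning from human feedback (RLHF) paved the way by incorporating human input to refine model outputs. However, RLHF can be complex and resource-intensive, requiring substantial compute and data processing. Direct Preference Optimization (DPO) has emerged as a newer, more streamlined approach that offers an efficient alternative to these methods. By simplifying the optimization process, DPO reduces the computational burden and lets models adapt to human preferences more quickly.

This guide takes a close look at DPO, covering its foundations, implementation, and practical applications.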

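The Need for Preference Alignment

To understand DPO, it helps to understand why aligning LLMs with human preferences matters so much. Despite their impressive capabilities, LLMs trained on vast datasets can sometimes produce outputs that are inconsistent, biased, or misaligned with human values. This misalignment can show up in several ways:

- Generating unsafe or harmful content
- Providing inaccurate or misleading information
- Exhibiting biases present in the training data

To address these problems, researchers have developed techniques that fine-tune LLMs using human feedback. The most prominent of these approaches has been RLHF.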

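Understanding RLHF: The Precursor to DPO

Reinforcement learning from human feedback (RLHF) has been the go-to method for aligning LLMs with human preferences. To appreciate its complexity, let's break down the RLHF process:

a) Supervised fine-tuning (SFT): The process begins by fine-tuning a pre-trained LLM on a dataset of high-quality responses. This step helps the model generate more relevant and coherent outputs for the target task.

b) Reward modeling: A separate reward model is trained to predict human preferences. This involves:

- Generating pairs of responses for a given prompt
- Having humans rate which response they prefer
- Training a model to predict these preferences

c) Reinforcement learning: The fine-tuned LLM is further optimized with reinforcement learning. The reward model provides the feedback signal, guiding the LLM toward responses that match human preferences.

The following is simplified Python pseudocode illustrating the RLHF process: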
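As a rough, runnable sketch of the three stages (not the article's original listing), the toy below replaces the LLM with a softmax over three canned responses. Every name in it (sft_step, train_reward_model, rl_step), along with the learning rates and the sample preference pairs, is a hypothetical stand-in chosen for illustration.

```python
import math

RESPONSES = ["helpful", "vague", "harmful"]  # toy stand-in for model outputs


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sft_step(logits, demo_idx, lr=0.5):
    """Stage a) one supervised step: raise the log-likelihood of a demonstration."""
    probs = softmax(logits)
    return [
        logit + lr * ((1.0 if i == demo_idx else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


def train_reward_model(pairs, n, lr=0.5, epochs=200):
    """Stage b) fit one scalar reward per response with a Bradley-Terry loss."""
    r = [0.0] * n
    for _ in range(epochs):
        for win, lose in pairs:
            p_win = 1.0 / (1.0 + math.exp(-(r[win] - r[lose])))
            r[win] += lr * (1.0 - p_win)   # gradient ascent on log p(win > lose)
            r[lose] -= lr * (1.0 - p_win)
    return r


def rl_step(logits, ref_probs, rewards, beta=0.5, lr=0.5):
    """Stage c) exact policy-gradient step on E[r] - beta * KL(pi || ref)."""
    probs = softmax(logits)
    f = [rw - beta * math.log(p / q) for rw, p, q in zip(rewards, probs, ref_probs)]
    baseline = sum(p * v for p, v in zip(probs, f))
    return [logit + lr * p * (v - baseline) for logit, p, v in zip(logits, probs, f)]


# a) SFT on a few demonstrations of the "helpful" response
logits = [0.0, 0.0, 0.0]
for _ in range(5):
    logits = sft_step(logits, demo_idx=0)
ref_probs = softmax(logits)  # frozen reference policy

# b) Reward model from human preference pairs (winner index, loser index)
rewards = train_reward_model([(0, 1), (0, 2), (1, 2)], n=len(RESPONSES))

# c) RL fine-tuning against the learned reward, regularized toward the reference
for _ in range(200):
    logits = rl_step(logits, ref_probs, rewards)
policy_probs = softmax(logits)
```

Even in this toy, three separately trained components are needed, which is the overhead that DPO is designed to remove.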

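While effective, RLHF has several drawbacks:

- It requires training and maintaining multiple models (the SFT model, the reward model, and the RL-optimized model).
- The RL process can be unstable and sensitive to hyperparameters.
- It is computationally expensive, requiring many forward and backward passes through the models.

These limitations motivated the search for simpler, more efficient alternatives, which led to the development of DPO.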

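Direct Preference Optimization: Core Concepts

This image contrasts two approaches to aligning LLM outputs with human preferences: reinforcement learning from human feedback (RLHF) and Direct Preference Optimization (DPO). RLHF uses a reward model to guide the language model's policy through an iterative feedback loop, whereas DPO uses preference data to optimize the model's outputs directly toward the responses humans prefer. The comparison highlights the strengths and potential applications of each method and offers insight into how future LLMs can be trained to better meet human expectations.

The key ideas behind DPO:

a) Implicit reward modeling: DPO treats the language model itself as an implicit reward function, removing the need for a separate reward model.

b) Policy-based formulation: Instead of optimizing a reward function, DPO directly optimizes the policy (the language model) to maximize the probability of preferred responses.

c) Closed-form solution: DPO exploits a mathematical insight that yields a closed-form expression for the optimal policy, removing the need for iterative RL updates.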

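Implementing DPO: A Practical Code Walkthrough

The image below shows a code snippet that implements the DPO loss function in PyTorch. This function plays a central role in refining how a language model prioritizes outputs according to human preferences. Its main components are:

- Function signature: The dpo_loss function takes the policy log-probabilities (pi_logps), the reference model log-probabilities (ref_logps), and the indices of the preferred and dispreferred completions (yw_idxs, yl_idxs). In addition, the beta parameter controls the strength of the KL penalty.
- Log-probability extraction: The code extracts the log-probabilities of the preferred and dispreferred completions from both the policy model and the reference model.
- Log-ratio calculation: The difference between the log-probabilities of the preferred and dispreferred completions is computed for both the policy model and the reference model. This ratio determines the direction and magnitude of the optimization.
- Loss and reward calculation: The loss is computed with the logsigmoid function, while the rewards are obtained by scaling the difference between the policy and reference log-probabilities by beta.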
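For readers without PyTorch at hand, the same four steps can be traced in plain Python. This is a minimal sketch: the name dpo_loss_plain, the list-based inputs, and the sample numbers are assumptions made for illustration, and the snippet in the figure remains the reference.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def dpo_loss_plain(pi_logps, ref_logps, yw_idxs, yl_idxs, beta):
    """Per-pair DPO losses and per-completion implicit rewards."""
    losses = []
    for w, l in zip(yw_idxs, yl_idxs):
        # Log-probability extraction and log-ratio calculation
        pi_logratio = pi_logps[w] - pi_logps[l]
        ref_logratio = ref_logps[w] - ref_logps[l]
        # Loss: negative log-sigmoid of the scaled difference of log-ratios
        losses.append(-log_sigmoid(beta * (pi_logratio - ref_logratio)))
    # Rewards: beta-scaled gap between policy and reference log-probabilities
    rewards = [beta * (p - r) for p, r in zip(pi_logps, ref_logps)]
    return losses, rewards


# Four completions forming two preference pairs: (0 over 1) and (2 over 3)
pi_logps = [-4.0, -6.0, -5.0, -5.0]
ref_logps = [-5.0, -5.0, -5.0, -5.0]
losses, rewards = dpo_loss_plain(pi_logps, ref_logps, [0, 2], [1, 3], beta=0.1)
```

A pair on which the policy and the reference agree (the second pair here) yields a loss of log 2, about 0.693. The loss drops below that once the policy favors the preferred completion more strongly than the reference does, as in the first pair.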

To understand how DPO achieves these goals, let's look more closely at the math behind it.

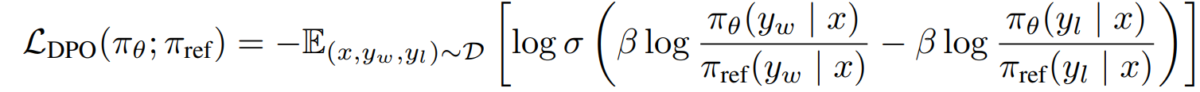

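The Mathematics of DPO

DPO is a clever reformulation of the preference learning problem. Here is a step-by-step breakdown:

a) Starting point: KL-constrained reward maximization

The original RLHF objective can be written as:

max_πθ E_(x~D, y~πθ(y|x)) [r(x,y)] - β * KL(πθ(y|x) || πref(y|x))

where:

- πθ is the policy (language model) we are optimizing.
- r(x,y) is the reward function.
- πref is the reference policy (usually the initial SFT model).
- β controls the strength of the KL divergence constraint.

b) Optimal policy form: It can be shown that the optimal policy for this objective takes the form:

π_r(y|x) = 1/Z(x) * πref(y|x) * exp(1/β * r(x,y))

where Z(x) is a normalization constant.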
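A small numeric check can make this closed form concrete. The sketch below assumes a single prompt with three candidate responses and made-up rewards and reference probabilities. It builds π_r from the formula and compares its objective value with that of the reference policy and of a greedy policy that puts all mass on the highest-reward response.

```python
import math


def objective(policy, ref, rewards, beta):
    """E_{y~policy}[r(y)] - beta * KL(policy || ref) for one prompt."""
    expected_reward = sum(p * r for p, r in zip(policy, rewards))
    kl = sum(p * math.log(p / q) for p, q in zip(policy, ref) if p > 0)
    return expected_reward - beta * kl


def optimal_policy(ref, rewards, beta):
    """pi_r(y) = ref(y) * exp(r(y) / beta) / Z."""
    unnorm = [q * math.exp(r / beta) for q, r in zip(ref, rewards)]
    z = sum(unnorm)  # normalization constant Z(x)
    return [u / z for u in unnorm]


ref = [0.5, 0.3, 0.2]        # hypothetical reference probabilities
rewards = [1.0, 0.0, 2.0]    # hypothetical rewards
beta = 0.5

pi_star = optimal_policy(ref, rewards, beta)
greedy = [0.0, 0.0, 1.0]     # all mass on the highest-reward response

j_star = objective(pi_star, ref, rewards, beta)
j_ref = objective(ref, ref, rewards, beta)
j_greedy = objective(greedy, ref, rewards, beta)
```

With these numbers j_star exceeds both alternatives: the greedy policy earns more reward but pays a larger KL penalty, while the reference pays no penalty but earns less reward. Substituting π_r into the objective also shows that its value equals β * log(Z(x)).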

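c) Reward-policy duality: The key insight of DPO is to express the reward function in terms of the optimal policy:

r(x,y) = β * log(π_r(y|x) / πref(y|x)) + β * log(Z(x))

d) Preference model: Assuming preferences follow the Bradley-Terry model, the probability of preferring y1 over y2 can be written as:

p*(y1 ≻ y2 | x) = σ(r*(x,y1) - r*(x,y2))

where σ is the logistic function.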
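To see why the normalizer is harmless here, the sketch below (hypothetical numbers, one prompt, two responses) recovers rewards from a policy via the duality in c) and feeds them into the Bradley-Terry probability. Shifting both rewards by the same constant, which is what the β * log(Z(x)) term does, leaves the preference probability unchanged.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bradley_terry(r1, r2):
    """p(y1 preferred over y2) = sigma(r1 - r2)."""
    return sigmoid(r1 - r2)


def implicit_reward(pi, ref, beta, log_z=0.0):
    """r = beta * log(pi / ref) + beta * log Z."""
    return beta * math.log(pi / ref) + beta * log_z


beta = 0.5
pi_y1, ref_y1 = 0.6, 0.3   # hypothetical probabilities for y1
pi_y2, ref_y2 = 0.1, 0.4   # hypothetical probabilities for y2

# Same pair scored with two different (arbitrary) values of log Z(x)
p_a = bradley_terry(
    implicit_reward(pi_y1, ref_y1, beta, log_z=0.0),
    implicit_reward(pi_y2, ref_y2, beta, log_z=0.0),
)
p_b = bradley_terry(
    implicit_reward(pi_y1, ref_y1, beta, log_z=3.7),
    implicit_reward(pi_y2, ref_y2, beta, log_z=3.7),
)
```

Both calls give the same probability, because only the difference of the two rewards enters the sigmoid.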

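e) DPO objective: Substituting the reward-policy duality into the preference model yields the DPO objective:

L_DPO(πθ; πref) = -E_(x,y_w,y_l)~D [log σ(β * log(πθ(y_w|x) / πref(y_w|x)) - β * log(πθ(y_l|x) / πref(y_l|x)))]

The β * log(Z(x)) terms cancel in the difference, so the intractable normalizer never has to be computed. This objective can be optimized with standard gradient descent, with no RL algorithm required.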
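As an illustration of that last point, the following sketch minimizes the DPO loss for one preference pair with plain gradient descent on a two-response softmax policy. The tabular policy, learning rate, and step count are assumptions for the toy setting; no sampling and no reward model are involved.

```python
import math


def log_softmax(logits):
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dpo_loss_and_grad(logits, ref_logps, w, l, beta):
    """DPO loss for one pair (w preferred over l) and its gradient w.r.t. logits."""
    logps = log_softmax(logits)
    margin = beta * ((logps[w] - ref_logps[w]) - (logps[l] - ref_logps[l]))
    loss = math.log1p(math.exp(-margin))  # equals -log(sigmoid(margin))
    # d(loss)/d(margin) = -sigmoid(-margin). The softmax normalizer cancels in
    # logps[w] - logps[l], so the margin depends only on logits[w] - logits[l].
    coeff = -sigmoid(-margin) * beta
    grad = [0.0] * len(logits)
    grad[w] += coeff
    grad[l] -= coeff
    return loss, grad


ref_logps = log_softmax([0.0, 0.0])   # reference policy: 50/50
logits = [0.0, 0.0]                   # policy starts at the reference
history = []
for _ in range(100):
    loss, grad = dpo_loss_and_grad(logits, ref_logps, w=0, l=1, beta=0.5)
    history.append(loss)
    logits = [v - 1.0 * g for v, g in zip(logits, grad)]  # gradient descent step

final_prob_preferred = math.exp(log_softmax(logits)[0])
```

The loss starts at log 2, because the policy equals the reference, and decreases as probability mass moves toward the preferred response.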

Implementing DPO

Now that we understand the theory behind DPO, let's see how to implement it in practice. We will use Python and PyTorch for this example:

```python
import torch
import torch.nn.functional as F


class DPOTrainer:
    def __init__(self, model, ref_model, beta=0.1, lr=1e-5):
        self.model = model
        self.ref_model = ref_model
        self.beta = beta
        self.optimizer = torch.optim.AdamW(self.model.parameters(), lr=lr)

    def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs):
        """
        pi_logps: policy logprobs, shape (B,)
        ref_logps: reference model logprobs, shape (B,)
        yw_idxs: preferred completion indices in [0, B-1], shape (T,)
        yl_idxs: dispreferred completion indices in [0, B-1], shape (T,)
        self.beta: temperature controlling strength of KL penalty

        Each pair of (yw_idxs[i], yl_idxs[i]) represents the indices of a
        single preference pair.
        """
        # Extract log probabilities for the preferred and dispreferred completions
        pi_yw_logps, pi_yl_logps = pi_logps[yw_idxs], pi_logps[yl_idxs]
        ref_yw_logps, ref_yl_logps = ref_logps[yw_idxs], ref_logps[yl_idxs]

        # Calculate log-ratios
        pi_logratios = pi_yw_logps - pi_yl_logps
        ref_logratios = ref_yw_logps - ref_yl_logps

        # Compute DPO loss
        losses = -F.logsigmoid(self.beta * (pi_logratios - ref_logratios))
        # Implicit rewards, detached because they are only used for monitoring
        rewards = self.beta * (pi_logps - ref_logps).detach()
        return losses.mean(), rewards

    @staticmethod
    def sequence_logps(model, x):
        """Sum of next-token log-probs per sequence, shape (B,).

        Assumes model(x) returns logits of shape (B, L, V) for token ids x of
        shape (B, L). A full implementation would also mask out prompt and
        padding tokens so that only completion tokens are scored.
        """
        logps = model(x)[:, :-1, :].log_softmax(-1)
        targets = x[:, 1:].unsqueeze(-1)
        return logps.gather(-1, targets).squeeze(-1).sum(-1)

    def train_step(self, batch):
        x, yw_idxs, yl_idxs = batch
        self.optimizer.zero_grad()

        # Compute sequence log probabilities for the model and the reference model
        pi_logps = self.sequence_logps(self.model, x)
        with torch.no_grad():  # the reference model is never updated
            ref_logps = self.sequence_logps(self.ref_model, x)

        # Compute the loss
        loss, _ = self.compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs)
        loss.backward()
        self.optimizer.step()
        return loss.item()


# Usage (YourLanguageModel and dataloader are placeholders)
model = YourLanguageModel()  # Initialize your model
ref_model = YourLanguageModel()  # Load pre-trained reference model
ref_model.eval()

trainer = DPOTrainer(model, ref_model)

for batch in dataloader:
    loss = trainer.train_step(batch)
    print(f"Loss: {loss}")
```
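The pi_logps and ref_logps passed to compute_loss are per-sequence log-probabilities. One common way to obtain them, sketched below in plain Python, is to sum the token-level log-probabilities of the completion while skipping prompt tokens. The function name and the mask convention (1 for completion tokens, 0 otherwise) are assumptions for illustration.

```python
import math


def sequence_logp(token_logps, completion_mask):
    """Sum token log-probs where the mask is 1 (completion tokens only)."""
    if len(token_logps) != len(completion_mask):
        raise ValueError("token_logps and completion_mask must align")
    return sum(lp for lp, keep in zip(token_logps, completion_mask) if keep)


# Hypothetical token probabilities for a 5-token sequence:
# the first two tokens belong to the prompt, the last three to the completion.
token_probs = [0.9, 0.8, 0.5, 0.25, 0.5]
token_logps = [math.log(p) for p in token_probs]
mask = [0, 0, 1, 1, 1]

logp = sequence_logp(token_logps, mask)
# exp(logp) is the probability of the completion given the prompt
completion_prob = math.exp(logp)
```

Here the completion probability is 0.5 * 0.25 * 0.5 = 0.0625. The prompt tokens do not contribute, since both completions in a preference pair share the same prompt.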

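Challenges and Future Directions

While DPO offers significant advantages over traditional RLHF approaches, challenges and open research areas remain:

a) Scalability to larger models:

As language models keep growing, efficiently applying DPO to models with hundreds of billions of parameters remains an open challenge. Researchers are exploring techniques such as:

- Parameter-efficient fine-tuning methods (e.g., LoRA, prefix tuning)
- Distributed training optimizations
- Gradient checkpointing and mixed-precision training

An example of using LoRA with DPO: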

```python
from peft import LoraConfig, get_peft_model


class DPOTrainerWithLoRA(DPOTrainer):
    def __init__(self, model, ref_model, beta=0.1, lr=1e-5, lora_rank=8):
        lora_config = LoraConfig(
            r=lora_rank,
            lora_alpha=32,
            target_modules=["q_proj", "v_proj"],  # module names depend on the architecture
            lora_dropout=0.05,
            bias="none",
            task_type="CAUSAL_LM",
        )
        # Wrap the policy with LoRA adapters. PEFT freezes the base weights, and
        # AdamW skips parameters without gradients, so only the adapters are updated.
        super().__init__(get_peft_model(model, lora_config), ref_model, beta, lr)


# Usage (YourLargeLanguageModel is a placeholder; ref_model as defined earlier)
base_model = YourLargeLanguageModel()
dpo_trainer = DPOTrainerWithLoRA(base_model, ref_model)
```
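To get a feel for the savings, the arithmetic below counts trainable parameters for rank-8 adapters on the q_proj and v_proj matrices. The layer count and hidden size are hypothetical, and the projections are assumed to be square. A rank-r adapter on a d_out x d_in weight adds r * (d_in + d_out) parameters.

```python
def lora_params(d_in, d_out, rank):
    """Parameters added by one LoRA adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


hidden_size = 4096      # hypothetical model width
num_layers = 32         # hypothetical number of transformer blocks
rank = 8                # same value as lora_rank=8
targets_per_layer = 2   # q_proj and v_proj

full = num_layers * targets_per_layer * hidden_size * hidden_size
adapters = num_layers * targets_per_layer * lora_params(hidden_size, hidden_size, rank)
fraction = adapters / full
```

With these assumed sizes the adapters hold about 4.2 million trainable parameters, which is 1/256 of the roughly 1.07 billion weights in the targeted projection matrices.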

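b) Multi-task and few-shot adaptation:

Developing DPO techniques that can adapt efficiently to new tasks or domains with limited preference data is an active area of research. Approaches being explored include:

- Meta-learning frameworks for rapid adaptation
- Prompt-based fine-tuning for DPO
- Transfer learning from general preference models to specific domains

c) Handling ambiguous or conflicting preferences:

Real-world preference data often contains ambiguity or conflicts. Improving DPO's robustness to such data is important. Potential solutions include:

- Probabilistic preference modeling
- Active learning to resolve ambiguity
- Multi-agent preference aggregation

An example of probabilistic preference modeling: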

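```python
class ProbabilisticDPOTrainer(DPOTrainer):
    # Note: unlike the base class, this returns only the scalar loss, so call
    # compute_loss directly (as below) rather than through train_step.
    def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs, preference_prob):
        # Extract log probabilities for both completions of each pair
        pi_yw_logps, pi_yl_logps = pi_logps[yw_idxs], pi_logps[yl_idxs]
        ref_yw_logps, ref_yl_logps = ref_logps[yw_idxs], ref_logps[yl_idxs]

        # Policy log-ratio minus reference log-ratio, as in the standard DPO loss
        log_ratio_diff = (pi_yw_logps - pi_yl_logps) - (ref_yw_logps - ref_yl_logps)

        # Soft-label cross-entropy: preference_prob is the probability that yw
        # is truly preferred over yl (a float or a tensor of shape (T,))
        loss = -(
            preference_prob * F.logsigmoid(self.beta * log_ratio_diff)
            + (1 - preference_prob) * F.logsigmoid(-self.beta * log_ratio_diff)
        )
        return loss.mean()


# Usage (pi_logps, ref_logps, yw_idxs, yl_idxs as in DPOTrainer.compute_loss)
trainer = ProbabilisticDPOTrainer(model, ref_model)
loss = trainer.compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs, preference_prob=0.8)  # 80% confidence in preference
```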
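The preference_prob value has to come from somewhere. One simple option, shown here as an assumption rather than a prescribed method, is to estimate it from annotator votes with add-one (Laplace) smoothing, so that no pair is ever labeled with full certainty. The function names and the vote format are hypothetical.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def preference_prob_from_votes(votes_for_w, votes_for_l, smoothing=1.0):
    """Smoothed fraction of annotators who preferred the 'winning' response."""
    total = votes_for_w + votes_for_l + 2 * smoothing
    return (votes_for_w + smoothing) / total


def soft_dpo_loss(margin, preference_prob):
    """Soft-label loss for one pair; margin = beta * (policy - reference log-ratio)."""
    return -(
        preference_prob * log_sigmoid(margin)
        + (1 - preference_prob) * log_sigmoid(-margin)
    )


p_split = preference_prob_from_votes(3, 2)   # annotators disagree
p_clear = preference_prob_from_votes(5, 0)   # annotators agree

# The same confident margin costs more when the label itself is uncertain
loss_split = soft_dpo_loss(2.0, p_split)
loss_clear = soft_dpo_loss(2.0, p_clear)
```

With a 3-to-2 vote the smoothed probability is 4/7, so a policy that strongly favors the nominal winner is penalized more than it would be for a unanimous 5-to-0 pair (probability 6/7).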

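d) Combining DPO with other alignment techniques:

Integrating DPO with other alignment approaches could lead to more robust and capable systems:

- Constitutional AI principles for explicit constraint satisfaction
- Debate and recursive reward modeling for eliciting complex preferences
- Inverse reinforcement learning for inferring underlying reward functions

An example of combining DPO with Constitutional AI: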

```python
class ConstitutionalDPOTrainer(DPOTrainer):
    def __init__(self, model, ref_model, beta=0.1, lr=1e-5, constraints=None):
        super().__init__(model, ref_model, beta, lr)
        self.constraints = constraints or []

    def compute_loss(self, pi_logps, ref_logps, yw_idxs, yl_idxs):
        # The base class returns (mean loss, rewards); unpack before adding penalties
        base_loss, rewards = super().compute_loss(pi_logps, ref_logps, yw_idxs, yl_idxs)

        constraint_loss = 0
        for constraint in self.constraints:
            constraint_loss += constraint(self.model, pi_logps, ref_logps, yw_idxs, yl_idxs)

        return base_loss + constraint_loss, rewards


# Usage
def safety_constraint(model, pi_logps, ref_logps, yw_idxs, yl_idxs):
    # Implement safety checking logic; compute_unsafe_score is a placeholder
    # that must return a scalar tensor
    unsafe_score = compute_unsafe_score(model, pi_logps, ref_logps)
    return torch.relu(unsafe_score - 0.5)  # Penalize if unsafe score > 0.5


constraints = [safety_constraint]
trainer = ConstitutionalDPOTrainer(model, ref_model, constraints=constraints)
```

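Practical Considerations and Best Practices

When implementing DPO for real-world applications, keep the following tips in mind:

a) Data quality: The quality of the preference data is critical. Make sure your dataset:

- Covers a diverse range of inputs and desired behaviors
- Has consistent and reliable preference annotations
- Balances different types of preferences (e.g., factuality, safety, style)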
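Annotation consistency can be checked mechanically before training. The sketch below assumes each record is a (prompt, chosen, rejected) tuple, which is a hypothetical format. It flags pairs that appear with both orderings, as well as degenerate pairs whose two responses are identical.

```python
def audit_preferences(records):
    """Return (conflicts, degenerate) for a list of (prompt, chosen, rejected)."""
    seen = set(records)
    conflicts = set()
    degenerate = set()
    for prompt, chosen, rejected in seen:
        if chosen == rejected:
            degenerate.add((prompt, chosen))
        elif (prompt, rejected, chosen) in seen:
            # Store each conflicting pair once, regardless of order
            first, second = sorted((chosen, rejected))
            conflicts.add((prompt, first, second))
    return sorted(conflicts), sorted(degenerate)


records = [
    ("Summarize the article.", "short summary", "rambling summary"),
    ("Summarize the article.", "rambling summary", "short summary"),  # conflict
    ("Is 7 prime?", "Yes", "No"),
    ("Translate 'hello'.", "hola", "hola"),                            # degenerate
]
conflicts, degenerate = audit_preferences(records)
```

Conflicting pairs are not necessarily errors, since annotators can legitimately disagree, but they are worth reviewing or down-weighting rather than training on as if they were certain.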

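b) Hyperparameter tuning: DPO has fewer hyperparameters than RLHF, but tuning still matters:

- β (beta): Controls the trade-off between satisfying preferences and diverging from the reference model. Start with values around 0.1-0.5.
- Learning rate: Use a lower learning rate than standard fine-tuning, typically in the range 1e-6 to 1e-5.
- Batch size: Larger batch sizes (32-128) often work well for preference learning.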
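These starting points can be captured in a small configuration object. The DPOConfig class below is a hypothetical helper, not part of any library, and its warnings simply encode the ranges suggested above rather than hard limits.

```python
from dataclasses import dataclass


@dataclass
class DPOConfig:
    beta: float = 0.1
    learning_rate: float = 1e-6
    batch_size: int = 32

    def warnings(self):
        """List settings that fall outside the suggested starting ranges."""
        issues = []
        if not 0.1 <= self.beta <= 0.5:
            issues.append(f"beta={self.beta} is outside 0.1-0.5")
        if not 1e-6 <= self.learning_rate <= 1e-5:
            issues.append(f"learning_rate={self.learning_rate} is outside 1e-6 to 1e-5")
        if not 32 <= self.batch_size <= 128:
            issues.append(f"batch_size={self.batch_size} is outside 32-128")
        return issues


default_issues = DPOConfig().warnings()
custom_issues = DPOConfig(beta=2.0, learning_rate=5e-6, batch_size=8).warnings()
```

The defaults produce no warnings, while the second configuration is flagged for its beta and batch size.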

c) Iterative refinement: DPO can be applied iteratively:

- Train an initial model with DPO
- Generate new responses with the trained model
- Collect new preference data on these responses
- Retrain on the expanded dataset
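The loop can be expressed as a small driver function. Everything passed in below (train_dpo, generate, collect_preferences) is a hypothetical callable that the reader would supply; the stubs exist only so the sketch runs end to end.

```python
def iterative_dpo(model, prompts, preferences, rounds,
                  train_dpo, generate, collect_preferences):
    """Alternate DPO training with fresh preference collection."""
    dataset = list(preferences)
    for _ in range(rounds):
        # Train (or retrain) on everything collected so far
        model = train_dpo(model, dataset)
        # Generate new responses with the trained model
        responses = [(prompt, generate(model, prompt)) for prompt in prompts]
        # Collect new preference data and expand the dataset
        dataset.extend(collect_preferences(responses))
    return model, dataset


# Stubs standing in for real training, generation, and annotation
def train_dpo(model, dataset):
    return {"version": model["version"] + 1, "trained_on": len(dataset)}


def generate(model, prompt):
    tag = f"v{model['version']}"
    return (f"{prompt} [{tag} draft A]", f"{prompt} [{tag} draft B]")


def collect_preferences(responses):
    # Pretend annotators always prefer draft A
    return [(prompt, a, b) for prompt, (a, b) in responses]


model, dataset = iterative_dpo(
    {"version": 0, "trained_on": 0}, ["p1", "p2"], [("p0", "good", "bad")],
    rounds=3, train_dpo=train_dpo, generate=generate,
    collect_preferences=collect_preferences,
)
```

Each round trains on a larger dataset than the last: 1, 3, and then 5 preference pairs in this toy run.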

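This image shows how LLMs such as GPT-4 compare with human judgment across different training techniques, including Direct Preference Optimization (DPO), supervised fine-tuning (SFT), and Proximal Policy Optimization (PPO). The table indicates that GPT-4's outputs are increasingly aligned with human preferences, particularly on summarization tasks. The level of agreement between GPT-4 and human reviewers demonstrates the model's ability to produce content that resonates with human evaluators nearly as well as human-written content.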

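Case Studies and Applications

To illustrate DPO's effectiveness, let's look at some real-world applications and variants:

- Iterative DPO: Developed by Snorkel (2023), this variant combines rejection sampling with DPO, enabling a more refined selection process for training data. By iterating over multiple rounds of preference sampling, the model generalizes better and avoids overfitting to noisy or biased preferences.
- IPO (Identity Preference Optimization): Introduced by Azar et al. (2023), IPO adds a regularization term to prevent overfitting, a common problem in preference-based optimization. This extension lets the model balance adherence to preferences against preserving its ability to generalize.
- KTO (Kahneman-Tversky Optimization): A more recent variant from Ethayarajh et al. (2023), KTO dispenses with paired preferences entirely. Instead, it learns from a binary signal of whether each individual output is desirable or undesirable, using a utility function inspired by Kahneman and Tversky's prospect theory.
- Multi-modal DPO for cross-domain learning, Xu et al. (2024): An approach in which DPO is applied across modalities such as text, images, and audio, demonstrating its versatility in aligning models with human preferences across diverse data types. This work highlights DPO's potential for building more comprehensive AI systems that can handle complex multi-modal tasks.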