The Full Guide to Fine-Tuning Large Language Models

Large language models (LLMs) like GPT-4, LaMDA, PaLM, and others have taken the world by storm with their remarkable ability to understand and generate human-like text on a vast range of topics. These models are pre-trained on massive datasets comprising billions of words from the internet, books, and other sources.

This pre-training phase instills the models with extensive general knowledge about language, topics, and reasoning, and even certain biases present in the training data. However, despite their incredible breadth, these pre-trained LLMs lack specialized expertise for specific domains or tasks.

This is where fine-tuning comes in: the process of adapting a pre-trained LLM to excel at a particular application or use case. By further training the model on a smaller, task-specific dataset, we can tune its capabilities to align with the nuances and requirements of that domain.

Fine-tuning is analogous to channeling the wide-ranging knowledge of a highly educated generalist into a subject-matter expert specialized in one field. In this guide, we'll explore the what, why, and how of fine-tuning LLMs.

What is Fine-Tuning?

At its core, fine-tuning involves taking a large pre-trained model and updating its parameters in a second training phase, on a dataset tailored to your target task or domain. This allows the model to learn and internalize the nuances, patterns, and objectives specific to that narrower area.

While pre-training captures broad language understanding from a huge and diverse text corpus, fine-tuning specializes that general competency. It's akin to taking a Renaissance man and molding him into an industry expert.

The pre-trained model's weights, which encode its general knowledge, are used as the starting point (the initialization) for the fine-tuning process. The model is then trained further, but this time on examples directly relevant to the end application.

By exposing the model to this specialized data distribution and tuning its parameters accordingly, we make the LLM more accurate and effective for the target use case, while still benefiting from the broad pre-trained capabilities as a foundation.
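The sketch below is a toy illustration of that idea. It is not how LLMs are actually trained. It assumes a two-parameter linear model `y = w*x + b` fitted by plain gradient descent, and the datasets and helper names are hypothetical. The one point it makes matches the text: fine-tuning starts from the pre-trained weights rather than from a fresh initialization, then continues training on a small domain-specific set.

```python
def train(w, b, data, lr=0.05, epochs=200):
    """Full-batch gradient descent on mean squared error for y = w*x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(w, b, data):
    """Mean squared error of the linear model on a dataset of (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# Hypothetical broad "pre-training" data following y = 2x + 1.
general_data = [(x / 10, 2 * (x / 10) + 1) for x in range(-20, 21)]
# Hypothetical small "domain" dataset with a slightly different relationship.
domain_data = [(0.0, 1.5), (0.5, 2.6), (1.0, 3.7)]

# Pre-training: start from an uninformed initialization.
w_pre, b_pre = train(0.0, 0.0, general_data)

# Fine-tuning: start from the pre-trained weights, train briefly on domain data.
w_ft, b_ft = train(w_pre, b_pre, domain_data, epochs=50)

print(f"pre-trained: w={w_pre:.2f}, b={b_pre:.2f}, domain MSE={mse(w_pre, b_pre, domain_data):.4f}")
print(f"fine-tuned:  w={w_ft:.2f}, b={b_ft:.2f}, domain MSE={mse(w_ft, b_ft, domain_data):.4f}")
```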

Why Fine-Tune LLMs?

There are several key reasons you may want to fine-tune a large language model:

- Domain Customization: Every domain, from law to medicine to software engineering, has its own nuanced language conventions, jargon, and contexts. Fine-tuning lets you customize a general model to understand and produce text tailored to that specific domain.

- Task Specialization: LLMs can be fine-tuned for various natural language processing tasks, such as text summarization, machine translation, and question answering. This specialization boosts performance on the target task.

- Data Compliance: Highly regulated industries like healthcare and finance have strict data privacy requirements. Fine-tuning allows LLMs to be trained on proprietary organizational data while protecting sensitive information.

- Limited Labeled Data: Obtaining large labeled datasets for training models from scratch can be challenging. By leveraging the pre-trained model's capabilities, fine-tuning makes it possible to achieve strong task performance from a limited number of supervised examples.

- Model Updating: As new data becomes available in a domain over time, you can fine-tune models again to incorporate the latest knowledge and capabilities.

- Mitigating Biases: LLMs can pick up societal biases from their broad pre-training data. Fine-tuning on curated datasets can help reduce and correct these undesirable biases.

In essence, fine-tuning bridges the gap between a general, broad model and the focused requirements of a specialized application. It improves the accuracy, safety, and relevance of the model's outputs for targeted use cases.

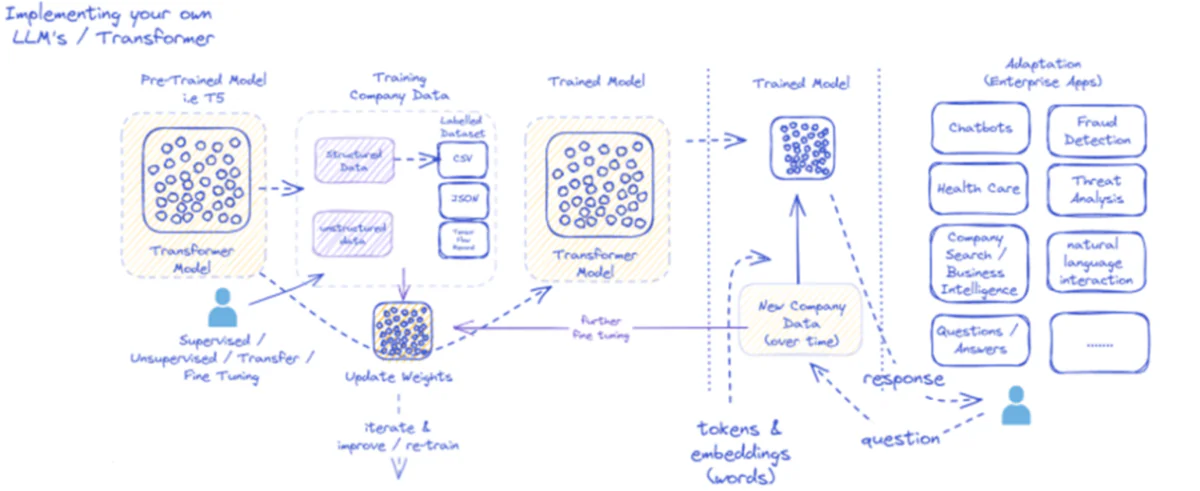

The diagram above outlines the process of implementing and using large language models (LLMs), specifically for enterprise applications. First, a pre-trained model like T5 is fed structured and unstructured company data, which may come in various formats such as CSV or JSON. This data goes through supervised, unsupervised, or transfer fine-tuning processes, which make the model more relevant to the company's specific needs.

Once the model has been fine-tuned on the company data, its weights are updated accordingly. The trained model then goes through further training cycles, continually improving its responses over time as new company data arrives. The process is iterative and dynamic: the model keeps learning and being retrained to adapt to evolving data patterns.

The trained model's outputs (tokens and word embeddings) are then deployed to various enterprise applications. These applications range from chatbots to healthcare, and each requires the model to understand and respond to industry-specific queries. In finance, applications include fraud detection and risk analysis; in healthcare, models can assist with patient inquiries and diagnostics.

Because the trained model can process and respond to new company data over time, its usefulness is sustained and grows. As a result, enterprise users can interact with the model through applications, asking questions and receiving informed answers that reflect the model's training and its fine-tuning on domain-specific data.

This infrastructure supports a wide range of enterprise applications, showing how versatile and adaptable LLMs can be when properly implemented and maintained in a business context.

Fine-Tuning Approaches

There are two primary strategies for fine-tuning large language models:

1) Full Model Fine-Tuning

In the full fine-tuning approach, all the parameters (weights and biases) of the pre-trained model are updated during the second training phase. The model is exposed to the labeled, task-specific dataset, and the standard training process optimizes the entire model for that data distribution.

This allows the model to make comprehensive adjustments and adapt holistically to the target task or domain. However, full fine-tuning has some downsides:

- It requires significant computational resources and time, because every parameter needs gradients and optimizer state, much as in the pre-training phase.

- Storage requirements are high, since you need to keep a separate fine-tuned copy of the model for each task.

- There is a risk of "catastrophic forgetting", where fine-tuning causes the model to lose some of the general capabilities it learned during pre-training.
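Here is a back-of-the-envelope sketch of the storage point. It assumes 16-bit weights (2 bytes per parameter) and ignores tokenizer and config files. The 7-billion-parameter model size and the count of five tasks are hypothetical.

```python
def full_copy_storage_gb(num_params, num_tasks, bytes_per_param=2):
    """Disk space (GB) for one fully fine-tuned copy of the model per task."""
    return num_params * bytes_per_param * num_tasks / 1e9

# Hypothetical example: a 7B-parameter model specialized for 5 different tasks.
print(full_copy_storage_gb(7e9, 5))  # 70.0 (five separate 14 GB checkpoints)
```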

Despite these limitations, full fine-tuning remains a powerful and widely used technique when resources permit and the target task diverges significantly from general language.

2) Efficient Fine-Tuning Methods

To overcome the computational challenges of full fine-tuning, researchers have developed efficient strategies that update only a small subset of the model's parameters during fine-tuning. These parameter-efficient techniques strike a balance between specialization and reduced resource requirements.

Some popular efficient fine-tuning methods include:

Prefix-Tuning: Here, a small number of task-specific vectors, or "prefixes", are introduced and trained to condition the pre-trained model's attention for the target task. Only these prefixes are updated during fine-tuning.
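The simplified sketch below shows the mechanism for a single attention head in a single layer. Trainable prefix key/value vectors are prepended to the frozen keys and values computed from the real input. Real prefix-tuning does this in every layer. All vectors here are made-up numbers, and no training loop is shown.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    weights = softmax([dot(query, k) / math.sqrt(d) for k in keys])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]

# Frozen keys/values produced by the pre-trained model from the real input tokens.
input_keys = [[1.0, 0.0], [0.0, 1.0]]
input_values = [[1.0, 2.0], [3.0, 4.0]]

# Trainable task-specific prefix keys/values: the only parameters that would be updated.
prefix_keys = [[0.5, 0.5]]
prefix_values = [[0.0, 0.0]]

query = [1.0, 1.0]
without_prefix = attend(query, input_keys, input_values)
with_prefix = attend(query, prefix_keys + input_keys, prefix_values + input_values)
print(without_prefix)  # [2.0, 3.0]
print(with_prefix)     # shifted, because the prefix now receives part of the attention
```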

LoRA (Low-Rank Adaptation): LoRA injects trainable low-rank matrices into each layer of the pre-trained model during fine-tuning. These small low-rank adjustments specialize the model with far fewer trainable parameters than full fine-tuning.

LoRA is a popular parameter-efficient fine-tuning (PEFT) technique that has gained significant traction for adapting large language models (LLMs).

What is LoRA?

LoRA is a fine-tuning method that introduces a small number of trainable parameters into a pre-trained LLM. This allows efficient adaptation to downstream tasks while preserving most of the original model's knowledge. Instead of fine-tuning all of the LLM's parameters, LoRA injects task-specific low-rank matrices into the model's layers, which yields significant compute and memory savings during fine-tuning.

Mathematical Formulation

LoRA (Low-Rank Adaptation) is a fine-tuning method for large language models (LLMs) that introduces a low-rank update to the weight matrices. Given a pre-trained weight matrix $W_{0} \in \mathbb{R}^{d \times k}$, LoRA adds a low-rank matrix $BA$, with $A \in \mathbb{R}^{r \times k}$ and $B \in \mathbb{R}^{d \times r}$, where the rank $r \ll \min(d, k)$. During fine-tuning, $W_{0}$ stays frozen and only $A$ and $B$ are trained. This significantly reduces the number of trainable parameters, enabling efficient adaptation to downstream tasks with minimal computational resources. The updated weight matrix is given by $W = W_{0} + B \cdot A$.
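The following is a small numeric check of the formula, using made-up sizes $d = 3$, $k = 4$, $r = 1$ and hand-picked matrices. It confirms that applying the merged matrix $W = W_{0} + BA$ gives the same result as keeping $W_{0}$ frozen and adding the low-rank path $B(Ax)$. This equivalence is why the update can be folded into the weights after training.

```python
def matmul(X, Y):
    """Multiply an (m x n) matrix X by an (n x p) matrix Y, both stored as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def matadd(X, Y):
    """Element-wise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]

d, k, r = 3, 4, 1  # hypothetical sizes

W0 = [[1.0, 0.0, 2.0, 0.0],
      [0.0, 1.0, 0.0, 3.0],
      [1.0, 1.0, 1.0, 1.0]]   # frozen pre-trained weights, d x k
B = [[0.5], [0.0], [-1.0]]    # trainable, d x r
A = [[1.0, 2.0, 0.0, -1.0]]   # trainable, r x k
assert len(B) == d and len(B[0]) == r and len(A) == r and len(A[0]) == k

W = matadd(W0, matmul(B, A))  # W = W0 + B·A (the update has rank at most r)

x = [[1.0], [1.0], [1.0], [1.0]]  # input as a k x 1 column vector
merged = matmul(W, x)                                   # W x
separate = matadd(matmul(W0, x), matmul(B, matmul(A, x)))  # W0 x + B (A x)
print(merged)  # [[4.0], [4.0], [2.0]]
assert merged == separate
```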

The low-rank update can be interpreted as modifying the original weight matrix $W_{0}$ by adding a low-rank matrix $BA$. The key advantage of this formulation is that instead of updating all $d \times k$ parameters of $W_{0}$, LoRA only needs to optimize the $r \times (d + k)$ parameters of $A$ and $B$, significantly reducing the number of trainable parameters.
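The savings follow from simple arithmetic. The helper below is a hypothetical illustration. The 4096 x 4096 shape stands in for a single attention projection and is only an example size.

```python
def lora_param_counts(d, k, r):
    """Return (full, lora) trainable-parameter counts for one d x k weight matrix."""
    full = d * k        # full fine-tuning trains every entry of W0
    lora = r * (d + k)  # LoRA trains B (d x r) and A (r x k)
    return full, lora

# Hypothetical 4096 x 4096 projection adapted with rank r = 8.
full, lora = lora_param_counts(4096, 4096, 8)
print(full, lora, full // lora)  # 16777216 65536 256
```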

Here's an example in Python that uses the peft library to apply LoRA to a pre-trained model for text classification:

```python
from transformers import AutoModelForSequenceClassification
from peft import get_peft_model, LoraConfig, TaskType

# Load pre-trained model
model = AutoModelForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)

# Define LoRA configuration
peft_config = LoraConfig(
    task_type=TaskType.SEQ_CLS,
    r=8,  # Rank of the low-rank update
    lora_alpha=16,  # Scaling factor for the low-rank update
    target_modules=["query", "value"],  # BERT's self-attention query and value projections
)

# Create the LoRA-enabled model
model = get_peft_model(model, peft_config)
model.print_trainable_parameters()  # Reports trainable vs. total parameter counts

# Fine-tune the model with LoRA
# ... (training code omitted for brevity)
```

In this example, we load a pre-trained BERT model for sequence classification and define the LoRA configuration. The `r` parameter sets the rank of the low-rank update, and `lora_alpha` is a scaling factor for the update. The `target_modules` parameter specifies which layers of the model receive the low-rank updates; the module names depend on the model architecture. Once the LoRA-enabled model is created, we can proceed with fine-tuning using the standard training procedure.
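To show what `r` and `lora_alpha` do, here is a minimal pure-Python sketch of a LoRA-wrapped linear layer. It covers the forward pass only, and the class name is hypothetical. It follows the LoRA convention that $B$ starts at zero, so the adapted layer initially behaves exactly like the frozen one. The low-rank update is scaled by `lora_alpha / r`, which is also peft's default scaling. The Gaussian initialization scale used for $A$ is an arbitrary choice here, not peft's actual initializer.

```python
import random

class LoRALinear:
    """Minimal LoRA-wrapped linear layer (pure Python, forward pass only)."""

    def __init__(self, W0, r=8, lora_alpha=16, seed=0):
        rng = random.Random(seed)
        self.W0 = W0                                  # frozen pre-trained weights, d x k
        d, k = len(W0), len(W0[0])
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, random
        self.B = [[0.0] * r for _ in range(d)]        # d x r, zeros: update starts at 0
        self.scaling = lora_alpha / r                 # update is scaled by alpha / r

    def forward(self, x):
        """h = W0 x + (alpha / r) * B (A x) for one input vector x of length k."""
        base = [sum(w * xi for w, xi in zip(row, x)) for row in self.W0]
        ax = [sum(a * xi for a, xi in zip(row, x)) for row in self.A]
        bax = [sum(b * v for b, v in zip(row, ax)) for row in self.B]
        return [h + self.scaling * u for h, u in zip(base, bax)]

    def trainable_parameters(self):
        """Only A and B are trained; W0 stays frozen."""
        return sum(len(row) for row in self.A) + sum(len(row) for row in self.B)

layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, lora_alpha=2)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0], identical to W0 x because B is still zero
print(layer.scaling, layer.trainable_parameters())  # 2.0 4
```

Because of the `alpha / r` factor, changing `r` while keeping `lora_alpha` fixed also changes how strongly the update is applied. This is one reason the two are usually tuned together.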

Adapter Layers: Similar to LoRA, but instead of low-rank updates, thin "adapter" layers are inserted within each block of the pre-trained model. Only the parameters of these few new, compact layers are trained.
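Here is a minimal sketch of a bottleneck adapter in the style commonly used for this approach. It projects the frozen layer's output down to a small size, applies a nonlinearity, projects back up, and adds the result to the original through a residual connection. Function names and numbers are hypothetical. Zero-initializing the up-projection is one way to make the adapter start out as an identity mapping.

```python
def linear(W, x):
    """Matrix-vector product with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def adapter(h, W_down, W_up):
    """Bottleneck adapter: h + W_up · relu(W_down · h)."""
    z = relu(linear(W_down, h))                          # down-project to bottleneck size m
    return [a + b for a, b in zip(h, linear(W_up, z))]   # up-project and add residual

hidden = [1.0, -2.0, 0.5, 3.0]            # output of a frozen sub-layer (size 4)
W_down = [[0.1, 0.0, 0.2, 0.0],
          [0.0, -0.1, 0.0, 0.1]]          # trainable 2 x 4 down-projection (m = 2)
W_up_zero = [[0.0] * 2 for _ in range(4)]  # trainable 4 x 2 up-projection, zero-initialized

print(adapter(hidden, W_down, W_up_zero))  # [1.0, -2.0, 0.5, 3.0]: starts as the identity
```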

Prompt Tuning: This approach keeps the pre-trained model completely frozen. Instead, trainable "prompt" embeddings are introduced as part of the input to activate the pre-trained knowledge for the target task.
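In concrete terms, the "prompt" in prompt tuning is a handful of trainable embedding vectors (sometimes called virtual tokens) prepended to the frozen input embeddings. The sketch assumes a toy 3-token vocabulary, hand-picked vectors, and hypothetical helper names. During training, only `soft_prompt` would receive gradient updates.

```python
def embed(token_ids, embedding_table):
    """Look up frozen token embeddings from the pre-trained embedding table."""
    return [embedding_table[t] for t in token_ids]

def with_soft_prompt(soft_prompt, token_ids, embedding_table):
    """Prepend trainable soft-prompt vectors to the frozen input embeddings."""
    return soft_prompt + embed(token_ids, embedding_table)

# Hypothetical frozen embedding table: 3-token vocabulary, embedding size 2.
embedding_table = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
# Two trainable virtual-token embeddings: the only parameters that would be updated.
soft_prompt = [[0.0, 1.0], [1.0, 0.0]]

inputs = with_soft_prompt(soft_prompt, [2, 0], embedding_table)
print(len(inputs))  # 4: two soft-prompt vectors followed by two real token embeddings
```

The resulting sequence is then passed through the unchanged, frozen model.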

These efficient methods can provide up to 100x reductions in compute compared to full fine-tuning, while still achieving competitive performance on many tasks. They also reduce storage needs by avoiding full copies of the model.

However, their performance may lag behind full fine-tuning on tasks that differ vastly from general language or that require more holistic specialization.

The Fine-Tuning Process

Regardless of the fine-tuning strategy, the overall process for specializing an LLM follows a general framework:

- Dataset Preparation: You'll need to obtain or create a labeled dataset that maps inputs (prompts) to the desired outputs for your target task. For text-generation tasks like summarization, this would be pairs of input text and summarized output.

- Dataset Splitting: Following best practices, split your labeled dataset into training, validation, and test sets. This keeps the data used for model training, hyperparameter tuning, and final evaluation separate.

- Hyperparameter Tuning: Parameters like learning rate, batch size, and training schedule need to be tuned for the most effective fine-tuning on your data. This usually involves a small validation set.

- Model Training: Using the tuned hyperparameters, run the fine-tuning optimization on the full training set until the model's performance on the validation set stops improving (early stopping).

- Evaluation: Evaluate the fine-tuned model on the held-out test set, ideally made up of real-world examples from the target use case, to estimate its real-world effectiveness.

- Deployment and Monitoring: Once it performs satisfactorily, the fine-tuned model can be deployed for inference on new inputs. It's crucial to monitor its performance and accuracy over time to detect concept drift.
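The data-preparation, splitting, and early-stopping steps can be sketched in plain Python. Everything here is illustrative. The JSON Lines field names `document` and `summary`, the 80/10/10 split, and the patience value are assumptions. The loss values fed to the early-stopping helper are made up, not produced by a real training run.

```python
import json
import random

def to_pairs(jsonl_text):
    """Parse JSON Lines records into (prompt, completion) pairs.

    Assumes each record has hypothetical "document" and "summary" fields.
    """
    pairs = []
    for line in jsonl_text.strip().splitlines():
        record = json.loads(line)
        pairs.append((f"Summarize:\n{record['document']}", record["summary"]))
    return pairs

def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle deterministically, then split into train / validation / test lists."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

class EarlyStopping:
    """Signal a stop once validation loss fails to improve for `patience` evaluations."""

    def __init__(self, patience=2, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_evals = 0

    def step(self, val_loss):
        """Record one validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience

raw = "\n".join(json.dumps({"document": f"Report {i} text", "summary": f"Summary {i}"})
                for i in range(10))
train_set, val_set, test_set = split_dataset(to_pairs(raw))
print(len(train_set), len(val_set), len(test_set))  # 8 1 1

stopper = EarlyStopping(patience=2)
for epoch, val_loss in enumerate([0.9, 0.7, 0.72, 0.71, 0.65]):
    if stopper.step(val_loss):
        print(f"stopping after epoch {epoch}")  # stopping after epoch 3
        break
```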

While this outlines the general process, many nuances can affect how well fine-tuning works for a particular LLM or task. Strategies like curriculum learning, multi-task fine-tuning, and few-shot prompting can further boost performance.

Efficient fine-tuning methods also involve extra considerations. For example, LoRA relies on combining the output of each frozen pre-trained layer with the output of its trainable low-rank update, and prompt tuning needs carefully designed prompts to elicit the right behaviors.

Advanced Fine-Tuning: Incorporating Human Feedback

While standard supervised fine-tuning on labeled datasets is effective, an exciting frontier is training LLMs directly on human preferences and feedback. This human-in-the-loop approach leverages techniques from reinforcement learning:

PPO (Proximal Policy Optimization): Here, the LLM is treated as a reinforcement learning agent whose outputs are its "actions". A reward model is trained to predict human ratings or quality scores for these outputs, and PPO then optimizes the LLM to generate outputs that maximize the reward model's scores.
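For reference, the core of PPO is a clipped surrogate objective. The probability ratio between the updated and the old policy is clipped to $[1 - \epsilon, 1 + \epsilon]$, so a single update cannot move the policy too far. The sketch computes this objective from per-token log-probabilities and advantages passed in directly, and the function name is hypothetical. It omits the value function, advantage estimation, and the KL penalty toward a reference model that LLM setups typically add.

```python
import math

def ppo_clipped_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Average PPO clipped surrogate objective (to be maximized).

    ratio = pi_new(a|s) / pi_old(a|s), computed from log-probabilities.
    """
    total = 0.0
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Taking the minimum makes the objective pessimistic: no extra credit
        # for pushing the ratio beyond the clip range.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)

# Ratio 1.5 with a positive advantage: the gain is capped at (1 + clip_eps) * advantage.
print(ppo_clipped_objective([math.log(1.5)], [0.0], [1.0]))
```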

RLHF (Reinforcement Learning from Human Feedback): RLHF extends this setup by keeping human feedback in the learning loop. Rather than relying on a single fixed reward model, fresh human evaluations of the LLM's outputs are collected iteratively during fine-tuning and used to refine the reward signal.
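One common way to turn human comparisons into a reward signal is to train the reward model on pairs of outputs with a pairwise (Bradley-Terry-style) loss, $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$. This is a widely used choice, not necessarily what any particular company uses. The sketch takes reward scores as plain numbers instead of running a real reward model, and its function names are hypothetical.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Mean of -log(sigmoid(r_chosen - r_rejected)) over comparison pairs."""
    # -log(sigmoid(m)) == softplus(-m), where m is the reward margin.
    losses = [softplus(-(rc - rr)) for rc, rr in zip(reward_chosen, reward_rejected)]
    return sum(losses) / len(losses)

# The loss is small when the human-preferred output already scores higher.
print(pairwise_preference_loss([2.0, 0.5], [0.0, 1.0]))
```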

While computationally intensive, these methods allow LLM behavior to be shaped more precisely around the characteristics humans want, beyond what can be captured in a static dataset.

Companies like Anthropic have used RLHF to give their language models, such as Claude, improved truthfulness, ethics, and safety awareness beyond mere task competence.

Potential Risks and Limitations

While immensely powerful, fine-tuning LLMs is not without risks that must be carefully managed:

Bias Amplification: If the fine-tuning data contains societal biases around gender, race, age, or other attributes, the model can amplify these undesirable biases. Curating representative, de-biased datasets is crucial.

Factual Drift: Even after fine-tuning on high-quality data, language models can "hallucinate" incorrect facts or produce outputs inconsistent with the training examples, especially over long conversations or prompts. Fact-retrieval methods may be needed.

Scalability Challenges: Full fine-tuning of huge models like GPT-3 requires immense computational resources that may be out of reach for many organizations. Efficient fine-tuning partially mitigates this but comes with trade-offs.
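A rough estimate shows the scale involved. A common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter: 16-bit weights and gradients, plus 32-bit master weights and two Adam moment estimates, before counting activations. The sketch applies that assumption to a 175-billion-parameter model such as GPT-3; the 80 GB GPU size is illustrative.

```python
import math

# Assumed per-parameter memory for mixed-precision Adam training (rule of thumb).
BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_gradients": 2,
    "fp32_master_weights": 4,
    "adam_momentum_fp32": 4,
    "adam_variance_fp32": 4,
}

def full_finetune_memory_gb(num_params):
    """Weights + gradients + optimizer state in GB, excluding activations and buffers."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 1e9

mem = full_finetune_memory_gb(175e9)
print(mem)                  # 2800.0
print(math.ceil(mem / 80))  # 35 GPUs with 80 GB each, before counting activations
```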

Catastrophic Forgetting: During full fine-tuning, models can suffer catastrophic forgetting, losing some of the general capabilities they learned during pre-training. Multi-task learning may be needed.
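Data mixing (sometimes called rehearsal or replay) is one simple, illustrative way to bring multi-task learning into fine-tuning. Each batch combines task examples with a small sample of general-purpose data, so the model keeps practicing its broader skills. The helper name and the 25% mixing ratio are assumptions for this sketch.

```python
import random

def mixed_batches(task_examples, general_examples, batch_size=8, general_frac=0.25, seed=0):
    """Yield shuffled batches of task examples topped up with sampled general examples."""
    rng = random.Random(seed)
    n_general = int(batch_size * general_frac)  # replayed general examples per batch
    n_task = batch_size - n_general             # task examples per batch
    task = task_examples[:]
    rng.shuffle(task)
    for start in range(0, len(task), n_task):
        batch = task[start:start + n_task] + rng.sample(general_examples, n_general)
        rng.shuffle(batch)
        yield batch

task_data = [("task", i) for i in range(12)]
general_data = [("general", i) for i in range(100)]
batches = list(mixed_batches(task_data, general_data))
print(len(batches), [len(b) for b in batches])  # 2 [8, 8]
```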

IP and Privacy Risks: Proprietary data used for fine-tuning could leak into the outputs of publicly released language models, posing risks. Differential privacy and information-hazard mitigation techniques are active areas of research.
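The sketch below shows the core step of DP-SGD, one approach in the differential-privacy direction. It clips each example's gradient to a maximum norm, sums the clipped gradients, adds Gaussian noise scaled to that norm, and averages. This is a simplified sketch with hypothetical function names. It does not perform the privacy accounting needed to state an actual privacy guarantee.

```python
import math
import random

def clip_by_norm(grad, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]

def dp_sgd_gradient(per_example_grads, max_norm=1.0, noise_multiplier=1.0, seed=0):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    rng = random.Random(seed)
    clipped = [clip_by_norm(g, max_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    # Noise std is proportional to the clipping norm, which bounds each example's influence.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [v / len(per_example_grads) for v in noisy]

grads = [[3.0, 4.0], [0.3, 0.4]]                     # hypothetical per-example gradients
print(dp_sgd_gradient(grads, noise_multiplier=0.0))  # clipping only, no noise
print(dp_sgd_gradient(grads, noise_multiplier=1.0))  # clipped and noised
```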

Overall, while exceptionally useful, fine-tuning is a nuanced process. It requires care around data quality, fairness across identity groups, and risk mitigation, and it means balancing performance against efficiency according to the use case's requirements.

The Future: Language Model Customization at Scale

Looking ahead, advances in fine-tuning and model adaptation techniques will be crucial for unlocking the full potential of large language models across diverse applications and domains.

More efficient methods that enable fine-tuning of even larger models like PaLM with limited resources could democratize access. Automating dataset-creation pipelines and prompt engineering could streamline specialization.

Self-supervised techniques for fine-tuning on raw, unlabeled data may open up new frontiers. Compositional approaches that combine fine-tuned sub-models trained on different tasks or data could allow highly tailored models to be assembled on demand.

Ultimately, as LLMs become ubiquitous, the ability to customize and specialize them seamlessly for every conceivable use case will be critical. Fine-tuning and related model adaptation strategies are pivotal steps toward realizing the vision of large language models as flexible, safe, and powerful AI assistants that augment human capabilities across every domain and endeavor.

I have spent the past five years immersing myself in the fascinating world of Machine Learning and Deep Learning. My passion and expertise have led me to contribute to over 50 diverse software engineering projects, with a particular focus on AI/ML. My ongoing curiosity has also drawn me toward Natural Language Processing, a field I am eager to explore further.
